4K monitors are finally becoming more affordable, so you may be thinking it’s time to upgrade that 1440p or even 1080p display to something with way more pixels and, as a result, a much sharper image.

However, before you get too excited about pushing more than eight million pixels into your eyeballs, there are some things you need to keep in mind that could mean a 4K monitor isn’t the right choice for you.

5

Resolution Isn’t the Only Part of Picture Quality

The quality of the image your eye perceives depends on multiple factors, and resolution is just one of them. It’s why my old 51-inch 720p plasma TV made every 1080p LCD of its day look like a washed-out photograph. Contrast, motion clarity, color gamut, and numerous other factors determine whether your brain thinks an image looks good.

At any given budget, you’re always giving up one thing in exchange for another. If you want a monitor that’s excellent at everything, the compromise is price. So if you buy a 4K monitor, that extra resolution might come at the cost of a panel technology with poor contrast, a low refresh rate, a smaller screen than you could otherwise afford, and so on.

As always, which factors matter most to you depends on the job you need the monitor to do. If you edit photos or do other visual design work, color accuracy and gamut may matter much more than raw resolution, whereas if you’re a gamer, you might care more about super-high refresh rates and blur-free motion. Of course, if money is no object, you can solve nearly all of these issues by spending more of it.

4

4K Gaming Is Expensive to Do Right

It takes a lot of rendering horsepower to run a game at 4K, especially if you want to have higher graphical settings, or (gasp!) heavy options like ray tracing enabled. To get the most out of a 4K monitor while gaming, you’re looking at spending between $1,000 and $2,000 on a GPU (such as the RTX 5080 or 5090), and that’s before you price in the rest of the computer that those GPUs need to run their best.
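
To put rough numbers on that, the short Python sketch below simply counts the pixels a GPU has to draw each frame at common resolutions. Treating pixel count as a stand-in for GPU load is a simplifying assumption: real performance also depends on the game, its settings, and the rest of the hardware.

```python
# Pixel counts for common monitor resolutions.
# ASSUMPTION: pixel count is used as a rough proxy for GPU load; real
# rendering cost does not scale perfectly linearly with resolution.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K UHD": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels the GPU must render per frame."""
    return width * height


def relative_load(name: str, baseline: str = "1440p") -> float:
    """Pixel count of resolution `name` relative to `baseline`."""
    return pixel_count(*RESOLUTIONS[name]) / pixel_count(*RESOLUTIONS[baseline])


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name:>7}: {pixel_count(w, h):>9,} pixels, "
              f"{relative_load(name):.2f}x the pixels of 1440p")
```

A 4K frame has 8,294,400 pixels, which is 2.25 times as many as 1440p and four times as many as 1080p, so the GPU has a lot more work to do even before ray tracing enters the picture.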

Of course, thanks to upscaling technologies like DLSS and FSR, which render the game at a lower resolution and reconstruct a sharper image from it (DLSS, and FSR from version 4 onward, use machine learning to do this), it’s now possible to get decent value from mid-range GPUs at “4K”. Still, there’s a reason PC gamers tend to favor 1440p as the best balance between image crispness and performance. If gaming is your main reason for wanting a 4K monitor, consider putting that budget toward a lower-resolution screen with better specs in other areas, which will arguably benefit your experience even more.
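
Upscaling helps because the game renders far fewer pixels than the monitor displays. This Python sketch estimates the internal render resolution behind a 4K output. The preset names and per-axis divisors (1.5 for a “Quality”-style preset, 2 for “Performance”) are typical values used here as assumptions; check your upscaler’s documentation for the exact figures.

```python
# Estimate the resolution a game actually renders at before upscaling.
# ASSUMPTION: these per-axis divisors are typical upscaler preset values,
# not official figures for any specific upscaler or version.
PRESET_DIVISORS = {
    "Quality": 1.5,
    "Performance": 2.0,
}


def internal_resolution(out_w: int, out_h: int, divisor: float) -> tuple[int, int]:
    """Render resolution that gets upscaled to an out_w x out_h output."""
    return round(out_w / divisor), round(out_h / divisor)


if __name__ == "__main__":
    out_w, out_h = 3840, 2160  # 4K UHD output
    for preset, divisor in PRESET_DIVISORS.items():
        w, h = internal_resolution(out_w, out_h, divisor)
        share = (w * h) / (out_w * out_h)
        print(f"4K {preset}: renders {w}x{h} ({share:.0%} of the output pixels)")
```

With those divisors, a 4K “Performance” image starts life at 1920×1080, a quarter of the output pixels, while a “Quality” image starts at 2560×1440.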

3

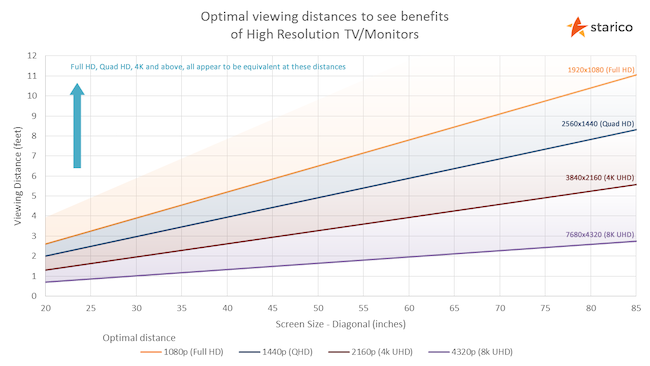

Many 4K Monitors Are Too Small for the Resolution

The above chart gives you a rough idea of how close you need to sit to a 4K screen of a given size to benefit from the extra resolution. Now, before you bring it up, charts like these are based on use cases like watching movies or playing video games, where you’d want the whole screen within your field of view.

If we’re talking about work on a computer, then things are different, because you’re probably scrutinizing a small section of the screen more closely than if you were just watching a video.

However, what’s undeniable is that once pixel density goes beyond a certain point for a given viewing distance, you literally cannot tell the difference in resolution, because the extra detail is finer than your eyes can resolve. It’s why few, if any, phone makers bother to put 4K panels in phones, and it’s also why even Apple doesn’t put a 4K screen in its 14-inch MacBook Pro. At normal viewing distances, there’s just no real benefit to it.

That’s not to say that someone with sharp vision couldn’t tell the difference between a 1440p and a 4K UHD 24-inch monitor at normal viewing distances. It’s just that whatever difference there is to perceive doesn’t justify the expense and complexity involved. Personally, I’ve found that 32-inch widescreen and 34-inch ultrawide displays at 1440p look more than sharp enough, but again, this is something you need to judge with your own eyes.

Regardless, I’d consider 27 inches to be the bottom of the range where 4K makes any practical sense, and really it’s at larger sizes where the benefit is unambiguous.
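
The reasoning behind viewing-distance charts comes down to simple trigonometry: once a single pixel covers less than the smallest angle your eye can resolve, extra resolution stops being visible. The Python sketch below assumes 20/20 vision resolves about one arcminute (a common rule of thumb, not a hard limit) and computes pixel density plus the distance beyond which individual pixels blend together.

```python
import math

# ASSUMPTION: 20/20 vision resolves detail down to roughly 1 arcminute
# (1/60 of a degree). Real acuity varies from person to person.
ACUITY_RAD = math.radians(1 / 60)


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch, measured along the panel diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def max_useful_distance_in(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Distance (inches) beyond which one pixel spans less than ~1 arcminute,
    so the eye can no longer pick out individual pixels."""
    pixel_pitch_in = 1 / ppi(width_px, height_px, diagonal_in)
    return pixel_pitch_in / math.tan(ACUITY_RAD)


if __name__ == "__main__":
    for label, w, h, diag in [
        ('27" 1440p', 2560, 1440, 27.0),
        ('27" 4K', 3840, 2160, 27.0),
        ('32" 4K', 3840, 2160, 32.0),
    ]:
        print(f"{label}: {ppi(w, h, diag):.0f} PPI, pixels blend together "
              f"beyond ~{max_useful_distance_in(w, h, diag):.0f} in")
```

By this estimate, a 27-inch panel works out to about 109 PPI at 1440p and about 163 PPI at 4K, and the distance at which pixels stop being distinguishable drops from roughly 32 inches to roughly 21 inches. Sitting closer than the 1440p figure is where 4K’s extra detail can, in principle, become visible.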

2

You’ll End Up Scaling Your UI Anyway

One of the reasons 4K monitors are appealing is that you can fit much more information on screen. However, this is only true if the UI (user interface) elements are scaled 1:1 with the pixels of the display. Unless you’ve bought a truly large display (or a 4K television), 1:1 scaling makes things like text, icons, and menus hard to read and even harder to click on.

Operating systems like macOS and Windows 11 will automatically scale up their UI elements to provide a comfortable experience on high-resolution displays when the combination of resolution and screen size makes it necessary. On my 34-inch 1440p ultrawide monitor, scaling isn’t necessary.

However, if this were a 4K monitor at the same physical size, you’d have to scale the elements up to get the same comfortable UI experience. You can turn this scaling down or off manually, and if you have great vision and don’t mind tiny text, you’ll get an enormous amount of desktop space. If you can’t live with that, though, you end up with the same amount of on-screen real estate, just with crisper text and graphics.
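
One way to picture this is the “logical” desktop size left after scaling, which is roughly how much UI fits on screen. The Python sketch below uses a simplified model (physical resolution divided by the scale factor) and 16:9 resolutions for simplicity; the 100% and 150% settings are example values, and real operating systems handle scaling in more elaborate ways.

```python
# Simplified model of OS UI scaling.
# ASSUMPTION: usable workspace = physical resolution / scale factor.
# Actual Windows and macOS scaling pipelines are more involved than this.


def effective_workspace(width_px: int, height_px: int, scale: float) -> tuple[int, int]:
    """Desktop size in scaled (logical) units: how much UI fits on screen."""
    return round(width_px / scale), round(height_px / scale)


if __name__ == "__main__":
    print("1440p at 100%:", effective_workspace(2560, 1440, 1.0))
    print("4K at 150%:   ", effective_workspace(3840, 2160, 1.5))
    print("4K at 100%:   ", effective_workspace(3840, 2160, 1.0))
```

At 150%, a 3840×2160 panel gives you a 2560×1440 workspace, the same as a 1440p monitor at 100%, but every interface element is drawn with 1.5 times as many pixels in each direction.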

1

A Wider Screen Might Be a More Useful Option

There are ways to add more pixels to a screen without increasing its pixel density. Specifically, if you change the aspect ratio of a screen, you can add more space. Take a 2560×1440 screen and widen it to 3440×1440, and you have so much room for activities. If you mainly care about 16:9 content, then this is of course not a solution, but both gamers and those who work on their computers can benefit from an ultrawide aspect ratio.

For me in particular, an ultrawide screen is much more useful, since it’s like having two monitors side by side with no bezel in between. Splitting a 16:9 screen down the middle results in windows that are too narrow for my needs, but on a 21:9 display they’re nice and square!
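
The numbers behind that are easy to check. This Python sketch compares a 2560×1440 and a 3440×1440 screen, both in total pixels and in the shape of a window snapped to half the screen (assuming a simple 50/50 split with no gaps between windows).

```python
# Compare a 16:9 and a 21:9 (ultrawide) 1440p screen.
# ASSUMPTION: "split" means two windows that each take exactly half the width.
SCREENS = {
    "16:9 1440p": (2560, 1440),
    "21:9 1440p": (3440, 1440),
}


def half_window(width_px: int, height_px: int) -> tuple[int, int, float]:
    """Width, height, and aspect ratio (width / height) of a half-screen window."""
    w = width_px // 2
    return w, height_px, w / height_px


if __name__ == "__main__":
    base = 2560 * 1440  # pixel count of the 16:9 screen
    for name, (w, h) in SCREENS.items():
        hw, hh, aspect = half_window(w, h)
        print(f"{name}: {w * h / base:.2f}x the pixels of 16:9, "
              f"half-screen window {hw}x{hh} (aspect {aspect:.2f})")
```

The ultrawide has about 34% more pixels at the same height, and each half is 1720×1440, slightly wider than it is tall, whereas half of a 16:9 1440p screen is 1280×1440, taller than it is wide.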

There are many good reasons to buy a 4K monitor, but the truth is that most people don’t need one, and many who do buy one won’t see an immense benefit from it. Don’t let that stop you from getting a great 4K monitor if you really want one, but at the same time, don’t feel that 4K is the inevitable next step in your upgrade path. More pixels don’t always make things better!