On Monday, Apple shared its plans to allow users to opt into on-device Apple Intelligence training using Differential Privacy techniques that are incredibly similar to its failed CSAM detection system.

Differential Privacy is a concept Apple embraced openly in 2016 with iOS 10. It is a privacy-preserving method of data collection that introduces noise to sample data to prevent the data collectors from figuring out where the data came from.

According to a post on Apple’s machine learning blog, Apple is working to implement Differential Privacy as a method to gather user data to train Apple Intelligence. The data is provided on an opt-in basis, anonymously, and in a way that can’t be traced back to an individual user.

The story was first covered by Bloomberg, which explained Apple’s report on using synthetic data trained on real-world user information. However, it isn’t as simple as grabbing user data off of an iPhone to analyze in a server farm.

Instead, Apple will use Differential Privacy, a system designed to introduce noise to data collection so individual data points cannot be traced back to the source. Apple takes it a step further by leaving user data on device, polling only for accuracy and taking just the poll results off of the user’s device.

These methods ensure that Apple’s principles behind privacy and security are preserved. Users who opt into sharing device analytics will participate in this system, but none of their data will ever leave their iPhone.

Analyzing data without identifiers

Differential Privacy is a concept Apple has leaned on and developed since at least 2006, but the company didn’t make it a part of its public identity until 2016. It started as a way to learn how people used emojis, to find new words for local dictionaries, to power deep links within apps, and as a Notes search tool.

Apple says that, beginning with iOS 18.5, Differential Privacy will be used to analyze user data and train specific Apple Intelligence systems, starting with Genmoji. It will identify patterns of common prompts people use so Apple can better train the AI and get better results for those prompts.

Basically, Apple provides artificial prompts it believes are popular, like “dinosaur in a cowboy hat,” and looks for pattern matches in user data analytics. Because of artificially injected noise and a threshold of needing hundreds of fragment matches, there isn’t any way to surface unique or individual-identifying prompts.

Plus, these searches for fragments of prompts only result in a positive or negative poll, so no user data is derived from the analysis. Again, no data can be isolated and traced back to a single person or identifier.
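To make the polling idea concrete, here is a minimal sketch of a noisy yes/no poll with a popularity threshold. It uses randomized response, a classic local differential privacy technique, as a stand-in; Apple's actual mechanism, noise level, and threshold are not described here, so `device_vote`, `estimate_users`, `is_popular`, `P_TRUTH`, and `THRESHOLD` are all hypothetical names and values chosen for illustration.

```python
import random


def device_vote(used_fragment: bool, p_truth: float, rng: random.Random) -> bool:
    """Runs on the device. Answer honestly with probability p_truth,
    otherwise answer with a fair coin flip (the injected noise)."""
    if rng.random() < p_truth:
        return used_fragment
    return rng.random() < 0.5


def estimate_users(votes: list[bool], p_truth: float) -> float:
    """Runs on the server. Undo the known noise to estimate how many
    devices really matched, without knowing which ones did.
    Expected yes-rate = p_truth * true_rate + (1 - p_truth) / 2."""
    yes_rate = sum(votes) / len(votes)
    true_rate = (yes_rate - (1 - p_truth) / 2) / p_truth
    return true_rate * len(votes)


def is_popular(votes: list[bool], p_truth: float, threshold: float) -> bool:
    """Only surface a fragment when the estimated count clears the threshold."""
    return estimate_users(votes, p_truth) >= threshold


rng = random.Random(0)   # fixed seed so the sketch is repeatable
P_TRUTH = 0.5            # assumed noise level, not Apple's
THRESHOLD = 1000         # assumed threshold, not Apple's

# 10,000 hypothetical devices: 3,000 used the popular fragment, 3 used the rare one.
popular = [device_vote(i < 3000, P_TRUTH, rng) for i in range(10_000)]
rare = [device_vote(i < 3, P_TRUTH, rng) for i in range(10_000)]

print("popular fragment surfaced:", is_popular(popular, P_TRUTH, THRESHOLD))
print("rare fragment surfaced:", is_popular(rare, P_TRUTH, THRESHOLD))
```

Any single vote is deniable because it may have been a coin flip, yet the aggregate still reveals that the popular fragment is widely used. The rare fragment stays buried in the noise and never clears the threshold.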

The same technique will be used for analyzing Image Playground, Image Wand, Memories Creation, and Writing Tools. These systems rely on short prompts, so the analysis can be limited to simple prompt pattern matching.

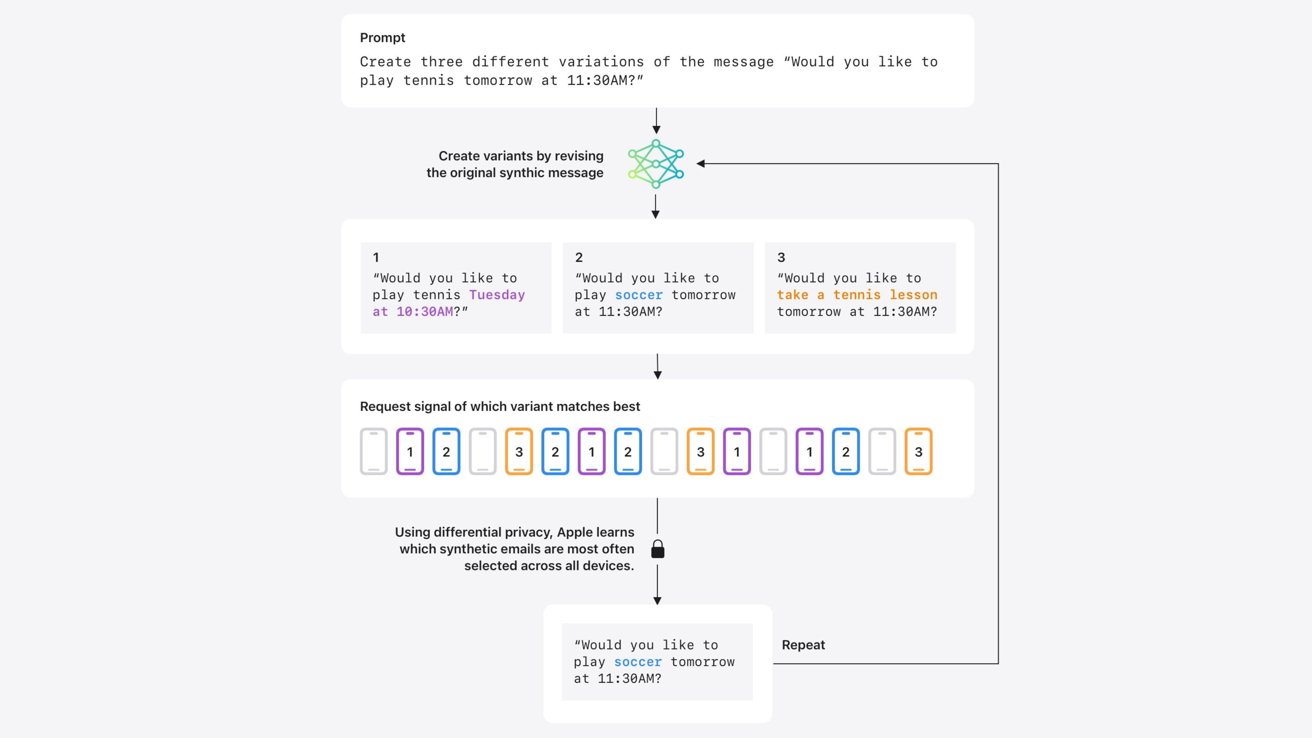

Apple wants to take these methods further by implementing them for text generation. Since text generation for email and other systems results in much longer prompts, and likely, more private user data, Apple took extra steps.

Apple is using recent research into developing synthetic data that can be used to represent aggregate trends in real user data. Of course, this is done without removing a single bit of text from the user’s device.

After generating synthetic emails that may represent real emails, Apple computes an embedding for each one and sends those embeddings to opted-in devices. There, they are compared to embeddings computed from limited samples of recent user emails. The synthetic embeddings closest to the samples across many devices show which synthetic data generated by Apple are most representative of real human communication.

Once a pattern is found across devices, that synthetic data and pattern matching can be refined to work across different topics. The process enables Apple to train Apple Intelligence to produce better summaries and suggestions.
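The embedding comparison described above can be sketched roughly as follows. This is an illustration only: the two-dimensional vectors are made up, cosine similarity is an assumed distance measure, and `closest_synthetic` is a hypothetical helper. The noise that would protect each device's report is left out to keep the example short.

```python
import math
from collections import Counter


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_synthetic(local: list[list[float]], synthetic: list[list[float]]) -> int:
    """Runs on the device. Return the index of the synthetic embedding
    most similar to any of the locally sampled embeddings.
    Only this index, never the local data, would be reported."""
    best_index, best_score = 0, float("-inf")
    for index, candidate in enumerate(synthetic):
        score = max(cosine(candidate, sample) for sample in local)
        if score > best_score:
            best_index, best_score = index, score
    return best_index


# Toy embeddings of three synthetic emails (made-up values).
synthetic = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

# Each inner list stands for one device's sample of local email embeddings.
devices = [
    [[0.9, 0.1], [0.8, 0.3]],
    [[0.2, 0.9]],
    [[0.95, -0.1]],
]

# The server only ever sees a tally of indices across devices.
votes = Counter(closest_synthetic(local, synthetic) for local in devices)
print(votes.most_common())
```

The tally shows which synthetic email is most representative across devices, and that is the only signal that would leave the phone in this sketch.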

Again, the Differential Privacy method of Apple Intelligence training is opt-in and takes place on-device. User data never leaves the device, and gathered polling results have noise introduced, so even though no user data is present, individual results can’t be tied back to a single identifier.

These Apple Intelligence training methods should sound very familiar

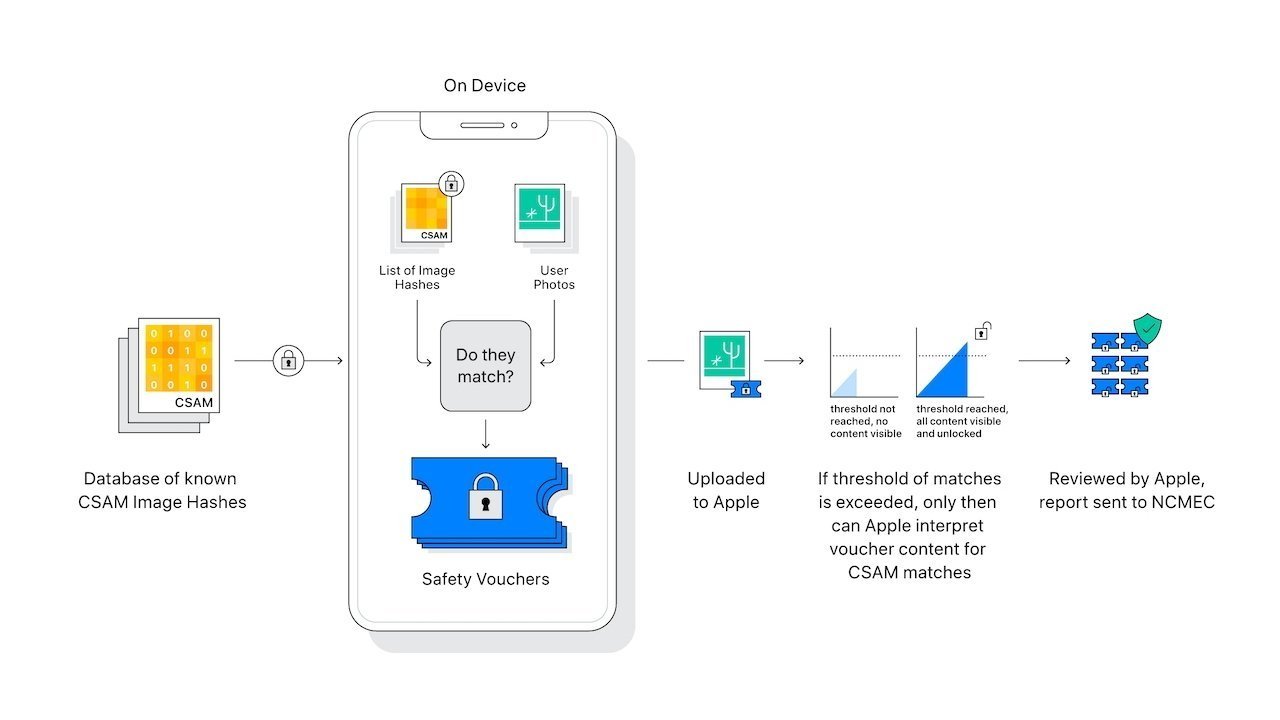

If Apple’s methods here ring any bells, it’s because they are nearly identical to the methods the company planned to implement for CSAM detection. The system would have converted user photos into hashes that were compared to a database of hashes of known CSAM.

Apple’s CSAM detection feature relied on hashing photos without violating privacy or breaking encryption

That analysis would have occurred either on-device for local photos, or in iCloud photo storage. In either instance, Apple would have been able to perform the photo hash matching without ever looking at a user photo or removing a photo from the device or iCloud.

When enough potential CSAM hash matches occurred on a single device, it would have triggered a system that sent the affected images to be analyzed by humans. If the discovered images were CSAM, the authorities would have been notified.
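The match-then-threshold flow can be illustrated with a heavily simplified sketch. Everything here is an assumption for illustration: SHA-256 stands in for a perceptual image hash (it only matches byte-identical files), the threshold value is invented, and `fingerprint`, `count_matches`, and `should_escalate` are hypothetical names. It does not reproduce any cryptographic protections of the planned system.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Stand-in hash. A real perceptual hash would tolerate resizing
    and re-encoding; SHA-256 only matches identical bytes."""
    return hashlib.sha256(data).hexdigest()


def count_matches(items: list[bytes], known_hashes: set[str]) -> int:
    """Count local items whose hash appears in the known-hash database.
    The comparison uses hashes only, never the content itself."""
    return sum(1 for item in items if fingerprint(item) in known_hashes)


def should_escalate(items: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    """Escalate to human review only once enough matches accumulate."""
    return count_matches(items, known_hashes) >= threshold


# Placeholder byte strings standing in for files.
known_hashes = {fingerprint(b"known-a"), fingerprint(b"known-b"), fingerprint(b"known-c")}
library = [b"holiday", b"known-a", b"receipt", b"known-b"]

THRESHOLD = 3  # invented value for the example
print(count_matches(library, known_hashes))
print(should_escalate(library, known_hashes, THRESHOLD))
```

With two matches against a threshold of three, nothing is escalated, which mirrors the idea that a single coincidental match should not trigger a review.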

The CSAM detection system preserved user privacy, data encryption, and more, but it also introduced many new attack vectors that could be abused by authoritarian governments. For example, if such a system could be used to find CSAM, people worried governments could compel Apple to use it to find certain kinds of speech or imagery.

Apple ultimately abandoned the CSAM detection system. Advocates have spoken out against Apple’s decision, suggesting the company is doing nothing to prevent the spread of such content.

Opting out of Apple Intelligence training

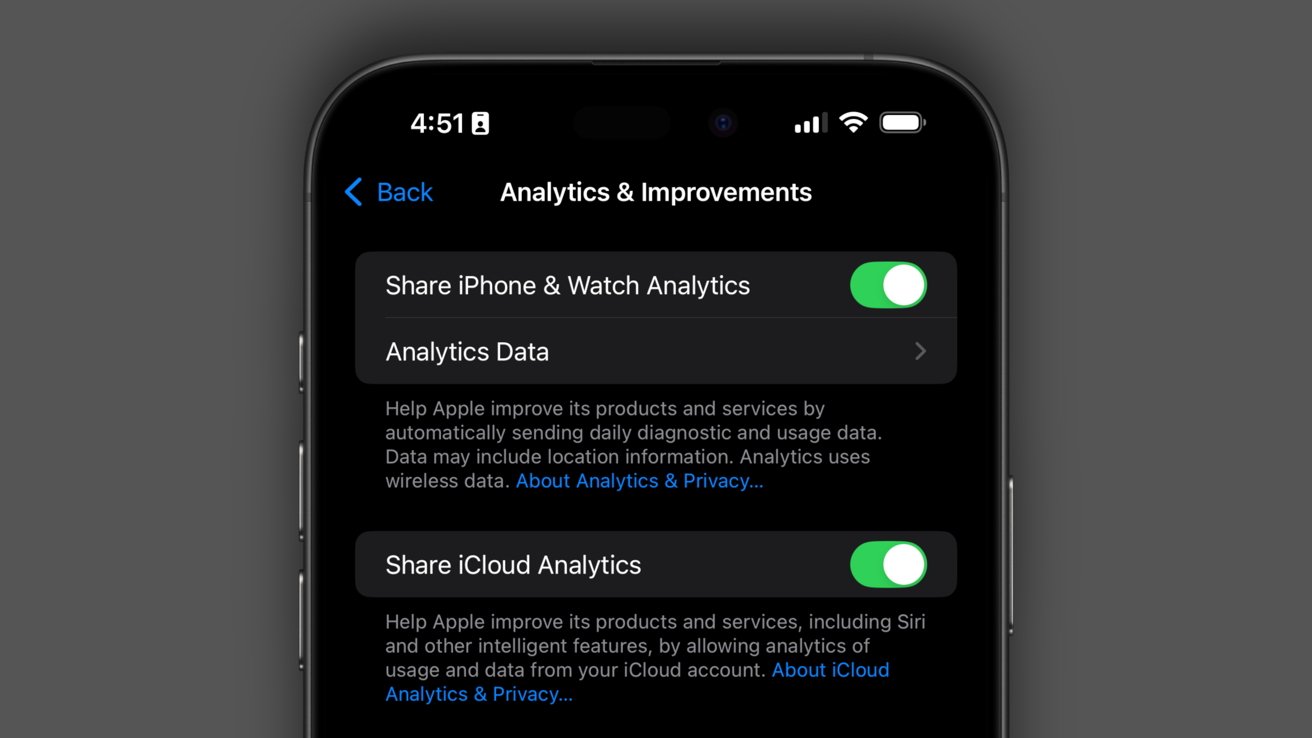

While the technology backbone is the same, it seems Apple has landed on a much less controversial use. Even so, there are those who would prefer not to offer data, privacy protected or not, to train Apple Intelligence.

Nothing has been implemented yet, so don’t worry, there’s still time to ensure you are opted out. Apple says it will introduce the feature in iOS 18.5 and testing will begin in a future beta.

To check if you’re opted in or not, open Settings, scroll down and select Privacy & Security, then select Analytics & Improvements. Toggle off the “Share iPhone & Watch Analytics” setting to opt out of AI training if you haven’t already.