Summary

- HDR includes improved brightness, contrast, and color to enhance image quality for a realistic viewing experience.

- HDR formats like HDR10, HDR10+, Dolby Vision, and HLG differ from one another, and support for each varies from display to display.

- Ensure a meaningful HDR experience by seeking a screen with brightness above 600 nits, wide color gamut, and 10-bit panel depth.

Whether it’s a TV, PC monitor, smartphone, or tablet, you’ve probably seen the letters “HDR” in the spec sheet somewhere. HDR, or High Dynamic Range, is now an important standard for both displays and content, but it can be quite confusing if you haven’t been keeping track.

So, under the assumption that you know absolutely nothing about HDR, but want to make sure you buy the right stuff the next time it comes up, I’ll walk you through what HDR is and why you should care, and give you some pointers for spending your money wisely.

What Is HDR?

The “dynamic range” of a display is the difference between the brightest white and darkest black it can produce, which sounds like contrast ratio, but HDR also includes a wider range of colors, and more nuance in the transition between those colors.

In practice, this allows HDR displays to show you a bright sun in a scene, while the shadows it casts remain dark and full of internal detail. HDR images resemble the color and contrast of the real world more closely, and also expand what content creators can do. Done right, HDR video can really be an eye-popping experience—one that’s hard to come back from.

How HDR Works

There are three main components to the HDR pie:

The first is brightness or peak luminance. You’ll usually see a screen’s peak luminance measured in nits, with typical SDR screens offering relatively low levels of brightness in the 300-500 nits range, and good HDR screens offering 1000 nits or more. There’s some fuzziness over exactly how many nits a display should offer to be true HDR, but since it’s about the range and not absolute values, there’s more wiggle room than I’d personally like.

The next main component is contrast ratio. This is a measure of how dark and how bright a display can be at the same time. Think of how bright the sun can be in a scene versus a shadow, or a fire versus the dark forest in the background. Some display technologies, particularly LCDs, have poor contrast ratios. OLED technology, on the other hand, has effectively infinite contrast, because it’s a self-emissive technology where individual pixels can be turned off for perfect blacks. This is why, at present, all the very best HDR displays are OLEDs, though they have traditionally struggled with peak brightness.
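
To put rough numbers on that, here is a minimal Python sketch of how contrast ratio and dynamic range (in photographic "stops," where each stop is a doubling of light) follow from peak brightness and black level. The function names and the panel figures are made up for illustration and are not measurements of any real display.

```python
import math

def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Brightest white divided by darkest black; infinite if black is truly zero."""
    if black_nits <= 0:
        return math.inf
    return peak_nits / black_nits

def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Each stop doubles the light, so stops = log2(contrast ratio)."""
    ratio = contrast_ratio(peak_nits, black_nits)
    return math.inf if math.isinf(ratio) else math.log2(ratio)

# Hypothetical LCD: 500 nits peak, backlight leaks 0.5 nits on a "black" pixel
print(contrast_ratio(500, 0.5))                  # 1000.0, i.e. 1000:1
print(round(dynamic_range_stops(500, 0.5), 1))   # roughly 10 stops

# Hypothetical OLED: pixels switch fully off, so black is 0 nits
print(contrast_ratio(800, 0.0))                  # inf
```

Notice that the OLED wins on contrast even with a modest peak brightness, which is the trade-off described above.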

The last component is color gamut. This is a measure of the number of colors that can be reproduced, and their spread. HDR content uses wider color spaces like DCI-P3 or Rec. 2020, letting your screen show more shades and deeper saturation than SDR (Standard Dynamic Range) content.
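
For a rough sense of how much "wider" those color spaces are, the sketch below compares the triangle each one covers on the CIE 1931 xy chromaticity diagram, using the published red, green, and blue primaries. I'm assuming Rec. 709 as the SDR baseline, since that's the usual SDR video color space. Treat the ratios as a ballpark: area on the xy diagram is not perceptually uniform, so it doesn't map directly to "how many more colors you see."

```python
# CIE 1931 xy coordinates of the red, green, and blue primaries
PRIMARIES = {
    "Rec. 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}

def triangle_area(points):
    """Area of the gamut triangle via the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2

def relative_gamut(name, baseline="Rec. 709"):
    """How many times larger a gamut triangle is than the baseline's."""
    return triangle_area(PRIMARIES[name]) / triangle_area(PRIMARIES[baseline])

for name in PRIMARIES:
    print(f"{name}: {relative_gamut(name):.2f}x the Rec. 709 triangle")
```

By this measure DCI-P3 covers roughly a third more area than Rec. 709, and Rec. 2020 close to double.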

HDR vs SDR: What’s the Difference?

The key difference between SDR and HDR isn’t one of kind but one of quantity. SDR content has been mastered for SDR displays. With screen technology getting better, content creators are no longer bound by the limits of older SDR displays, so they’ve come up with new mastering standards to extend the brightness, contrast and color ranges of their videos.

If you know that a display can hit 600 or 1000 nits, then you can master your content to take advantage of that. That being said, most content out there is still available in SDR, and HDR is a bonus at this point. Most modern content offers both, but SDR is always the lowest common denominator.

Types of HDR Formats

Unfortunately, there’s still a bit of a format war going on in the world of HDR. These are currently the competitors:

- HDR10: The most common and baseline format. Uses static metadata (brightness information stays the same throughout a movie).

- HDR10+: Adds dynamic metadata that adjusts brightness scene-by-scene or even frame-by-frame.

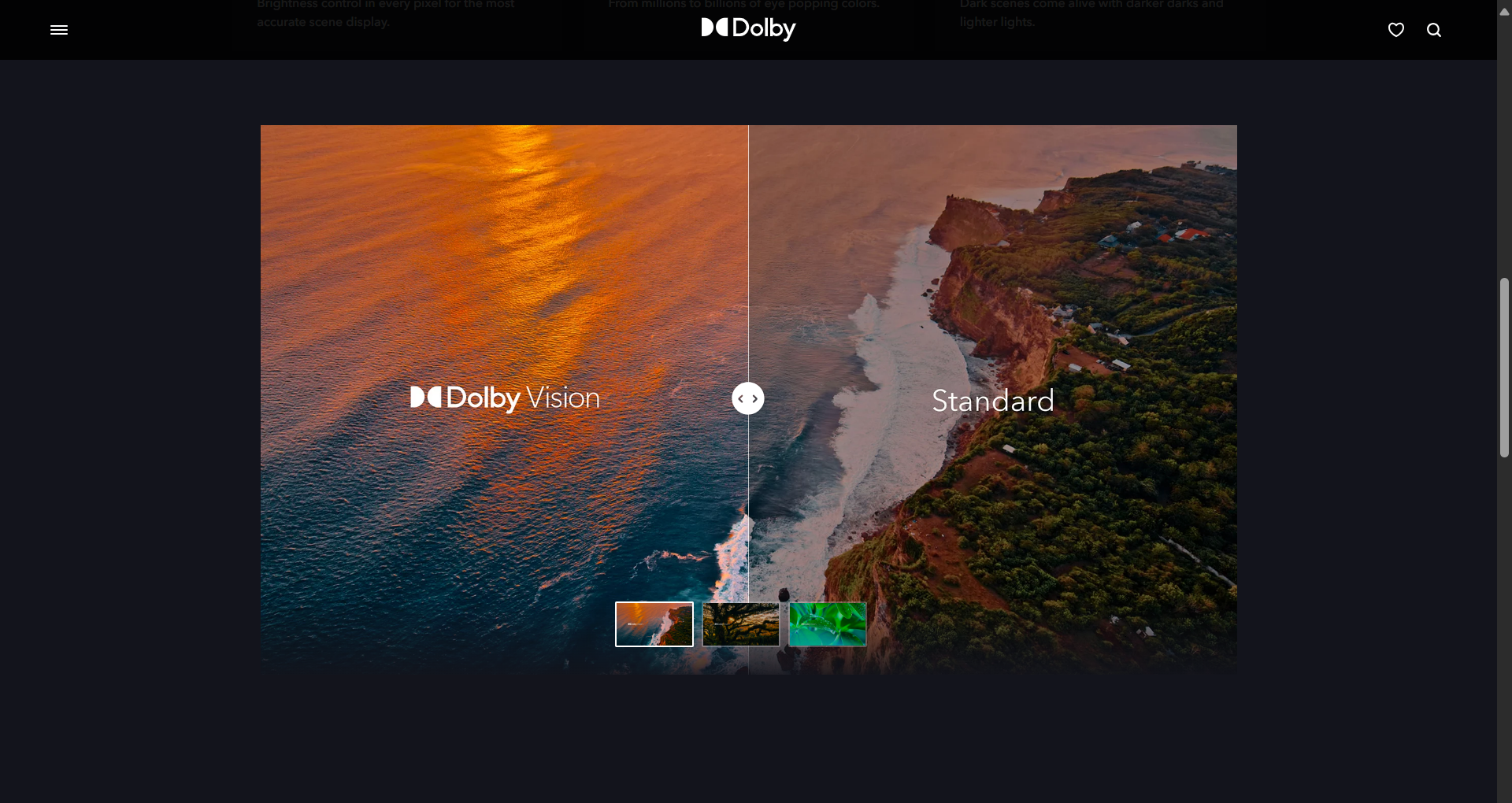

- Dolby Vision: A premium format with dynamic metadata and support for up to 12-bit color (though most screens still display 10-bit).

- HLG (Hybrid Log-Gamma): Designed for live broadcasts. You’ll see this used more in sports and news.

- Advanced HDR by Technicolor: Rare and largely unused in consumer devices.

Support varies between devices, but HDR10 and Dolby Vision are the most common combo in TVs and streaming services. However, I’d barely consider HDR10 to be true HDR, and Samsung is steadfast in its refusal to support Dolby Vision, backing HDR10+ instead.
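
If the 10-bit and 12-bit figures in the format list feel abstract, this short sketch shows the arithmetic behind them: every extra bit doubles the number of brightness steps per color channel, which is what buys smoother gradients. The 8-bit row is included as the usual SDR point of comparison, and the helper names are just for illustration.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct steps each of red, green, and blue can take."""
    return 2 ** bits

def total_colors(bits: int) -> int:
    """Every combination of the three channels."""
    return levels_per_channel(bits) ** 3

for bits in (8, 10, 12):
    print(f"{bits}-bit: {levels_per_channel(bits):>4} levels per channel, "
          f"{total_colors(bits):,} colors")
```

That works out to 256, 1,024, and 4,096 levels per channel respectively, or about 16.7 million, 1.07 billion, and 68.7 billion colors.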

Do You Need Special Hardware or Content?

It’s obvious from the discussion so far that you’ll need a modern TV that’s rated for HDR in order to experience it. Preferably a TV that can hit at least 600 nits of brightness, though again 1000 nits is a good rule of thumb for a true premium HDR experience.

You also need HDR content. On the physical media front, only 4K UHD Blu-rays offer HDR. Standard Blu-rays and DVDs do not. That being said, many modern TVs have a function where SDR content can be “tone-mapped” onto HDR. This uses an algorithm to infer what SDR content would have looked like in HDR, which means your HDR TV can improve the look of SDR content too. However, whether it actually looks better depends on the display or device doing the tone-mapping. Your mileage will absolutely vary.

HDR content is much easier to find on streaming services, and most recent content, especially movies, should be mastered for HDR on services like Netflix. Modern high-end phones even offer HDR when using services like Netflix if the app and phone hardware both support it.

The source of the content also matters. Your streaming box, console, or Blu-ray player needs to support HDR, and in a format that your TV or monitor also supports. Otherwise, they’ll fall back to the lowest standard both work with, like HDR10. If you have a smart TV, its internal apps should support HDR for all content, but on some TVs not every HDMI port supports HDR, so ensure your Apple TV (for example) is plugged into a port that’s listed as supporting HDR. You also need HDMI 2.0 or preferably HDMI 2.1 cables for HDR at 4K.
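
The fallback behavior is easier to picture as a set intersection. The sketch below is only a mental model: the preference order and the `negotiate_format` helper are my own assumptions, and real devices settle this internally over HDMI rather than with anything this simple.

```python
# Hypothetical preference order, most capable first
PREFERENCE = ["Dolby Vision", "HDR10+", "HDR10"]

def negotiate_format(*links):
    """Return the best format that every link in the chain supports, else SDR."""
    if not links:
        return "SDR"
    common = set(links[0]).intersection(*links[1:])
    for fmt in PREFERENCE:
        if fmt in common:
            return fmt
    return "SDR"  # SDR is always the lowest common denominator

movie = {"Dolby Vision", "HDR10"}
streaming_box = {"Dolby Vision", "HDR10"}
tv_without_dolby_vision = {"HDR10+", "HDR10"}

print(negotiate_format(movie, streaming_box, tv_without_dolby_vision))  # HDR10
print(negotiate_format(movie, streaming_box, set()))                    # SDR
```

In the first case the movie and the box both speak Dolby Vision, but the TV doesn't, so the whole chain drops to HDR10. One weak link is enough.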

Why HDR Matters for Movies, TV, and Gaming

It’s hard to think of a bigger visual upgrade than HDR. HDR versions of movies are much more vibrant, punchy, and realistic looking. While extra resolution helps with image quality, the impact of contrast, black levels, and color gamut is much bigger. If I had to choose between 4K SDR and 1080p HDR, I’d pick the latter every time. Fire and explosions sear your eyes, skin tones look far more natural, and there’s so much extra detail and nuance in the image.

For gaming, I almost feel like the advantages are even more dramatic, at least for games that are designed with HDR in mind. With titles like Cyberpunk 2077 and Horizon Forbidden West, the difference between SDR and HDR is so striking that the worlds feel dull and lifeless without it.

The Catch: Not All HDR Is Equal

Just because a display says “HDR” on the box doesn’t mean it offers a good experience. Some budget screens technically accept HDR input but don’t get bright enough to make it meaningful (some struggle to exceed 300 nits). Others may not display wide color gamuts properly or fail to handle metadata correctly.

Look for certifications and specs like:

- VESA DisplayHDR 600 or higher

- Dolby Vision support

- 10-bit panel depth (8-bit + FRC is a compromise, but it works)

A cheap monitor that “supports HDR10” but has low brightness and contrast might even look worse than SDR in some cases.
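
If you like your shopping advice in checklist form, here is a rough sketch that turns the thresholds from this article (600 nits as the floor, 1000 nits for a premium experience, wide color gamut, and 10-bit or 8-bit + FRC) into a quick verdict. The `hdr_verdict` function and its labels are my own invention, not an industry rating.

```python
def hdr_verdict(peak_nits, panel_bits, uses_frc=False, wide_color_gamut=False):
    """Grade a display's spec sheet against this article's rules of thumb."""
    # 8-bit + FRC is accepted as a workable stand-in for a true 10-bit panel
    ten_bit_ok = panel_bits >= 10 or (panel_bits == 8 and uses_frc)

    if peak_nits < 600 or not wide_color_gamut or not ten_bit_ok:
        return "HDR in name only"
    if peak_nits >= 1000:
        return "premium HDR"
    return "meaningful HDR"

# Hypothetical spec sheets, not real products
print(hdr_verdict(300, 8))                                        # HDR in name only
print(hdr_verdict(650, 8, uses_frc=True, wide_color_gamut=True))  # meaningful HDR
print(hdr_verdict(1200, 10, wide_color_gamut=True))               # premium HDR
```

A spec sheet can't tell you about contrast or how well a set handles metadata, so use this as a first filter and then read reviews.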

How to Know if You’re Really Seeing HDR

Almost all displays have an indicator that tells you if HDR is active and will briefly show a notification when you toggle between modes. However, the easiest way to check if HDR is really doing its job is to flip between SDR and HDR for the same scenes and look for these telltale signs with HDR on:

- Your screen gets noticeably brighter in highlights than in SDR mode.

- Colors look richer, not just more saturated.

- You can still see details in both dark and bright areas simultaneously.

If you have a proper HDR screen, and the content has been correctly mastered, there should be little doubt. The difference is not subtle.

Should You Upgrade to HDR?

In my opinion, the jump from SDR to HDR is a much bigger leap than FHD to UHD. Most people can’t really see or appreciate the extra resolution, but the benefits of HDR are apparent to anyone, whether they’re a casual watcher or a connoisseur.

So make sure your new TV or monitor has the right specifications for true HDR, get that content ready, and prepare for a visual feast like no other today.