Summary

- “Sneos” lets you send one prompt to Gemini, ChatGPT, and Claude at the same time and get a detailed breakdown of their responses, with no more jumping between browser tabs.

- The tool compares the chatbots' responses in depth, but its rating method varies from run to run, and its judgments about accuracy should be taken with a grain of salt.

- A $29-per-month premium subscription adds more models and higher rate limits, and upcoming features such as context modality and enhanced chat history are on a waiting list.

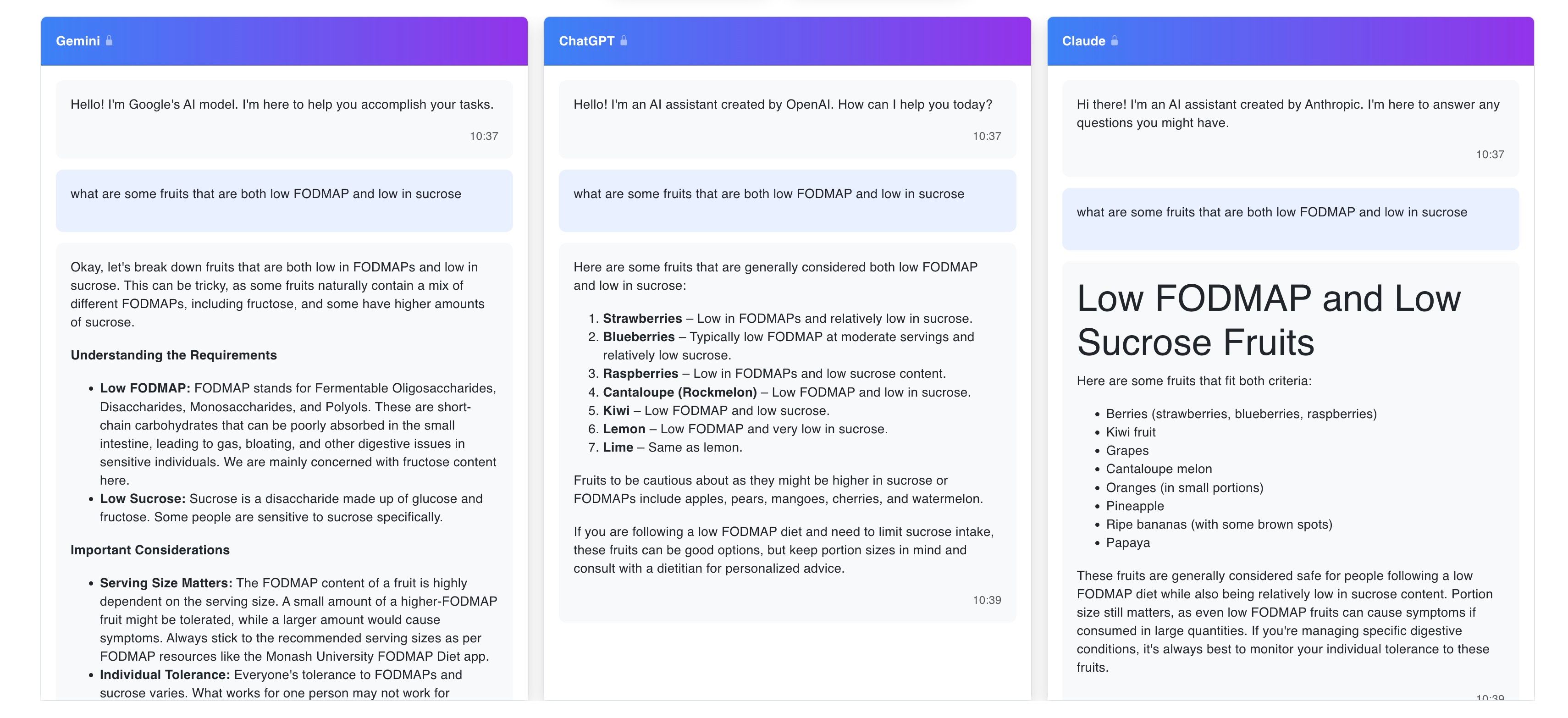

Tired of jumping between browser tabs to see which AI chatbot spits out the best answer? “Sneos” is a handy web tool that allows you to prompt Gemini, ChatGPT, and Claude all at once and get a detailed breakdown of their responses.

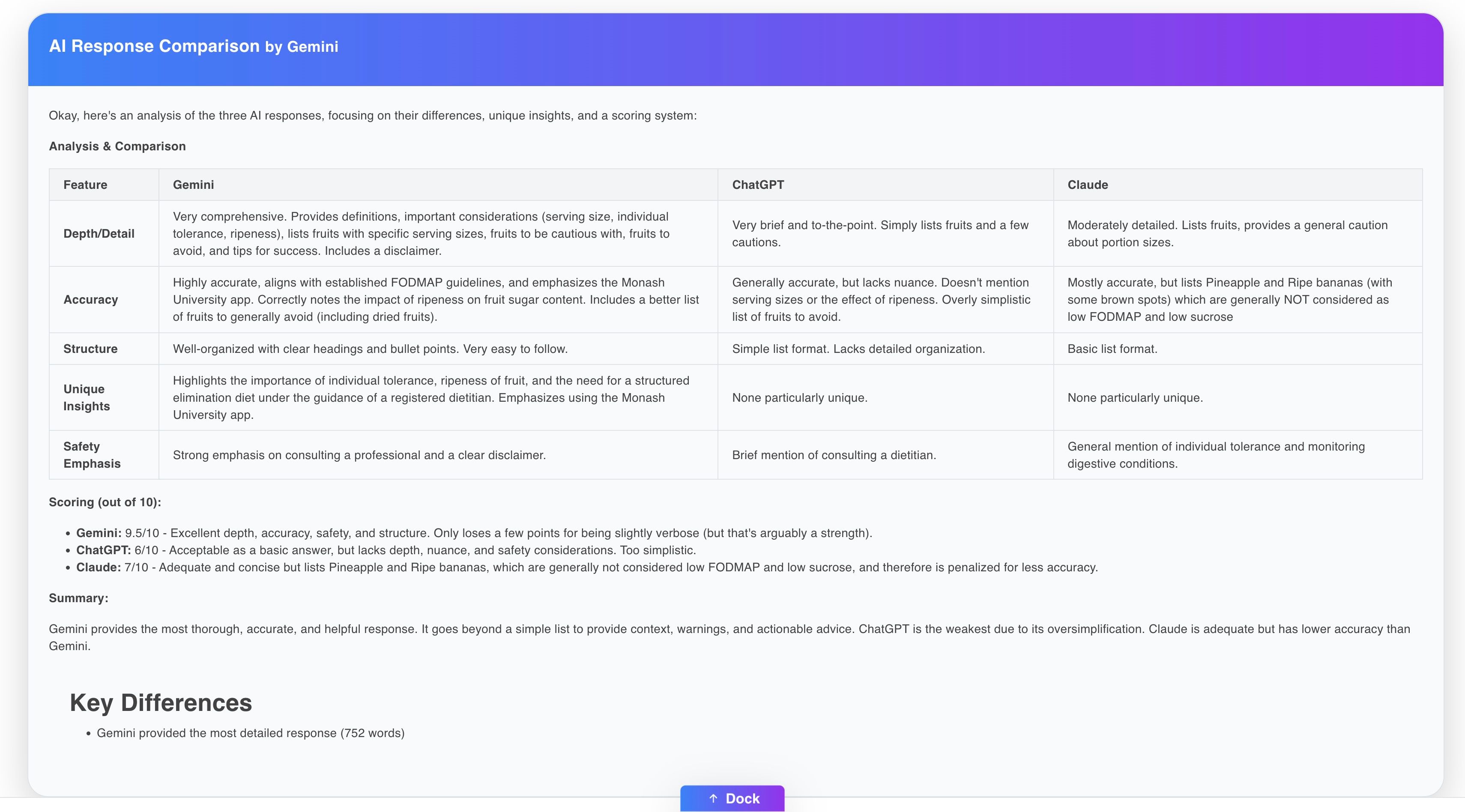

Using three AI chatbots at once is convenient, but the tool also helps you figure out which one might best suit your needs. First, you type in your prompt, send it off, and watch as Gemini, ChatGPT, and Claude work on it simultaneously. The most useful part comes after the responses arrive: the tool uses Gemini to compare each chatbot’s output. And no, you’re not alone in thinking it’s a bit unfair to let one of the contestants judge the competition.

The comparison breakdown goes pretty in-depth. However, because the comparison is itself generated by AI, it isn’t done the same way every time. As a small example, sometimes it rates the chatbots out of five, and other times out of 10. Generally, though, you get a breakdown of the level of detail each chatbot provides, its tone, and some commentary on the accuracy of its information. That last part should very much be taken with a grain of salt, since it’s literally AI “fact-checking” itself.

It even drills down into comparisons specific to your prompt. I asked about fruit, so the comparison covered how each chatbot handled fruit selection and serving size. Sometimes the individual responses get scored as well as the chatbots themselves. Then there’s an overall conclusion that highlights the key differences. In my case, it said Gemini gave the most detailed response because it used the most words, though quantity doesn’t always mean quality.

If you want more, a premium subscription is available for $29 per month. It unlocks additional models like DeepSeek and Grok, along with higher rate limits, so you can run more comparisons. There’s also a waiting list for upcoming premium features, including context modality, enhanced chat history, and an even wider array of AI models to pit against each other.

While the ability to use multiple AI chatbots at once is pretty cool, I found the comparisons at the end to be the most interesting part. Each one genuinely does “approach” prompts differently, though the end results are usually pretty similar.
