The iPhone 14 is almost certainly right around the corner, and the rumor mill has been churning furiously as we head toward the launch. I’ve been very vocal about the major features I want to see on Apple’s next flagship phone, but it’s the camera that I’m particularly keen to see take some real steps forward.

The cameras on Apple’s phones have always been superb: the iPhone 13 Pro can take the sort of shots you’d expect from professional cameras, and even Apple’s cheapest model, the iPhone SE, takes beautiful snaps on your summer vacation. But the Pixel 6 Pro and Samsung Galaxy S22 Ultra pack camera systems good enough that Apple no longer has the lead it once did.

So I’ve sat around daydreaming about how I’d go about redesigning Apple’s camera system for the iPhone 14 to hopefully secure its position as the best photography phone around. Apple, take note.

A much bigger image sensor on iPhone 14

The image sensors inside phones are tiny compared with the ones found in professional cameras like the Canon EOS R5. The smaller the image sensor, the less light it can collect, and light is everything in photography. More light captured means better-looking images, especially at night, which is why pro cameras have sensors many times the size of the ones found in phones.

Why are phone cameras lacking in this regard? Because the image sensors have to fit inside pocket-size phone bodies, where space is at a premium. But there’s certainly some room to play with. Phones like Sony’s Xperia Pro-I and even 2015’s Panasonic CM1 pack 1-inch camera sensors that can offer greatly improved dynamic range and low-light flexibility, so it’s not too wild to hope for a much larger image sensor inside the iPhone 14.

Panasonic’s CM1 had a 1-inch image sensor, and it came out back in 2015. Keep up, Apple.

Andrew Lanxon/CNET

Sure, Apple does amazing things with its computational photography to squeeze every ounce of quality from its small sensors, but pairing those same software skills with a much larger image sensor could make a dramatic difference. A 1-inch image sensor surely isn’t out of the question, but I’d really like to see Apple go even further with an APS-C size sensor, like those found in many mirrorless cameras.
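To put rough numbers on the sensor-size argument: at the same aperture (f-number) and shutter speed, the total light a sensor collects scales with its area. The short Python sketch below compares commonly quoted nominal dimensions for a 1-inch-type sensor, an APS-C sensor and a full-frame sensor like the one in the Canon EOS R5. The dimensions are approximate assumptions (APS-C in particular varies by manufacturer), and the iPhone’s own sensor is left out because I’m not assuming a figure for it.

```python
# Commonly quoted nominal sensor dimensions in millimetres (width, height).
# These are approximate assumptions: real sensors vary by model and maker,
# and APS-C here uses the roughly 23.5 x 15.6mm size used by several brands.
SENSOR_SIZES_MM = {
    "1-inch type": (13.2, 8.8),
    "APS-C (approx.)": (23.5, 15.6),
    "full frame": (36.0, 24.0),
}


def area_mm2(width_mm: float, height_mm: float) -> float:
    """Light-gathering area of a rectangular sensor in square millimetres."""
    return width_mm * height_mm


def relative_light(name: str, reference: str = "1-inch type") -> float:
    """How many times more total light sensor `name` collects than `reference`.

    Assumes the same f-number, shutter speed and scene, so the light falling on
    each square millimetre is identical and the total scales with area.
    """
    return area_mm2(*SENSOR_SIZES_MM[name]) / area_mm2(*SENSOR_SIZES_MM[reference])


if __name__ == "__main__":
    for name, (w, h) in SENSOR_SIZES_MM.items():
        print(f"{name}: {area_mm2(w, h):.0f} sq mm, "
              f"{relative_light(name):.1f}x the light of a 1-inch sensor")
```

On these nominal figures, an APS-C sensor collects roughly three times the light of a 1-inch sensor, and a full-frame sensor roughly seven times.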

Fine, not all three cameras could get massive sensors, or they simply wouldn’t fit in the phone, but the main one at least could get a size upgrade. Alternatively, Apple could use one massive image sensor and put the lenses on a rotating dial on the back, letting you physically change the view angle to suit your scene. I’ll be honest, that doesn’t sound like a very Apple thing to do.

A zoom to finally rival Samsung

While I generally find that images taken on the iPhone 13 Pro’s main camera look better than those taken on the Galaxy S22 Ultra, there’s one area where Samsung wins hands down: the telephoto zoom. The iPhone’s optical zoom tops out at 3x, but the S22 Ultra offers up to 10x optical zoom.

And the difference it makes in the shots you can get is astonishing. I love zoom lenses, as they let you find all kinds of hidden compositions in a scene instead of just using a wide lens and capturing everything in front of you. I find they allow for more artistic, more considered images, and though the iPhone’s zoom goes some way to helping you get these compositions, it’s no match for the S22 Ultra’s.

The Galaxy S22 Ultra has an awesome 10x optical zoom, and even the Pixel 6 Pro manages 4x.

Andrew Lanxon/CNET

So what the phone needs is a proper zoom lens that relies on good optics, not just digital cropping and sharpening, which tend to produce quite muddy-looking shots. It should have at least two optical zoom levels: 5x for portraits and 10x for more detailed landscapes. Better still, it would allow continuous zoom between these levels so you can find the perfect composition, rather than having to choose between two fixed zoom options.

Personally, I think 10x is as far as Apple would need to go. Sure, Samsung boasts that its phone can zoom up to 100x, but in reality those shots rely heavily on digital cropping and the results are terrible. 10x is huge: it’s the equivalent of carrying a 24-240mm lens for your DSLR, wide enough for sweeping landscapes with enough zoom for wildlife photography too. Ideal.
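The zoom arithmetic is simple enough to sketch. Zoom factors are ratios of focal lengths, so a 10x zoom starting from a 24mm-equivalent wide lens reaches 240mm. The Python sketch below also estimates the horizontal angle of view on a full-frame (36mm-wide) sensor, which shows why that range covers both landscapes and wildlife. The 24mm starting point and the full-frame equivalence are assumptions for illustration, not Apple or Samsung specifications.

```python
import math


def equivalent_focal_length(base_mm: float, zoom_factor: float) -> float:
    """Focal length reached at a given zoom factor.

    Zoom factors are ratios, so this is measured relative to the base
    (widest) lens, here assumed to be a full-frame-equivalent focal length.
    """
    return base_mm * zoom_factor


def horizontal_angle_of_view(focal_mm: float, sensor_width_mm: float = 36.0) -> float:
    """Horizontal angle of view in degrees for a rectilinear lens.

    Defaults to a 36mm-wide full-frame sensor, matching 'equivalent' focal lengths.
    """
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


if __name__ == "__main__":
    base = 24.0  # assumed wide-angle starting point in mm (full-frame equivalent)
    for zoom in (1, 5, 10):
        focal = equivalent_focal_length(base, zoom)
        print(f"{zoom:>2}x -> {focal:.0f}mm, about "
              f"{horizontal_angle_of_view(focal):.1f} degrees wide")
```

On these assumptions, the view narrows from roughly 74 degrees at 24mm to under 9 degrees at 240mm, which is what lets a long zoom pick out distant subjects.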

Pro video controls built in to the default camera app

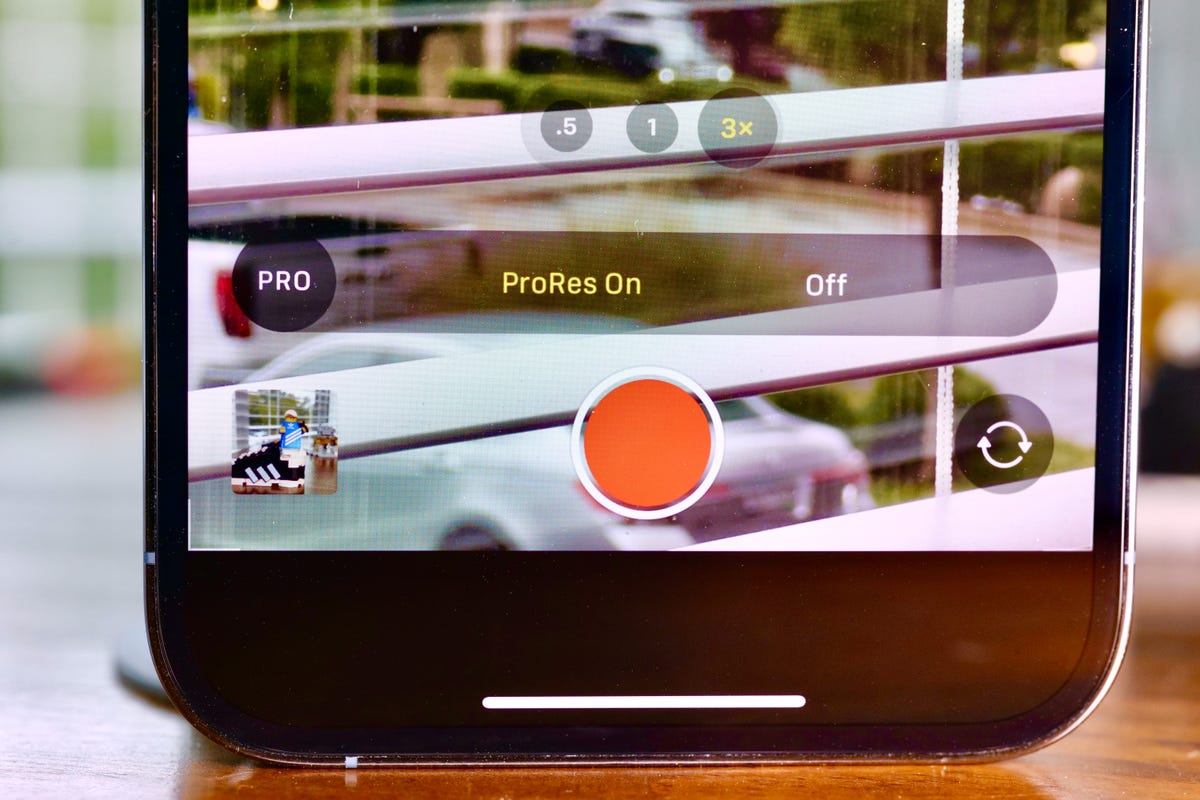

With the introduction of ProRes video on the iPhone 13 Pro, Apple gave a strong signal that it sees its phones as a genuinely useful video tool for professional creatives. ProRes is a video codec that captures a huge amount of data, allowing for more editing control in postproduction software like Adobe Premiere Pro.

ProRes is built in to the camera app, so why not offer other controls for pro users?

Patrick Holland/CNET

But the camera app itself is still pretty basic, with video settings limited mostly to turning ProRes on or off, switching zoom lenses and changing the resolution. And that’s kind of the point: make it as simple as possible to start shooting and capture beautiful footage with no fuss. But pros who want to use ProRes will likely also want more manual control over things like white balance, focus and shutter speed.

And yes, that’s why apps like Filmic Pro exist, giving you incredibly fine-grained control over all these settings to get exactly the look you want. But it’d be nice to see Apple make these settings more accessible within the default camera app. That way, you could fire up the camera from the lock screen, twiddle just a couple of settings and start rolling straight away, confident that you were getting exactly what you wanted from your video.

In-camera focus stacking on iPhone

Imagine you’ve found a beautiful mountain wildflower with a towering alpine peak behind it. You get up close to the flower and tap on it to focus, and it springs into sharp view. But now the mountain is out of focus, and tapping on that makes the flower blurry instead. This is a common issue when trying to focus on two items in a scene that are far apart, and experienced landscape and macro photographers get round it using a technique called focus stacking.

Using my Canon R5, I took multiple images here, focusing at different points on this fly, and then merged them together afterward. The result is a subject that’s pin-sharp from front to back.

Andrew Lanxon/CNET

Focus stacking means taking a series of images, with the camera held still, while focusing on different elements within a scene. Those images are then blended together, usually in desktop software like Adobe Photoshop or dedicated focus-stacking software like Helicon Focus, to create an image that is sharp from the extreme foreground all the way to the background. It’s the opposite goal to the camera’s Portrait Mode, which purposefully defocuses the background around a subject for that artful shallow depth of field, or “bokeh.”

It might be a niche desire, but I’d love to see this focus stacking capability built in to the iPhone, and it might not even be that difficult to do. After all, the phone already blends different exposures into one HDR image; it’d just be doing the same thing with focus points rather than exposure.
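The core of focus stacking can be sketched in a few lines. One simple approach, and not necessarily what Photoshop, Helicon Focus or Apple would use, is to score how sharp each pixel is in each frame (here with a Laplacian, which responds strongly to fine local contrast) and then build the output from whichever frame is sharpest at each position. This Python sketch assumes the frames are already perfectly aligned, as they would be with the camera held still, and represents images as hypothetical lists of grayscale brightness values. Real tools also align the frames and smooth the sharpness scores to avoid noisy, patchy results.

```python
from typing import List

Image = List[List[float]]  # hypothetical grayscale image: rows of brightness values


def sharpness_map(img: Image) -> Image:
    """Per-pixel sharpness: absolute response of a 4-neighbour Laplacian.

    In-focus areas have strong local contrast, so the response is large there.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            centre = img[y][x]
            total = 0.0
            # Clamp neighbours at the border so edge pixels still get a score.
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny = min(max(y + dy, 0), h - 1)
                nx = min(max(x + dx, 0), w - 1)
                total += img[ny][nx] - centre
            out[y][x] = abs(total)
    return out


def focus_stack(frames: List[Image]) -> Image:
    """Merge aligned frames shot at different focus distances by taking each
    pixel from whichever frame is sharpest at that location."""
    maps = [sharpness_map(f) for f in frames]
    h, w = len(frames[0]), len(frames[0][0])
    result = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = max(range(len(frames)), key=lambda i: maps[i][y][x])
            result[y][x] = frames[best][y][x]
    return result


if __name__ == "__main__":
    # Left half sharp in the first frame, right half sharp in the second;
    # the blurred halves are flat grey.
    near = [[0.0, 1.0, 0.0, 0.5, 0.5, 0.5]]
    far = [[0.5, 0.5, 0.5, 1.0, 0.0, 1.0]]
    print(focus_stack([near, far]))  # [[0.0, 1.0, 0.0, 1.0, 0.0, 1.0]]
```

The merged row keeps the fine detail from both frames, which is the same "sharp from front to back" result described above, just at a toy scale.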

Much better long-exposure photography

Apple has offered long-exposure shots on the iPhone for years now. You’ll have seen them: images of waterfalls or rivers where the water has been artfully blurred but the rocks and landscape around it remain sharp. It’s a great technique for highlighting the motion in a scene, and it’s something I love doing on both my proper camera and my iPhone.

A standard and long-exposure comparison, taken on the iPhone 11 Pro. It’s a good effort, but you lose a lot of detail in the process.

Andrew Lanxon/CNET

And though it’s easy to do on the iPhone, the results are only OK. The problem is that the iPhone uses a moving image (a Live Photo) to detect motion in the scene and then digitally blur it, and this usually means that any movement gets blurred, even bits that shouldn’t be. The result is quite mushy-looking shots, even when you put the phone on a mobile tripod for stability. They’re fine for sending to your family or posting to Instagram, but they won’t look good printed and framed on your wall, and I think that’s a shame.
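To see why movement between frames turns to mush, here’s a minimal sketch of the basic idea behind a simulated long exposure: average a burst of aligned frames pixel by pixel. Anything that stays put keeps its value, while anything that moves gets smeared across every position it passed through. This illustrates the general technique under my own assumptions, not Apple’s actual Live Photo pipeline, and it uses hypothetical grayscale frames stored as lists of brightness values.

```python
from typing import List

Frame = List[List[float]]  # hypothetical grayscale frame: rows of brightness values (0.0 to 1.0)


def simulate_long_exposure(frames: List[Frame]) -> Frame:
    """Average aligned frames pixel by pixel.

    Pixels that never change (rocks, buildings) keep their value; pixels that
    change from frame to frame (water, headlights) are smeared into a streak.
    """
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[y][x] for frame in frames) / n for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    # A bright "droplet" moves one pixel per frame; a static "rock" sits at the end.
    burst = [[[1.0 if x == i else 0.0 for x in range(4)] + [0.5]] for i in range(4)]
    print(simulate_long_exposure(burst))  # [[0.25, 0.25, 0.25, 0.25, 0.5]]
```

The droplet becomes a faint streak while the rock keeps its full value. The flip side is that if the phone itself shifts between frames, static detail gets averaged in exactly the same way, which is the kind of softness better stabilization would help avoid.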

I’d love to see Apple make better use of its optical image stabilization to allow for really sharp long-exposure photos, not just of water but of nighttime scenes too, such as car headlights snaking their way through the streets. It’d be another great way to get creative with your phone and make the most of those excellent cameras.