Apple has plans to make Apple Vision Pro even more accessible by allowing it to be controlled with custom gestures.

Imagine being on a Zoom call and being able to mute yourself the way a radio DJ does, by making a slicing gesture in front of your throat. Or imagine making the age-old hand signal for “phone me” and having Apple Vision Pro send your contact details to whoever you’re looking at.

That’s the implication of Apple’s newly granted patent, “Method And Device For Defining Custom Hand Gestures.” It describes a way of assigning any gesture to any Apple Vision Pro function, and while it stems from the headset’s accessibility features, its potential uses are much broader.

In demonstrations of Apple Vision Pro, Apple sometimes says that the headset is the most accessible device ever. Even in a short demo, for instance, there are options to help people with limited physical movement use it.

“In various implementations, an electronic device detects pre-defined hand gestures performed by a user and, in response, performs a corresponding function,” says the patent. “However, in various implementations, a user may be physically unable to perform the pre-defined hand gesture or may desire that a hand gesture perform a different function.”

It’s similar to how iPhone features such as Back Tap were introduced as accessibility options but are convenient enough that any user might adopt them. And just as with Back Tap, what this patent describes suggests that a gesture could run a Shortcut.

That opens up a great deal. The smallest gesture could run any Shortcut, so a single movement could trigger a whole sequence of actions.

As ever, the patent is concerned chiefly with how something can be done, rather than what the technology might then be used for. But across its 6,000 words and 48 drawings, it steps through how these future gestures could be detected and assigned to tasks or functions.

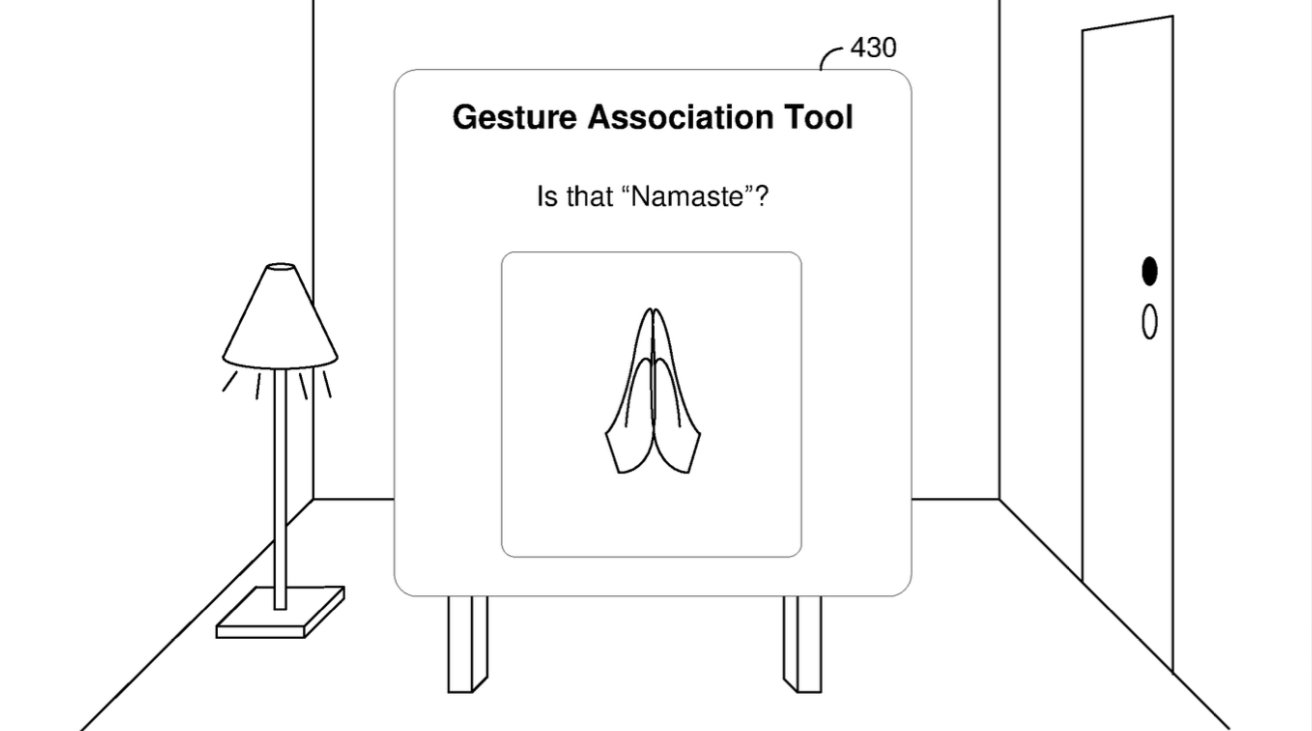

Each step uses what Apple calls a Gesture Association Tool, which begins by asking which function the user wants to create a gesture for. The patent is unclear about this stage, but presumably the user has to manually select a function or Shortcut.

If the gesture is a well-known one that isn’t already in use, Apple Vision Pro can also name it for you. The example given is someone holding their palms together, which the Tool guesses is “Namaste.”

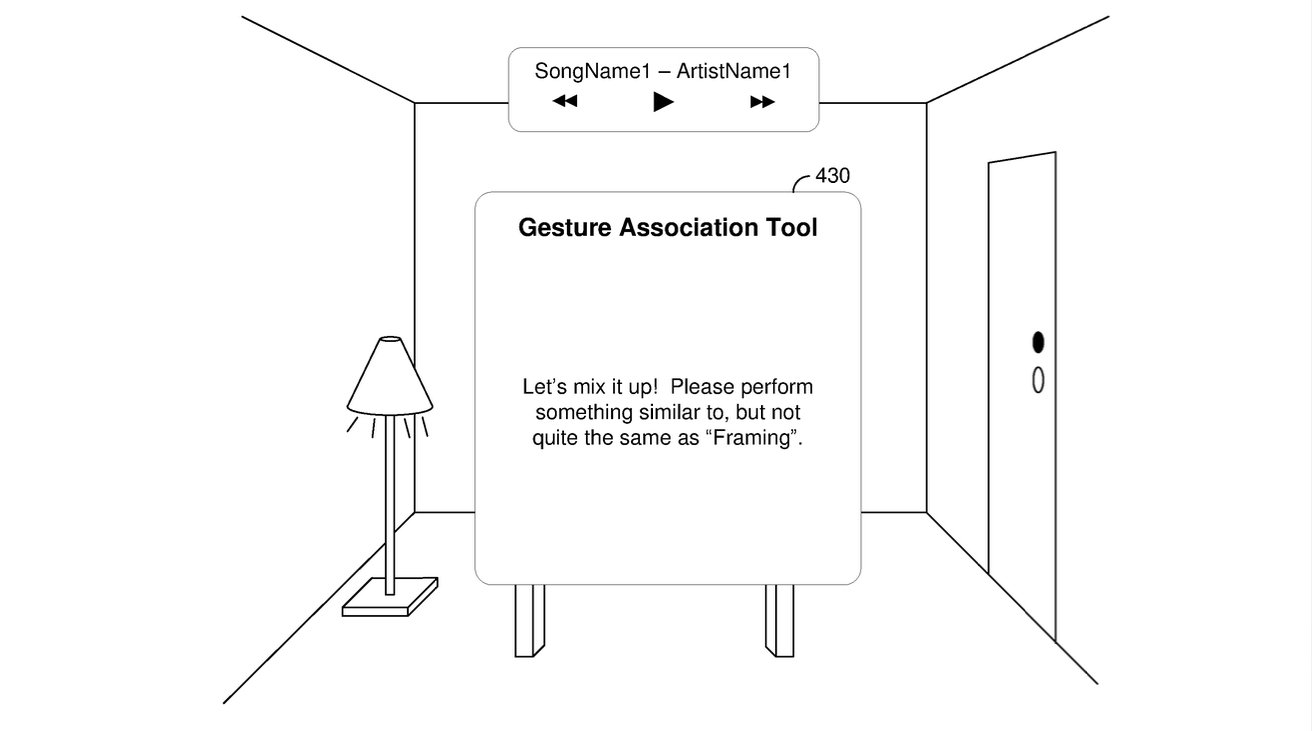

If it doesn’t recognize the gesture, the Tool asks the user to name it. It then asks the user to repeat the gesture, and finally to make a gesture that is similar but different.

From then on, the new gesture is recognized and triggers the associated task or function. Apple doesn’t describe editing or removing gestures later, but there would presumably be a way to do that, and surely a way to see all of your custom gestures.
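To make that flow a little more concrete, here is a rough Python sketch of how a tool like this might work. It is not Apple’s implementation, and the patent as described here doesn’t spell out the matching logic. Everything in it is an assumption for illustration: each gesture is reduced to a short list of numbers (a stand-in for real hand-tracking data), the repeated performances are averaged into a template, and the “similar but different” gesture is used to decide how close a new movement has to be to count as a match. The names `suggest_name`, `register_gesture`, and `dispatch` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence

# Hypothetical: a gesture sample is a fixed-length feature vector
# (a stand-in for real hand-tracking data such as joint positions).
Sample = Sequence[float]


def distance(a: Sample, b: Sample) -> float:
    """Euclidean distance between two equal-length samples."""
    return math.dist(a, b)


@dataclass
class CustomGesture:
    name: str
    template: List[float]      # average of the user's repetitions
    threshold: float           # largest distance still treated as a match
    action: Callable[[], str]  # stand-in for a system function or Shortcut


def suggest_name(sample: Sample, library: Dict[str, Sample], max_dist: float) -> Optional[str]:
    """Name the closest well-known gesture (e.g. "Namaste"), if one is close enough."""
    if not library:
        return None
    best = min(library, key=lambda name: distance(sample, library[name]))
    return best if distance(sample, library[best]) <= max_dist else None


def register_gesture(name: str, repetitions: Sequence[Sample], near_miss: Sample,
                     action: Callable[[], str]) -> CustomGesture:
    """Build a gesture from repeated performances plus one similar-but-different sample."""
    if not repetitions:
        raise ValueError("at least one repetition is required")
    n = len(repetitions)
    template = [sum(values) / n for values in zip(*repetitions)]
    # How much the user's own repetitions vary around the template.
    spread = max(distance(r, template) for r in repetitions)
    # How far the deliberately different gesture lands from the template.
    miss = distance(near_miss, template)
    if miss <= spread:
        raise ValueError("near-miss gesture is indistinguishable from the gesture itself")
    # Assumption: put the match boundary halfway between the two.
    return CustomGesture(name, template, (spread + miss) / 2, action)


def dispatch(sample: Sample, gestures: Sequence[CustomGesture]) -> Optional[str]:
    """Run the action of the closest registered gesture, or return None if none match."""
    best = min(gestures, key=lambda g: distance(sample, g.template), default=None)
    if best is None or distance(sample, best.template) > best.threshold:
        return None
    return best.action()


if __name__ == "__main__":
    mute = register_gesture(
        "Throat slice",
        repetitions=[[0.0, 1.0], [0.1, 0.9], [0.0, 0.8]],
        near_miss=[0.6, 0.9],
        action=lambda: "mute microphone",
    )
    print(dispatch([0.05, 0.95], [mute]))  # mute microphone
    print(dispatch([0.9, 0.1], [mute]))    # None
    print(suggest_name([0.52, 0.48], {"Namaste": [0.5, 0.5]}, max_dist=0.1))  # Namaste
```

In this toy version, a movement close to the throat-slice template runs the mute action, while one far from it does nothing. Using the near-miss sample to place the match boundary is just one plausible reason for that step; Apple may well use it differently.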

Apple’s patent also doesn’t comment on whether, for instance, Apple Vision Pro will refuse to recognize rude gestures.

This patent, which therefore hopes everyone will be nice, is credited to two inventors, Thomas G. Salter and Richard Ignatius Pusal Lozada. Both have multiple previous patents related to augmented reality and graphical environments.