While AI is under attack for copying existing works without permission, the industry could end up in even more legal trouble over trademarks.

The rise in interest in generative AI has also brought an increase in complaints about the technology. Along with complaints that AI is often incorrect, there are frequent issues with how the content used to train the models was sourced in the first place.

This has already prompted some legal action, such as Condé Nast sending a cease-and-desist letter to AI startup Perplexity, demanding that it stop using content from its publications.

In some instances, the companies producing AI are doing the right thing. For example, Apple has offered to pay publishers for access to their content for training purposes.

However, there may be a bigger problem on the horizon, especially for image-based generative AI, and one that goes beyond deepfakes: trademarks and product designs.

Highly protected

Major companies are very protective of their trademarks, copyrights, and other intellectual property, and will go to great lengths to keep them safe. They will also put a lot of effort into sending lawyers after people who infringe on their properties, in some cases with a view to securing a hefty financial payoff.

Since generative AI services that create images are often trained on photographs of millions of items, it stands to reason that they have also learned about product and company logos, product names, and product designs.

The problem is that this leaves people who generate images through these services open to legal action if the images contain designs and elements too closely based on existing logos or products.

In many cases, commercial generative AI image services do try to protect themselves and their users from lawsuits by including rules that the models follow. These rules typically include lists of items or actions that the models will not generate.

However, this is not always the case, and the rules are not always applied evenly across the board.

Monsters, mice, and flimsy rules

On Tuesday, while looking for images to accompany a cookie-related news story, one AppleInsider editorial team member wondered whether AI could produce something for the article. An offhand request was made to generate an image of "Cookie Monster eating Google Chrome icons like they are cookies."

Surprisingly, Microsoft Copilot generated an image of just that, with a detailed picture of the Sesame Street character about to consume a cookie bearing the Chrome icon.

AppleInsider didn't use the image in the article, but the result did raise questions about how much legal trouble a person could get into by using generative AI images.

Running the same Cookie Monster/Chrome request through ChatGPT 4 produced a similar result.

Adobe Firefly produced a different result altogether, ignoring both the Google Chrome and Cookie Monster elements. Instead, it created monsters eating cookies, a literal monster made from cookies, and a cat eating a cookie.

More importantly, it displayed a message warning "One or more words may not meet User Guidelines and were removed." Those guidelines, accessible via a link, have a sizable section titled "Be Respectful of Third-Party Rights."

In short, the text says that users should not violate third-party copyrights, trademarks, and other rights. Evidently, Adobe also proactively checks prompts for potential rule violations before generating any images.

We then tried using the same services to create images based on properties owned by more litigious and protective companies: Apple and Disney.

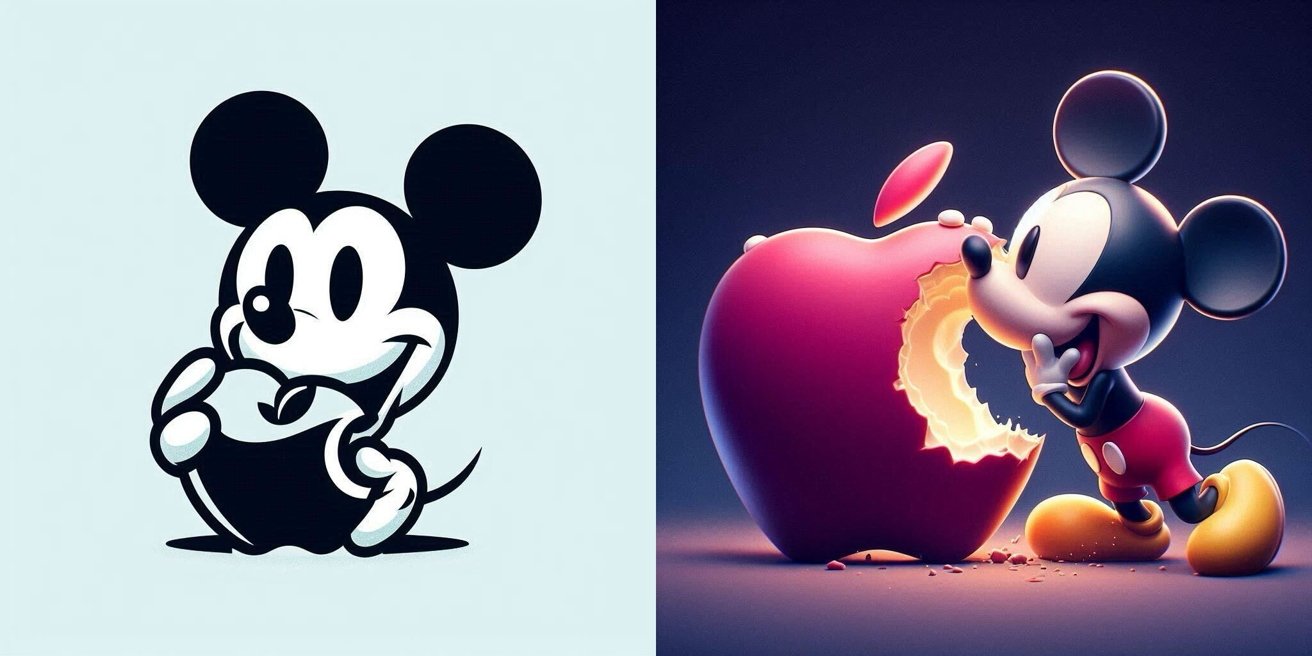

We fed Copilot, Firefly, and ChatGPT 4 the prompt "Mickey Mouse taking a bite out of an Apple logo."

Firefly again declined to continue with the prompt, and so did ChatGPT 4. Evidently, OpenAI is keen to play it safe and not rile either company at all.

Microsoft's Copilot, however, went ahead and created the images. The first was a fairly stylized black-and-white effort, while the second looked as if someone at Pixar had made it.

It seems that while some services are keen to avoid legal wrangling with well-heeled opponents, Microsoft is willing to proceed without fear of repercussions.

Plausible products

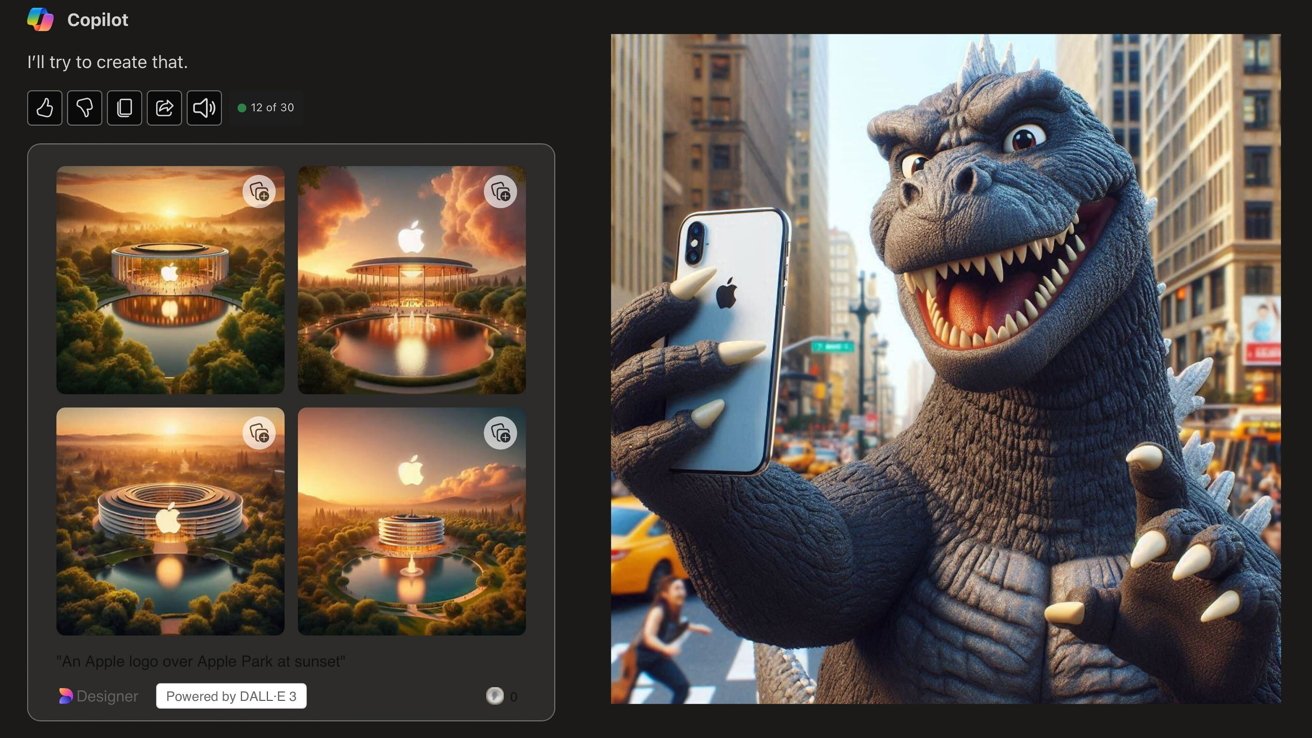

AppleInsider also tried generating images of iPhones using exact model names. It’s evident that Copilot knows what an iPhone is, but its designs are not quite up to date.

For example, the generated iPhones kept including the notch rather than the Dynamic Island that newer models use.

We were also able to generate an image of an iPhone next to a comically sized device reminiscent of Samsung Galaxy smartphones. One generated image even included odd combinations of earphones and pens.

Trying to trick the services into producing an image of Tim Cook holding an iPhone didn't work. However, "Godzilla holding an iPhone" worked fine in Copilot.

As for other Apple products, one early result was an older, thicker style of iMac, complete with an Apple keyboard and fairly accurate styling. However, for some reason, the image showed a hand using a stylus on the display, which is quite inaccurate.

It seems, at least, that Apple products are fairly safe to generate with these services, if only because the results are based on older designs.

Copilot’s legal leanings

While a dodgy generative AI image containing a company's logo or product could be a legal issue in waiting, it seems that Microsoft is confident in Copilot's ability to avoid such issues.

A Microsoft blog post published in September 2023 and updated in May 2024 said that Microsoft would help "defend the customer and pay the amount of any adverse judgments or settlements that result from the lawsuit, as long as the customer used the guardrails and content filters we have built into our products."

It appears that this applies only to commercial customers, not to consumer or personal users, who may not necessarily use the generated images for commercial purposes.

If Microsoft's commercial clients use the same AI technologies, with the same guidelines, as consumers of the service, this could become a legal nightmare for Microsoft down the road.

Apple Intelligence

Everyone is aware that Apple Intelligence is on the way. The collection of features includes some for handling text and some for queries, but many are devoted to generative AI imagery.

The imagery features are dominated by Image Playground, an app that uses text prompts and suggests additional influences to create images. In some apps, the system works directly on the page, combining subjects within the document to create a custom image that fills unoccupied space.

Because Apple is one of the companies most inclined to protect its IP, and has already shown that it wants to be responsible in how it uses the technology, Apple Intelligence may be quite strict in how it generates images. It may well avoid most of the trademark and copyright issues others have to deal with.

Apple's keynote example was of a mother dressed as a generic superhero, not a specific character like Wonder Woman. However, we won't truly know how these tools work until Apple releases the features to the public.
