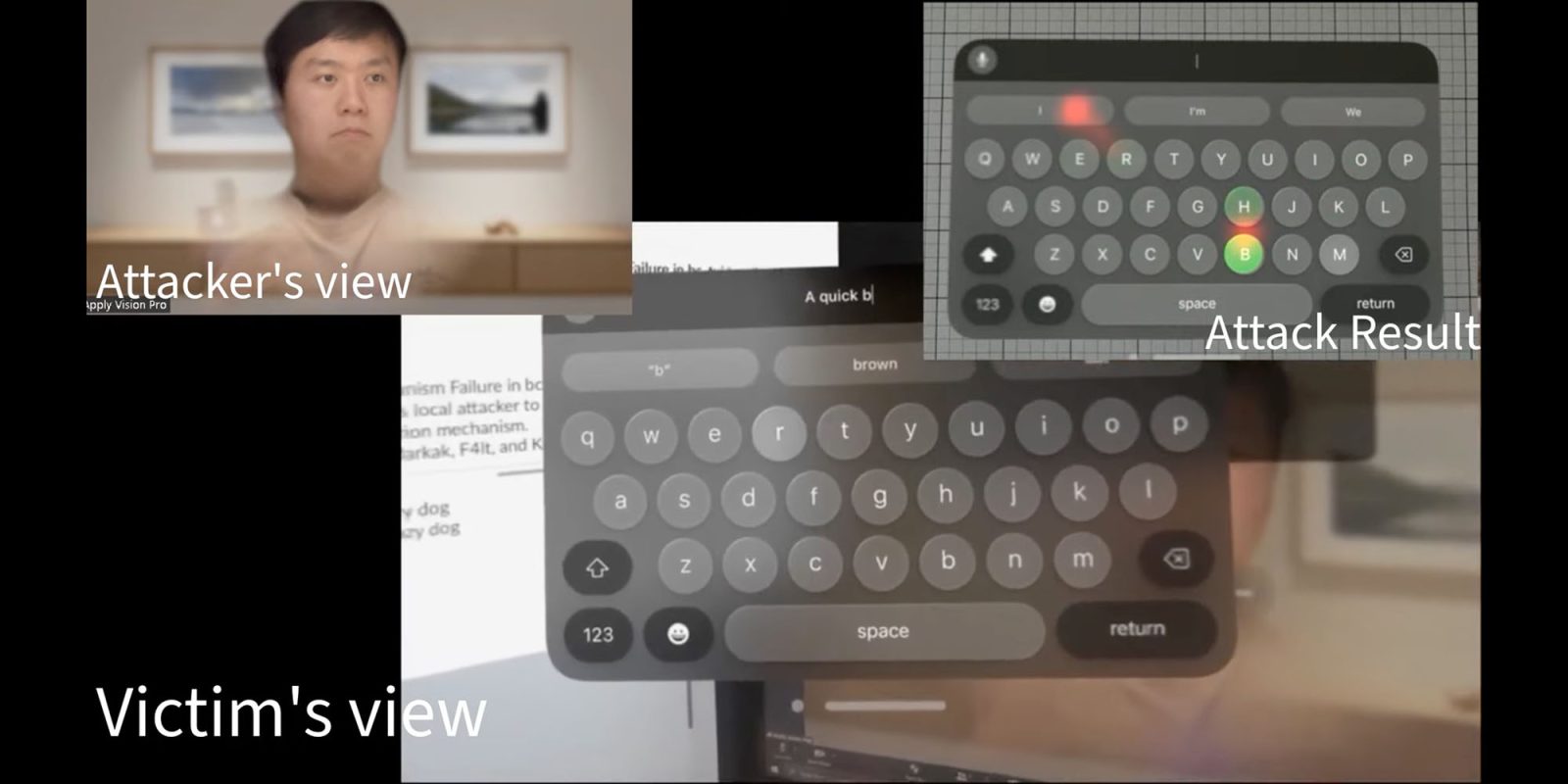

Security researchers have come up with a pretty wild Vision Pro exploit. Dubbed GAZEploit, it’s a method of working out the passwords of Vision Pro users by watching the eye movements of their avatars during video calls.

They’ve put together a YouTube video (below) demonstrating how tracking the avatar’s eye movements reveals which virtual keys the Vision Pro user is looking at while typing.

When you type on Vision Pro as a standalone device, the unit displays a large virtual keyboard and uses eye tracking to detect which key you are looking at as you type.

The problem is that, if you’re on a video call, your avatar’s eyes will accurately reflect the direction of your own eyes – and an attacker can monitor the avatar’s eye movements to work out the keys you’re looking at as you type.
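To make the idea concrete, here is a minimal Python sketch of the last step of such an attack. It assumes the avatar’s gaze direction has already been projected onto the plane of the virtual keyboard as a 2D point. The layout (`ROWS`, `ROW_OFFSETS`) and `nearest_key` are hypothetical illustrations, not GAZEploit’s code or the real visionOS keyboard geometry; the sketch simply picks the key whose center is closest to the gaze point.

```python
import math

# Hypothetical keyboard geometry: each key is a 1x1 square on a 2D plane,
# with rows staggered roughly like a QWERTY layout. The real visionOS
# keyboard size, spacing and placement are NOT modeled here.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.25, 0.75]


def key_centers():
    """Return {key: (x, y)} center coordinates for the hypothetical layout."""
    centers = {}
    for row_idx, (row, offset) in enumerate(zip(ROWS, ROW_OFFSETS)):
        for col_idx, key in enumerate(row):
            centers[key] = (offset + col_idx + 0.5, row_idx + 0.5)
    return centers


CENTERS = key_centers()


def nearest_key(gaze_x, gaze_y):
    """Map an estimated gaze point on the keyboard plane to the closest key."""
    return min(
        CENTERS,
        key=lambda k: math.hypot(CENTERS[k][0] - gaze_x, CENTERS[k][1] - gaze_y),
    )
```

Feeding it the gaze points recorded at each suspected keystroke, e.g. `"".join(nearest_key(x, y) for x, y in points)`, would spell out a guessed string.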

A neural network can even work out when you are typing.

The gaze estimation reveals a noteworthy pattern:

- Direction of eye gaze tends to be more concentrated and exhibits a periodic pattern during typing sessions.

- Frequency of eye blinking decreases during typing sessions.
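Those two cues can be turned into numbers for each time window. This is a hedged sketch under its own assumptions: `window_features` is a hypothetical helper, gaze points are 2D estimates on a fixed plane, blinks have already been detected, and dispersion (RMS distance from the window’s centroid) stands in for how “concentrated” the gaze is. The periodic pattern is not modeled here.

```python
import math
import statistics


def window_features(gaze_points, blinks, window_seconds):
    """Summarize one non-empty window of gaze data.

    gaze_points: list of (x, y) gaze estimates within the window
    blinks: number of blinks detected in the window
    window_seconds: window length in seconds
    Returns (dispersion, blink_rate_per_minute).
    """
    xs = [p[0] for p in gaze_points]
    ys = [p[1] for p in gaze_points]
    cx, cy = statistics.fmean(xs), statistics.fmean(ys)
    # Dispersion: RMS distance of gaze points from their centroid.
    # Lower values mean a more concentrated gaze, as during typing.
    dispersion = math.sqrt(
        statistics.fmean((x - cx) ** 2 + (y - cy) ** 2 for x, y in gaze_points)
    )
    # Blink rate normalized to blinks per minute; lower during typing.
    blink_rate = blinks * 60.0 / window_seconds
    return dispersion, blink_rate
```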

A recurrent neural network (RNN) is well suited to tasks that require recognizing patterns in sequential data.
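The researchers’ network isn’t described here, so the following only illustrates what “recurrent” means in this context: a single-layer Elman RNN in plain Python that carries a hidden state from one time window to the next and outputs a per-window probability that the user is typing. The function name, the input layout `(dispersion, blinks per minute)` and all weights are hypothetical; a real detector would learn its weights from labeled recordings.

```python
import math


def elman_rnn_typing_scores(features, W_xh, W_hh, b_h, w_hy, b_y):
    """Run a single-layer Elman RNN over a sequence of feature vectors.

    features: list of feature vectors, one per time window
    W_xh: hidden-by-input weights; W_hh: hidden-by-hidden weights
    b_h: hidden bias; w_hy: output weights; b_y: output bias
    Returns one probability per window that the user is typing.
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # initial hidden state
    scores = []
    for x in features:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); the right-hand side
        # uses the previous h before it is reassigned.
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][k] * h[k] for k in range(hidden_size))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
        # p_t = sigmoid(w_hy . h_t + b_y)
        logit = sum(w_hy[i] * h[i] for i in range(hidden_size)) + b_y
        scores.append(1.0 / (1.0 + math.exp(-logit)))
    return scores


# Example with hand-picked (untrained) weights: a concentrated, low-blink
# window scores above 0.5, a scattered, blink-heavy window below 0.5.
scores = elman_rnn_typing_scores(
    [(0.1, 5.0), (2.0, 20.0)],
    W_xh=[[-2.0, -0.1]], W_hh=[[0.5]], b_h=[1.0], w_hy=[3.0], b_y=0.0,
)
```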

The team analyzed the eye movements of 30 Vision Pro users and achieved very high accuracy rates.

During gaze typing, users’ gazes shift between keys and fixate on the key to be clicked, resulting in saccades followed by fixations. Saccades are the periods when users move their gaze rapidly from one object to another; fixations are the periods when users hold their gaze on an object.

We developed an algorithm that calculates the stability of the gaze trace and sets a threshold to separate fixations from saccades. We use the gaze estimation points in these high-stability regions as click candidates. Evaluation on our dataset shows precision and recall rates of 85.9% and 96.8%, respectively, in identifying keystrokes within typing sessions.
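That approach can be sketched roughly as follows. The researchers’ exact stability metric isn’t given, so this sketch swaps in a simple one: for each sample, stability is `1 / (1 + mean step length)` over a small window of neighboring samples, and samples at or above a threshold count as part of a fixation. Each run of stable samples becomes one click candidate at its centroid. `click_candidates`, `centroid` and the default `window`, `threshold` and `min_run` values are hypothetical, and gaze coordinates are assumed to be sampled at a fixed rate in key-width units.

```python
import math


def centroid(points):
    """Mean (x, y) of a non-empty list of points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def click_candidates(trace, window=3, threshold=0.8, min_run=3):
    """Return centroids of high-stability runs (fixations) in a gaze trace.

    trace: list of (x, y) gaze estimates sampled at a fixed rate
    window: number of steps on each side used to measure local stability
    threshold: minimum stability for a sample to count as fixating
    min_run: minimum consecutive stable samples for one fixation
    """
    n = len(trace)
    # steps[j] is the distance between samples j and j + 1.
    steps = [math.dist(trace[j], trace[j + 1]) for j in range(n - 1)]
    stable = []
    for i in range(n):
        local = steps[max(0, i - window):min(n - 1, i + window)]
        mean_step = sum(local) / len(local) if local else 0.0
        # Stability in (0, 1]: 1.0 when the gaze is perfectly still.
        stable.append(1.0 / (1.0 + mean_step) >= threshold)

    candidates, run = [], []
    for point, is_stable in zip(trace, stable):
        if is_stable:
            run.append(point)
            continue
        # A saccade sample ends the current run.
        if len(run) >= min_run:
            candidates.append(centroid(run))
        run = []
    if len(run) >= min_run:
        candidates.append(centroid(run))
    return candidates
```

Each candidate could then be mapped to a key. Comparing candidates with the true keystrokes gives precision (the share of candidates that were real keystrokes) and recall (the share of real keystrokes that were found), the two figures quoted above.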

In addition to detecting passwords, GAZEploit was also able to spy on messages and website addresses typed by Vision Pro users during video calls.

Check out the demo video below, with more details here.

9to5Mac’s Take

This is an imaginative exploit!

The issue arises because the same eye-tracking data is used both for keyboard input and for generating the avatar image. Apple could likely fix it by adding a relatively small, random displacement to the avatar’s eye movements.
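A minimal sketch of that suggested mitigation, assuming the avatar’s gaze is represented as yaw and pitch angles in degrees. `jitter_avatar_gaze` and its 2-degree default bound are hypothetical, not an Apple API; only the rendered avatar would be perturbed, while the keyboard keeps using the true gaze.

```python
import random


def jitter_avatar_gaze(yaw_deg, pitch_deg, max_offset_deg=2.0, rng=random):
    """Return avatar gaze angles with a bounded random displacement added.

    The offset on each axis is drawn uniformly from
    [-max_offset_deg, max_offset_deg]. The bound (hypothetical here) trades
    off hiding keystroke-level detail against natural-looking eye contact.
    """
    return (
        yaw_deg + rng.uniform(-max_offset_deg, max_offset_deg),
        pitch_deg + rng.uniform(-max_offset_deg, max_offset_deg),
    )
```

One caveat: if a fresh offset is drawn independently for every frame, an attacker averaging many frames during a fixation could partly cancel it out, so the noise’s distribution and timing would need careful design.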

Via Wired. Framegrab: GAZEploit.
