On Linux, pipes and redirection let you use the output from commands in powerful ways. Capture it in files, or use it as input with other commands. Here’s what you need to know.

What Are Streams?

Linux, like other Unix-like operating systems, has a concept of streams. Each process has an input stream called stdin, an output stream called stdout, and a stream for errors, called stderr. Linux streams, like streams in the real world, have two end points. They have a source or input, and a destination, or output.

The input stream might come from the keyboard to the command, letting you send text such as information or commands to the process. The output stream comes from the command, usually to the terminal window. The stderr stream also writes to the terminal window.

You can redirect streams and you can pipe them. Redirection means sending the output to somewhere other than the terminal window. Piping means taking the output of one command and using it as the input to another command.

This lets you chain commands together to create sophisticated solutions out of a series of simple commands working in collaboration.
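To make the separate streams concrete, here is a minimal sketch. The `report` function is a hypothetical helper invented for this illustration, not a standard command; it writes one line to stdout and one line to stderr. Command substitution picks up stdout only, so the error line still goes straight to the terminal.

```shell
# Hypothetical helper: writes one line to each output stream.
report() {
    echo "normal output"        # written to stdout (stream 1)
    echo "error output" >&2     # written to stderr (stream 2)
}

# $( ... ) captures stdout only. The stderr line is not captured and
# still appears in the terminal window.
captured=$(report)

echo "captured from stdout: $captured"
```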

Redirecting Streams

The simplest form of redirection takes the output from a command and sends it to a file. Even this trivial case can be useful. Perhaps you require a record of the command’s output, or maybe there’s so much output scrolling by you can’t possibly read it.

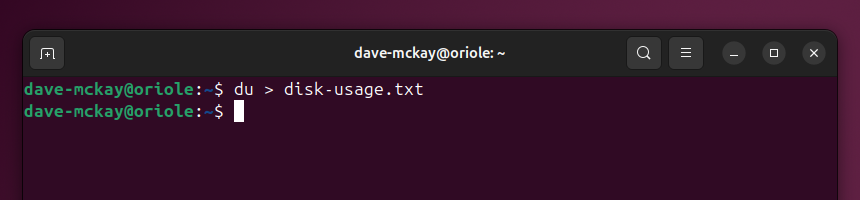

On this test PC, the du command outputs 1380 lines of text. We’ll send that to a file.

du > disk-usage.txt

The right angle bracket “>” tells the shell to redirect stdout from the du command into a file called disk-usage.txt. No output is sent to the terminal window.

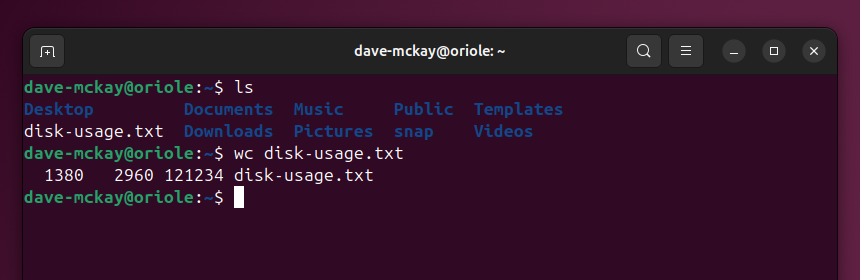

We can use ls to verify the file has been created, and wc to count the lines, words, and bytes in the file. As expected, wc reports that the file has 1380 lines in it.

ls

wc disk-usage.txt

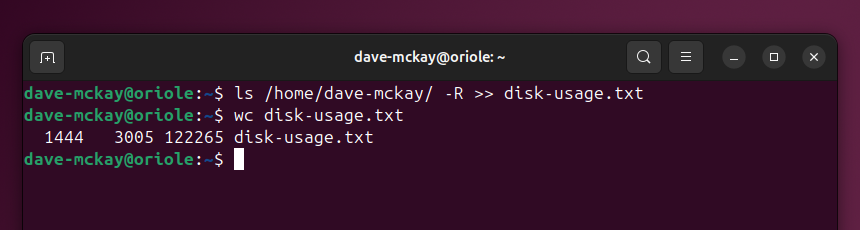

This type of redirection creates or overwrites the file each time you use it. If you want to append the redirected text to the end of an existing file, use double right angle brackets “>>”, like this.

ls /home/dave-mckay/ -R >> disk-usage.txt

wc disk-usage.txt

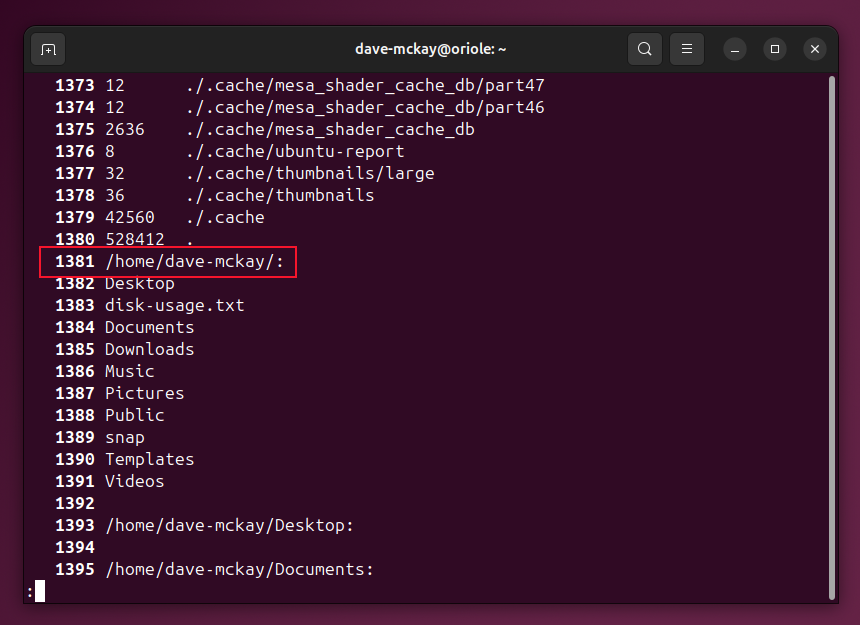

Using the -N (line numbers) option with less, we can verify that the new information has been appended after line 1380.

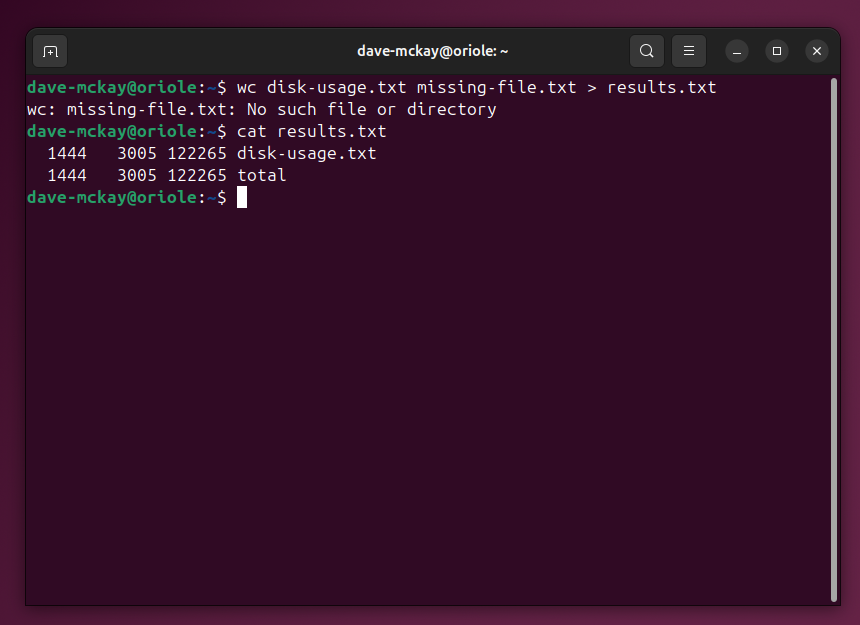
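The difference between overwriting and appending can be checked on a throwaway file. This is a small sketch; the scratch directory and the name demo.txt are assumptions made for the example, so no real files are touched.

```shell
workdir=$(mktemp -d)             # scratch directory for the demonstration
log="$workdir/demo.txt"          # hypothetical file name

echo "first"  >  "$log"          # creates the file
echo "second" >  "$log"          # overwrites it, so "first" is gone
echo "third"  >> "$log"          # appends, so the file now has two lines

contents=$(cat "$log")
echo "$contents"

rm -r "$workdir"                 # tidy up
```

Only "second" and "third" survive, because the second ">" replaced the file's contents before ">>" added to them.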

If we write a command that generates an error, we’ll see that, because we’re only redirecting stdout, any stderr error messages are still sent to the terminal window.

wc disk-usage.txt missing-file.txt > results.txt

wc: missing-file.txt: No such file or directory

cat results.txt

The results for disk-usage.txt are sent to the results.txt file, but the error message for the non-existent missing-file.txt is sent to the terminal window.

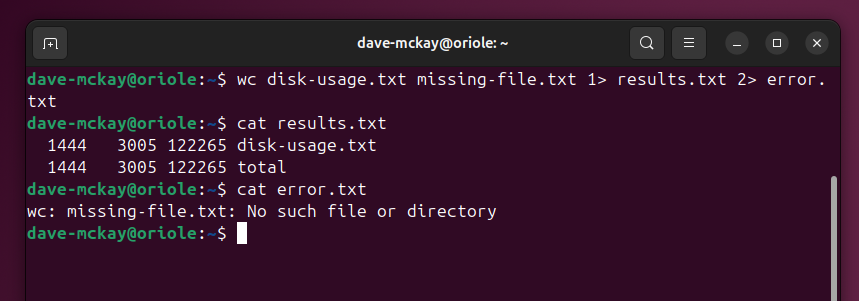

We can add numeric indicators to the right angle bracket to make it explicit which stream we’re redirecting. Stream 1 is stdout and stream 2 is stderr. We can redirect stdout to one file and stderr to another, quite easily.

wc disk-usage.txt missing-file.txt 1> results.txt 2> error.txt

cat results.txt

cat error.txt

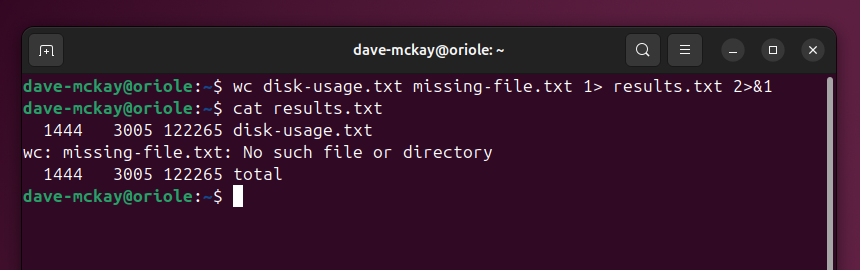

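If you want both streams redirected to a single file, redirect stdout to the file, then tell the shell to send stderr to the same destination that stdout is going to. The order matters: the shell processes redirections from left to right, so 2>&1 must come after the stdout redirection.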

wc disk-usage.txt missing-file.txt 1> results.txt 2>&1

cat results.txt

Any error messages are captured and sent to the same file as stdout.

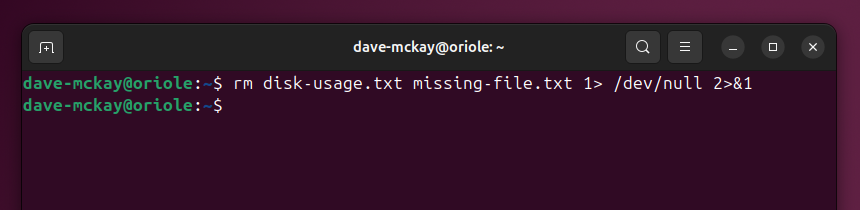
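The position of 2>&1 on the command line makes a difference, because the shell applies redirections from left to right. The sketch below illustrates this with a hypothetical `report` function (invented for this example) that writes one line to each stream, and with scratch files whose names are also assumptions.

```shell
workdir=$(mktemp -d)

# Hypothetical helper: writes one line to each output stream.
report() {
    echo "normal output"
    echo "error output" >&2
}

# stdout is sent to the file first, then stderr is pointed at the same place.
report > "$workdir/both.txt" 2>&1

# Reversed order: stderr is pointed at wherever stdout goes right now (here,
# the command substitution), and only afterwards is stdout sent to the file.
leaked=$(report 2>&1 > "$workdir/stdout-only.txt")

both=$(cat "$workdir/both.txt")
stdout_only=$(cat "$workdir/stdout-only.txt")

echo "both.txt:        $both"
echo "stdout-only.txt: $stdout_only"
echo "not in the file: $leaked"

rm -r "$workdir"
```

With the reversed order, the error line never reaches the file.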

You might not want to store any of the output at all; you just don’t want anything written to the terminal window. The null device file, /dev/null, silently discards everything sent to it, which makes it a handy destination for unwanted screen output.

rm disk-usage.txt missing-file.txt 1> /dev/null 2>&1

Neither stdout nor stderr messages are written to the terminal window, even though one of the files we’re deleting doesn’t exist.

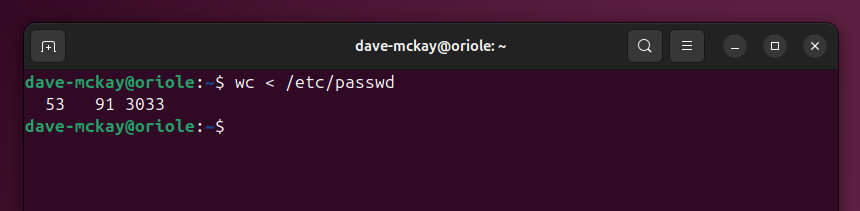

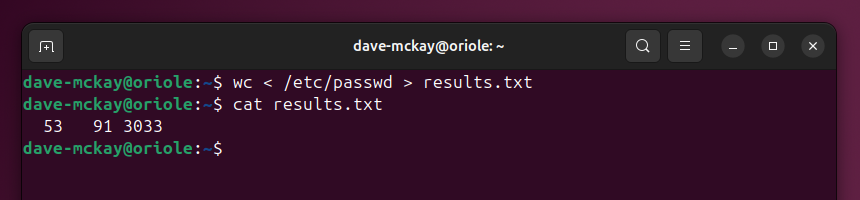
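Silencing a command does not hide whether it worked. As a sketch going slightly beyond the text above, the shell can still test the command's exit status even when both streams go to /dev/null. The file names here are made up for the illustration, and absent.txt is deliberately never created.

```shell
workdir=$(mktemp -d)
touch "$workdir/present.txt"     # absent.txt is deliberately never created

# Both streams are discarded, but "if" still sees rm's exit status.
if rm "$workdir/present.txt" "$workdir/absent.txt" > /dev/null 2>&1; then
    outcome="rm succeeded"
else
    outcome="rm reported a problem"
fi
echo "$outcome"

# The file that did exist was still removed.
if [ -e "$workdir/present.txt" ]; then removed="no"; else removed="yes"; fi
echo "present.txt removed: $removed"

rm -r "$workdir"
```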

One final trick you can do with redirection is to read a file into a command’s stdin stream, using the left angle bracket “<”.

wc < /etc/passwd

You can combine this with redirection of the output.

wc < /etc/passwd > results.txt

cat results.txt
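One visible effect of feeding a file to stdin is that the command no longer knows the file's name. This short sketch compares the two forms on a three-line scratch file; the name sample.txt is an assumption for the example.

```shell
workdir=$(mktemp -d)
sample="$workdir/sample.txt"
printf 'one\ntwo\nthree\n' > "$sample"

# wc opens the file itself, so it reports the name next to the count.
with_name=$(wc -l "$sample")

# The shell opens the file and hands it to wc as stdin, so wc reports
# only the count.
from_stdin=$(wc -l < "$sample")

echo "file as argument: $with_name"
echo "file on stdin:    $from_stdin"

rm -r "$workdir"
```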

Putting Streams Through Pipes

A pipe effectively redirects the stdout of one command and sends it to the stdin of another command. Piping is one of the most powerful aspects of the command line, and can transform your use of the core Linux commands and utilities.

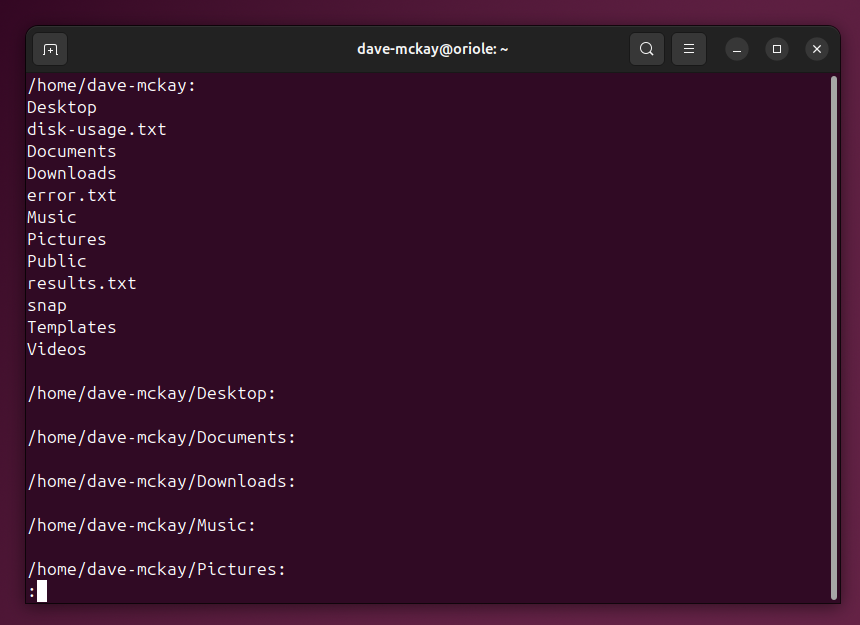

To pipe the output of one command into another, we use the pipe “|” symbol. For example, if you recursively listed all the files and subdirectories in your home directory, you’d see a fast blur as the output from ls whizzed by in the terminal window.

By piping the ls command into less, we get the results displayed in a convenient file viewer.

ls -R ~ | less

That’s more efficient than the two-step manual process of sending the output to a file and opening the file in less.
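To show that a pipe gives the same result as the two-step approach, this sketch runs both on a few made-up words, using sort as the receiving command so the output can be compared. The scratch file name is an assumption for the example.

```shell
workdir=$(mktemp -d)

# Two steps: write the output to a file, then run the second command on it.
printf 'pear\napple\nfig\n' > "$workdir/words.txt"
two_step=$(sort "$workdir/words.txt")

# One step: the pipe connects the first command's stdout to sort's stdin.
piped=$(printf 'pear\napple\nfig\n' | sort)

echo "$piped"

if [ "$two_step" = "$piped" ]; then
    echo "both approaches give the same result"
fi

rm -r "$workdir"
```

The piped version needs no intermediate file and nothing to clean up afterwards.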

Piping Output Through Another Command

Piping really comes into its own when the second command does further processing on the output of the first command.

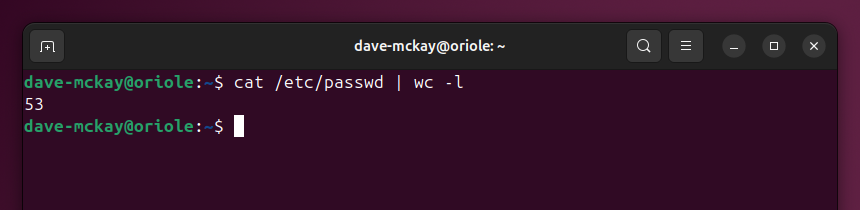

Let’s count the number of user and pseudo-user accounts on your computer. We’ll use cat to dump the contents of the /etc/passwd file, and pipe it into wc. The -l (lines) option tells wc to count only the lines. Because there’s one line per account, this counts the accounts for us.

cat /etc/passwd | wc -l

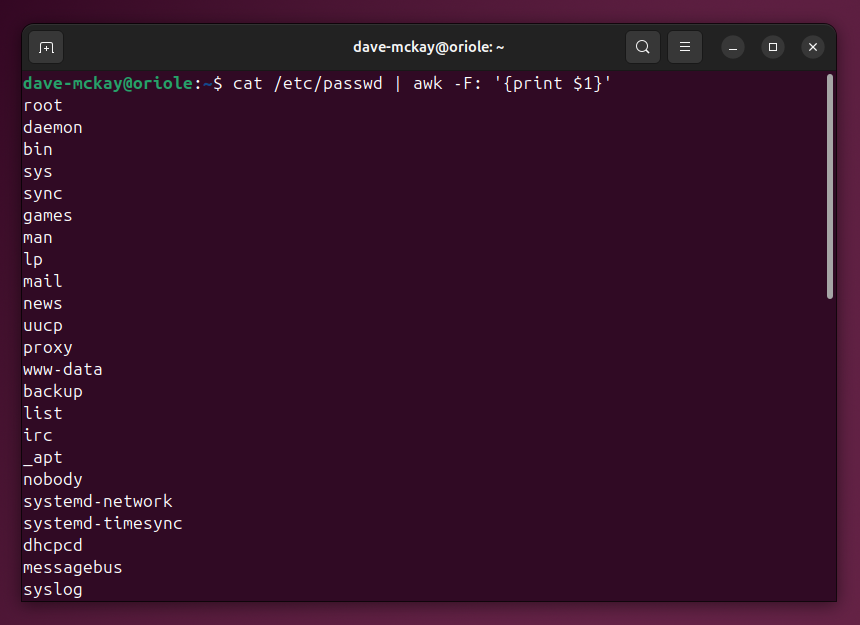

That sounds like a lot. Let’s see the names of those accounts. This time we’ll pipe cat into awk. The awk command is told to use the colon “:” as the field separator, and to print the first field.

cat /etc/passwd | awk -F: '{print $1}'

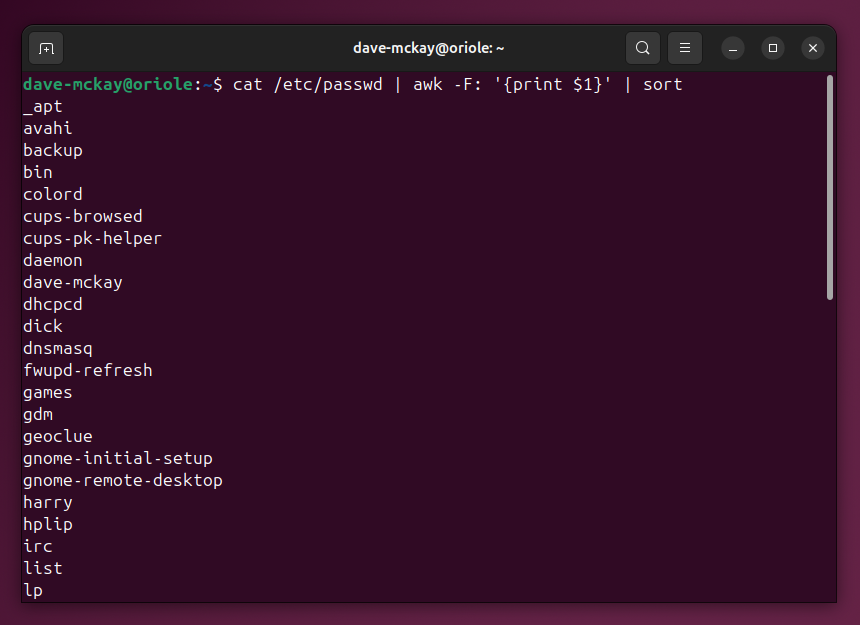

We can keep adding commands. To sort the list, append the sort command so that the output from awk goes to the sort command.

cat /etc/passwd | awk -F: '{print $1}' | sort
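Because the contents of /etc/passwd differ from machine to machine, here is the same chain run against a small file in the same colon-separated layout. The three accounts are made up for this sketch, so the result is predictable.

```shell
workdir=$(mktemp -d)
sample="$workdir/passwd-sample"   # stand-in for /etc/passwd, invented data

cat > "$sample" <<'EOF'
root:x:0:0:root:/root:/bin/bash
dave:x:1000:1000:Dave:/home/dave:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
EOF

# One line per account, so counting lines counts accounts.
account_count=$(cat "$sample" | wc -l)

# Field 1 is the account name; sort puts the names in order.
names=$(cat "$sample" | awk -F: '{print $1}' | sort)

echo "accounts: $account_count"
echo "$names"

rm -r "$workdir"
```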

Piping Output Through a Chain of Commands

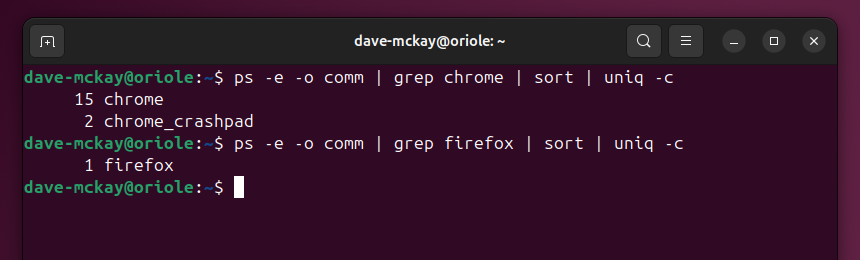

Here’s a set of four commands joined with three pipes. The ps command lists running processes. The -e (everything) option lists all processes, and the -o (output) option specifies what information to report on. The comm token means we want to see the process name only.

The list of process names is then piped into grep, which keeps only the lines that have chrome in them. That filtered list is fed into sort, to sort the list. The sorted list is then piped into uniq. The -c (count) option prefixes each unique process name with the number of times it occurs. Then, just for fun, we do the same thing for firefox.

ps -e -o comm | grep chrome | sort | uniq -c

ps -e -o comm | grep firefox | sort | uniq -c
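The sort step in that chain is not decoration: uniq only merges lines that are next to each other. This sketch uses a made-up list of process names in place of real ps output to show the difference.

```shell
# Made-up process names standing in for the output of ps -e -o comm.
names=$(printf 'chrome\nfirefox\nchrome\nbash\nchrome\n')

# Without sort, no two identical names are adjacent, so nothing is merged.
unsorted_groups=$(printf '%s\n' "$names" | uniq -c | wc -l)

# With sort, identical names sit together and uniq collapses them.
sorted_groups=$(printf '%s\n' "$names" | sort | uniq -c | wc -l)

# The full chain: keep the chrome lines, group them, and read off the count.
chrome_count=$(printf '%s\n' "$names" | grep chrome | sort | uniq -c | awk '{print $1}')

echo "groups without sort: $unsorted_groups"
echo "groups with sort:    $sorted_groups"
echo "chrome processes:    $chrome_count"
```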

Endless Combinations

There’s no practical limit to the number of commands you can pipe together. Just be aware that each command in the pipeline runs in its own new subshell. That shouldn’t be a problem on even the most modestly-specced modern computer, but if you do see slowdowns, consider simplifying your commands. Or be patient.
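The subshell behavior has one practical side effect worth knowing. The sketch below assumes bash with its default settings; some other shells, such as zsh, run the last command of a pipeline in the current shell and behave differently. A variable changed inside a piped loop is changed only in the subshell's copy.

```shell
count=0

# The while loop is on the receiving end of a pipe, so in bash (default
# settings) it runs in a subshell with its own copy of "count".
printf 'a\nb\nc\n' | while read -r line; do
    count=$((count + 1))
done

# The parent shell's variable was never touched.
echo "count after the pipeline: $count"
```

Under that assumption the final value is still 0, even though the loop ran three times.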