Summary

- On iPhones that support Apple Intelligence, Siri can use ChatGPT to extract information from the screen.

- You can use Siri to get information from webpages, photos, and the live camera feed.

- True onscreen awareness will bring more features, including actions that work across apps, but the current ChatGPT method is still highly useful.

Onscreen awareness is an upcoming feature that will let you ask Siri for information about what's currently displayed on your iPhone screen. However, if your iPhone supports Apple Intelligence, you can already do much of that today.

What Is Onscreen Awareness for Siri?

When Apple showcased what would be featured in iOS 18, one of the upgrades announced for Siri was the addition of onscreen awareness. According to Apple, Siri will be able to perform actions based on the information that’s currently displayed on the screen of your iPhone.

The example Apple gave was that if a friend sent you a message with his new address, you could say to Siri "Add this address to his contact card," and Siri would extract the address from the screen and add it to the relevant contact.

There are two parts to this process. One is Siri's ability to perform actions across multiple apps, such as taking text from Messages and passing it to Contacts. This isn't possible yet and is expected to arrive next year as part of iOS 18.4.

The other part is Siri's ability to access the information currently displayed on your screen. This feature is also expected to arrive in iOS 18.4, but if your iPhone supports Apple Intelligence, you can already use Siri to extract information from your screen.

How to Get Onscreen Awareness With Siri

To get information from your screen, you need an iPhone that supports Apple Intelligence. That's because Siri can't extract information from your screen by itself; it needs to turn to ChatGPT for help. Since iOS 18.2, ChatGPT has been integrated with Siri on iPhones that support Apple Intelligence, which currently means only the iPhone 15 Pro, iPhone 15 Pro Max, and iPhone 16 models.

If your iPhone is supported, all you need to do is include something in your Siri request that indicates you want information from the current screen. For example, you can say "Summarize the information on this screen" or "Describe what you can see on my iPhone screen." If you're struggling to get it to work, starting your request with "Ask ChatGPT" can help.

Siri should then ask whether you want to send a screenshot of your screen to ChatGPT. Tap "Send," and ChatGPT will analyze the image of your screen and generate a response to your request.

The process is even more impressive when you have an image displayed on your screen. In this case, you don’t even need to refer to your screen at all. If your iPhone is displaying a picture of an animal, for example, you can simply ask “What animal is this?” and Siri will ask if you want to send a screenshot to ChatGPT.

ChatGPT requests are processed in the cloud, so you should be wary of using this method if your iPhone screen is displaying any sensitive information.

Asking Siri for Information From a Webpage

This trick is especially useful with webpages, because ChatGPT can extract information not only from the part of the page that's visible on the screen but from the entire page, including text you'd have to scroll up or down to see.

If you need to get the contact information from a webpage, for example, the information doesn’t need to be displayed on your screen, as long as it’s somewhere on the page.

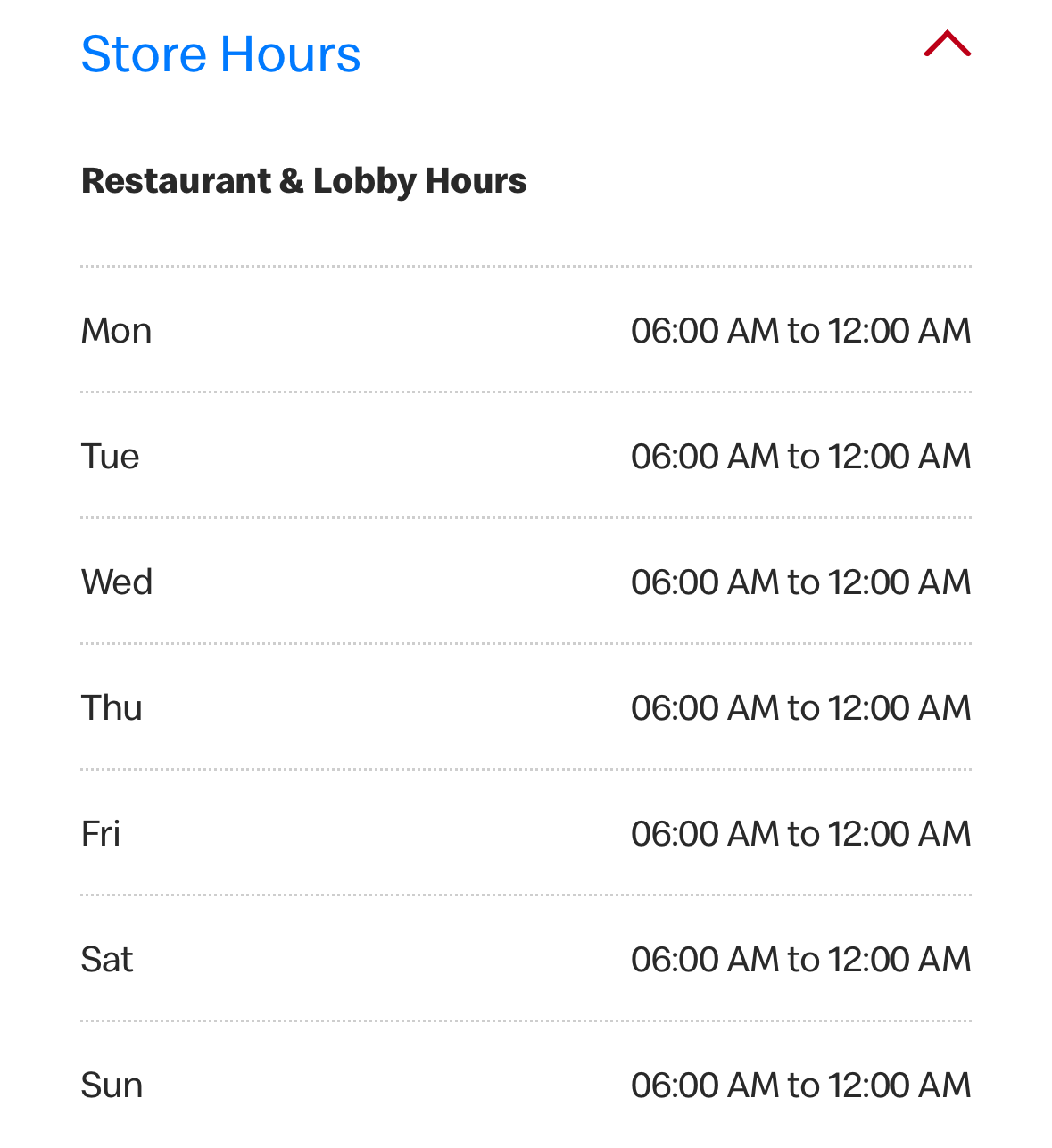

Open a webpage that you want to extract some information from. In this example, I want to find the opening hours for a restaurant. The drive-thru is open 24 hours, but the restaurant's own hours are further down the page and aren't visible without scrolling. With ChatGPT's help, Siri can still extract this information even though the hours aren't currently showing on the iPhone screen.

Activate Siri by pressing and holding the side button or saying “Siri” or “Hey Siri.” Ask something like “Get the opening hours for the business displayed on my screen.”

When Siri asks if you want to send the screenshot to ChatGPT, tap “Screenshot” and select “Full Content.” This will include all the information on the webpage, including content that is not visible on the screen.

Tap "Send," and the screenshot will be sent to ChatGPT. After a few moments, the response will appear, and Siri will read it aloud.

Asking Siri for Information About a Photo

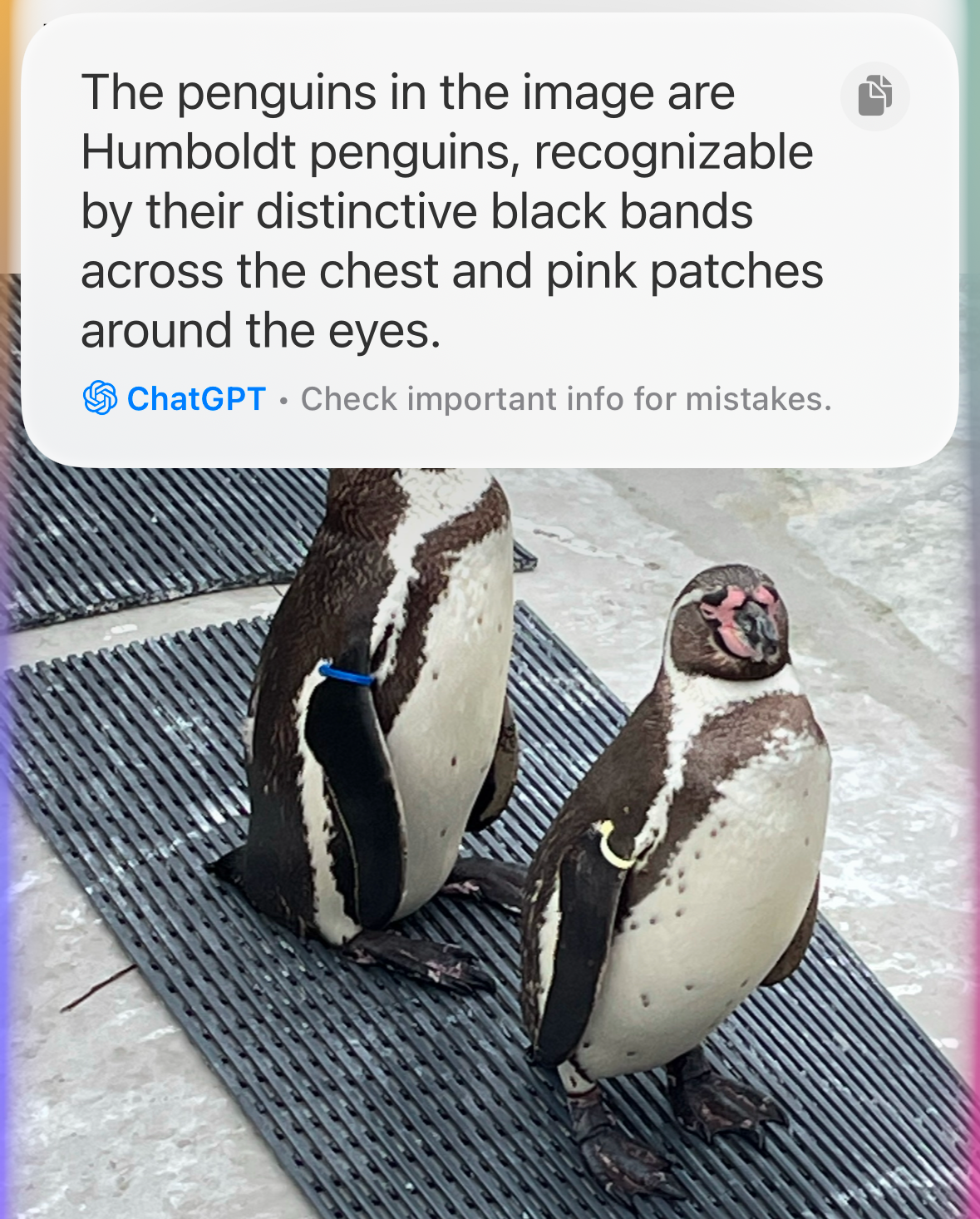

You can also get Siri to use ChatGPT to analyze photos that are displayed on your screen. For example, if you took a photo of some penguins at the zoo, you might want to know what species they are. Just open the photo and ask Siri, and ChatGPT will do the rest.

Open the photo that you want to analyze. Say something like “What species is this?” Siri will ask if you want to send the photo to ChatGPT. Tap “Send” to confirm.

ChatGPT will analyze the image and after a moment will give a response, which is read aloud by Siri.

If you use this method, your photo will be sent to ChatGPT’s servers. You should take this into consideration if you don’t want a photo to leave your device.

Getting Siri to Summarize Text

If you don't have time to read a long wall of text, you can use this method to get Siri to summarize it for you with the help of ChatGPT. For webpages, you can select the full content so that you get a summary of the entire page rather than just the visible portion, but this isn't possible with text files. However, you can reduce the font size considerably before ChatGPT can no longer read it, so you can fit a lot of text onto your iPhone screen.

Open a document or a webpage that you want to summarize. Activate Siri and say something like "Ask ChatGPT to summarize this text."

When the pop-up appears, if your text is from a webpage, tap “Screenshot” and select “Full Content.”

Tap “Send” and the screenshot is sent to ChatGPT. After a few moments, the response should appear.

Querying Live Images From Your Camera

If you own an iPhone 16 model, you can use the Camera Control button to get information about anything your camera can see, using a feature called visual intelligence. The iPhone 15 Pro and iPhone 15 Pro Max don't have Camera Control, but owners of those models can get a similar experience by pointing the Camera app at an object and asking Siri about it.

Open the Camera app and point at an object so that it’s displayed in the viewfinder. Activate Siri and ask something such as “What is this object?”

When the pop-up appears, tap "Send" to send the image to ChatGPT for analysis. After a moment, ChatGPT will respond, and Siri will read the response aloud.

The image from your camera will be sent to ChatGPT’s servers, so this method isn’t a good idea if your image contains anything sensitive.

Other Ways to Use Siri’s Onscreen Awareness

When "true" onscreen awareness arrives, expected in iOS 18.4, it should make the process even easier. But there is still a lot you can do right now using this trick. For example, you can bring up a webpage for a product and ask for reviews or suggestions for alternative products. You can ask about an acronym in the text you're reading, or get definitions of complex words and find out how to pronounce them.

You can even use this method to translate text from an image or a webpage, or to ask ChatGPT to check whether the article you're reading is accurate.

What Siri Can’t Do Until Real Onscreen Awareness Arrives

This trick already gives you a lot of the features of the upcoming onscreen awareness feature. However, there are some things that onscreen awareness will be able to do that this method can’t.

For example, it's not easy to take information from your screen and use it somewhere else. ChatGPT can extract the information, but you still need to copy it manually before you can paste it anywhere. You also can't act on other apps; you can't ask Siri to add an address to a contact the way you'll be able to with true onscreen awareness. Until that arrives, however, this is a really useful feature that can save you a lot of time and effort.

Onscreen awareness may not be here just yet, but you can use ChatGPT with Siri to get information from anything that’s currently displayed on your iPhone screen. There are some very useful ways to make use of this until true onscreen awareness arrives.