AI models will need more storage soon

When people talk about the “size” of an AI model, they’re referring to the number of “parameters” it contains. A parameter is one variable in the AI model that determines how it generates output, and any given AI model can have billions of these parameters.

Also referred to as model weights, these parameters have to be stored on disk, and when an AI model has billions of them, storage requirements can quickly balloon.

Here are a few current examples:

- Google Gemma 2 — 1.71GB (2 billion parameters)

- Meta Llama 3.1 — 4.92GB (8 billion parameters)

- Qwen 2.5 Coder — 8.52GB (14 billion parameters)

- Meta Llama 3.3 Instruct — 37.14GB (70 billion parameters)

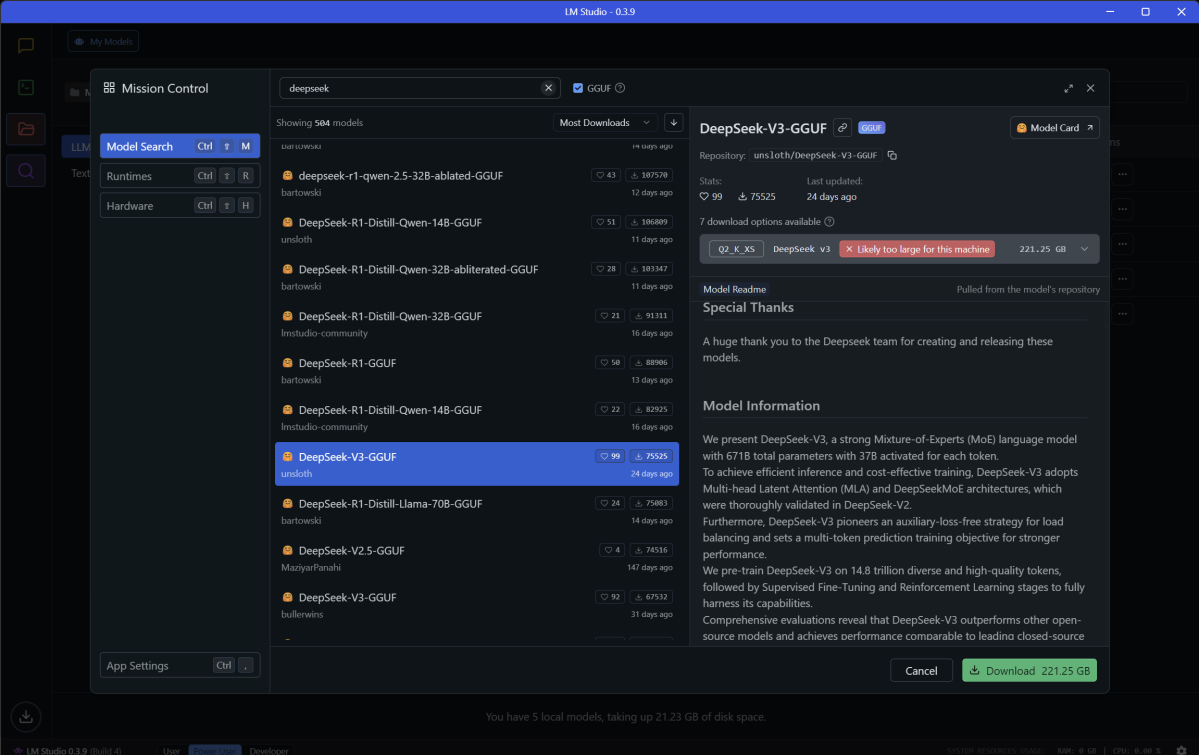

- DeepSeek V3 — 221.25GB (671 billion parameters)

As you can see, the storage space consumed by an LLM increases with the size of its parameters. The same is true for other types of generative AI models, too. For example, Stable Diffusion v1.4 takes up about 4GB while Stable Diffusion XL consumes over 13GB. OpenAI’s Whisper, a speech-to-text model, consumes between 1GB and 6GB depending on whether you choose a lightweight variant or the full-fat model.

Matt Smith / Foundry
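To make the relationship between parameter count and file size concrete, here is a minimal Python sketch that works out how many bits each listed model stores per parameter. The sizes and parameter counts are copied from the list above; the helper names (`bits_per_parameter`, `estimated_size_gb`) are my own, the parameter counts are the rounded figures quoted here, and I'm assuming 1GB means one billion bytes.

```python
# Figures copied from the list above:
# (name, size on disk in GB, parameter count in billions).
MODELS = [
    ("Google Gemma 2", 1.71, 2),
    ("Meta Llama 3.1", 4.92, 8),
    ("Qwen 2.5 Coder", 8.52, 14),
    ("Meta Llama 3.3 Instruct", 37.14, 70),
    ("DeepSeek-V3", 221.25, 671),
]


def bits_per_parameter(size_gb: float, params_billions: float) -> float:
    """Average bits stored per parameter (assumes 1GB = 10^9 bytes)."""
    # GB -> bytes and billions -> parameters both multiply by 10^9,
    # so the two factors cancel and only the bytes -> bits factor remains.
    return size_gb * 8 / params_billions


def estimated_size_gb(params_billions: float, bits: float) -> float:
    """Rough file size for a model stored at `bits` bits per parameter."""
    return params_billions * bits / 8


if __name__ == "__main__":
    for name, size_gb, params in MODELS:
        bpp = bits_per_parameter(size_gb, params)
        print(f"{name}: {bpp:.2f} bits per parameter")

    # Hypothetical comparison: the same 8-billion-parameter model at 16 bits.
    print(f"8B model at 16 bits: {estimated_size_gb(8, 16):.1f}GB")
```

Run against these figures, every model comes out below 7 bits per parameter. That suggests the downloads are stored at reduced precision rather than 16 bits per weight, though that's my inference from the arithmetic and not something the sizes alone confirm.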

But something else also increases with an AI model’s parameter count: its intelligence. Llama 3.1 405B, the biggest version of Meta’s AI model, scored 88.6 on the MMLU benchmark, meaning it correctly answered 88.6 percent of the benchmark’s questions. Meanwhile, the smaller Llama 3.1 70B model scored 86 and the even smaller Llama 3.1 8B scored 73.

To be clear, there are ways to improve the intelligence of AI models without increasing parameters. That was a key innovation of DeepSeek-V3, the Chinese AI model that emerged from nowhere in December 2024 with performance that rivaled OpenAI’s best.

Still, the very best AI models are massive. DeepSeek-V3 is presumed to be smaller than OpenAI’s models (I say “presumed” because OpenAI doesn’t disclose parameter counts for its cutting-edge models), and yet it’s still over 220GB. Most apps won’t require a local installation of DeepSeek-V3 to power AI features, but they might want to use mid-sized models like Qwen 2.5 Coder 14B (8.52GB) or Llama 3.3 70B (37.14GB).

And it gets worse for your hard drive, because different AI models have different strengths. Some are better for writing in natural language while others are optimized for logical reasoning or step-by-step planning. That means you can’t just get by with one locally installed AI model. You’ll likely want a few. Perhaps dozens.
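For a rough sense of how quickly that adds up, the sketch below totals the disk space for a set of locally installed models. The sizes come from the examples earlier in this article; the function names and the 512GB drive are hypothetical, chosen only for illustration.

```python
# Sizes in GB, copied from the examples earlier in this article.
MODEL_SIZES_GB = {
    "Google Gemma 2": 1.71,
    "Meta Llama 3.1": 4.92,
    "Qwen 2.5 Coder": 8.52,
    "Meta Llama 3.3 Instruct": 37.14,
    "DeepSeek-V3": 221.25,
}


def total_footprint_gb(installed: list[str]) -> float:
    """Combined disk space used by the named models."""
    return sum(MODEL_SIZES_GB[name] for name in installed)


def fraction_of_drive(installed: list[str], drive_gb: float) -> float:
    """Share of a drive (0.0 to 1.0) taken up by the named models."""
    return total_footprint_gb(installed) / drive_gb


if __name__ == "__main__":
    # Hypothetical setup: the four smaller models on a 512GB drive.
    installed = [
        "Google Gemma 2",
        "Meta Llama 3.1",
        "Qwen 2.5 Coder",
        "Meta Llama 3.3 Instruct",
    ]
    total = total_footprint_gb(installed)
    share = fraction_of_drive(installed, 512)
    print(f"{total:.2f}GB installed, {share:.1%} of a 512GB drive")
```

Just the four smaller models here come to roughly 52GB, and adding DeepSeek-V3 would push the total past 270GB.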