Summary

- Gemini’s Canvas adds an interactive workspace to the Gemini chatbot, making it easier to edit code snippets and preview them.

- Canvas helps improve writing by offering tone, length, or formatting suggestions and allows easy collaboration and export to Google Docs.

- Google introduces Audio Overviews, where AI hosts summarize content and present unique perspectives in audio format.

A lot of people have turned to chatbots to help them code. While you probably don’t want a whole program written by AI, it can help you work out specific snippets so your code is more efficient and functional. If you’re going to do that, though, you might as well do it in Gemini’s Canvas, which makes it much easier to check whether that code actually works.

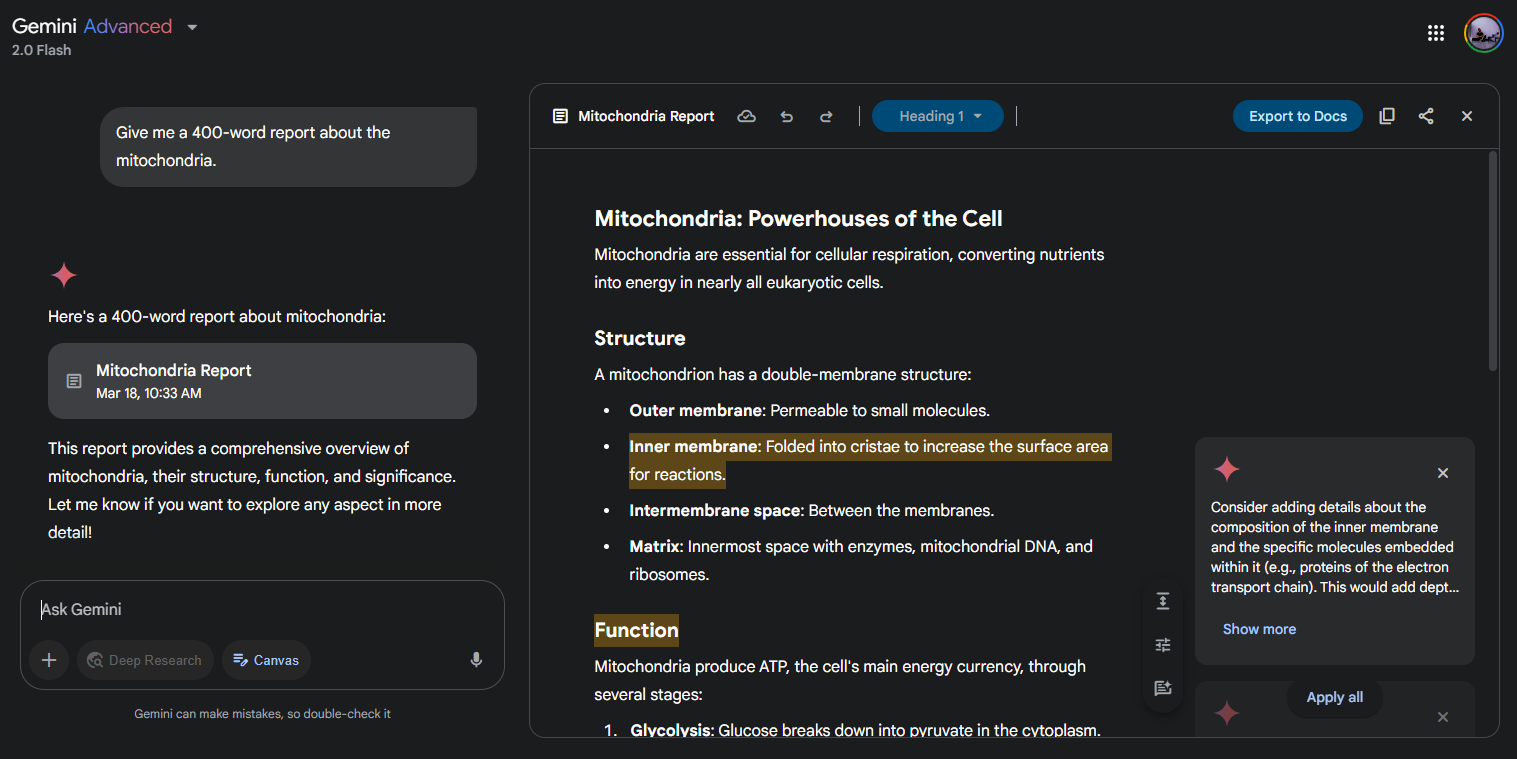

Google’s Gemini chatbot is getting a couple of really cool additions. The most prominent one is Canvas, a new interactive workspace integrated directly within Gemini. It’s an editable window that pops up right alongside the chat interface, where Gemini puts its text or code output and lets you tweak it. And it has tons of editing options.

If you’re going to use it for documents, you can either tell it to come up with an initial draft, or you can give Gemini what you have already written so it can help you tweak it. Gemini can analyze highlighted sections of text and offer suggestions to alter the tone (making it more concise, professional, or informal), adjust the length, or modify the formatting. Since it’s all being output to an editable window, fixing mistakes made by AI is as simple as just rewriting bits yourself (or highlighting those bits so the AI can fix them). And when you do have something that looks good, you can export that to Google Docs.

For code, Canvas provides the same kind of editable window it does for documents, plus a Preview tab that lets you check whether the code actually works. The code runs right in the Preview tab, so you can see how it will look and behave in a real-world context without having to deploy it or open an IDE. For instance, a user can ask Gemini to generate the HTML for an email subscription form and then instantly preview how it looks. Further changes, such as adding input fields or call-to-action buttons, can be requested and previewed in real time as well.
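To give a rough idea of the kind of snippet you might generate and preview, here is a minimal JavaScript sketch that builds the markup for an email subscription form. It is not Gemini’s actual output: the function names (`renderSubscriptionForm`, `escapeHtml`), the `/subscribe` action URL, and the field names are all assumptions made up for illustration.

```javascript
// Illustrative sketch only: names, fields, and the action URL are assumptions,
// not anything produced by Gemini or defined by Canvas.

// Escape characters that would otherwise be interpreted as HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the markup for a simple email subscription form as a string.
function renderSubscriptionForm({ action = "/subscribe", buttonLabel = "Subscribe" } = {}) {
  return [
    `<form action="${escapeHtml(action)}" method="post">`,
    `  <label for="email">Email address</label>`,
    `  <input type="email" id="email" name="email" required>`,
    `  <button type="submit">${escapeHtml(buttonLabel)}</button>`,
    `</form>`,
  ].join("\n");
}

// Print the markup; a follow-up request like "change the button text"
// would map to passing a different buttonLabel.
console.log(renderSubscriptionForm({ buttonLabel: "Sign me up" }));
```

Changing the call-to-action text or adding another field is a small edit to a snippet like this, which is the sort of tweak a live preview makes quick to verify.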

As tends to be the case with AI, it can sometimes output pretty broken code. For instance, I tried to push it to the limit by getting it to code things like simple HTML/JavaScript games, and while they looked pretty coherent, they were also very broken: it wrote a Super Mario Bros-style platformer where the character couldn’t jump. This is an edge case, of course, but the cool thing about Canvas is that you can test whether parts of your code are broken and promptly fix them if necessary, either by debugging them yourself or by highlighting specific code sections and asking the AI for help. In the early preview we used, Canvas could handle HTML, CSS, JavaScript, and React, but Google tells us it will also be compatible with most of the code Gemini is capable of writing, including Python.
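For context on what a working jump involves, here is a minimal JavaScript sketch of platformer jump logic. It is not the code Gemini generated in our test; the constants and the function names (`createPlayer`, `jump`, `step`) are assumptions chosen for illustration. A bug in any one of these pieces, such as never clearing the on-ground flag or never applying the upward velocity, is enough to leave a character stuck on the floor.

```javascript
// Illustrative sketch only: constants and names are assumptions.
const GRAVITY = 1;      // downward acceleration applied each frame
const JUMP_SPEED = 10;  // upward speed given to the player when a jump starts
const GROUND_Y = 0;     // height of the floor (y grows upward here)

function createPlayer() {
  return { y: GROUND_Y, vy: 0, onGround: true };
}

// Start a jump, but only when the player is standing on the ground.
function jump(player) {
  if (player.onGround) {
    player.vy = JUMP_SPEED;
    player.onGround = false;
  }
}

// Advance the simulation by one frame.
function step(player) {
  if (player.onGround) return;   // nothing to simulate while standing
  player.vy -= GRAVITY;          // gravity slows the ascent, then pulls down
  player.y += player.vy;
  if (player.y <= GROUND_Y) {    // landed: snap to the floor and stop
    player.y = GROUND_Y;
    player.vy = 0;
    player.onGround = true;
  }
}

// Usage: jump once and log the height each frame until landing.
const demo = createPlayer();
jump(demo);
while (!demo.onGround) {
  step(demo);
  console.log(demo.y);
}
```

In a real game, `jump` would be wired to a key press and `step` would run inside the render loop; being able to run that loop in a preview pane is what makes this kind of bug easy to spot.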

Canvas will be desktop-only at launch, but Google says a mobile experience should be out by the end of this month. It’s also only available for the Gemini 2.0 Flash model at this time, though Google says it should eventually come to all models, including the Thinking and Deep Research models. There’s no specific timeline for that, though.

In addition to Canvas, Google is also introducing “Audio Overviews,” a feature initially seen in NotebookLM and now available in Gemini. An Audio Overview is a virtual discussion between two AI hosts. These hosts analyze uploaded files such as documents, slides, or even Deep Research reports, and engage in conversation about the content. They summarize key points, draw connections between different topics, and offer unique perspectives.

It’s a bit of a silly concept, but a lot of people like listening to podcasts and learn pretty well from them, so this applies the same idea to studying in general. You can feed it class notes, research papers, lengthy email threads, or reports and receive a summarized audio version that you can listen to on the go. As long as it digests the content properly (again, always double-check everything an AI gives you, as it can sometimes hallucinate), it’s a pretty cool tool for studying.

Both Canvas and Audio Overviews are rolling out starting today for both free and paid users. Canvas is available in all languages, while Audio Overviews will, at first, only be available in English.

Source: Google