Adobe is bringing new features to its Premiere Pro video editor, including caption translations and the ability to search videos by describing them.

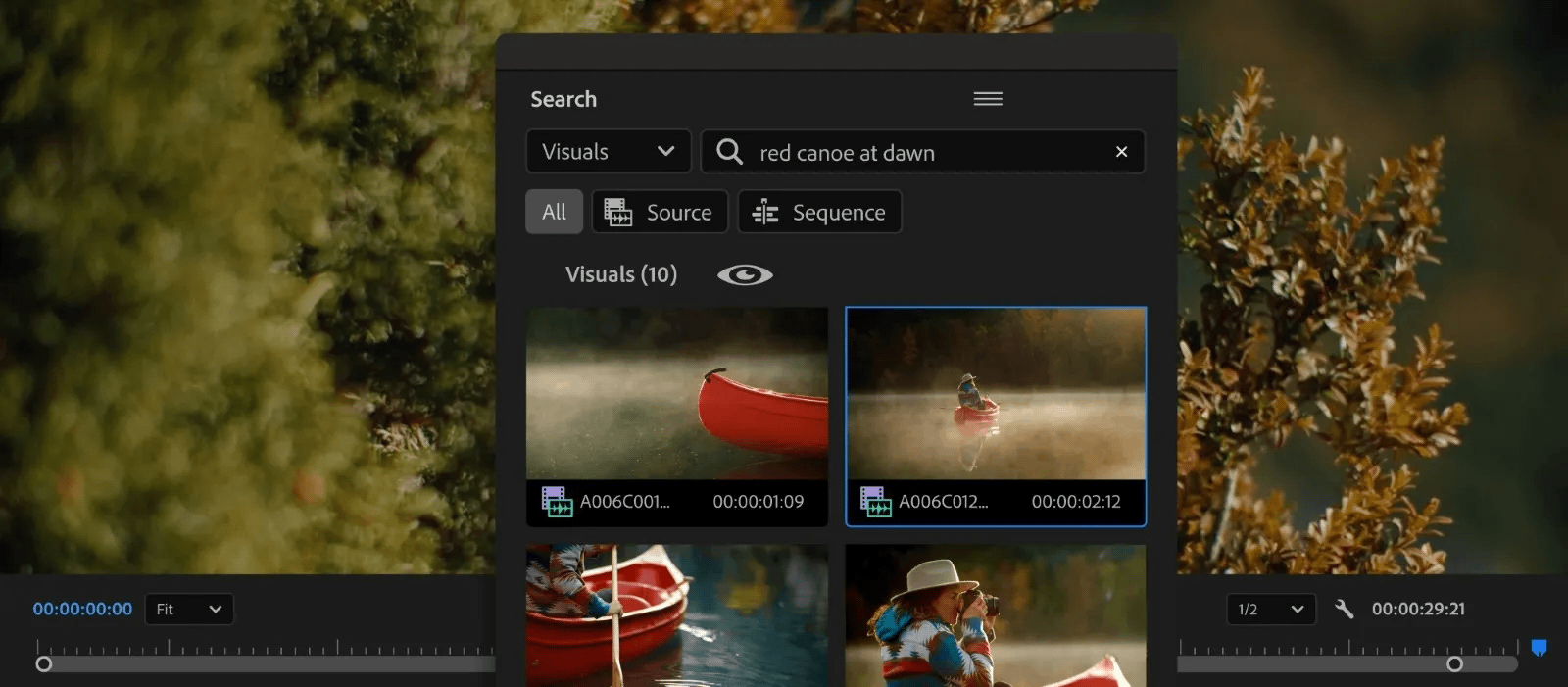

Premiere Pro can now recognize objects, locations, camera angles, and more in videos but doesn’t identify or label people (yet). You can simply describe the footage to find videos in your library via a new Search panel. For example, you can find matching items with simple search keywords like “California” or use natural language for more complex queries, such as “a person skating with a lens flare,” the company said. The feature will find matching sections of a clip or the relevant range.

If you edit many videos in Premiere Pro, this feature could save you time compared to manually reviewing videos to find the right footage for your project. It takes advantage of an AI-powered computer vision algorithm Adobe calls Media Intelligence. The company says Media Intelligence currently identifies only visuals, not sound. It also does not use optical character recognition, so you can’t search for specific text that appears in a frame.

The app can also find spoken words if the video file has a transcript attached. And because Media Intelligence can analyze metadata, too, you can find videos based on GPS locations, camera types, capture dates, and other information embedded in the metadata.

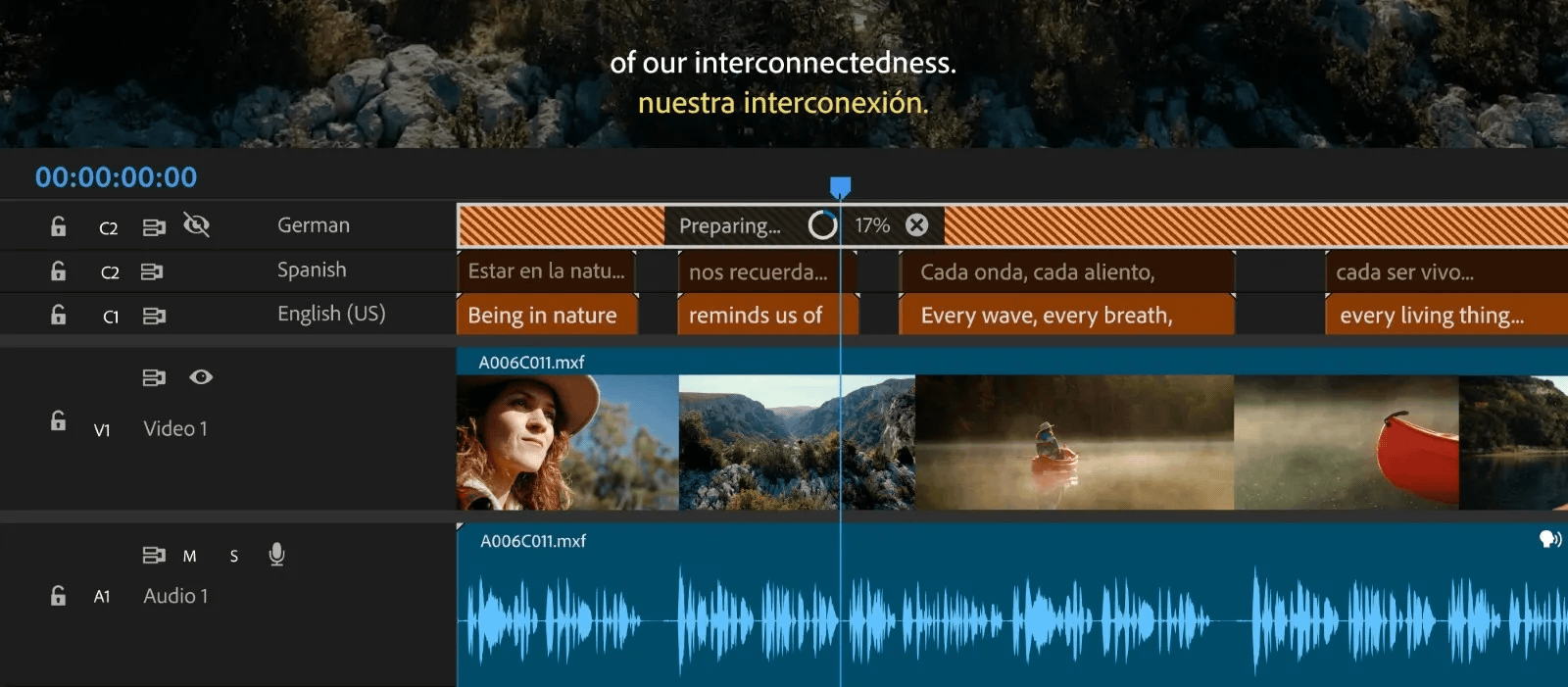

Adobe’s FAQ page says privacy is guaranteed because all processing for Media Intelligence is performed on-device. Nothing is uploaded to the cloud, and no internet connectivity is required for Media Intelligence to work. Your content is not used to train Adobe’s models.

Premiere Pro can also now automatically translate captions into 17 languages, with multiple caption tracks visible at the same time. Video is transcribed in real time using AI voice-to-text technology, and you can then edit the video directly from the transcript.

Adobe isn’t alone in bringing AI capabilities to video editing. Apple’s Final Cut Pro software recently gained the ability to automatically create closed captions using a large language model that transcribes spoken audio. Microsoft’s Clipchamp video editor in Windows has a similar feature, where you edit videos by using text transcripts instead of the usual timeline.

The new Search panel, caption translations, and other improvements are available in the Premiere Pro Beta starting today. To run the betas, you need an active Creative Cloud or Premiere Pro subscription. Creative Cloud subscribers who don’t wish to install the betas can get those features later when they are released to all users.

Adobe also announced improvements for After Effects and Frame.io, its cloud-based video collaboration platform, which now supports Canon’s C80 and C400 cameras. After Effects has a new caching system that should eliminate the need to pause playback to cache or render on slower computers. The app now supports HDR monitoring for PQ and HLG video via a calibrated reference monitor. You can try these improvements in the After Effects Beta.

Source: Adobe