Summary

- AMD has regained a portion of the desktop GPU market, but the company has to improve its GPUs on multiple fronts before we can call it a serious challenger to NVIDIA’s dominance.

- The most immediate areas for improvement are the adoption rates of FSR 4 and Radeon Anti-Lag 2, and the quality of FSR frame generation.

- To compete with NVIDIA in the long run, AMD also has to improve the path tracing and productivity performance of its future GPUs.

At one point during 2024, AMD GPUs made up only 10% of the GPU market. Thanks to the RX 9070 series, AMD could grab more than 20% of the GPU market in 2025, which hasn’t happened in years.

A lot of this comes down to NVIDIA’s fumbling of the ball, with disappointing performance uplifts in its RTX 50 Series, not to mention melting power cables, defective cards, and predictable availability problems. To truly challenge NVIDIA’s dominance, though, AMD must resolve the following five issues marring its GPUs.

1

FSR 4 Adoption Rate

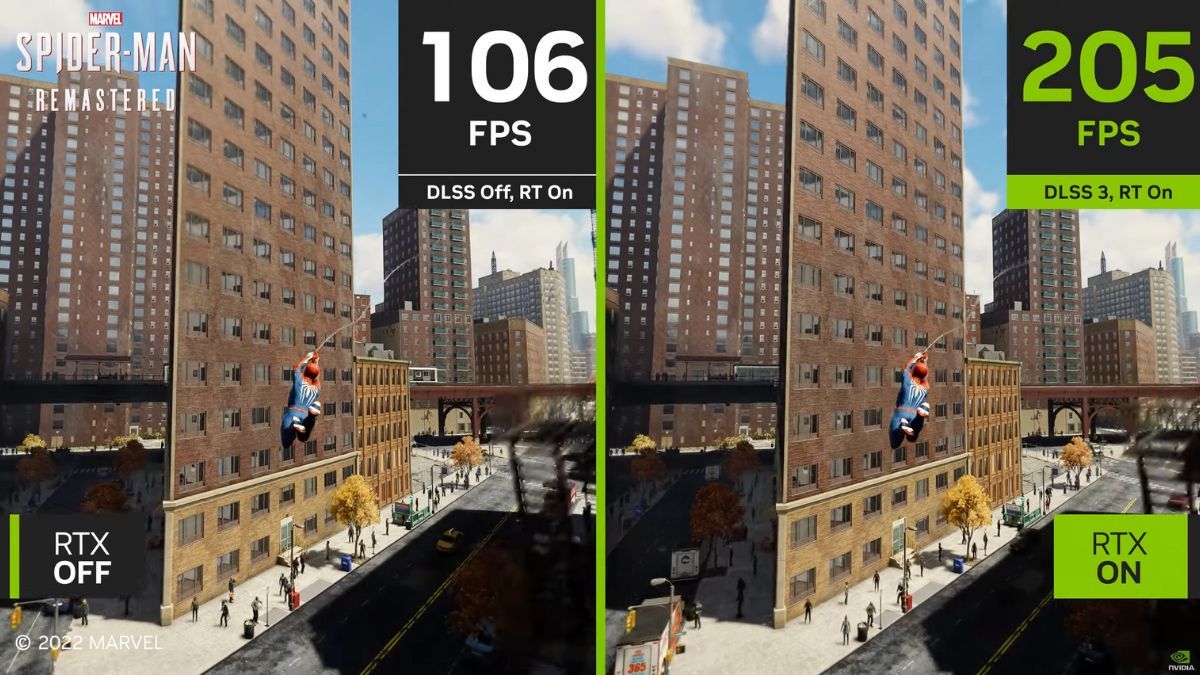

FidelityFX Super Resolution, or FSR for short, is AMD’s upscaling and frame-generation tech designed to challenge NVIDIA’s Deep Learning Super Sampling (DLSS), which has dominated the GPU market for years.

After testing it in Horizon Forbidden West and Call of Duty Black Ops 6, I can confidently say that FSR 4 looks great and is a massive improvement over FSR 3. The issue is that there are only 21 games at the moment that support FSR 4, and not all of them actually work with the latest version of the upscaler.

I tried enabling FSR 4 in Kingdom Come: Deliverance II, a perfect game for testing the new upscaler thanks to its lush forests, which look badly smeared with FSR 3. I couldn’t, because the game doesn’t work with FSR 4 despite being listed on AMD’s website. By contrast, more than 100 games support NVIDIA DLSS 4, including both its upscaling and multi-frame generation components.

The poor FSR 4 adoption rate is the most immediate issue AMD must resolve. Many PC gamers, including myself, bought an RX 9070 series GPU because of FSR 4, and if we can’t use it anywhere, what’s the point?

For example, Assassin’s Creed Shadows is one of the biggest games of the year, runs much better on AMD GPUs, and doesn’t support DLSS 4. Instead of working with Ubisoft to make it FSR 4-compatible, AMD did absolutely nothing: there is no in-game support and no FSR 4 support via AMD software.

If things continue this way and NVIDIA solves the pricing and availability issues affecting the RTX 50 series, I don’t see how AMD can become a long-term danger to NVIDIA’s dominance. Sure, I can use apps such as Optiscaler to inject FSR 4 into games with DLSS support, but more casual gamers who don’t want to deal with third-party apps will just return to NVIDIA once the time comes to upgrade their graphics card.

2

Path Tracing Performance

The RX 9070 XT and the RX 9070 are great cards, with their strongest point, aside from FSR 4, being ray tracing performance. The RX 9070 XT, for instance, matches the RTX 4070 Ti Super in ray tracing performance and beats the RX 7900 XTX by almost 15% on average. That’s a massive improvement in ray tracing performance compared to the RDNA 3 architecture.

However, one issue remains: path tracing performance. The RX 9070 XT is about 14% slower in ray tracing compared to its closest competitor, the RTX 5070 Ti. But after you turn on demanding path-tracing effects in games such as Cyberpunk 2077 and Alan Wake 2, the gap widens to more than 65%!
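Percentage gaps like these depend on which card is used as the baseline, and the figures above don't spell that out. The snippet below is a minimal sketch of the conversion, assuming that "X% slower" means the slower card delivers (100 − X)% of the faster card's frame rate. The function name is hypothetical and used only for illustration.

```python
def faster_from_slower(slower_pct: float) -> float:
    """If card A is `slower_pct` percent slower than card B,
    return how many percent faster card B is than card A."""
    ratio = 1.0 - slower_pct / 100.0  # A's frame rate as a fraction of B's
    return (1.0 / ratio - 1.0) * 100.0


# The two gaps quoted in the text, read as "RX 9070 XT is X% slower".
for pct in (14, 65):
    print(f"{pct}% slower -> the other card is {faster_from_slower(pct):.1f}% faster")
# 14% slower -> the other card is 16.3% faster
# 65% slower -> the other card is 185.7% faster
```

Read that way, a 65% deficit would mean the RTX 5070 Ti is nearly three times as fast with path tracing on. If the figure instead means the NVIDIA card is 65% faster, the gap is far smaller, so it matters which baseline a benchmark uses.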

Now, path tracing is still too demanding for most of the current crop of gaming GPUs, especially if you game at 1440p or higher. However, ray tracing is here to stay, and we will see more and more games embracing fully path-traced global illumination in the future. While this performance discrepancy isn’t very important at the moment, I hope that AMD is working on making its next GPU architecture, UDNA, much more competitive with NVIDIA GPUs when it comes to path tracing performance.

3

Frame Generation

DLSS frame generation is impressive if you’ve got a high enough base frame rate (the lower limit is about 40 frames per second). The tech keeps latency low enough that you can play with a controller without noticing the extra lag, and even with a mouse if the base frame rate is higher than 60FPS.

The latest form of the tech, DLSS multi-frame generation, is perfect for maxing out your monitor’s refresh rate in games that already run at 60FPS or higher, because both the latency penalty and the number of temporal artifacts are quite low at that base frame rate.
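To see why the base frame rate matters so much, it helps to do the arithmetic. The sketch below is a back-of-the-envelope illustration, not a model of how DLSS or FSR work internally. It assumes the displayed frame rate is simply the base rate times the generation multiplier, with overhead ignored, and that the game only responds to input on rendered frames. The function names are made up for this example.

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def displayed_fps(base_fps: float, multiplier: int) -> float:
    """Idealized output rate with frame generation.

    Assumption: generation overhead is ignored, so this is an upper bound.
    """
    return base_fps * multiplier


for base in (40, 60):
    for mult in (2, 4):
        shown = displayed_fps(base, mult)
        print(f"base {base} FPS x{mult}: up to {shown:.0f} FPS shown, "
              f"one rendered frame every {frame_time_ms(base):.1f} ms")
```

Under these assumptions, a 60FPS base with a 4x multiplier shows up to 240FPS, but a new rendered frame still arrives only every 16.7 ms. At a 40FPS base that interval grows to 25 ms, which helps explain why frame generation feels worse at low base frame rates.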

FSR 3 frame generation, on the other hand, is fine but far behind NVIDIA’s solution. Firstly, the latency penalty is higher. I’ve tried both DLSS and FSR frame generation in Ghost of Tsushima, and the latter felt “floatier” despite the base frame rate being about 55FPS in both cases.

Another issue is the number of temporal artifacts when the base frame rate is lower than about 40FPS. While you can notice these artifacts with either frame-gen technology, they are more noticeable with AMD’s FSR frame generation.

Personally, I’m not really interested in frame generation. The only scenario where I use it is when I run demanding games locally on my ROG Ally, where Lossless Scaling does the job commendably. But, as with path tracing, frame generation is here to stay, so AMD ought to figure out how to bring its frame gen tech to parity with NVIDIA sooner rather than later.

4

Anti-Lag Technology

The main reason DLSS frame generation feels less floaty than FSR frame generation is NVIDIA Reflex. In-game support for the latency reduction technology is a prerequisite for implementing DLSS multi-frame gen, a smart move by NVIDIA.

NVIDIA Reflex not only noticeably reduces latency in games but also makes DLSS frame gen, especially multi-frame generation, more palatable to gamers sensitive to high input latency.

On the other side, we’ve got AMD and its Radeon Anti-Lag technology, which is available via AMD software and doesn’t do as good a job of reducing latency as NVIDIA Reflex. AMD tried to improve the tech, but AMD Anti-Lag+ crashed and burned spectacularly when it triggered anti-cheat software in many games, with some AMD GPU owners even getting banned from their favorite multiplayer games.

The latest version of the tech, AMD Anti-Lag 2, is an in-game option similar to NVIDIA Reflex. But the list of supported games is tragically short. At the moment of writing, only three games include Anti-Lag 2, which is a pretty sad state of affairs. NVIDIA Reflex is, as you’ve gotten used to reading in this piece, available in hundreds of games.

During the last couple of years or so, I have gotten so used to NVIDIA Reflex, due to it being present in so many big-budget games, that enabling Reflex was one of the first things I did when launching a game for the first time. Sadly, I cannot do that with AMD Anti-Lag 2, which is a shame.

If AMD wants to challenge NVIDIA’s gaming GPU domination, it should start working with game developers to increase FSR 4, FSR frame generation, and Anti-Lag 2 adoption. Otherwise, this year’s uptick in its GPU market share won’t develop into a long-term trend. AMD will need to do this in close cooperation with developers to avoid a repeat of the anti-cheat problems that plagued Anti-Lag+.

5

Productivity Performance

Thanks to the CUDA API, NVIDIA GPUs are a far better choice for anyone who uses a GPU for both gaming and work. CUDA allows NVIDIA to maintain its domination in the data center GPU market, but the technology is also important for NVIDIA’s gaming GPUs, which leave AMD GPUs in the dust regarding productivity performance.

If you’re a professional who also plays games, chances are you’re using an NVIDIA GPU or multiple GPUs. They’re much better for virtually every single productivity workload, from AI inference to GPU rendering to video editing. The only proper challenger to NVIDIA here is Apple, with AMD too far behind in this category to even be considered a competitor.

Now, NVIDIA didn’t find success overnight. The first version of CUDA debuted in 2007, and it only got better over the next 18 years. If AMD gaming GPUs want to challenge NVIDIA in productivity performance, AMD will have to wage a long-term war.

The upcoming UDNA GPU architecture is a step in the right direction. UDNA amalgamates the best parts of AMD’s gaming-focused RDNA and compute-focused CDNA architectures into one unified GPU microarchitecture. It could be the start of AMD’s return to its glory days of the early and mid-2010s, when the company held almost 40% of the desktop GPU market.

RDNA 4 GPUs might make a visible dent in NVIDIA’s gaming GPU market share, but AMD has to cover a lot of ground if it wants to transform into a proper challenger. The areas that ought to see the most immediate improvement are FSR 4 and AMD Anti-Lag 2 adoption rate, and the quality of FSR frame generation. Path-tracing performance improvements can wait for UDNA. However, to become a long-term danger for NVIDIA, AMD also has to improve the productivity performance of its gaming GPUs.