An Apple research paper suggests that humanoid robots can be trained more effectively with demonstrations from human instructors as well as robot demonstrators, as part of a new combined approach built on data the company calls “PH2D.”

On Wednesday, a week after the company revealed its Matrix3D and StreamBridge AI models, Apple published new research on robots and how to train them. The iPhone maker’s previous robotics efforts included the creation of a robotic lamp, among other things, but Apple’s latest study deals with humanoid robots specifically.

The research paper, titled “Humanoid Policy ~ Human Policy,” details the inadequacies of traditional robot-training methods and proposes a new solution that’s both scalable and cost-effective.

Rather than relying solely on robot demonstrators for humanoid robot training, a process the paper describes as “labor-intensive” and dependent on “expensive teleoperated data collection,” Apple’s study proposes a combined approach.

This involves using human instructors alongside robot demonstrators. The goal is to reduce training costs: Apple’s study explains that the company was able to produce training material for humanoid robots using modified consumer products.

Specifically, an Apple Vision Pro was modified to use only its lower-left camera for visual observation, while Apple’s ARKit was used to access 3D head and hand poses. The company also used a modified Meta Quest headset fitted with ZED Mini stereo cameras, which made it a low-cost option for collecting training data.

The modified headsets were used to record demonstrations for training humanoid robots’ hand manipulation. Human instructors were told to sit upright and perform actions with their hands, such as grasping and lifting specific objects or pouring liquids, while audible instructions were provided as the actions were recorded. The resulting footage was then slowed down so that it could be used for humanoid robot training.
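The article doesn't say exactly how the recordings were slowed down, but the basic idea of stretching a recorded motion in time can be illustrated with a short Python sketch. It assumes each frame of a demonstration is a list of pose values (for example, hand keypoint coordinates) and that "slowing down" means linear interpolation by an integer factor; the `slow_down` function and its `factor` parameter are hypothetical and are not Apple's code.

```python
from typing import List, Sequence

# Hypothetical representation: one frame is a flat list of pose values.
Pose = List[float]


def slow_down(trajectory: Sequence[Pose], factor: int) -> List[Pose]:
    """Stretch a recorded trajectory in time by an integer factor.

    Linear interpolation inserts (factor - 1) intermediate frames between
    each pair of recorded frames. Played back at the original frame rate,
    the motion takes roughly `factor` times as long.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    if len(trajectory) < 2:
        # Nothing to interpolate between; return a copy as-is.
        return [list(frame) for frame in trajectory]

    slowed: List[Pose] = []
    for start, end in zip(trajectory, trajectory[1:]):
        for step in range(factor):
            t = step / factor  # fraction of the way from start to end
            slowed.append([a + (b - a) * t for a, b in zip(start, end)])
    slowed.append(list(trajectory[-1]))  # keep the final recorded frame
    return slowed


# Usage: a three-frame, two-value demonstration slowed down four times.
demo = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
slowed = slow_down(demo, 4)
print(len(slowed))  # (3 - 1) * 4 + 1 = 9 frames
```

Linear interpolation is simply the most basic choice for this sketch; a real pipeline might resample orientations or use smoother interpolation instead.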

Human instructors used modified Apple Vision Pro headsets as part of the process. Image Credit: Apple

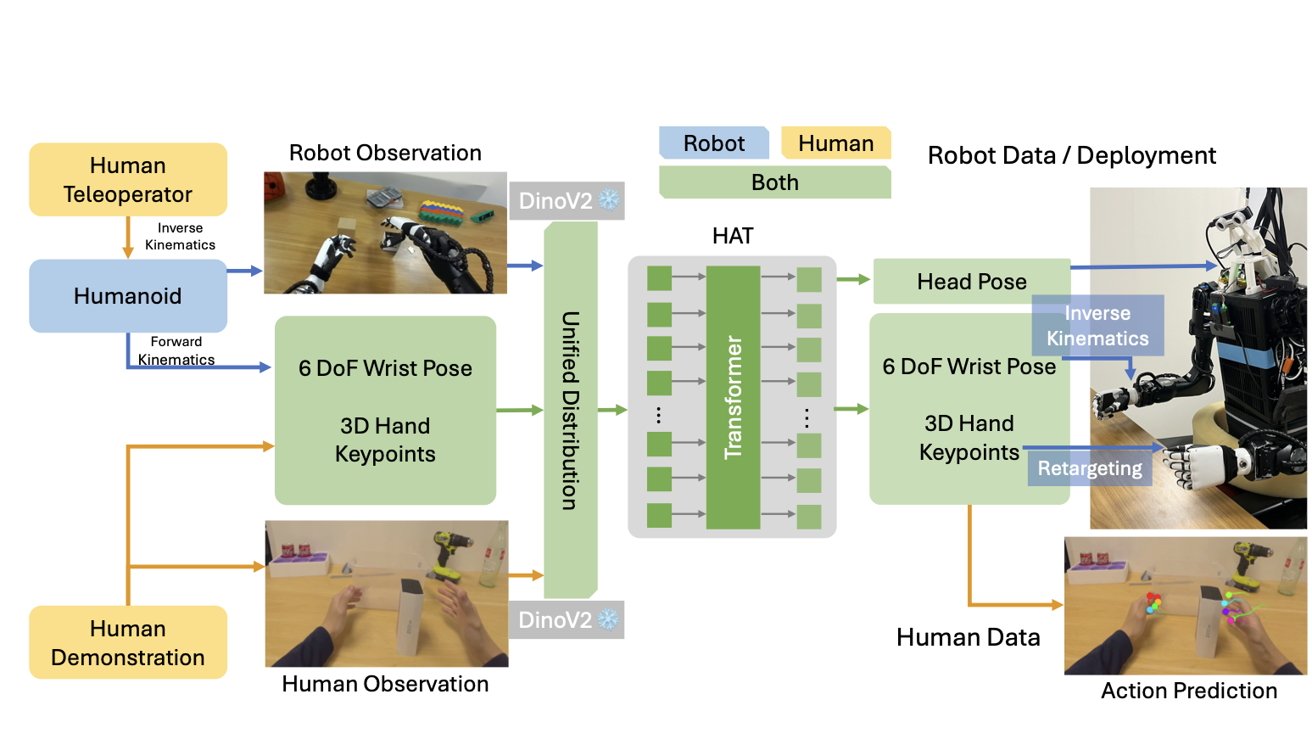

Apple created a model that can process training material from both human instructors and robotic demonstrators. The paper calls this training data “Physical Human-Humanoid Data,” or PH2D, and the model that processes it the “Human-humanoid Action Transformer,” or HAT.

The company’s researchers were able to unify human and robot demonstration sources into a “generalizable policy framework.” Apple’s approach leads to “improved generalization and robustness compared to the counterpart trained using only real-robot data,” the research paper says.

Apple’s HAT can process data created by robot demonstrators and human instructors. Image Credit: Apple

Apple’s study suggests that this combined training strategy has significant benefits. Besides being cost-effective, it produced robots that performed better than those trained exclusively with robot demonstrators. This improvement applies only to select tasks, however, such as vertical object grasping.

The company will likely implement this training method for future products. Though it has only demonstrated its robot-lamp prototype so far, Apple is said to be working on a mobile robot for end consumers that could perform chores and simple tasks.