A child safety group pushed Apple on why it abandoned its announced CSAM detection feature, and the company has given its most detailed explanation yet for backing off its plans.

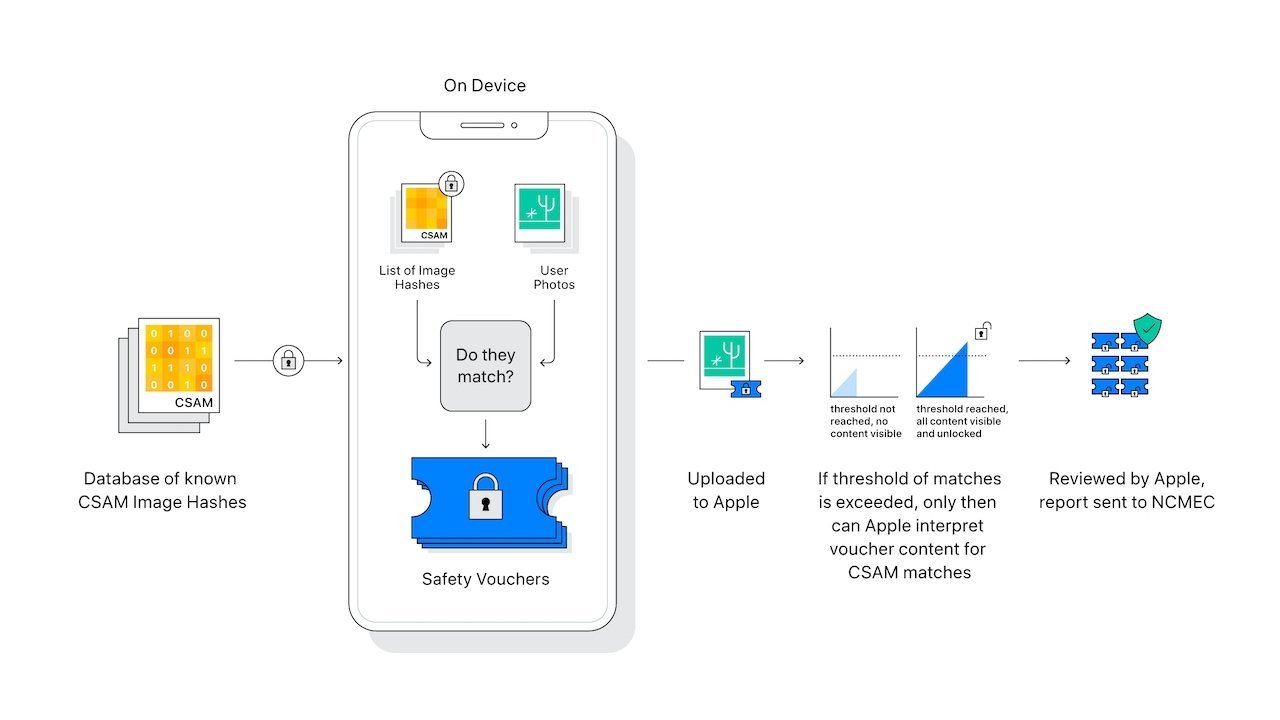

Child sexual abuse material (CSAM) is a severe, ongoing concern that Apple attempted to address with on-device and iCloud detection tools. These controversial tools were ultimately abandoned in December 2022, leaving more controversy in their wake.

A child safety group known as Heat Initiative told Apple it would organize a campaign against the choice to abandon CSAM detection, hoping to force the company to offer such tools. Apple responded in detail, and Wired, which was sent the response, detailed its contents in a report.

The response focuses on consumer safety and privacy as Apple’s reason to pivot to a feature set called Communication Safety. Trying to find a way to access information that is normally encrypted goes against Apple’s wider privacy and security stance — a position that continues to upset world powers.

“Child sexual abuse material is abhorrent and we are committed to breaking the chain of coercion and influence that makes children susceptible to it,” wrote Erik Neuenschwander, Apple’s director of user privacy and child safety.

“Scanning every user’s privately stored iCloud data would create new threat vectors for data thieves to find and exploit,” Neuenschwander continued. “It would also inject the potential for a slippery slope of unintended consequences. Scanning for one type of content, for instance, opens the door for bulk surveillance and could create a desire to search other encrypted messaging systems across content types.”

“We decided to not proceed with the proposal for a hybrid client-server approach to CSAM detection for iCloud Photos from a few years ago,” he finished. “We concluded it was not practically possible to implement without ultimately imperiling the security and privacy of our users.”

Neuenschwander was responding to a request by Sarah Gardner, leader of the Heat Initiative. Gardner was asking why Apple backed down on the on-device CSAM identification program.

“We firmly believe that the solution you unveiled not only positioned Apple as a global leader in user privacy but also promised to eradicate millions of child sexual abuse images and videos from iCloud,” Gardner wrote. “I am a part of a developing initiative involving concerned child safety experts and advocates who intend to engage with you and your company, Apple, on your continued delay in implementing critical technology.”

“Child sexual abuse is a difficult issue that no one wants to talk about, which is why it gets silenced and left behind,” added Gardner. “We are here to make sure that doesn’t happen.”

Rather than take an approach that would violate user trust and make Apple a middleman for processing reports, the company wants to help direct victims to resources and law enforcement. Developer APIs that apps like Discord can use will help educate users and direct them to report offenders.

Apple doesn’t “scan” user photos stored on device or in iCloud in any way. The Communication Safety feature can be enabled for children’s accounts but won’t notify parents when nudity is detected in chats.