I wanted to like Apple Intelligence, especially after Apple’s fake ad fooled me into believing that an AI-infused Siri was real. I gave Apple Intelligence more than one chance, enabling it and using it on all my devices every day to compare improvements between updates.

I concluded that Apple Intelligence, in its current form, lags behind the competition so much that it isn’t worth the storage hit and battery drain. As a matter of fact, I’m perfectly happy to live without Apple Intelligence and address my AI needs with apps instead. Let me explain.

Image Playground Is Utterly Useless

This was the feature I was most eager to take for a spin. I knew there were more robust AI image generation tools out there, like Grok, which is pretty good at it, but I still thought I’d like Image Playground. It turned out to be terrible beyond comprehension. I gave it a few chances to shine, but the output it gave me has been unimpressive.

There’s no visual variety because the built-in Animation, Illustration, and Sketch drawing styles don’t look much different from one another. When it comes to performance, I’m unforgiving; every second counts, but Image Playground leaves me waiting for what feels like an eternity to generate a small, low-resolution image from a simple prompt. That’s just for the first draft; each subsequent tweak means more waiting for stuff to render.

If Image Playground could generate an image within a second, and actually draw stuff beyond super simple requests, such as “hot dogs in a suitcase,” I might use it.

I also don’t like the non-standard interface of Image Playground because it doesn’t adhere to Apple’s own guidelines. It’s counter-intuitive and not very easy to use. Why do I have to swipe all the way to the right while waiting for each new image to populate?

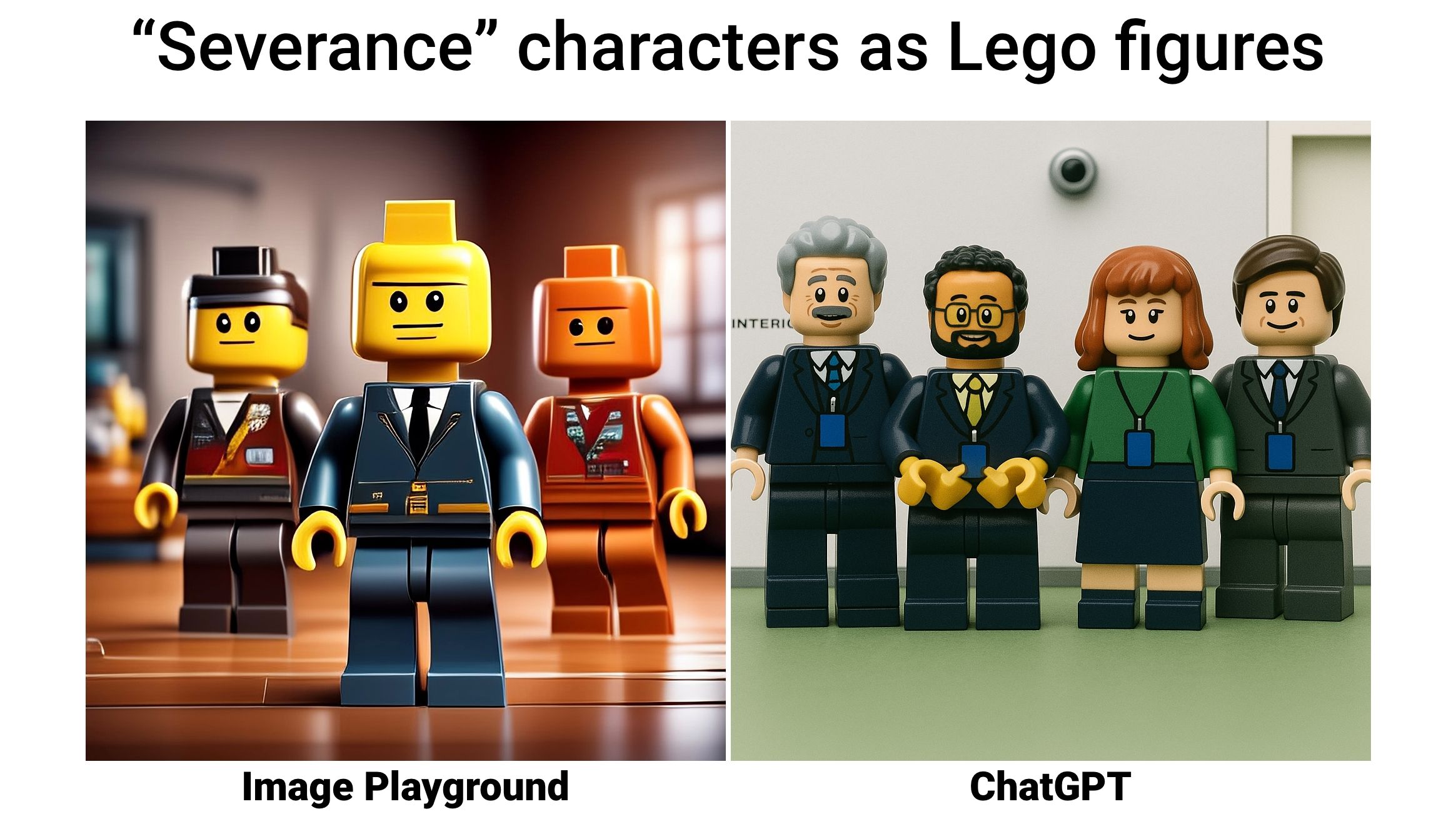

There are more capable and free AI image generators out there that produce realistic, high-quality images with unbelievable detail. My personal favorite is OpenAI’s GPT-4o image generation in ChatGPT, which can spit out state-of-the-art images with perfectly legible text, but your mileage may vary. Google Gemini and Meta AI also do free image generation. To me, Image Playground almost feels like training wheels for those tools.

John Gruber best highlighted how bad Image Playground is—try prompting both ChatGPT and Image Playground with the instruction “Create an image of the main characters from ‘Severance’ as Lego figures,” then compare the results for yourself.

Image Playground is my least favorite app in the Apple Intelligence suite. It’s fine if you’re into cartoonifying images of yourself, pets, and other human beings, but that has a short shelf life. Beyond that, Image Playground has proved utterly useless, especially for real work.

If nothing else, iPhone, iPad, and Mac owners can use the Image Playground app for things like profile photos instead of Memoji (remember Memoji?).

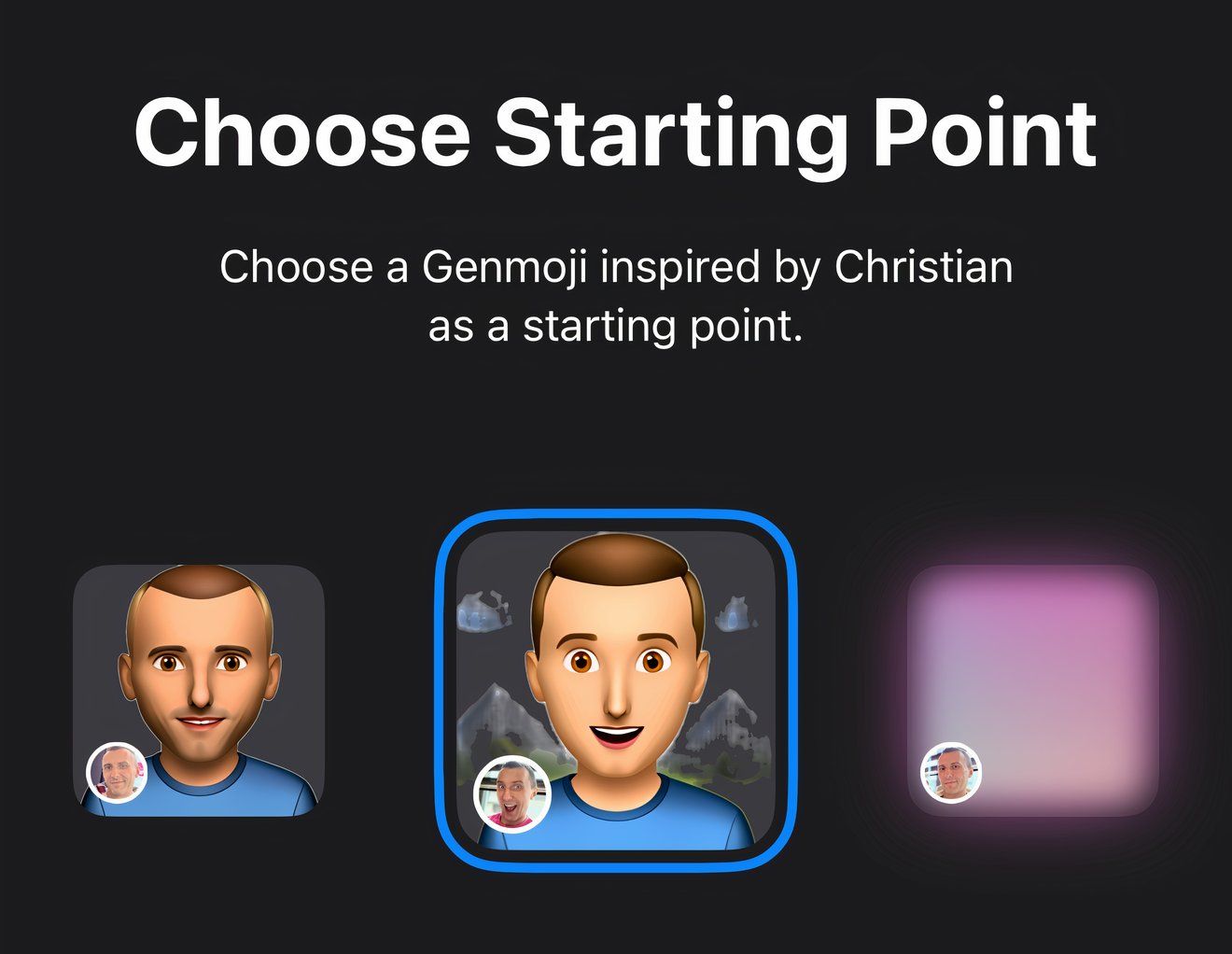

Genmoji and Image Wand Aren’t Much Better

The other two image generation features of Apple Intelligence—Genmoji and Image Wand—are no better than Image Playground because they all use the same underlying AI model.

Genmoji is available via the onscreen keyboard to create what Apple calls “custom emoji,” but that’s misleading. What this feature actually produces are regular images that can be applied to your iMessage chats as image attachments, stickers, or custom Tapback reactions.

Apple doesn’t make this clear, but Genmoji are not regular Unicode characters. Sending them to your non-Apple friends who use platforms like Android or Windows will either result in garbage or display your Genmoji as an image attachment.

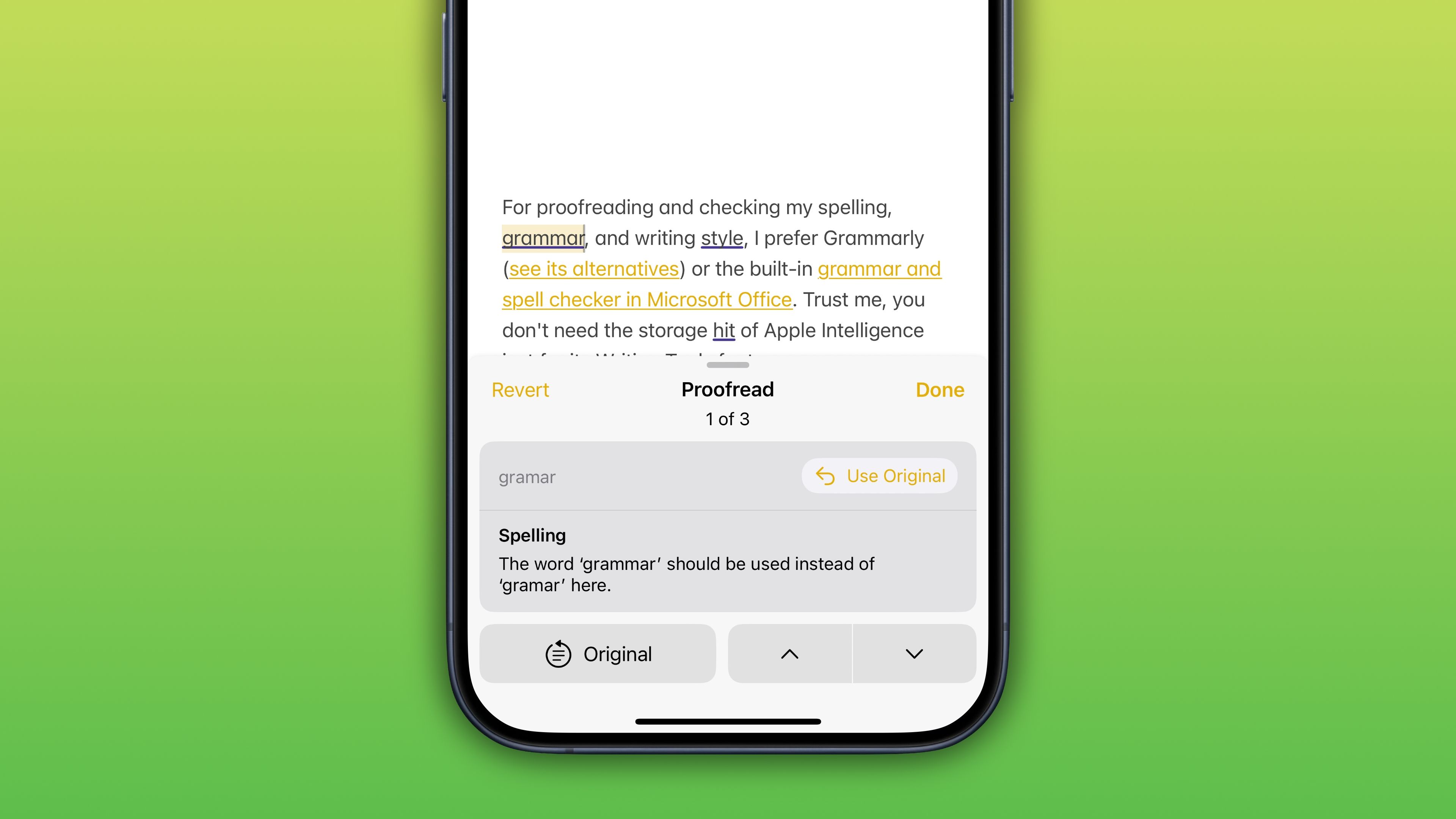

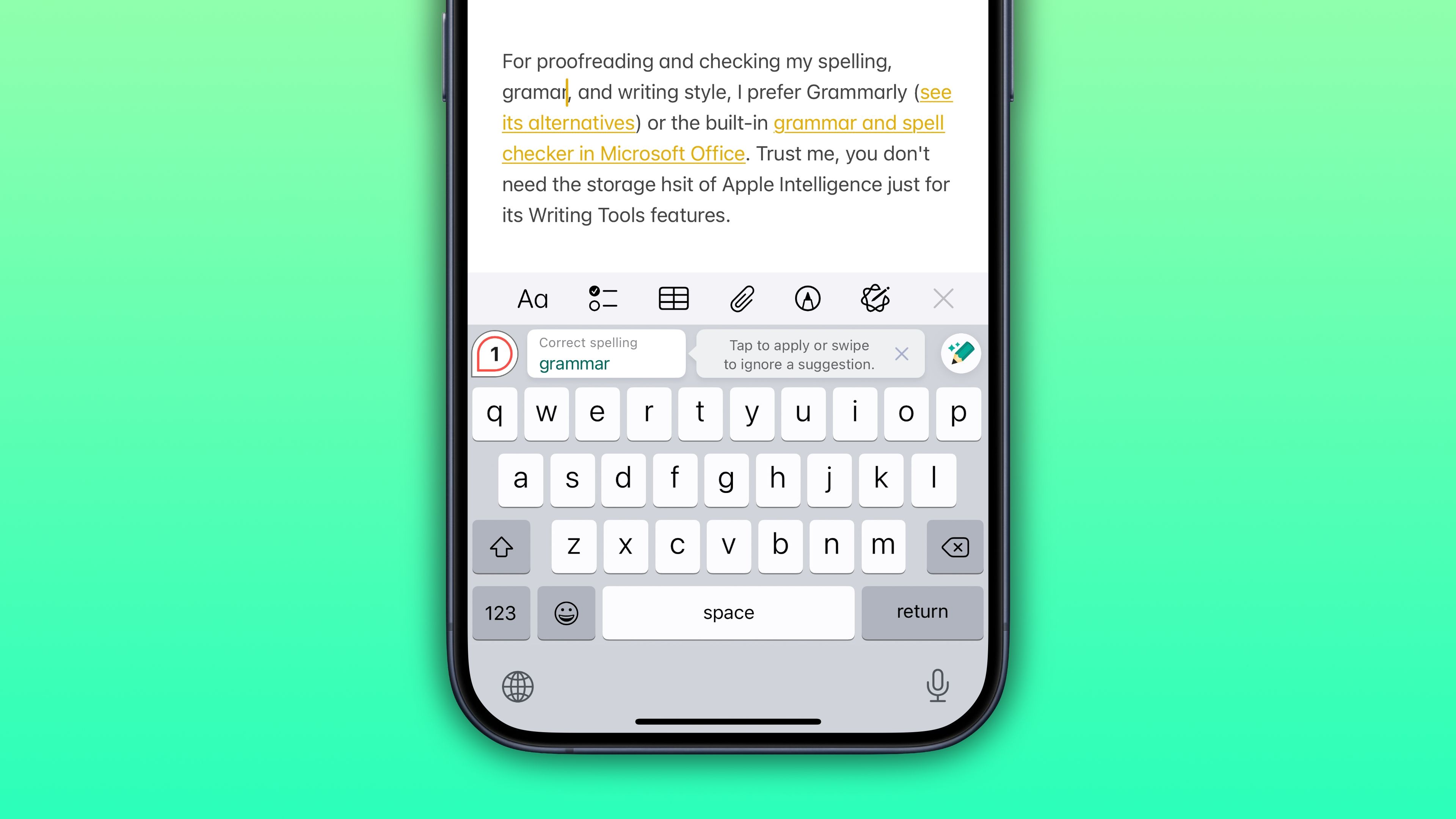

Writing Tools Are Helpful, but Not Worth the Overhead

The only aspect of Apple Intelligence that I’ve been using daily is Writing Tools, a set of features to proofread text, check the spelling and grammar, rewrite text in a different style, or create something new from scratch via ChatGPT. These are genuinely helpful features, but I don’t think they’re worth the overhead of Apple Intelligence.

Except for the compose feature, all Writing Tools capabilities use Apple’s own large language model, which runs directly on the device and taxes the battery. On the plus side, Writing Tools are integrated right into the onscreen keyboard, which makes for a winning combination of convenience and utility.

ChatGPT offers better proofreading and rewriting capabilities, but I’ve found myself firing up Writing Tools for such needs because integration trumps jumping in and out of apps to perform a quick grammar check. As for the compose feature, I don’t use it often because all it does is relay my requests to ChatGPT, which I already use.

Writing Tools work as advertised, but continue using ChatGPT if you already do. Apple Intelligence isn’t worth it just for the Writing Tools alone. If you don’t use ChatGPT, there are plenty of free AI text creation tools that can help you with your next writing assignment.

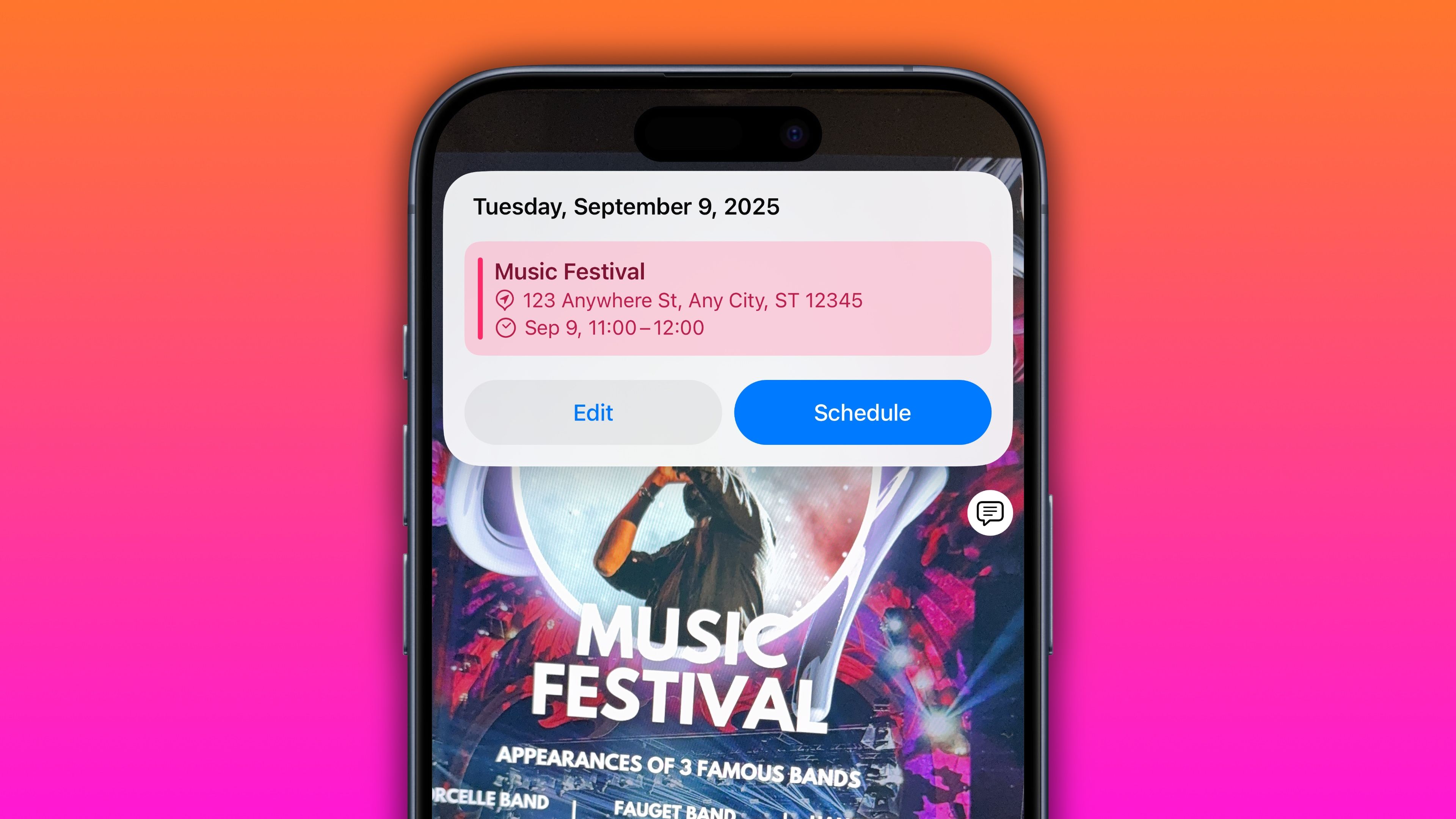

Visual Intelligence Cannot Touch Google Lens

The Visual Intelligence feature of Apple Intelligence relies on Google for web search and ChatGPT for image analysis, yet the interface feels half-finished. Visual Intelligence does some smart things, like detecting text in the viewfinder. With the help of iOS data detectors, I can call a phone number detected in the image or add an event from a concert flyer to my calendar.

But at the end of the day, it’s just a glorified Google Lens, which currently has, in my opinion, the best image recognition technology in the industry.

I Don’t Trust Notification Summaries

There’s a reason summarized notifications are turned off for news apps by default: they can misrepresent headlines. I’ve actually turned off AI summaries for all apps because Apple Intelligence tends to produce some weird summaries of my Slack messages while I’m away.

One time, we were chatting about shooting better images on an iPhone, and I had to go out to grab lunch. I forgot to screenshot it, but the way Apple Intelligence summarized Slack messages while I was away made it sound as if an editor shot another team member.

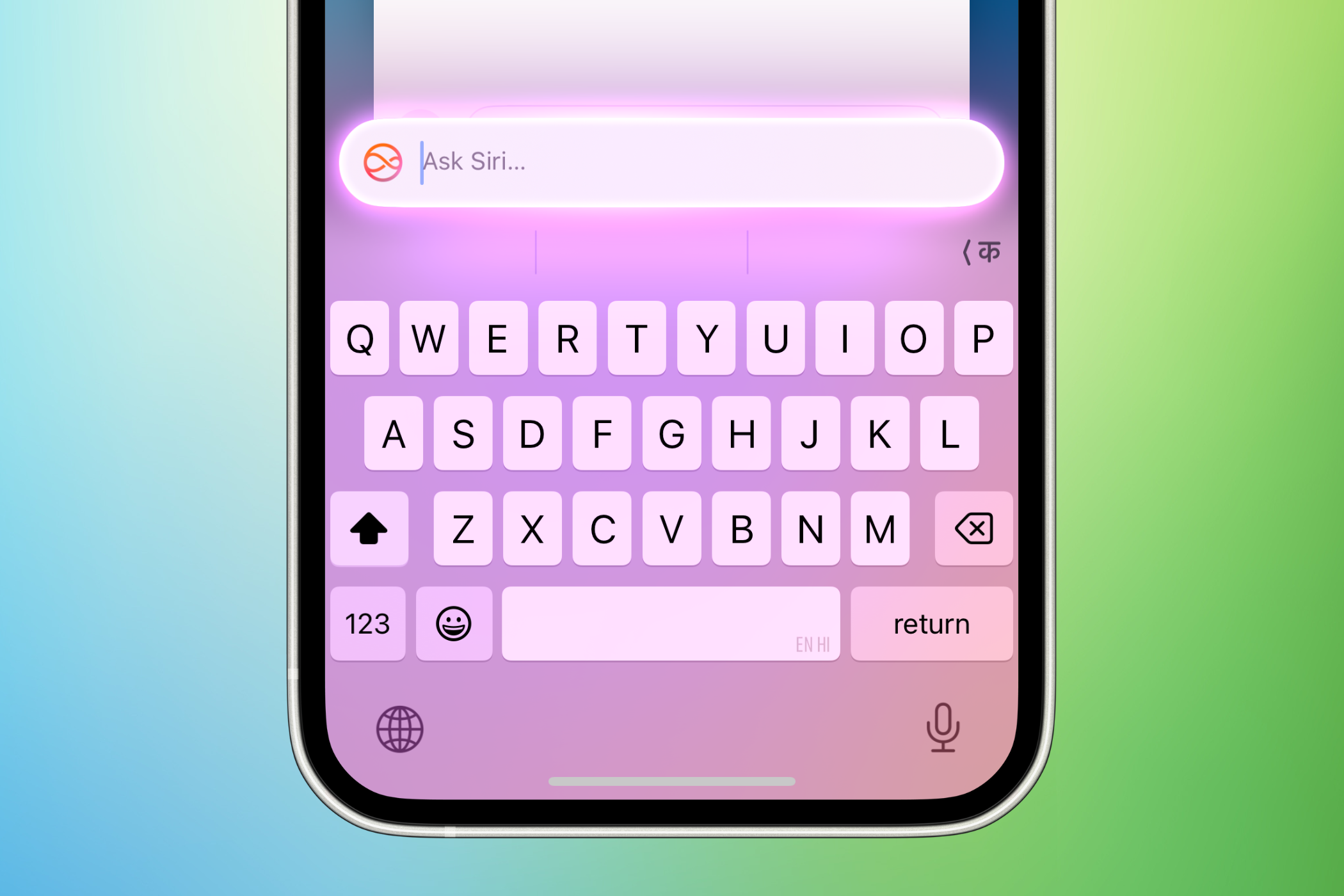

Siri Improvements Are Merely Small Wins

I don’t know if it’s just me, but I feel like Apple’s managed to make Apple Intelligence’s Siri worse than it’s ever been. It should understand me better, but I’m getting more “Sorry, I don’t understand” responses than before. It no longer seems to understand which room I’m in, and switches on the wrong lights all the time, ruining my smart home.

Right now, there are two Siri versions. One is the good ol’ dumb Siri we all know and love, which has become subjectively worse. The other is the new and supposedly smarter Siri, but all it currently does is offload complex requests to ChatGPT.

This raises the question: What’s the point of Apple Intelligence if I can simply use ChatGPT instead?

Siri was supposed to gain more advanced capabilities by now. But Apple fumbled Siri’s makeover, delaying an AI-infused Siri until iOS 19. A scoop by The Information reveals that the former Siri leadership was content with “small wins,” like faster response times and dropping “Hey” from the “Hey Siri” invocation phrase, which reportedly took two years to implement!

The new features that were supposed to arrive alongside the delayed Siri upgrade include personal context understanding (the “When’s Mom’s flight arriving?” feature), onscreen awareness (fulfilling requests based on what an app currently displays on the screen), and in-app actions (chaining together a series of actions across apps to perform complex multistep tasks).

Until I get to use the Siri features advertised in Apple’s pulled commercial, I can guarantee you that Apple’s assistant won’t get much attention from me; neither small wins nor the colorful Siri animation will be enough to change my mind.

Apple Intelligence Nice-to-Haves

The smallest features sometimes have the biggest impact. Apart from Writing Tools, the Apple Intelligence features that I’ve been using regularly are all nice-to-haves, not the headline-grabbing capabilities that Apple heavily advertised, like Image Playground or Genmoji.

- Clean Up in Photos: This Photos app feature removes distracting objects from images. Clean Up seamlessly fills in the background, but I wish it were faster. I also like how Clean Up obscures a person’s face with a pixelated effect when I brush over it.

- Memory Movie in Photos: This creates animated slideshows with music and attractive transitions based on your natural language prompt. You could create these slideshows before by hand. Now you can just describe the story you’d like to see, such as “Picnic in the woods with Joanna, happy vibes” or some such.

- Better search in Photos: Computer vision in the Photos app gets a boost thanks to natural language understanding provided by Apple Intelligence. As a result, I can pinpoint the exact photos I need with specific queries such as “Chris in a city wearing jeans.”

- Reduce Interruptions focus: A new Focus mode that reduces notification load by prioritizing important alerts that might need immediate attention, such as a text about an early pickup from daycare or a notification about carbon monoxide detected by your smart home sensor, while silencing everything else.

- Intelligent Breakthrough & Silencing focus toggle: Available in any focus mode, this setting ensures that any notifications you’ve specifically allowed or silenced will always be allowed or silenced. I turn on this setting if I don’t feel like personalizing a focus mode to my liking by allowing certain people and apps.

- Priority emails: This view shows time-sensitive emails in a Priority box at the top of the inbox (only in the Primary category or in list view). I keep it turned off because I leverage other strategies to triage my inbox.

- Smart replies in Messages and Mail: One-touch responses based on the contents of the original message, but they only work for simple replies. For replies that must include all the pertinent details, smart replies won’t do the trick.

- Summaries in Messages, Mail, and Safari: I don’t trust email summaries for the same reason that I distrust notification summaries: they’re not always accurate. I also don’t have any need for summaries of unread messages in my Messages conversations because my chat threads are never that long. The only exception is webpage summaries in Safari. Those I use all the time to boil a long article down to its key takeaways, so I can decide whether it’s worth reading.

I’ve Replaced Apple Intelligence With Apps

I’ve easily replaced the functionality that Apple Intelligence provides with third-party apps. Text summaries and rewrites are the only AI features that I find genuinely useful for work and everyday personal use, and in my experience ChatGPT does them better than any rival AI service out there.

Image Playground wouldn’t generate many things I asked for. That’s fine—I’ll continue using ChatGPT for AI image generation. I tried liking Genmoji, but it just doesn’t click for me. I also don’t need unhinged notification summaries compiled by Apple Intelligence.

As for Siri, it’s a joke at this point. Siri was always dumb as a rock, but Apple has somehow managed to make its smart assistant worse than it’s ever been. The AI-infused Siri we were promised in June 2024 has been delayed, and I’ll trust its capabilities when I see them in action. In the meantime, I’ll be using ChatGPT whenever I feel like conversing with a chatbot.

The only advantage Apple Intelligence has stems from being built into the iOS, iPadOS, and macOS operating systems, so you don’t have to download anything. In every other aspect, Apple lags behind the competition in the AI race. Aggressively pushing Apple Intelligence by quietly re-enabling it after a software update only makes Apple look weak.

The storage requirements and battery hit are no small concerns, and the sluggish performance and unimpressive output leave a lot to be desired, so I’ve turned Apple Intelligence off.

The whole Apple Intelligence thing is disappointing beyond belief. Apple has tarnished its reputation by releasing a subpar product while falsely advertising its features. I won’t be turning Apple Intelligence back on until it improves, and by a large margin.