OPINION: You can now train an iPhone and iPad to speak for you, in your own voice. The feature could literally give a voice to the voiceless. It would have eased heartache for my family.

Apple has revealed the iPhone and iPad can now learn to simulate the user’s voice after training the machine learning tech for just 15 minutes.

The Personal Voice speech accessibility feature is designed to let people keep speaking with loved ones, even if their ability to use their voice is diminished or at risk of being lost completely.

Apple says: “For users at risk of losing their ability to speak — such as those with a recent diagnosis of ALS (amyotrophic lateral sclerosis) or other conditions that can progressively impact speaking ability — Personal Voice is a simple and secure way to create a voice that sounds like them.

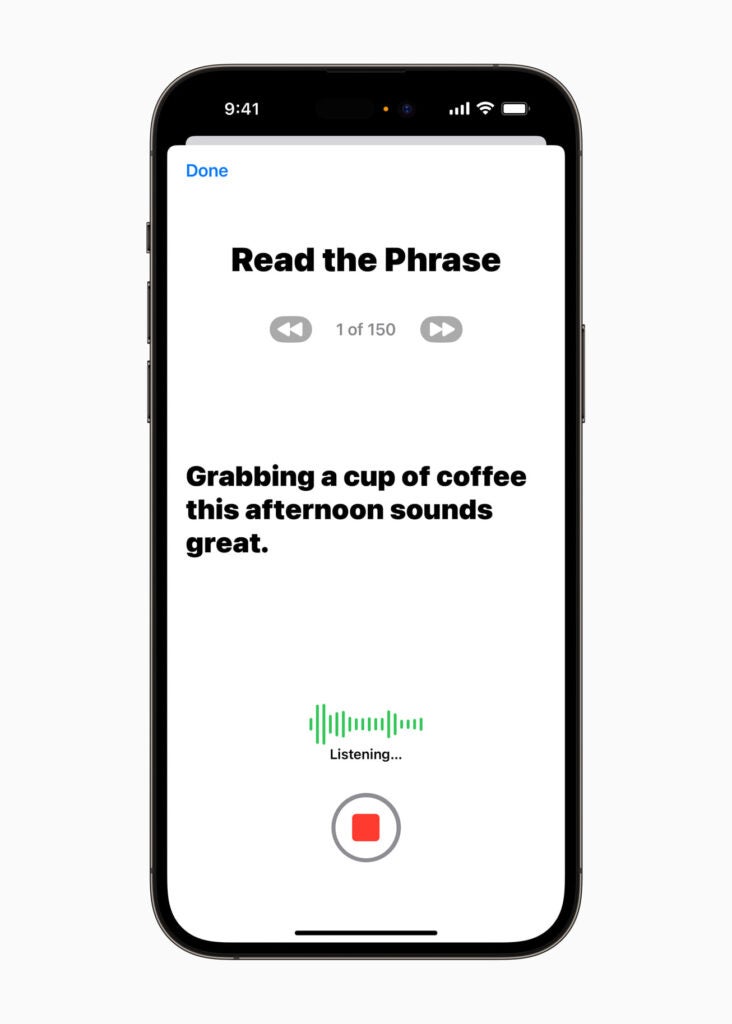

“Users can create a Personal Voice by reading along with a randomised set of text prompts to record 15 minutes of audio on iPhone or iPad. This speech accessibility feature uses on-device machine learning to keep users’ information private and secure, and integrates seamlessly with Live Speech so users can speak with their Personal Voice when connecting with loved ones.”

Hearing this news immediately brought my late father to mind.

He passed away in 2014 after a long battle with throat cancer that cost him his voice a few years beforehand. The tracheostomy button that allows some patients to continue to speak didn’t work. He became voiceless. We lipread, listened for the sounds of his breath, and got by.

Looking back over those memories is painful. For my dad, losing his voice meant far more than losing the ability to speak words and hold a conversation. It was his sense of self; it was his ability to be involved with a group. Often he felt bypassed.

He tried using an electrolarynx for a while. But he was a hard-working, hard-drinking working-class man who spent most of his free time in working-class pubs.

Somebody made fun of him for using it – as working class men tend to do with each other as a coping mechanism when showing real emotion is the alternative – and that was that. The device went in the drawer, never to come out.

At my wedding in 2014, he stood arm in arm with my best man while a speech he had written was read aloud. It was hugely moving, yet somewhat tragic too. All he wanted was to say a few words at his son’s wedding, and he was robbed of that.

I often think back on that time and wonder if I could have done more, especially with my background in tech. I look at a new feature like Personal Voice from Apple and think about how it could have helped.

In the run-up to surgery I could have had dad go through this process and create a Personal Voice profile for him, while I still could. He wasn’t the most tech-savvy (to be honest, he struggled with Teletext when looking for the horse racing), but I could have helped him. So could my sister.

It would have allowed him to still have a voice. A voice that sounds like him. And his agency. In some ways, his humanity. That wedding speech, as moving as it was, could have been delivered in his voice after all.

One of the things I find hardest about his passing to this day is that I struggle to hear the sound of his voice in my head. I mean I think I can, but is that really it?

Had a feature like this been around, he would have kept his voice, and I would have been able to keep it too.

The coverage of this feature has mainly been about the machine learning language advances that make this possible. But at its heart, the story is in the humanity. I should know.