Apple’s machine learning researchers have worked on myriad ways to improve Apple Intelligence and other generative AI systems, as its research papers accepted by a major AI conference demonstrate.

The creation of Apple Intelligence and other machine learning tools at Apple requires a lot of research. This is both for improving existing offerings and for future services that Apple doesn’t offer its users just yet.

While Apple has offered glimpses into this work in previous releases, a selection of papers accepted by the Thirteenth International Conference on Learning Representations gives more of a look into the work.

Enhancing computer vision, and computer visions

One of the key areas of machine learning research is computer vision. The ability to draw out information from an image can provide a system with a considerable advantage.

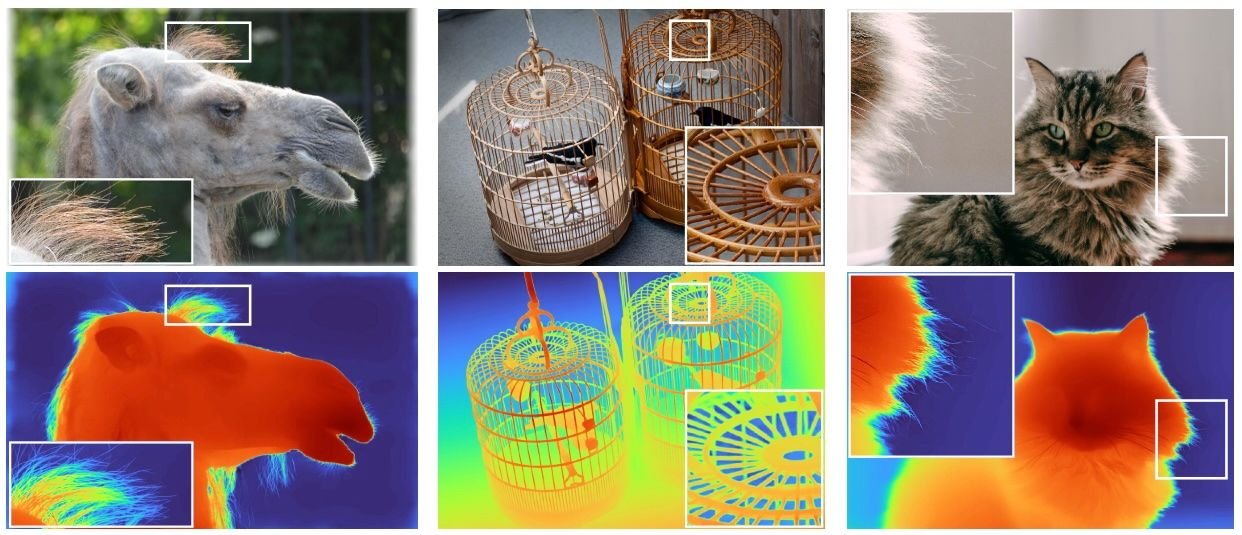

In the presentation of “Depth Pro: Sharp Monocular Metric Depth in Less Than a Second,” Apple explains how the depth information of a single image can be determined. This includes creating high-resolution depth maps, taking into account fine details such as hair or fluff.

The system also manages to do so without the need for metadata, such as what kind of camera was used.

While computer vision is one important area, another is the other direction, generating images. In two papers for text-to-image generation and control, Apple offers a technique for controlling the output, while the other handles a new technique for diffusion-based text-to-image generations.

For the former, Apple describes it as using Activation Transport, a generational framework for steering activations using an optimal transport theory. This uses a generalization of previous activation-steering works.

DART (Denoising Autogregressive Transformer for Scalable Text-to-Image Generation) is an argument that current denoising of a Markovian process to add noise to a process is inefficient in training. Instead, Apple offers a transformer-based model to unify autogregressive and diffusion within a non-Markovian framework.

The upshot is a system that is more effective while still flexible, and capable of training both text and image data within a unified model.

Decisions and reasoning

With the potential for Apple Intelligence to potentially activate apps and perform tasks on the behalf of the user, researchers have to work on systems to perform such tasks with a level of certainty.

The presentation “On the Modeling Capabilities of Large Language Models for Sequential Decision Making,” Apple researchers will propose an LLM’s general knowledge could be utilized for policy learning for reinforcement agents.

The results propose that it could be viable to use generalist foundation models and automatic annotations instead of “costly human-designed reward functions.” This could mean a more effective training system could be used to create the models in the future.

When it comes to a complex task, a model will have to work through a reasoning step, but each can be an opportunity for errors to creep in and cause problems. While current research uses an external verifier that weights multiple solutions, it is also affected by sampling inefficiencies and requires a lot of supervision.

“Step-by-Step Reasoning for Math Problems via Twisted Sequential Monte Carlo” offers a way that refines the sampling effort so that it focuses more on promising solutions. By estimating the expected future rewards for partial solutions, the models can be trained in ways that also rely on less human interference.

To create safe AI agents using LLMs, there is a need for models to follow constraints and guidelines provided by users. However, LLMs often fail to follow even basic commands.

The presentation of “Do LLMs Know Internally When The Follow Instructions?” will explore if an LLM encodes information in its representation that in theory correlates with the successful following of instructions. This includes creating predictions of whether responses comply with instructions, and generalizes its effectiveness over potential similar tasks.

With the distinct possibility for an LLM to hallucinate, referring to the creation of incorrect results but delivered as fact, there’s also a need for LLMs to estimate its own certainty. Another presentation for “Do LLMs Estimate Uncertainty Well in Instruction Following?” will evaluate the capability of LLMs to determine how well they can estimate their uncertainty.

Apple believes current estimation methods run poorly, and therefore change is needed.

Apple at the conference

Apple researchers will be presenting submitted research at the ICLR, running from April 24 to April 28 in Singapore, across a variety of topics. Apple is also hosting a booth at location C03, as well as sponsoring affinity group-hosted events during the conference.

At its booth, attendees can try out Depth Zero, the monocular depth estimation system. They can also look at FastVLM, a family of mobile-friendly vision language models.