Key Takeaways

- Ensure only a single instance of your script is running using pgrep, lsof, or flock to prevent concurrency issues.

- Easily implement checks to self-terminate a script if other running instances are detected.

- By leveraging the exec and env commands, the flock command can achieve all of this with one line of code.

Some Linux scripts carry such an execution overhead that running several instances at once needs to be prevented. Thankfully, there are several ways you can achieve this in your own Bash scripts.

Sometimes Once Is Enough

Some scripts shouldn’t be launched if a previous instance of that script is still running. If your script consumes excessive CPU time and RAM, uses a lot of network bandwidth, or causes disk thrashing, limiting its execution to one instance at a time is common sense.

But it’s not just resource hogs that need to run in isolation. If your script modifies files, there can be a conflict between two (or more) instances of the script as they fight over access to the files. Updates might be lost, or the file might be corrupted.

One technique to avoid these problem scenarios is to have the script check that there are no other versions of itself running. If it detects any other running copies, the script self-terminates.

Another technique is to engineer the script in such a way that it locks itself down when it is launched, preventing any other copies from running.

We’re going to look at two examples of the first technique, and then we’ll look at one way to do the second.

Using pgrep To Prevent Concurrency

The pgrep command searches through the processes that are running on a Linux computer, and returns the process ID of processes that match the search pattern.

I’ve got a script called loop.sh. It contains a for loop that prints the iteration of the loop, then sleeps for a second. It does this ten times.

#!/bin/bash

for (( i=1; i<=10; i+=1 ))

do

echo "Loop:" $i

sleep 1

done

exit 0

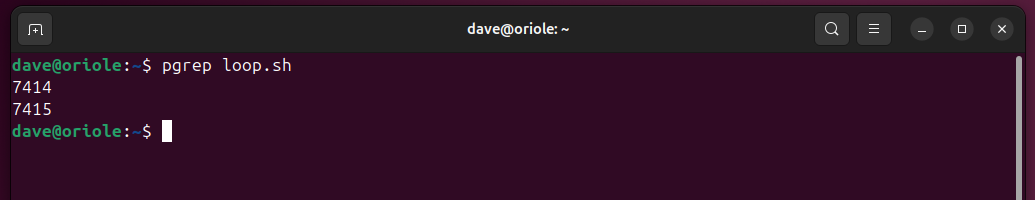

I set two instances of it running, then used pgrep to search for it by name.

pgrep loop.sh

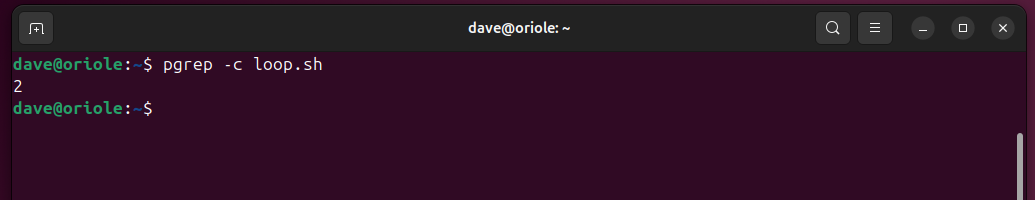

It locates the two instances and reports their process IDs. We can add the -c (count) option so that pgrep returns the number of instances.

pgrep -c loop.sh

We can use that count of instances in our script. If the value returned by pgrep is greater than one, there must be more than one instance running, and our script will close down.

We’ll create a script that uses this technique. We’ll call it pgrep-solo.sh.

The if comparison tests whether the number returned by pgrep is larger than one. If it is, the script exits.

if [ $(pgrep -c pgrep-solo.sh) -gt 1 ]; then

echo "Another instance of $0 is running. Stopping."

exit 1

fi

If the number returned by pgrep is one, the script can continue. Here’s the complete script.

#!/bin/bash

echo "Starting."

if [ $(pgrep -c pgrep-solo.sh) -gt 1 ]; then

echo "Another instance of $0 is running. Stopping."

exit 1

fi

for (( i=1; i<=10; i+=1 ))

do

echo "Loop:" $i

sleep 1

done

exit 0

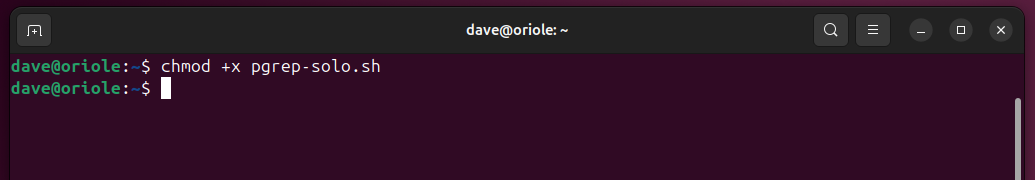

Copy this to your favorite editor, and save it as pgrep-solo.sh. Then make it executable with chmod.

chmod +x pgrep-solo.sh

When it runs, it looks like this.

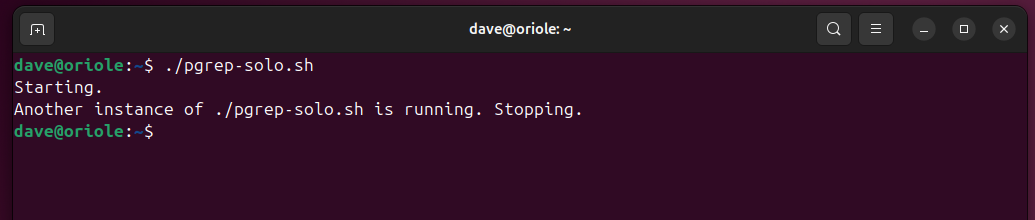

./pgrep-solo.sh

But if I try to start it with another copy already running in another terminal window, it detects that, and exits.

./pgrep-solo.sh

Using lsof To Prevent Concurrency

We can do a very similar thing with the lsof command.

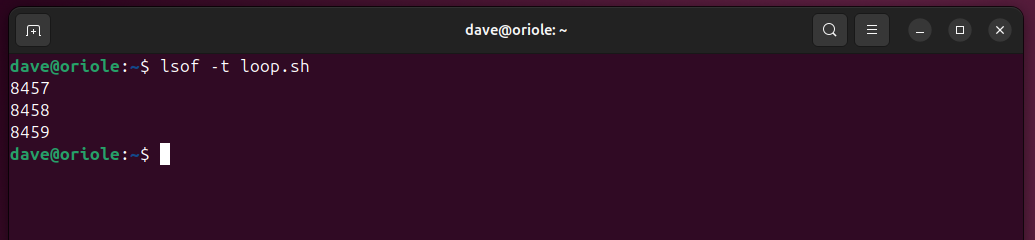

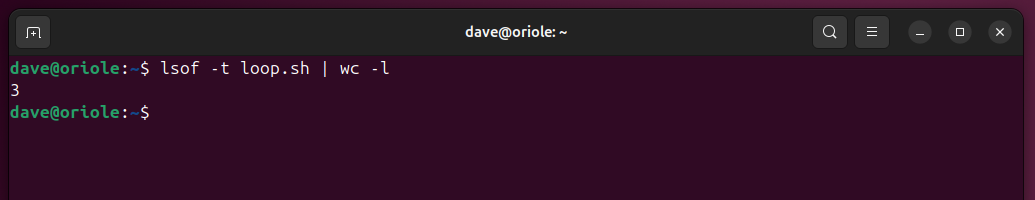

If we add the -t (terse) option, lsof lists the process IDs.

lsof -t loop.sh

We can pipe the output from lsof into wc. The -l (lines) option counts the number of lines which, in this scenario, is the same as the number of process IDs.

lsof -t loop.sh | wc -l

We can use that as the basis of the test in the if comparison in our script.

Save this version as lsof-solo.sh.

#!/bin/bash

echo "Starting."

if [ $(lsof -t "$0" | wc -l) -gt 1 ]; then

echo "Another instance of $0 is running. Stopping."

exit 1

fi

for (( i=1; i<=10; i+=1 ))

do

echo "Loop:" $i

sleep 1

done

exit 0

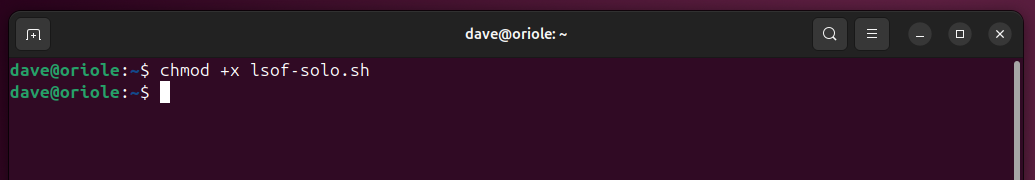

Use chmod to make it executable.

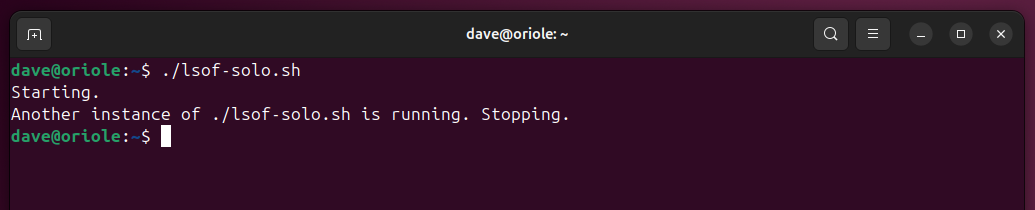

chmod +x lsof-solo.sh

Now, with the lsof-solo.sh script running in another terminal window, we can’t start a second copy.

./lsof-solo.sh

The pgrep method only requires a single call to an external program (pgrep), whereas the lsof method requires two (lsof and wc). But the advantage the lsof method has over the pgrep method is that you can use the $0 variable in the if comparison. This holds the script name.

It means you can rename the script, and it’ll still work. You don’t need to remember to edit the if comparison line and insert the script’s new name. The pgrep method can’t use $0 directly, because $0 includes the ‘./’ at the start of the script name (like ./lsof-solo.sh), and that never matches the bare process name that pgrep searches for.
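If you like the pgrep method but don’t want to hard-code the name, one option is to strip the path from $0 before handing it to pgrep. This is only a sketch under a few assumptions: the function name other_instance_running is made up for illustration, and the script is assumed to be launched directly (for example as ./pgrep-solo.sh) so that its process name matches its file name.

```shell
#!/bin/bash

# Hypothetical helper, not part of the original scripts.
# Succeeds (returns 0) when more than one process carries the script's name.
other_instance_running() {
    local name="${1##*/}"    # drop any leading path: ./pgrep-solo.sh becomes pgrep-solo.sh
    # -c prints a count, -x asks for an exact match on the process name.
    [ "$(pgrep -c -x -- "$name")" -gt 1 ]
}

# Possible use at the top of a script:
#
#   if other_instance_running "$0"; then
#       echo "Another instance of $0 is running. Stopping."
#       exit 1
#   fi
```

Bear in mind that pgrep only compares the first 15 characters of a process name, so this sketch suits short script names best.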

Using flock To Prevent Concurrency

Our third technique uses the flock command, which is designed to set file and directory locks from within scripts. The lock is advisory: while it is held, no other process can obtain a conflicting lock on the same resource with flock.

This method requires a single line to be added at the top of your script.

[ "${GEEKLOCK}" != "$0" ] && exec env GEEKLOCK="$0" flock -en "$0" "$0" "$@" || :

We’ll decode those hieroglyphics shortly. For now, let’s just check that it works. Save this one as flock-solo.sh.

#!/bin/bash

[ "${GEEKLOCK}" != "$0" ] && exec env GEEKLOCK="$0" flock -en "$0" "$0" "$@" || :

echo "Starting."

for (( i=1; i<=10; i+=1 ))

do

echo "Loop:" $i

sleep 1

done

exit 0

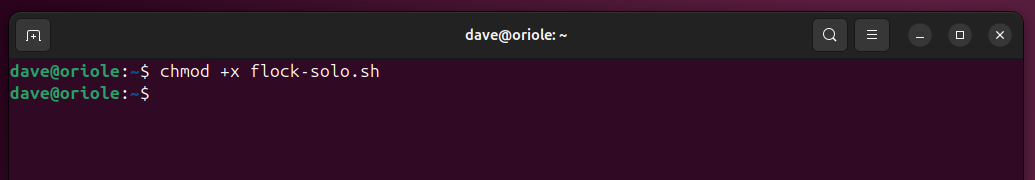

Of course, we need to make it executable.

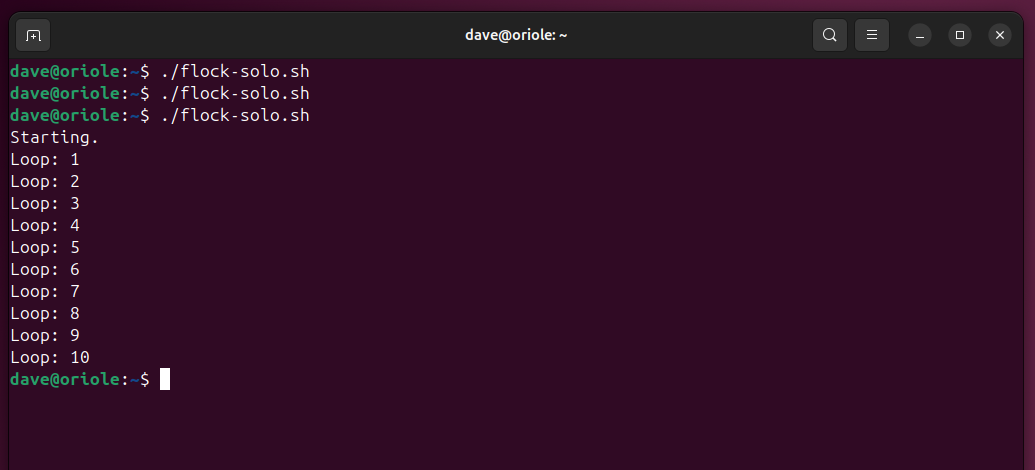

chmod +x flock-solo.sh

I started the script in one terminal window, then tried to run it again in a different terminal window.

./flock-solo.sh

./flock-solo.sh

./flock-solo.sh

I can’t launch the script until the instance in the other terminal window has completed.

Let’s unpick the line that does the magic. At the heart of it is the flock command.

flock -en "$0" "$0" "$@"

The flock command is used to lock a file or directory, and then to run a command. The options we’re using are -e (exclusive) and -n (nonblocking).

The exclusive option means that if we’re successful at locking the file, no one else can obtain a lock on it. The nonblocking option means that if we fail to obtain a lock, we immediately stop trying. We don’t retry for a period of time; we gracefully bow out straight away.
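To see the nonblocking behavior on its own, here’s a small sketch that uses a throwaway lock file rather than a script file. The lock file comes from mktemp and the variable names are made up for this illustration.

```shell
#!/bin/bash

# Throwaway lock file for the demonstration.
lockfile="$(mktemp)"

# First holder: take an exclusive lock and keep it while "sleep 3" runs.
flock -en "$lockfile" sleep 3 &

# Give the background flock a moment to acquire the lock.
sleep 1

# Second attempt while the lock is held: -n makes flock give up at once.
if flock -en "$lockfile" true; then
    second_attempt="acquired"
else
    second_attempt="refused"
fi

# Wait for the first holder to finish, which releases the lock.
wait

# Third attempt: the lock is free again.
if flock -en "$lockfile" true; then
    third_attempt="acquired"
else
    third_attempt="refused"
fi

rm -f "$lockfile"
echo "While held: $second_attempt. After release: $third_attempt."
```

The second attempt should be refused straight away instead of waiting for the three seconds to pass, and the third should succeed once the first command has finished.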

The first $0 indicates the file we wish to lock. This variable holds the name of the current script.

The second $0 is the command we want to run if we’re successful in obtaining a lock. Again, we’re passing in the name of this script. The lock only stops other flock calls from locking the file, so the script file itself can still be launched.

We can pass parameters to the command that is launched. We’re using $@ to pass any command line parameters that were passed to this script, to the new invocation of the script that’s going to be launched by flock.

So we’ve got this script locking the script file, then launching another instance of itself. That’s nearly what we want, but there’s a problem. When the second instance is finished, the first script will resume its processing. However, we have another trick up our sleeve to cater for that, as you’ll see.

We’re using an environment variable we’re calling GEEKLOCK to indicate whether a running script needs to apply the lock or not. If the script has launched and there’s no lock in place, the lock must be applied. If the script has been launched and there is a lock in place, it doesn’t need to do anything. It can just run. With a script running and the lock in place, no other instances of the script can be launched.

[ "${GEEKLOCK}" != "$0" ]

This test translates to ‘return true if the GEEKLOCK environment variable is not set to the script name.’ The test is chained to the rest of the command by && (and) and || (or). The && portion is executed if the test returns true, and the || section is executed if the test returns false.
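The chaining can be tried out in isolation. This sketch uses a made-up function, which_branch, and echo commands as stand-ins for the real lock command.

```shell
#!/bin/bash

# Hypothetical helper: reports which part of a "test && A || B" chain runs.
which_branch() {
    [ "$1" != "$2" ] && echo "&& branch" || echo "|| branch"
}

which_branch "" "./flock-solo.sh"                  # values differ, so the test is true
which_branch "./flock-solo.sh" "./flock-solo.sh"   # values match, so the test is false
```

Strictly speaking, the || part also runs if the command after && fails, so a chain like this isn’t a complete substitute for if and else.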

env GEEKLOCK="$0"

The env command is used to run other commands in modified environments. We’re modifying our environment by creating the environment variable GEEKLOCK and setting it to the name of the script. The command that env is going to launch is the flock command, and the flock command launches the second instance of the script.
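Here’s a quick way to watch env at work. DEMO_LOCK is a made-up variable name used only for this illustration, and a child bash stands in for the command that env launches.

```shell
#!/bin/bash

# Make sure the illustrative variable isn't already set in this shell.
unset DEMO_LOCK

# env sets the variable for the command it launches...
child_view="$(env DEMO_LOCK="demo-value" bash -c 'echo "$DEMO_LOCK"')"

# ...but the calling shell's own environment is left untouched.
parent_view="${DEMO_LOCK:-unset}"

echo "child sees: $child_view"
echo "parent sees: $parent_view"
```

The child process sees the value, while the shell that called env does not. That’s why the variable can act as a message passed from one instance of the script to the next.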

The second instance of the script performs its check to see whether the GEEKLOCK environment variable doesn’t exist, but finds that it does. The || section of the command is executed, which contains nothing but a colon ‘:’ which is actually a command that does nothing. The path of execution then runs through the rest of the script.

But we’ve still got the issue of the first script carrying on its own processing when the second script has terminated. The solution to that is the exec command. This runs other commands by replacing the calling process with the newly launched process.
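As a minimal illustration of that replacement, this sketch runs a three-command snippet in a child bash. The snippet and the variable name are invented for the demonstration.

```shell
#!/bin/bash

# Run the snippet in a child bash so that exec replaces the child, not this shell.
output="$(bash -c 'echo "before exec"; exec echo "replaced by echo"; echo "never reached"')"

echo "$output"
```

The last echo in the snippet never runs, because by then the child shell has been replaced by the command given to exec.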

exec env GEEKLOCK="$0" flock -en "$0" "$0" "$@"

So the full sequence is:

- The script launches and can’t find the environment variable. The && clause is executed.

- exec launches env and replaces the original script process with the new env process.

- The env process creates the environment variable and launches flock.

- flock locks the script file and launches a new instance of the script which detects the environment variable, runs the || clause and the script is able to run to its conclusion.

- Because the original script was replaced by the env process, it’s no longer present and can’t continue its execution when the second script terminates.

- Because the script file is locked when it is running, other instances cannot be launched until the script launched by flock stops running and releases the lock.
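The sequence above can be watched end to end with a throwaway test harness. This is a sketch, not a definitive implementation: the file name flock-demo.sh and the variable names are hypothetical, the guard line is written out as an if statement for readability, and the temporary directory is assumed to allow scripts to be executed.

```shell
#!/bin/bash

# Build a throwaway copy of the guarded script in a temporary directory.
workdir="$(mktemp -d)"
demo="$workdir/flock-demo.sh"

cat > "$demo" <<'EOF'
#!/bin/bash
# Same logic as the one-liner, spelled out as an if statement.
if [ "${GEEKLOCK}" != "$0" ]; then
    exec env GEEKLOCK="$0" flock -en "$0" "$0" "$@"
fi
echo "Starting."
sleep 2
EOF
chmod +x "$demo"

# First copy: takes the lock and runs for about two seconds.
"$demo" > /dev/null &
sleep 1

# Second copy, started while the first still holds the lock.
second_status=0
"$demo" > /dev/null || second_status=$?

# Once the first copy has finished, a new copy can run again.
wait
third_status=0
"$demo" > /dev/null || third_status=$?

rm -rf "$workdir"
echo "second copy exit status: $second_status"
echo "third copy exit status:  $third_status"
```

The second copy should exit with a non-zero status without printing anything, because flock couldn’t get the lock. The third copy, started after the lock was released, should run normally.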

That might sound like the plot of Inception, but it works beautifully. That one line certainly packs a punch.

For clarity, it’s the lock on the script file that prevents other instances being launched, not the detection of the environment variable. The environment variable only tells a running script to either set the lock, or that the lock is already in place.

Lock and Load

It’s easier than you might expect to ensure only a single instance of a script is executed at once. All three of these techniques work. Although it’s the most convoluted in operation, the flock one-liner is the easiest to drop into any script.