If you’ve ever used one of the AI chatbots out there, you might wonder how they work and whether you could build one yourself. With Python, you can. Here we’ll cover how to build the simplest possible chatbot and give you some advice for upgrading it.

Learning About NLTK With Python

One of the most compelling reasons to use Python is its modular nature. We saw this in our simple expense tracker, when we used Tkinter to create a basic GUI for our application. Chatbots don’t have to be that sophisticated, though, and we can run one in a terminal with no problem. I won’t be designing a UI for this app, but you’re welcome to try it yourself.

The basis of our app is NLTK (Natural Language Toolkit), a Python library that gives us the basic building blocks for a chatbot. To install NLTK, open a terminal and type:

```shell
pip install nltk
```

You should see pip download and install the NLTK library. As with any other library, we’ll have to import NLTK at the top of our code, along with a few modules from Python’s standard library:

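```python
import nltk
from nltk.tokenize import word_tokenize
from nltk.sentiment import SentimentIntensityAnalyzer
from nltk.corpus import stopwords
from nltk.tag import pos_tag
import string
import random
import re

# Data files NLTK's tools need at runtime (already-installed packages are skipped)
nltk.download('punkt_tab')                       # tokenizer models used by word_tokenize
nltk.download('vader_lexicon')                   # lexicon used by SentimentIntensityAnalyzer
nltk.download('stopwords')                       # common filler words like "the" and "is"
nltk.download('averaged_perceptron_tagger_eng')  # part-of-speech tagger used by pos_tag
```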

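You might have noticed the nltk.download() calls at the end. These fetch the data files NLTK’s tools depend on: the Punkt tokenizer models (punkt_tab), the VADER sentiment lexicon (vader_lexicon), a list of English stop words (stopwords), and a part-of-speech tagger (averaged_perceptron_tagger_eng). None of this is knowledge the chatbot uses to answer you; its responses come from a dictionary we’ll write ourselves. Once the data is installed, later runs will report it as already up to date. Let’s dive into making the chatbot work.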

Creating the Chatbot’s Interface

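We’ll keep the chatbot interface simple, much like our simple to-do list in Python, and use the terminal window for both input and output. First, we’ll define the chatbot class and its knowledge base: a dictionary of the patterns the bot looks for and the responses it can give.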

```python
class SmartAIChatbot:
    def __init__(self):
        # Patterns the bot looks for, and the canned responses it can give
        self.knowledge_base = {
            'greetings': {
                'patterns': ['hello', 'hi', 'hey'],
                'responses': [
                    "Hi there! How can I help you today?",
                    "Hello! Nice to meet you!",
                    "Hey! What's on your mind?"
                ]
            },
            'questions': {
                'what': {
                    'patterns': ['what is', 'what are', 'what can'],
                    'responses': [
                        "Let me think about {topic}...",
                        "Regarding {topic}, I would say...",
                        "When it comes to {topic}, here's what I know..."
                    ]
                },
                'how': {
                    'patterns': ['how do', 'how can', 'how does'],
                    'responses': [
                        "Here's a way to approach {topic}...",
                        "When dealing with {topic}, you might want to...",
                        "The process for {topic} typically involves..."
                    ]
                },
                'why': {
                    'patterns': ['why is', 'why do', 'why does'],
                    'responses': [
                        "The reason for {topic} might be...",
                        "Thinking about {topic}, I believe...",
                        "Let me explain why {topic}..."
                    ]
                }
            },
            'farewell': {
                'patterns': ['bye', 'goodbye', 'see you'],
                'responses': [
                    "Goodbye! Have a great day!",
                    "See you later!",
                    "Bye! Come back soon!"
                ]
            }
        }

        self.sentiment_analyzer = SentimentIntensityAnalyzer()
        self.stop_words = set(stopwords.words('english'))
```

This gives our chatbot its basic greeting and farewell keywords, a set of canned responses, and the beginnings of a way to handle questions. It also sets up the VADER sentiment analyzer and a set of English stop words that we’ll use later. Now, let’s look at how this AI bot will take prompts and how it’ll understand what we type.
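The greeting and farewell patterns are short words, which makes naive matching risky: a plain substring test such as 'hi' in text also fires on “this” or “which”, and 'hey' fires on “they”. One way around that, sketched below with only the standard library, is to match each pattern on word boundaries with a regular expression. The trimmed-down KB dictionary and the substring_match, matches_pattern, and match_category helpers are hypothetical names for this illustration, not part of the bot above.

```python
import re

# Hypothetical trimmed-down knowledge base with the same shape as the bot's
KB = {
    'greetings': {'patterns': ['hello', 'hi', 'hey']},
    'farewell': {'patterns': ['bye', 'goodbye', 'see you']},
}


def substring_match(text, patterns):
    """Naive check: true if any pattern appears anywhere in the text."""
    text = text.lower()
    return any(p in text for p in patterns)


def matches_pattern(text, patterns):
    """Word-boundary check: each pattern must appear as a whole word or phrase."""
    text = text.lower()
    return any(re.search(rf"\b{re.escape(p)}\b", text) for p in patterns)


def match_category(text, kb=KB):
    """Return the first category whose patterns match as whole words, else None."""
    for category, info in kb.items():
        if matches_pattern(text, info['patterns']):
            return category
    return None


if __name__ == "__main__":
    greetings = KB['greetings']['patterns']
    print(substring_match("What is this?", greetings))  # True: "hi" hides inside "this"
    print(matches_pattern("What is this?", greetings))  # False
    print(match_category("Hi there"))                   # greetings
    print(match_category("Okay, see you tomorrow"))     # farewell
```

re.escape keeps any special characters in a pattern from being read as regex syntax, so multi-word phrases like “see you” work as-is.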

How to Teach Your Bots to Read

You might have noticed the word “tokenize” in the previous imports. Tokens are the small units, usually individual words and punctuation marks, that a piece of text is split into so a program can work with it. To figure out what topic you want to discuss, we’ll clean and tokenize your input, tag each token with its part of speech, and check whether it’s a question:

```python
    # These methods go inside the SmartAIChatbot class
    def tokenize_and_tag(self, user_input):
        """Clean, tokenize, and POS tag user input"""
        # Lowercase and strip punctuation before splitting into tokens
        cleaned = user_input.lower().translate(str.maketrans('', '', string.punctuation))
        tokens = word_tokenize(cleaned)
        tagged = pos_tag(tokens)
        return tokens, tagged

    def extract_topic(self, tokens, question_word):
        """Extract the main topic after a question word"""
        try:
            q_pos = tokens.index(question_word.lower())
            # Skip the question word and the word right after it (usually "is", "do", etc.)
            topic_words = tokens[q_pos + 2:]
            topic = ' '.join(word for word in topic_words if word not in self.stop_words)
            return topic if topic else "that"
        except ValueError:
            return "that"

    def identify_question_type(self, user_input, tagged_tokens):
        """Identify the type of question and extract relevant information"""
        question_words = {'what', 'why', 'how', 'when', 'where', 'who'}
        text = user_input.lower()
        # Tagged tokens are already lowercased and stripped of punctuation
        tokens = [word for word, tag in tagged_tokens]

        is_question = any([
            user_input.strip().endswith('?'),
            any(word in question_words for word in tokens),
            any(pattern in text
                for q_info in self.knowledge_base['questions'].values()
                for pattern in q_info['patterns'])
        ])

        if not is_question:
            return None, None

        for q_type, q_info in self.knowledge_base['questions'].items():
            for pattern in q_info['patterns']:
                if pattern in text:
                    # Use the cleaned tokens so "What" and "Python?" are found correctly
                    topic = self.extract_topic(tokens, q_type)
                    return q_type, topic

        return 'general', 'that'
```
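To see what the cleaning and topic-extraction steps do without installing NLTK, here is a standard-library-only approximation. It assumes a plain whitespace split() as a stand-in for word_tokenize and a small hand-picked STOP_WORDS set in place of NLTK’s much longer English stop-word list, so results can differ from the real bot on trickier input.

```python
import string

# Hypothetical stand-in for NLTK's English stop-word list
STOP_WORDS = {'a', 'an', 'the', 'is', 'are', 'do', 'does', 'i', 'you', 'to', 'of', 'my'}


def clean_and_tokenize(text):
    """Lowercase, strip punctuation, and split on whitespace
    (an approximation of str.translate followed by word_tokenize)."""
    cleaned = text.lower().translate(str.maketrans('', '', string.punctuation))
    return cleaned.split()


def extract_topic(tokens, question_word):
    """Keep the words after the question word and the word following it,
    dropping stop words; fall back to "that" if nothing is left."""
    try:
        q_pos = tokens.index(question_word)
    except ValueError:
        return "that"
    topic_words = [w for w in tokens[q_pos + 2:] if w not in STOP_WORDS]
    return ' '.join(topic_words) or "that"


if __name__ == "__main__":
    tokens = clean_and_tokenize("What is the Python language?")
    print(tokens)                         # ['what', 'is', 'the', 'python', 'language']
    print(extract_topic(tokens, 'what'))  # python language
    print(extract_topic(tokens, 'why'))   # that
```

Calling extract_topic("What is the Python language?".split(), 'what') instead returns "that", because a raw split keeps “What” capitalized and the question mark attached, which is why the input should be cleaned before this step.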

Building Our Bot’s (Rudimentary) Brain

As this is a simple AI chatbot, we won’t be doing any complex thought processing. Instead, we’ll write a method that checks the user’s input for greetings, farewells, and questions, and falls back on the input’s sentiment when none of those match:

```python
    def get_response(self, user_input):
        """Generate a more thoughtful response based on input type"""
        tokens, tagged_tokens = self.tokenize_and_tag(user_input)
        text = user_input.lower()

        # Greetings and farewells: match whole words only, so "hi" doesn't fire on "this"
        for category in ['greetings', 'farewell']:
            patterns = self.knowledge_base[category]['patterns']
            if any(re.search(rf"\b{re.escape(p)}\b", text) for p in patterns):
                return random.choice(self.knowledge_base[category]['responses'])

        q_type, topic = self.identify_question_type(user_input, tagged_tokens)
        if q_type:
            if q_type in self.knowledge_base['questions']:
                template = random.choice(self.knowledge_base['questions'][q_type]['responses'])
                return template.format(topic=topic)
            else:
                return f"That's an interesting question about {topic}. Let me think..."

        # No pattern matched: respond based on the VADER compound score (-1 to 1)
        sentiment = self.analyze_sentiment(user_input)
        if sentiment > 0.2:
            return "I sense your enthusiasm! Tell me more about your thoughts on this."
        elif sentiment < -0.2:
            return "I understand this might be challenging. Would you like to explore this further?"
        else:
            return "I see what you mean. Can you elaborate on that?"

    def analyze_sentiment(self, text):
        """Analyze the sentiment of user input"""
        scores = self.sentiment_analyzer.polarity_scores(text)
        return scores['compound']
```

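We use VADER (through the vader_lexicon data) to score how positive or negative the user’s input is. Its compound score ranges from -1 (most negative) to 1 (most positive), and the bot picks one of three replies depending on whether the score is above 0.2, below -0.2, or somewhere in between.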
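The branching on the compound score is easy to pull out into a small pure function, which makes the thresholds simple to tweak and test without loading VADER at all. This sketch assumes the same 0.2 and -0.2 cut-offs the bot uses; the sentiment_bucket name, its labels, and the REPLIES dictionary are hypothetical.

```python
def sentiment_bucket(compound, threshold=0.2):
    """Map a VADER-style compound score (-1 to 1) to a coarse label.

    Scores above +threshold are positive, below -threshold are negative,
    and anything in between (including the thresholds themselves) is neutral.
    """
    if not -1.0 <= compound <= 1.0:
        raise ValueError("compound score should be between -1 and 1")
    if compound > threshold:
        return "positive"
    if compound < -threshold:
        return "negative"
    return "neutral"


# Replies keyed by bucket, mirroring the bot's three fallback responses
REPLIES = {
    "positive": "I sense your enthusiasm! Tell me more about your thoughts on this.",
    "negative": "I understand this might be challenging. Would you like to explore this further?",
    "neutral": "I see what you mean. Can you elaborate on that?",
}

if __name__ == "__main__":
    for score in (0.65, -0.4, 0.1, 0.2):
        print(score, sentiment_bucket(score), "->", REPLIES[sentiment_bucket(score)])
```

Because both comparisons are strict, a score of exactly 0.2 or -0.2 lands in the neutral bucket, the same as in the bot’s if/elif chain.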

Talking to Our Bot

To round out the coding, we’ll finalize the chat interface:

```python
    def chat(self):
        """Main chat loop"""
        print("Bot: Hi! I'm a smarter chatbot now. I can handle questions! Type 'bye' to exit.")
        while True:
            user_input = input("You: ")
            if user_input.lower() in ['bye', 'goodbye', 'exit']:
                print("Bot:", random.choice(self.knowledge_base['farewell']['responses']))
                break
            response = self.get_response(user_input)
            print("Bot:", response)


if __name__ == "__main__":
    chatbot = SmartAIChatbot()
    chatbot.chat()
```

This allows us to have a basic chat with our chatbot, and get some of its responses.
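Because chat() calls input() and print() directly, it’s awkward to test automatically. One option, sketched here as an assumption rather than part of the original code, is to pass in the reply function and a list of messages, and collect the conversation in a list. The run_chat name and the echo_reply stand-in for get_response are hypothetical.

```python
def run_chat(messages, reply, exit_words=('bye', 'goodbye', 'exit'),
             farewell="Goodbye! Have a great day!"):
    """Drive a chat loop from a list of messages instead of input().

    Returns the transcript as a list of strings and stops at the first exit word.
    """
    transcript = []
    for message in messages:
        transcript.append(f"You: {message}")
        if message.strip().lower() in exit_words:
            transcript.append(f"Bot: {farewell}")
            break
        transcript.append(f"Bot: {reply(message)}")
    return transcript


def echo_reply(message):
    """Hypothetical stand-in for SmartAIChatbot.get_response."""
    return f"You said: {message}"


if __name__ == "__main__":
    for line in run_chat(["Hello", "How are you?", "bye", "never reached"], echo_reply):
        print(line)
```

With the real bot you would pass chatbot.get_response as reply; since that method uses random.choice, calling random.seed() with a fixed value first makes the transcripts repeatable.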

Bringing It All Together and Ways to Improve

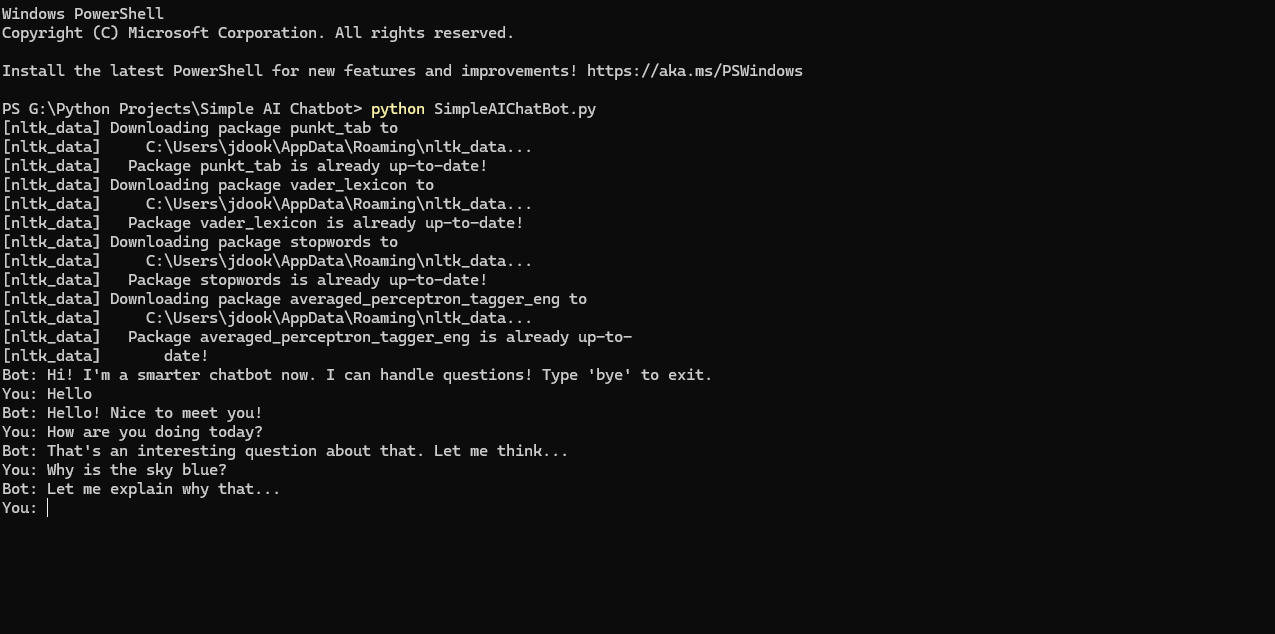

When we run our chatbot, we should see something like this:

At this point, the bot can read your input and give you a randomized response, but it can’t actually think about what you’ve said. Thanks to tokenization and its question patterns, it can roughly tell what kind of question you’re asking and what it’s about; it just doesn’t have any data to answer with.
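Since the bot already extracts a topic, one small step toward real answers is a lookup table of facts keyed by topic, falling back to the existing templates when the topic is unknown. This is a minimal sketch: the FACTS dictionary, its two entries, and the answer helper are hypothetical, and a useful bot would need a far larger knowledge base.

```python
import random

# Hypothetical sample facts keyed by lowercase topic
FACTS = {
    'python': "Python is a general-purpose programming language.",
    'nltk': "NLTK is a Python library for working with human language data.",
}

# Same templates as the bot's 'what' questions
WHAT_TEMPLATES = [
    "Let me think about {topic}...",
    "Regarding {topic}, I would say...",
    "When it comes to {topic}, here's what I know...",
]


def answer(topic, templates=WHAT_TEMPLATES, facts=FACTS):
    """Return a stored fact if one exists for the topic, else fill a random template."""
    fact = facts.get(topic.lower())
    if fact:
        return fact
    return random.choice(templates).format(topic=topic)


if __name__ == "__main__":
    print(answer("Python"))
    print(answer("quantum computing"))
```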

To build an AI chatbot with a proper knowledge base, you’d need to dive into lexical databases like WordNet and learn about serializing data, which is well beyond what we want to do here. However, if you want to make a more functional chatbot, there are plenty of resources that can teach you what you need to know. As always, this code is available on my GitHub for download or comments.
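On the serialization point, one low-effort starting place is to move the knowledge-base dictionary out of the code and into a JSON file, so responses can be edited or extended without touching Python. This sketch uses only the standard json module; the knowledge_base.json file name and the save_kb and load_kb helpers are assumptions for illustration.

```python
import json
import os
import tempfile

# A small knowledge base in the same shape as the bot's (sample data)
KB = {
    'greetings': {
        'patterns': ['hello', 'hi', 'hey'],
        'responses': ["Hi there! How can I help you today?"],
    },
    'farewell': {
        'patterns': ['bye', 'goodbye', 'see you'],
        'responses': ["See you later!"],
    },
}


def save_kb(kb, path):
    """Write the knowledge base to a JSON file."""
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(kb, f, indent=2)


def load_kb(path):
    """Read a knowledge base back from a JSON file."""
    with open(path, encoding='utf-8') as f:
        return json.load(f)


if __name__ == "__main__":
    # Round-trip through a temporary file to show nothing is lost
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, 'knowledge_base.json')
        save_kb(KB, path)
        print(load_kb(path) == KB)  # True
```

In the bot’s __init__, you could then replace the literal dictionary with something like self.knowledge_base = load_kb('knowledge_base.json'). JSON handles this structure well because it contains only strings, lists, and dictionaries.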

There’s a lot we can improve with this bot, from giving it an actual knowledge base to upgrading the UI to something sleeker and more inviting. Experiment with it and see what you come up with!