Chinese startup DeepSeek has stunned the biggest Silicon Valley players with its new AI chatbot that rivals the performance of OpenAI’s ChatGPT while costing far less to develop.

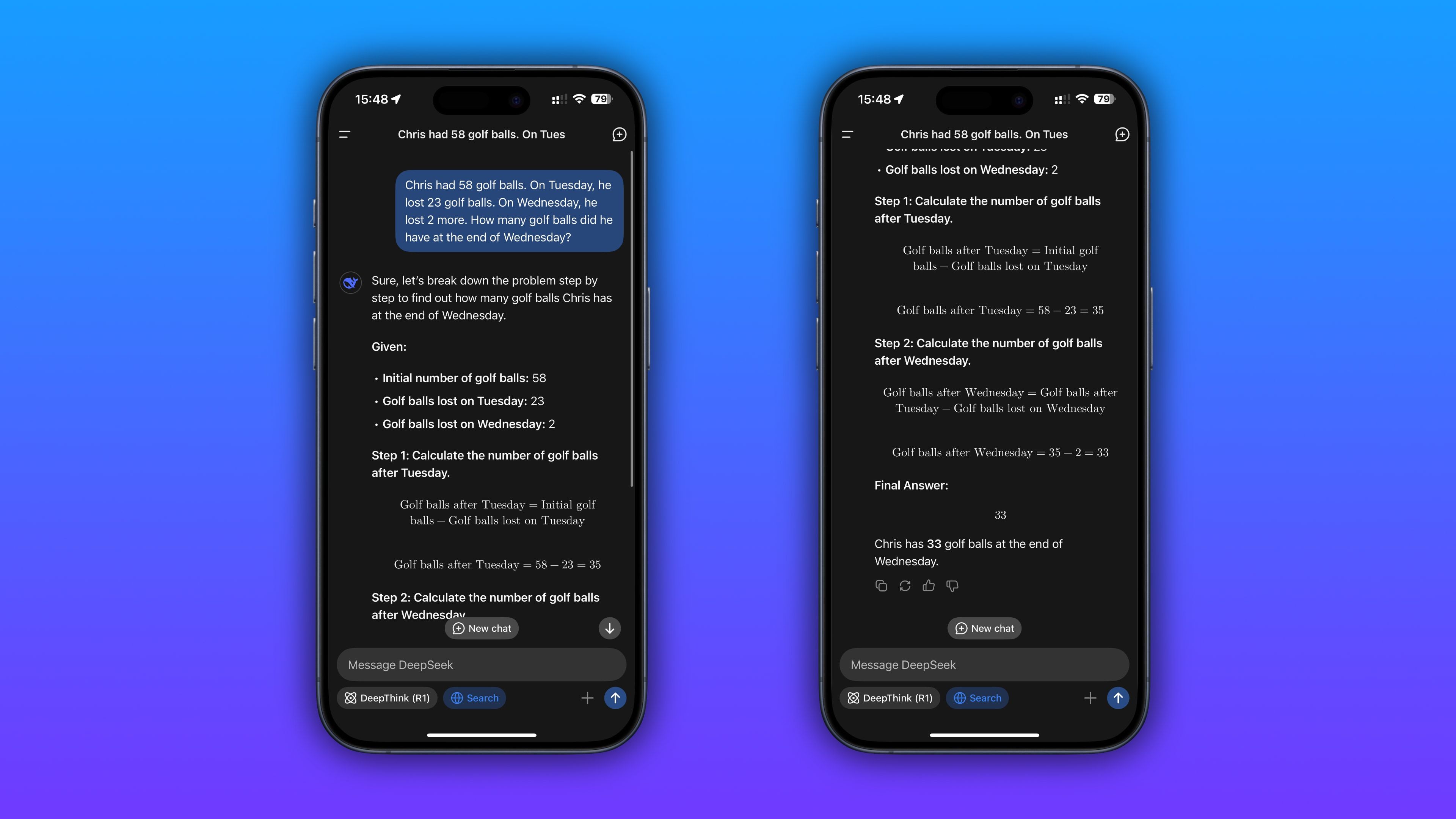

As of this writing, DeepSeek has overtaken ChatGPT on Apple’s App Store as the most downloaded free app in the United States, the United Kingdom, China, and multiple other countries. It works like a typical chatbot: you enter a query, and its open-sourced model generates an answer. The underlying DeepSeek-V3 model features 671 billion parameters, enabling the app to “think” before it sets about solving a problem.

Unlike OpenAI’s model, which can only run on its own servers, DeepSeek can run locally on higher-power computers and many GPU-accelerated servers. The company claims its model matches OpenAI’s o1 model on specific benchmarks. Unlike many other AI chatbots, DeepSeek also transparently shows its reasoning and how it derived an answer. As a Chinese app, however, DeepSeek censors certain topics such as Tiananmen Square.

The app, released on January 20, is also available in Google’s Play Store. DeepSeek owes its sudden popularity to its ability to match or one-up established AI models. Its research paper, released on Monday, reveals how cost-efficient it was to train DeepSeek-V3.

While its claims haven’t yet been verified, DeepSeek apparently used just 2,048 specialized Nvidia H800 chips to train R1, versus the more than 16,000 Nvidia chips used to train the leading models from OpenAI. DeepSeek says the drastic reduction in the number of cutting-edge GPUs required for AI training has allowed it to spend just $5.6 million to train R1. Contrast this with OpenAI, which spent over $100 million to train its comparably sized GPT-4 model. However, DeepSeek hasn’t quantified its energy consumption relative to rivals.
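To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above, DeepSeek's side of which is unverified. The variable names are illustrative, and because the OpenAI figures are reported as "more than" and "over", the ratios are best read as lower bounds rather than a like-for-like comparison.

```python
# Figures as reported in the article; DeepSeek's numbers are unverified claims.
deepseek_gpus = 2_048          # Nvidia H800 chips reportedly used to train R1
openai_gpus = 16_000           # "more than 16,000", so treat as a lower bound
deepseek_cost_usd = 5.6e6      # claimed training cost for R1
openai_cost_usd = 100e6        # "over $100 million" for GPT-4, a lower bound

# Simple ratios: how many times more chips and dollars the larger figures represent.
gpu_ratio = openai_gpus / deepseek_gpus
cost_ratio = openai_cost_usd / deepseek_cost_usd

print(f"GPU count ratio: at least {gpu_ratio:.1f}x")
print(f"Training cost ratio: at least {cost_ratio:.1f}x")
```

On these reported numbers, that works out to roughly 7.8 times fewer chips and about 17.9 times lower training cost, assuming the claims hold up.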

R1 itself is based on DeepSeek’s V3 large language model (LLM), which the company says matches OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet. DeepSeek’s achievement is especially impressive in light of the US government imposing trade sanctions on sophisticated Nvidia chips used for AI training. With the biggest AI players like Nvidia, OpenAI, Meta, and Microsoft spending to the tune of billions on their AI data centers, we’re going to be seeing lots of headlines this week questioning America’s lead in AI.

It’ll be fascinating to see how DeepSeek’s breakthrough affects the Trump administration’s Stargate project, backed by OpenAI, SoftBank, and Oracle, which aims to invest $500 billion in building new AI infrastructure and data centers for OpenAI over the next four years. Things are going to be especially interesting as OpenAI transitions from a non-profit to a for-profit organization, because DeepSeek has open-sourced its AI models.

Meta has also open-sourced some aspects of its AI technology, like the Llama LLM. Still, DeepSeek is the new kid on the block that everyone’s talking about, and the fact that developers can freely build upon DeepSeek’s technology may give OpenAI a run for its money.

Source: TechCrunch, New York Times, Bloomberg