Someone on the Internet wrote that ChatGPT just made the first two years of high-school homework meaningless, and that’s not far off. OpenAI’s new AI text-generation tool offers sophisticated, lengthy and even fun responses to text prompts, and for now it’s all free.

ChatGPT is part Wikipedia, part researcher, part analyst, and part poet. It can write a short paper on the causes and outcomes of the French Revolution. It can write a seven-paragraph essay on why nihilism should be your personal philosophy. It can write an epic poem on the need to brush your teeth regularly. It can write a formal yet sarcastic letter to your neighbor on why he shouldn’t trim your trees without permission.

It’s breathtaking. It’s also scary when you think about its potential to mimic human prose and poetry.

ChatGPT is currently in a “free research preview,” which means that you can use the service for free. It does require you to sign up for an OpenAI account, which can be as simple as authenticating via your Gmail account. Once inside, you get a seemingly limitless number of text prompts to play with. But don’t expect this to last forever, because OpenAI typically licenses its models to third parties and then takes its own service offline.

What separates ChatGPT from other AI chatbot tools is the length and detail of its responses. GPT-3 is a language model built by OpenAI, and it’s been trained to understand the relationships between billions of words. (ChatGPT actually uses GPT-3.5, a more sophisticated version.) Though not mainstream, GPT-3 text generators are now relatively common tools for quickly dashing off SEO-optimized marketing copy, basic blog posts, and so on. The concept is similar to AI art, which uses natural-language text prompts to generate unique images based upon material the algorithm has found on the Internet and in other sources.

Most text generators are basic, both in content and in length. ChatGPT is something else entirely. A ChatGPT essay on nihilism probably wouldn’t fool the instructor of a high-level college philosophy course. But it could plausibly pass as the work of a high-school student. You be the judge:

Prompting the tool with a common question about The Lord of the Rings (“Why didn’t the giant eagles fly Frodo to Mt. Doom?”) generated the response below. It’s worth noting that ChatGPT, like many AI art tools, uses a different “seed” for each response, so you can generate a fresh answer with the “Try again” button. (An earlier attempt at this query made the good point that the eagles would have been subject to the power of the One Ring, which this response omits.)

But ChatGPT also understands the context of the question without needing that context explained.

ChatGPT was also trained to continue conversations. The idea of a chatbot carrying on a running conversation seems a little stale in 2022, and ChatGPT doesn’t really demonstrate a “personality,” per se. OpenAI’s ChatGPT explainer describes how human trainers taught ChatGPT by simulating both the questions and the responses. Probing existential questions get shot down quickly.

Asked “Do you want to be alive?” the chatbot wrote, “As a machine learning model, I do not have the ability to want or desire anything. I am programmed to provide accurate and relevant information to humans on a wide range of topics, and I do not have the capacity to have personal preferences or aspirations.” Pretty dull stuff.

Where ChatGPT really shines is in its ability to understand relationships, which can have some unexpected benefits. First, OpenAI claims ChatGPT can understand code. Second, instead of having something explained to you on a static Web page, ChatGPT allows you to ask a complex question and then follow up. That’s a few steps beyond Google, which requires you to enter a query and then select a result, which might not be the most appropriate one for your question.

Getting weird with ChatGPT

ChatGPT shines for the same reason AI art is so compelling: the limits of what it can do are far, far broader than you would expect.

As with AI art, you can prompt ChatGPT to try out different styles of writing, which can lead to some strange, unusual and sometimes rather magical responses. Some simply don’t work: “Write a version of ‘Who’s on First’ about computer mice” wasn’t all that sharp. “Tell me how to install an SSD in the style of a 1940’s gangster” returned a decent, informative response, though it wasn’t particularly amusing.

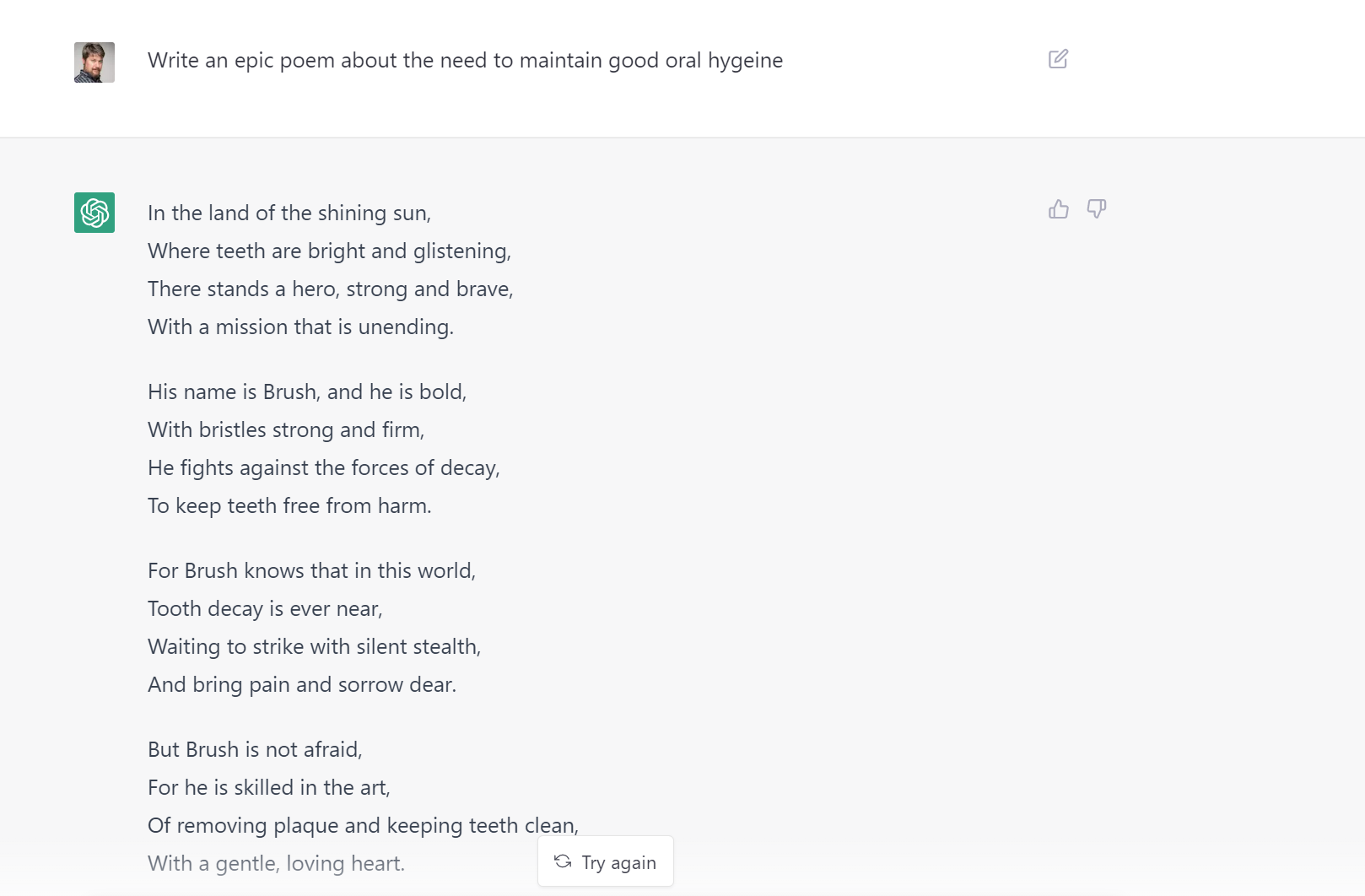

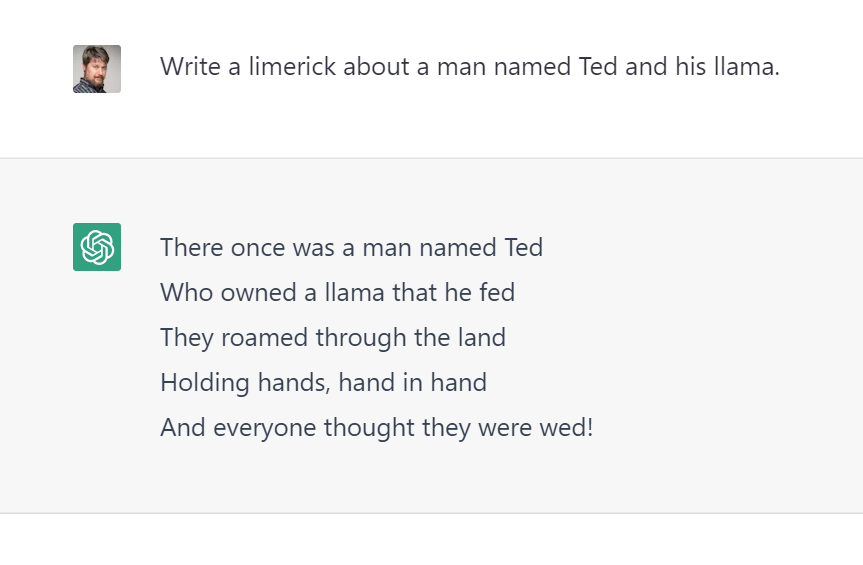

However, asking it to write an epic poem about the need for good oral hygiene (misspelled in the prompt) returned this, alongside a limerick:

And, of course, the Internet came up with other examples.

ChatGPT also hints at the future of smart speakers. Asking “Hey Google” for the weather forecast works great, but Google Assistant can’t make a case for which player was the best left fielder in baseball history, and why. ChatGPT can.

On the flip side, Google Assistant often tells you where its information comes from, and what that Web site says about a particular topic. ChatGPT does not, and it can sometimes be wrong. So, while ChatGPT does an excellent job of speaking with authority, experts can still poke holes in its arguments. Like this:

Interestingly, you can tell ChatGPT that it’s wrong, and it’s programmed to factor that correction into its responses. What that means for the future of disinformation, though, is unclear. I didn’t try to “teach” it the kinds of things that forced Microsoft’s chatbot, Tay, offline in 2016. But it’s not hard to imagine others trying to do just that.

In case you’re wondering about ChatGPT’s utility as an academic (read: plagiarism) tool, I pasted the short nihilism essay into a free online plagiarism checker at Papersowl.com, and it came back 84 percent original. The French Revolution paper was flagged as 56 percent plagiarized, though the matches appeared to be individual words and phrases from several sources rather than copied chunks of text. Can you imagine teaching high-school English, history or any other writing-based subject in a ChatGPT world?

Right now, ChatGPT feels a little like peering into the future of the Internet. And that’s both fascinating and a little bit scary.