Congress has sent pointed letters to tech executives, including Apple’s Tim Cook, raising concerns over the prevalence of deepfake non-consensual intimate images.

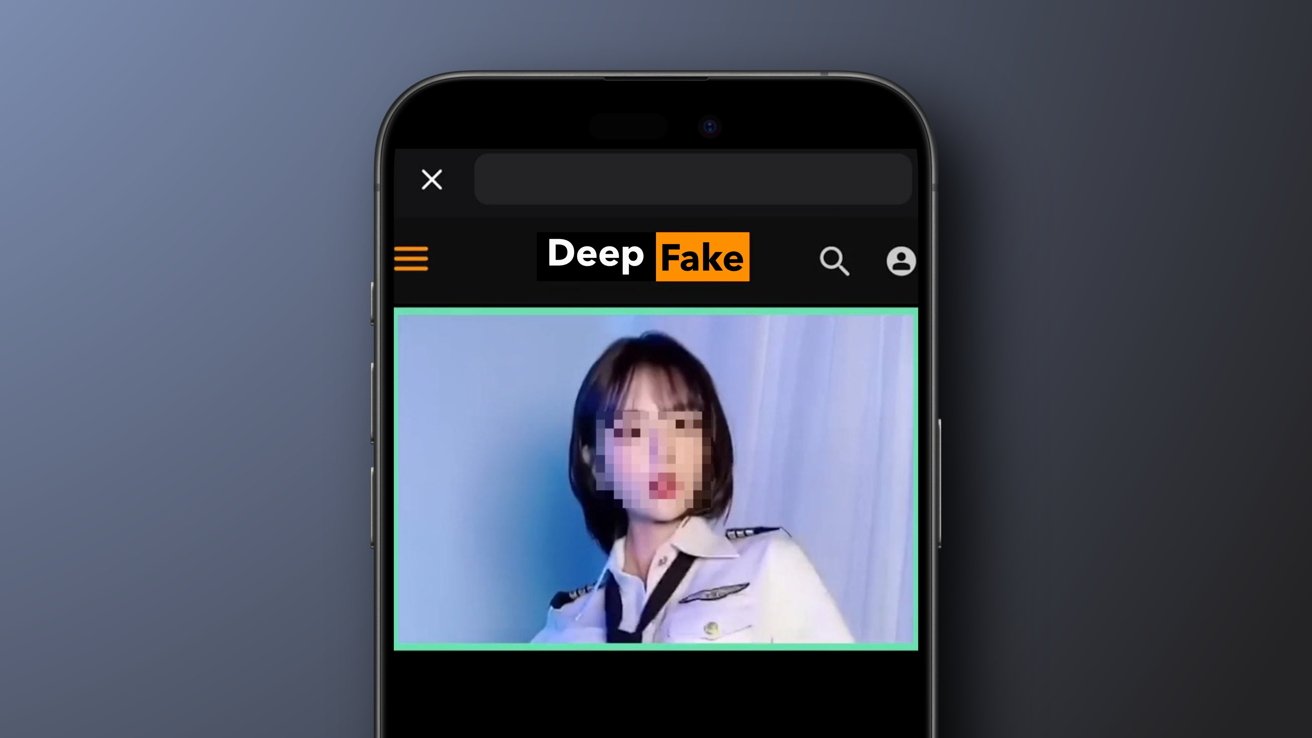

The letters stem from earlier reports about nude deepfakes being created using dual-use apps. Advertisements promoting face swapping popped up all over social media, and users were using the advertised apps to place faces into nude or pornographic images.

According to a new report from 404 Media, the US Congress is taking action based on these reports, asking tech companies how they plan to stop non-consensual intimate images from being generated on their platforms. Letters were sent to Apple, Alphabet, Microsoft, Meta, ByteDance, Snap, and X.

The letter to Apple specifically calls out the company for missing such dual-use apps despite its App Review Guidelines. It asks what Apple plans to do to curb the spread of deepfake pornography and mentions the TAKE IT DOWN Act.

The following questions were asked of Tim Cook:

- What plans are in place to proactively address the proliferation of deepfake pornography on your platform, and what is the timeline of deployment for those measures?

- What individuals or stakeholders, if any, are included in developing these plans?

- What is the process after a report is made and what kind of oversight exists to ensure that these reports are addressed in a timely manner?

- What is the process for determining if an application needs to be removed from your store?

- What, if any, remedy is available to users who report that their image was included non-consensually in a deepfake?

Apple acts as the steward of the App Store and, in doing so, gets the blame any time something gets into the store that shouldn’t be there. Instances like these deepfake tools, or children’s apps that turn into casinos, are used as fuel for the argument that Apple shouldn’t be the gatekeeper of its app platform.

The apps identified by previous reports that were removed by Apple showed clear signs of potential abuse. For example, users could source videos from Pornhub for face swapping.

Apple Intelligence can’t make nude or pornographic images, and Apple has stopped Sign in with Apple from working on deepfake websites. However, that’s just the bare minimum of what Apple can and should be doing to combat deepfakes.

As the letter from Congress suggests, Apple needs to take steps to ensure that dual-use apps can’t get through review. At the very least, extra precautions should be taken with apps that promise AI image and video manipulation, especially those that offer face-swapping capabilities.