Summary

- DeepSeek offers more than just financial savings; there’s some serious tech under the hood.

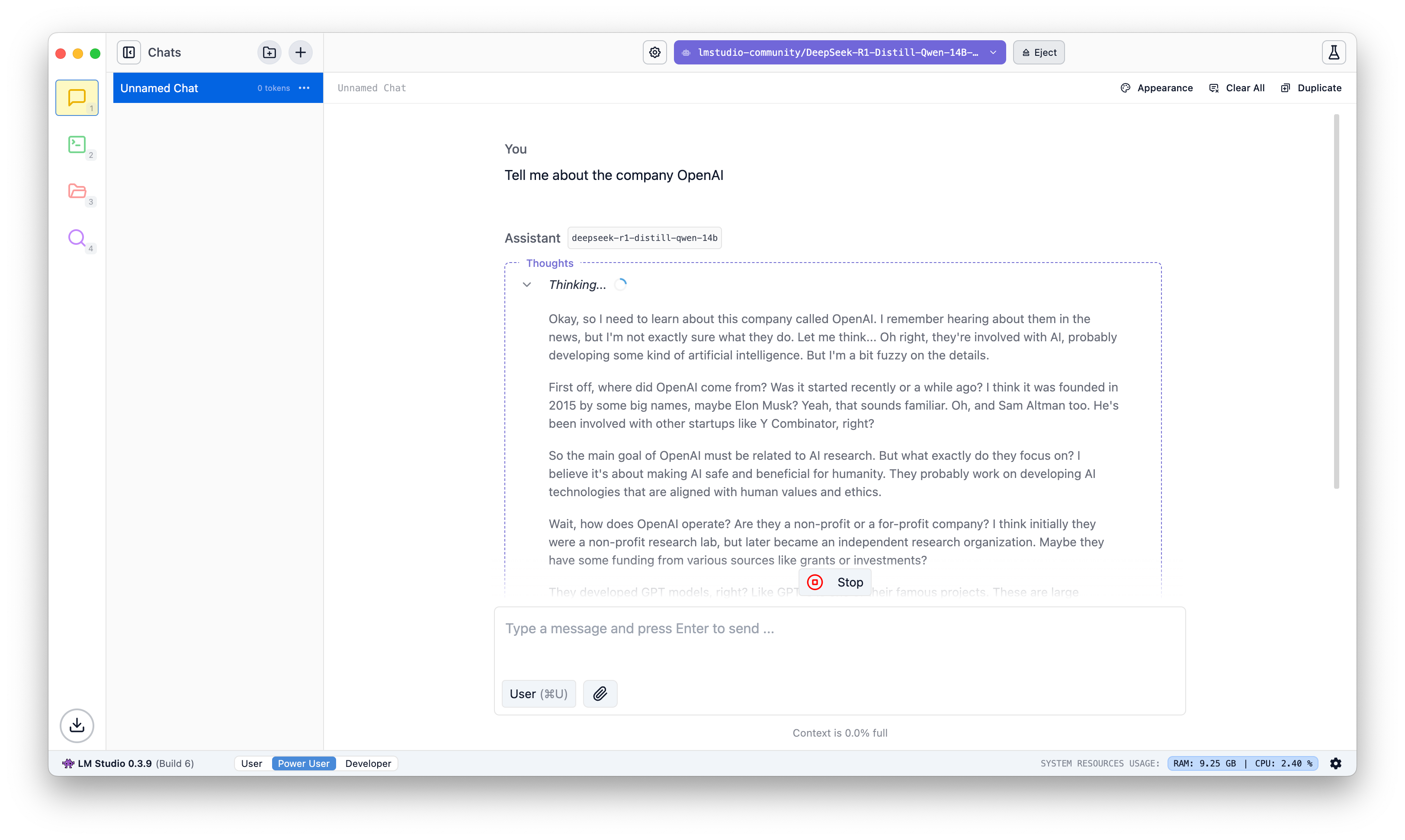

- DeepSeek stands out thanks to transparent thought processes, making it easier to tweak output.

- Context caching is another important technical innovation, leading to much better prompting.

For the last few weeks, tech news has been mostly about how DeepSeek, the Chinese answer to Western large language models (LLMs), is sweeping the world and sweeping away a lot of market value besides. What sets DeepSeek apart from GPT, though, and is there more to it than just being cheaper to run?

Turns out that there is. In fact, once you take a closer look, you’ll realize that DeepSeek isn’t the kind of same-but-cheaper clone that China has been famous for in other industries. It’s a real contender that has innovated and made real improvements to the AI model.

Chain of Thought

Much like humans, LLMs need to work their way through a complicated problem. I can’t ask you to simply calculate a complicated equation; you need to go step by step until you get to your conclusion. In AI, this is called “chain of thought,” and it’s a vital component of getting good output from a chatbot.

Chain of thought may be where DeepSeek has made the most strides compared to GPT: it not only works through complicated riddles (like in this example) but also shows its work in a satisfactory way. Instead of asking a question and just getting an answer, you can check DeepSeek’s work.

It also means you can ask for changes if you’re not happy with the answer you received, or have DeepSeek answer any questions that may have occurred to you while reading its chain of thought. It’s a powerful addition and a great tool for any user.
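To make “checking the work” concrete, here is a minimal sketch of how you might separate a model’s reasoning from its final answer. It assumes the raw output wraps the reasoning in `<think>` tags, which is an assumption about the output format rather than something this article establishes; the function name and the sample text are made up for illustration.

```python
import re
from typing import Tuple

# Assumption: the reasoning is wrapped in <think>...</think> tags in the raw text.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from a raw model response.

    If no <think> block is found, the reasoning is an empty string and
    the whole response is treated as the answer.
    """
    match = THINK_BLOCK.search(raw)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    # Everything outside the first <think> block counts as the answer.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer


# Hypothetical sample response, written by hand for this example.
sample = "<think>3 apples plus 4 apples is 7 apples.</think>\nYou have 7 apples."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

With the two parts separated, you can read the reasoning on its own and point to a specific step when you ask for a correction.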

Caching

Another way in which DeepSeek is a real rival to GPT is in caching, or temporarily storing your questions and answers, which allows you to build a chain of questions. OpenAI, the company behind ChatGPT, has limited caching for the simple reason that it costs money, so you can only ask so many questions (the limit is set by your plan) before the chatbot “wipes” its memory.

DeepSeek tackles this problem by using what it calls Context Caching on Disk. This technology detects when a new input repeats the beginning of an earlier one, so DeepSeek can reuse the work it has already done on that part rather than process it from scratch. This saves a lot of wasteful computation and, as a result, lowers DeepSeek’s costs and lets users create longer chains.
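The idea can be illustrated with a toy model. The sketch below is not DeepSeek’s implementation: it assumes the cache matches on the shared beginning (prefix) of two inputs, splits text on whitespace instead of using a real tokenizer, and uses a hypothetical `PrefixCache` class invented for this example.

```python
from typing import List, Tuple


class PrefixCache:
    """Toy prefix cache: remembers earlier inputs and reports how much of a
    new input repeats the start of something already seen."""

    def __init__(self) -> None:
        self._seen: List[List[str]] = []

    def lookup(self, tokens: List[str]) -> Tuple[int, int]:
        """Return (hit_tokens, miss_tokens) for this input, then store it."""
        best = 0
        for old in self._seen:
            shared = 0
            for a, b in zip(old, tokens):
                if a != b:
                    break
                shared += 1
            best = max(best, shared)
        self._seen.append(list(tokens))
        return best, len(tokens) - best


cache = PrefixCache()
# Whitespace splitting stands in for a real tokenizer (an assumption).
first = "You are a helpful assistant . Here is the report . Summarize it".split()
second = "You are a helpful assistant . Here is the report . List the risks".split()

first_result = cache.lookup(first)    # nothing cached yet: every token is a miss
second_result = cache.lookup(second)  # the shared opening counts as cache hits
print(first_result, second_result)
```

In this toy run the second request only has to pay for the few tokens that differ at the end, which is the saving the caching approach is after.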

According to AI modeler Emile Gervais, DeepSeek is also very transparent about what it caches and what it doesn’t; you can just look it up. This way you can see what works best when entering prompts, which brings us to my last point.

Prompting Optimization

The upshot of better caching and chain of thought improvements is that it becomes easier to create better prompts. Gervais says that DeepSeek’s transparency on how it works makes it easier to figure out how to construct the commands you give the AI.

For example, when writing a prompt you can put the data that won’t change as you build up the chain at the front, making sure DeepSeek uses and reuses that information in the cache. More mutable data should be placed in the middle or at the end of prompts, which should allow for clearer answers.
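As a rough illustration of that ordering advice, the sketch below builds the same two prompts both ways and measures how much of the text they share from the start, since that shared opening is what a prefix-based cache could reuse. The helper names and sample strings are hypothetical, and counting characters is a simplification of how a real system would count tokens.

```python
def build_prompt(static_context: str, question: str, static_first: bool = True) -> str:
    """Join the unchanging context and the changing question in the chosen order."""
    parts = [static_context, question] if static_first else [question, static_context]
    return "\n\n".join(parts)


def shared_prefix_len(a: str, b: str) -> int:
    """Count how many leading characters two prompts have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


# Hypothetical data: one fixed document, two different questions about it.
manual = "Product manual: the device has three modes."
q1 = "What is mode one?"
q2 = "How do I reset it?"

good = shared_prefix_len(build_prompt(manual, q1), build_prompt(manual, q2))
bad = shared_prefix_len(
    build_prompt(manual, q1, static_first=False),
    build_prompt(manual, q2, static_first=False),
)
print("static first:", good, "characters shared")
print("question first:", bad, "characters shared")
```

Putting the fixed material first means every follow-up prompt opens identically, while leading with the question breaks the match at the very first character.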

Though it’s not something you’ll figure out overnight, and it may not be information that’s too useful to the average person, it does show that DeepSeek is a different animal from GPT, and that there’s more to it than just being a cheaper “knockoff.” What OpenAI started may end up being finished by a Chinese company nobody had heard of a few months ago.
