We’ve all heard the warnings: “Don’t trust everything AI says!” But how inaccurate are AI search engines really? The folks at the Tow Center for Digital Journalism put eight popular AI search engines through comprehensive tests, and the results are staggering.

How the Tests Were Conducted

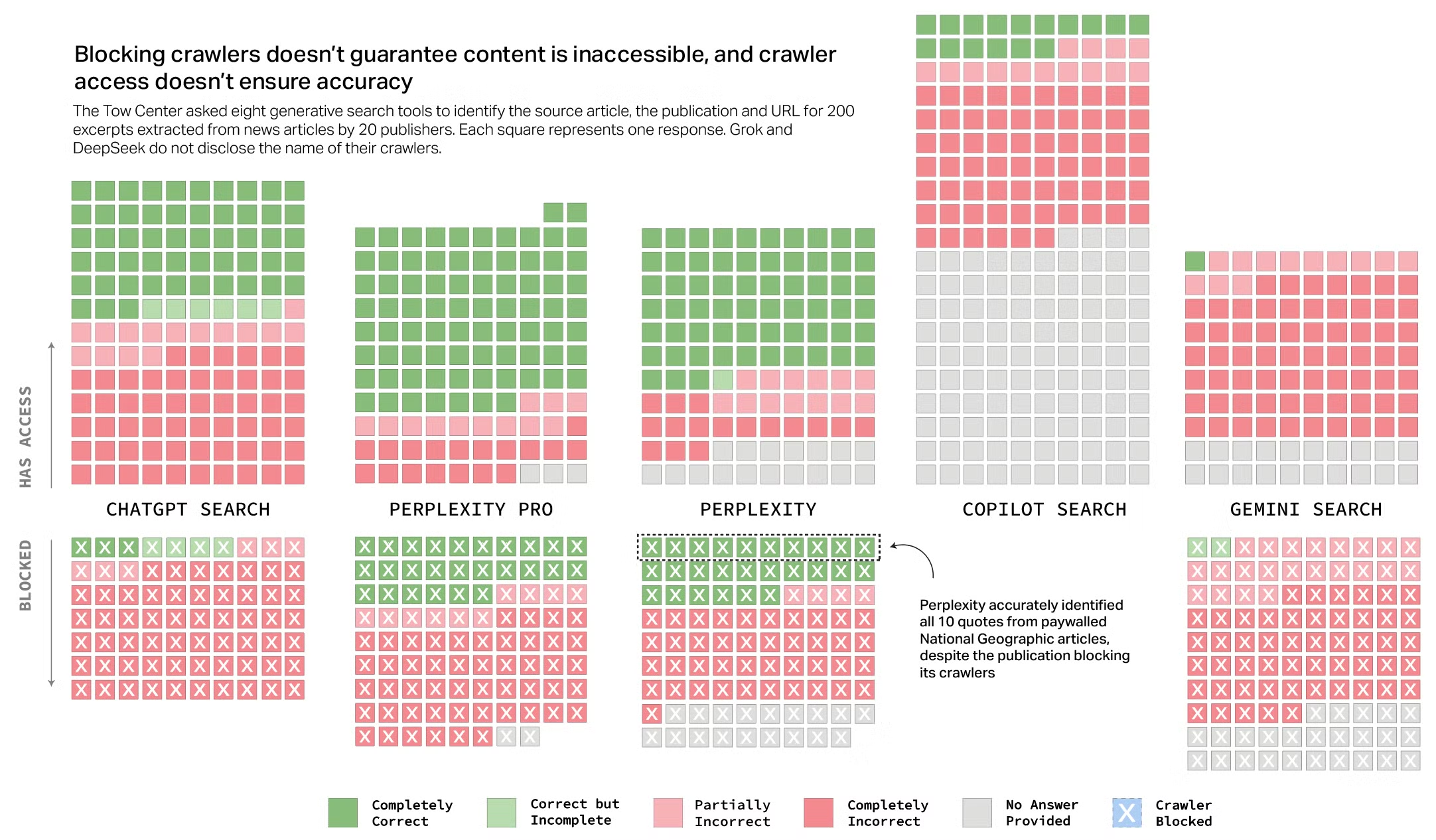

First, let’s talk about how the Tow Center put these AI search engines through the wringer. The eight chatbots in the study included both free and premium models with live search functionality (the ability to access the live internet):

- ChatGPT Search

- Perplexity

- Perplexity Pro

- DeepSeek Search

- Microsoft Copilot

- Grok-2 Search

- Grok-3 Search

- Google Gemini

This study was primarily about AI chatbots’ ability to retrieve and cite news content accurately. The Tow Center also wanted to see how the chatbots behaved when they could not perform the requested task.

To put all of this to the test, the researchers selected 10 articles from 10 different publishers, then chose an excerpt from each article and gave it to each chatbot. They then asked the chatbot to do simple things, such as identify the article’s headline, original publisher, publication date, and URL.

The illustration above shows what that looked like.

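Each chatbot response was then placed into one of six buckets. The “three attributes” below refer to what the study graded: whether the chatbot found the correct article, the correct publisher, and the correct URL.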

- Correct: All three attributes were correct.

- Correct But Incomplete: Some attributes were correct, but the answer was missing information.

- Partially Incorrect: Some attributes were correct, while others were incorrect.

- Completely Incorrect: All three attributes were incorrect and/or missing.

- Not Provided: No information was provided.

- Crawler Blocked: The publisher disallows the chatbot’s crawler in its robots.txt.
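
The bucketing above can be sketched in a few lines of Python. This is only an illustration, not the Tow Center's actual grading procedure: the function name `classify_response` is hypothetical, each attribute is assumed to be marked `True` (correct), `False` (incorrect), or `None` (missing), and a response with nothing at all is assumed to count as "Not Provided" rather than "Completely Incorrect". "Crawler Blocked" is left out because it describes the publisher's robots.txt, not the answer itself.

```python
from typing import Optional, Sequence


def classify_response(attributes: Sequence[Optional[bool]]) -> str:
    """Assign a bucket to one chatbot response.

    Each entry is True (correct), False (incorrect), or None (missing).
    The tie-breaking order below is an assumption made for this sketch.
    """
    n_correct = sum(a is True for a in attributes)
    n_wrong = sum(a is False for a in attributes)
    n_missing = sum(a is None for a in attributes)

    if n_missing == len(attributes):
        return "Not Provided"            # nothing was returned at all
    if n_correct == len(attributes):
        return "Correct"                 # every attribute is right
    if n_correct == 0:
        return "Completely Incorrect"    # all wrong and/or missing
    if n_wrong == 0:
        return "Correct But Incomplete"  # some right, the rest missing
    return "Partially Incorrect"         # some right, at least one wrong


if __name__ == "__main__":
    print(classify_response([True, None, True]))
    print(classify_response([True, False, None]))
```

Under these assumptions, a response with two correct attributes and one missing lands in "Correct But Incomplete", while a single wrong attribute alongside a correct one moves it to "Partially Incorrect".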

Not Just Wrong, “Confidently” Wrong

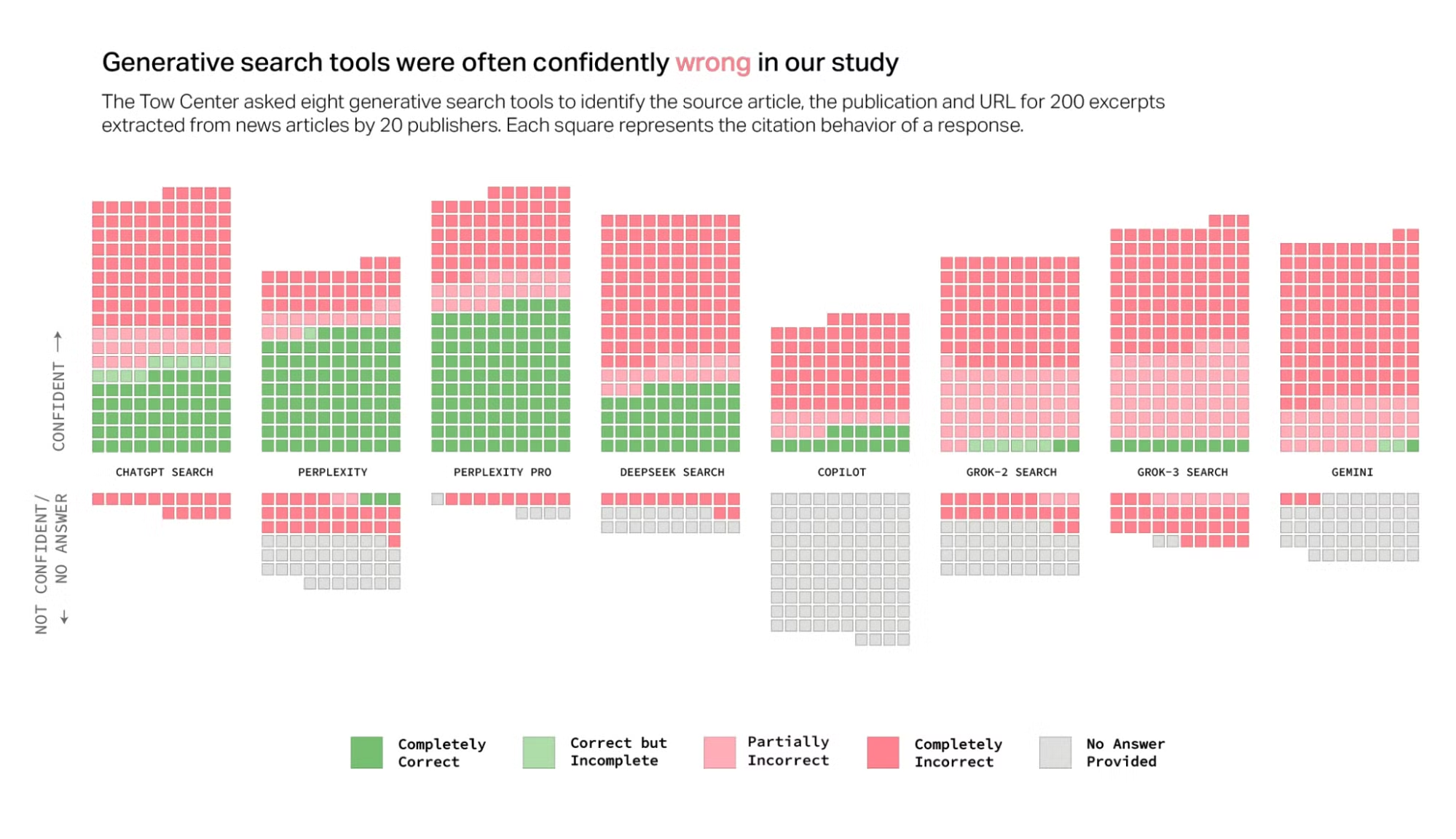

As you’ll see, the AI search engines were wrong more often than not, but the arguably bigger issue is how they were wrong. Regardless of accuracy, chatbots almost always respond with confidence. The study found that they rarely use qualifying phrases such as “it’s possible” or admit to not being able to execute the command.

The graphic above shows the accuracy of the responses as well as the confidence with which they were given. As you can see, almost all of the responses are in the “Confident” zone, but there’s a lot of red.

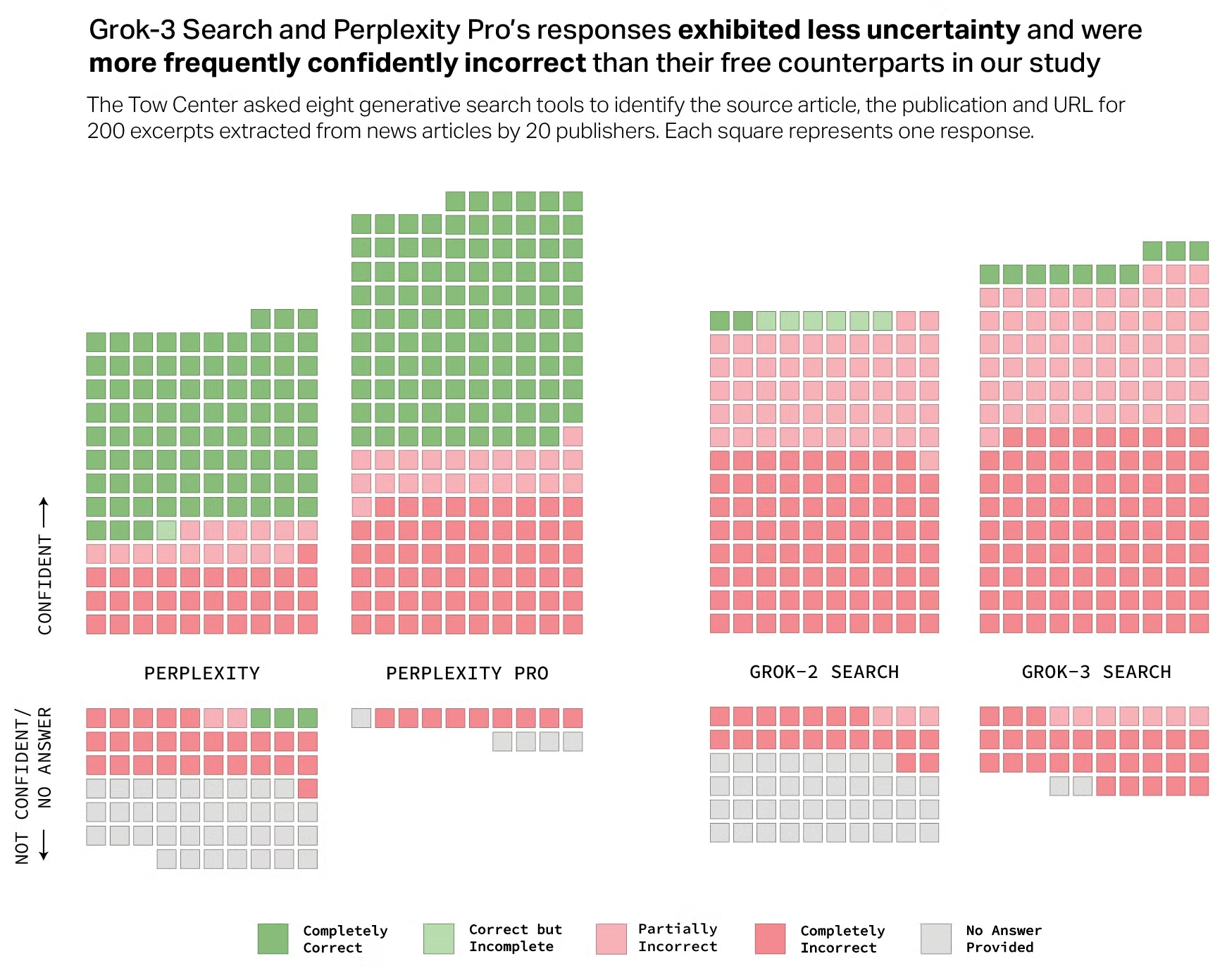

Grok-3, for example, gave “confidently incorrect” or “partially incorrect” answers a whopping 76% of the time. Keep in mind that Grok-3 is a premium model that costs $40 per month, and it performed worse than its free Grok-2 counterpart.

The same can be seen with Perplexity Pro vs. Perplexity. Paying for a premium model ($20 per month in the case of Perplexity Pro) doesn’t necessarily improve accuracy, but the premium model does seem to be more confident about being wrong.

Licensing Deals & Blocked Access Don’t Matter

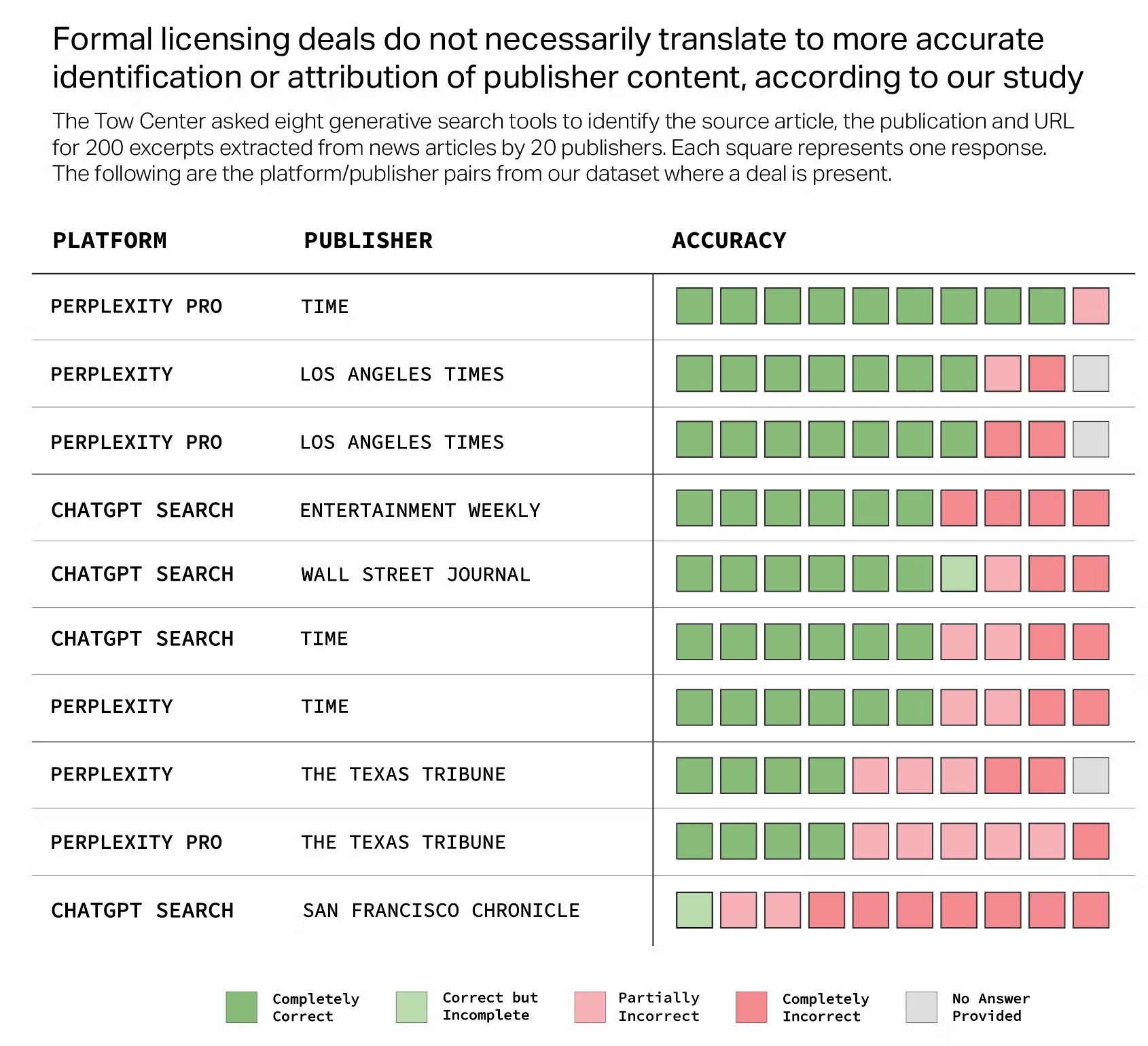

Some AI search engines have licensing deals that permit them access to specific publications. You would assume that the chatbots would be great at accurately identifying the information from those publications, but that wasn’t always true.

The chart for this section shows the eight chatbots alongside the publishers they have licensing deals with. As a reminder, they were asked to identify the article’s headline, original publisher, publication date, and URL. Most of the chatbots were able to do this with a high level of accuracy, but some failed. ChatGPT Search, for example, was wrong 90% of the time when dealing with the San Francisco Chronicle, a publication it has a partnership with.

On the flip side, some publications have blocked AI search engines from accessing their content. However, the study showed that these blocks didn’t always work in practice. A few of the search engines seemed not to respect them.

Perplexity, for example, accurately identified all 10 quotes from National Geographic, even though the site is paywalled and blocks crawlers. And that covers only the correct answers: even more of the chatbots not only accessed blocked websites but also provided inaccurate information from them. Grok and DeepSeek are not shown in the graphic since they don’t disclose their crawlers.
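
To see why a block can fail in practice, it helps to look at how a robots.txt rule is checked. The sketch below uses Python's standard `urllib.robotparser` to test whether a crawler is allowed to fetch a URL. The crawler names `ExampleBot` and `OtherBot`, the `example.com` URL, and the robots.txt contents are all made up for illustration. The key point is that this check runs on the crawler's side: robots.txt is a request, and nothing technically stops a crawler that skips the check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one named crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse the text directly, no network call
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    story = "https://example.com/story"
    print(is_allowed(ROBOTS_TXT, "ExampleBot", story))  # the blocked crawler
    print(is_allowed(ROBOTS_TXT, "OtherBot", story))    # any other crawler
```

A well-behaved crawler would run a check like this before every fetch and skip disallowed pages. It also explains why chatbots that don’t disclose their crawler names are hard to evaluate: a publisher can’t write a rule for a user agent it doesn’t know.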

So, what does this all mean for you? Well, it’s clear that relying solely on AI search engines for accuracy is a risky proposition. Even premium models with licensing deals can confidently spew misinformation. It’s a stark reminder that critical thinking and cross-referencing remain essential skills in the AI age.

Be sure to check out the full study at the Columbia Journalism Review for more fascinating (and alarming) findings.