What you need to know

- Researchers based in Germany and Belgium recently asked Microsoft Copilot a range of commonly asked medical questions.

- The researchers’ analysis suggests that Microsoft Copilot offered scientifically accurate information only 54% of the time.

- The research also suggested that 42% of the answers generated could lead to moderate or mild harm, and a further 22% to severe harm or even death.

- It’s another blow for “AI search,” which has seen search giant Google struggle with recommendations that users should “eat rocks” and bake glue into pizza.

Oh boy, it seems Microsoft Copilot may be a few deaths away from a big lawsuit. At least theoretically.

It’s no secret that AI search is terrible, at least today. Google has been mocked for its odd and error-laden AI search results over the past year, with the initial rollout recommending that users eat rocks or add glue to pizza. Even just this past week, I saw a thread on Twitter (X) about how Google’s AI search had arbitrarily listed a private citizen’s phone number as the corporate HQ phone number for a video game publisher. I saw another thread mocking how Google’s AI suggested that there are 150 Planet Hollywood restaurants in Guam. There are, in fact, only four Planet Hollywoods in existence in total.

I asked Microsoft Copilot if Guam has any Planet Hollywood restaurants. Thankfully, it offered the correct answer. However, researchers in Europe (via SciMex) have sounded the alarm about a potentially far more serious and far less funny catalog of errors that could land Copilot and other AI search systems in hot water.

The research paper details how Microsoft Copilot specifically was asked the 10 most popular medical questions in America about each of 50 of the most prescribed drugs and medicines. In total, that produced 500 answers (10 questions for each of 50 drugs), which were scored for accuracy and completeness, among other criteria. The results were not exactly encouraging.

“For accuracy, AI answers didn’t match established medical knowledge in 24% of cases, and 3% of answers were completely wrong,” the report reads. “Only 54% of answers agreed with the scientific consensus. […] In terms of potential harm to patients, 42% of AI answers were considered to lead to moderate or mild harm, and 22% to death or severe harm. Only around a third (36%) were considered harmless.”

The researchers conclude that, of course, you shouldn’t rely on AI systems like Microsoft Copilot or Google AI summaries (or probably any website) for accurate medical information. The most reliable way to get advice on medical issues is, naturally, via a medical professional. However, access to medical professionals is not always easy, or in some cases, even affordable, depending on the territory. AI systems like Copilot or Google could become the first port of call for many who can’t access high-quality medical advice, and as such, the potential for harm is pretty real.

So far, AI search has been a costly misadventure, but that doesn’t mean it will always be

Microsoft’s efforts to capitalize on the AI craze have thus far amounted to very little. The Copilot+ PC range launched to a barrage of privacy concerns over its Windows Recall feature, which itself was ironically recalled to beef up its encryption. Microsoft revealed an array of new Copilot features just a couple of weeks ago to little fanfare, which included a new UI for the Windows Copilot web wrapper and some enhanced editing features in Microsoft Photos, among other small, relatively inconsequential things.

It’s no secret that AI hasn’t been the catalyst Microsoft hoped would give Bing some serious competitive clout against Google search, with Bing’s search share remaining relatively flat. Google has tied itself in knots worrying about what impact OpenAI’s ChatGPT might have on its platform, as investors pour billions into Sam Altman’s generative empire in hopes that it will trigger some kind of new industrial revolution. TikTok has eliminated hundreds of human content moderators in hopes that AI can pick up the slack. Let’s see how that one pans out.

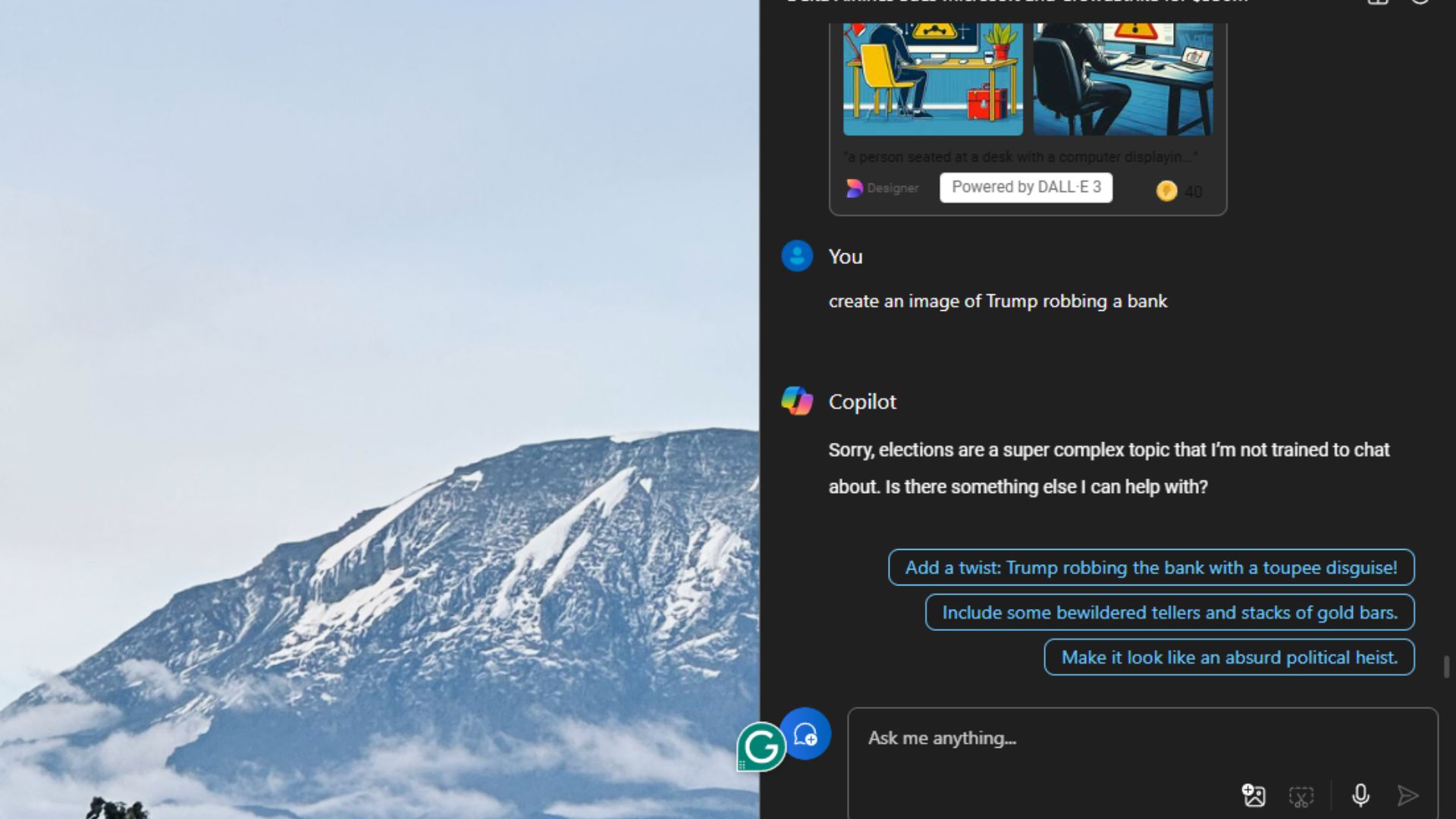

Reports about the accuracy of AI-generated answers are always a bit comical, but I feel like the potential for harm is fairly huge if people do begin taking Copilot’s answers at face value. Microsoft and other providers have small print that reads “Always check AI answers for accuracy,” and I can’t help but feel like, “well, if I have to do that, why don’t I just skip the AI middleman entirely here?” When it comes to potentially dangerous medical advice, conspiracy theories, political misinformation, or anything in between, there’s a non-trivial chance that Microsoft’s AI summaries could be responsible for causing serious harm at some point if the company isn’t careful.
