WWDC is on the horizon, which will be Apple’s venue to announce upcoming changes to iOS 19. Here’s what we want Apple to include as part of the iPhone OS refresh.

Apple will be holding its annual Worldwide Developers Conference from June 9 to June 13. It’s the event where Apple details the new features and alterations it will be making to its various operating systems, with iOS being the main talking point.

This has frequently involved the detailing of changes to existing iOS features, as well as new features and even big strategy shifts. The WWDC 2024 introduction of the Apple Intelligence initiative was massive news at the time, and still is, even if Apple has fumbled the rollout.

Whatever Apple announces during WWDC won’t be used by the public until the fall, accompanying the launch of the iPhone 17 collection. Even then, not everything will be available at that point, with features often expected to be introduced in the months that follow.

However, there will be some elements that become immediately usable for developers in Apple’s beta-testing program. Shortly after the Monday keynote address, Apple tends to issue the initial developer betas, giving a hint of what to expect in September.

While Apple has its internal list of things it will be adding to iOS 19, there are many items that iOS power users will want Apple to address. AppleInsider included.

Here’s what we want Apple to change in iOS 19, if not necessarily what we’ll actually get.

More Apple Intelligence, Faster

It’s inevitable that Apple Intelligence will be a big feature of WWDC. It was a massive part of 2024’s event, and it’s something that Apple intends to further develop.

However, it’s got a massive amount of catching up to do. Not just with the market at large, but for its own goals.

Apple came under fire over the year due to its glacial rollout of Apple Intelligence features. Many elements have been caught up in delays, to the level that the Better Business Bureau stepped in to complain about Apple’s promotion of it all.

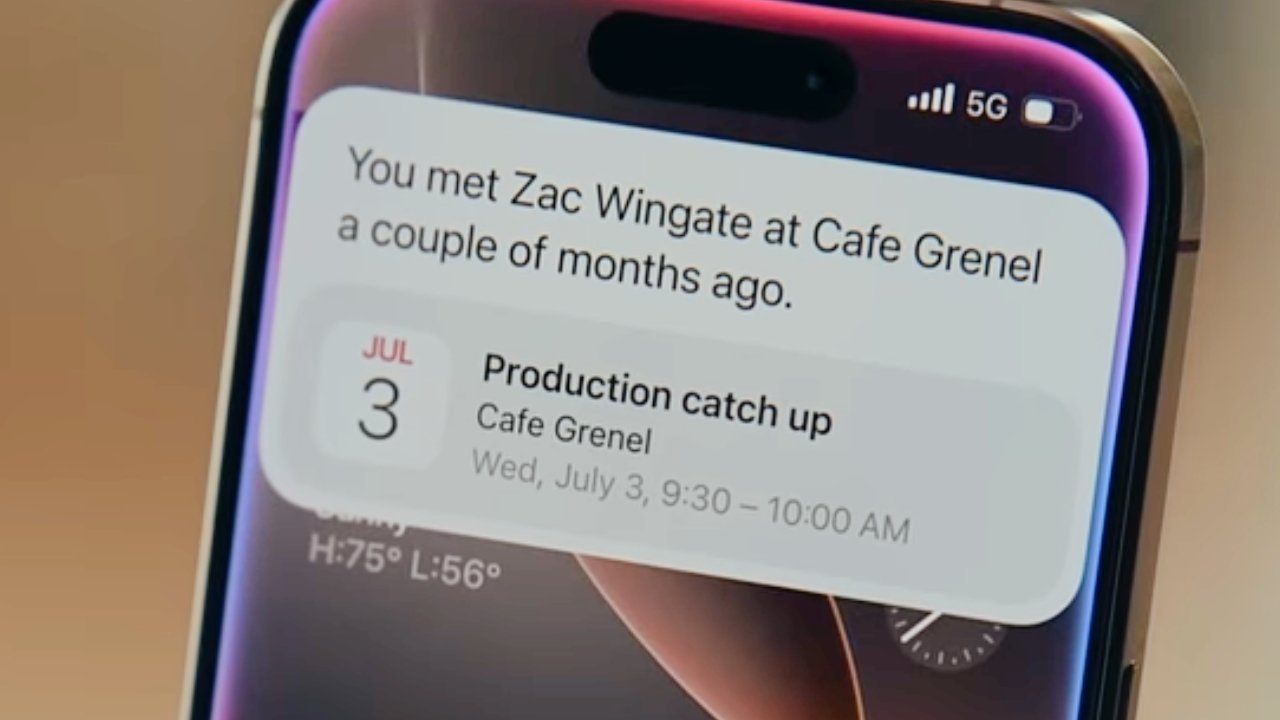

Then there is Siri to discuss, as Apple promised an overhauled version that could understand context to a high degree. We were told Siri would one day understand questions like “When’s Mom’s flight arriving at the airport?” but it’s still not a reality.

Apple has taken steps to rectify the situation, including an internal reshuffle of how Siri and Apple Intelligence features are managed. It’s even supposedly trying to avoid promoting upcoming features until they’re a short time away from launching.

We can expect a mea culpa from Apple on the whole Apple Intelligence and Siri debacle during WWDC. We’ll probably get assurances that updates will be arriving fast and steadily.

But we can also anticipate that Apple will be promoting where Apple Intelligence will be going in the future. Possibly by opening it up to third-party apps.

Let’s just hope that whatever it says is on the way actually arrives in a timely fashion.

Continuity Camera, but with iPadOS

The Continuity Camera function of the Apple ecosystem is an extremely useful item, especially when it comes to video conferencing over Zoom. The iPhone’s rear camera is fantastic, and better than quite a lot of webcams on the market.

For Mac users who don’t have the luxury of owning a MacBook Air or MacBook Pro with a built-in camera in the display, it also saves them from having to buy a webcam in the first place.

This is very useful for video-related needs on a Mac. So, why not use the same concept elsewhere, like on an iPad?

You can use Continuity Camera to access an iPad or iPhone’s rear camera on a Mac, but you cannot use an iPhone’s rear camera in the same way for an application on an iPad.

The iPad has perfectly adequate front-facing and rear-facing cameras, certainly, so extending Continuity Camera to be accessible by iPadOS applications doesn’t seem necessary.

However, it could be useful in cases where you’re recording a video on an iPhone. You can record on the iPhone and transfer it to the iPad afterward, but you pretty much have to get everything set up on the iPhone itself.

Being able to remotely manage the iPhone’s camera from another device, be it another iPhone or an iPad, would be very handy.

Indeed, you have that to a degree with Final Cut Camera and using it with Live Multicam on Final Cut Pro for iPad. However, not everyone wants to pay for Final Cut Pro’s subscription, or even wants to use that tool.

Having it as a separate feature could be a godsend for wannabe YouTube moguls to upgrade their productions.

And no, previewing the camera view on an Apple Watch just isn’t enough.

Advanced Camera settings accessible from within the app

The stock Camera app walks the fine line between being feature-filled and being too cluttered. You can enable plenty of functions and change essential settings while using the app, all so you can get the shot you want with minimal interference.

However, while many of the Camera app’s settings are easy to reach, there’s quite a lot more you can change. The problem is that those options are buried inside the Settings app.

You want to enable or disable the grid or level indicator, or to stop the screen mirroring the image when using the front-facing camera? They’re options in Settings.

There’s also no way to change your formats or adjust Photographic Styles unless you go to Settings. If you want to turn off lens correction or macro control, you have to do it there too.

The request here isn’t necessarily to add all of these as options within the Camera app itself. We’d be happy with an option in Camera that takes you to the Camera page in Settings.

For those who spend a lot of time doing photography and videography and take it seriously, it’s an addition that will be extremely useful.

More button customization

Apple’s introduction of the Action Button did more than just replace a finicky mute toggle with a pressable item. It opened up a world of personalization, since you can set it to open up one of a selection of apps, and in various ways too.

While it is one of the more adjustable physical controls on the iPhone, there are relatively few other opportunities available.

The main ones are being able to switch out the Lock Screen software buttons for other tools, and to enable the volume up button to take a burst of pictures in the Camera. If you have a newer iPhone with Camera Control, there are some other options, but it’s still very limited.

What would be nice is if you could make more changes to how the buttons react to different presses. For example, setting the volume buttons to open apps with a long press, but only actually change the volume with short presses.

Providing a wider array of options for what each button press does would also be welcome. Sure, you can configure Camera Control to open the camera, the QR code scanner, or Magnifier, but why are there not as many options as the Action Button’s settings?

Admittedly, this could get in the way of some existing press-based functions. But it would be good to have the options available if we really want them.

iPhone split screen

Let’s face it, iPhone screens are pretty large, especially if you pick up a model like the iPhone 16 Pro Max. With a 6.9-inch display and an equally high resolution, there’s a lot of digital space to play with.

You could say the same thing about the 6.3-inch Pro model, or even the 6.1-inch iPhone 16e. That’s not even taking into account the potentially large display of the long-rumored iPhone Fold.

With screen sizes that are not really far off the 8.3-inch iPad mini panel, it’s safe to say that iPhone users have a lot of display to work with. So much so that it should be used more efficiently.

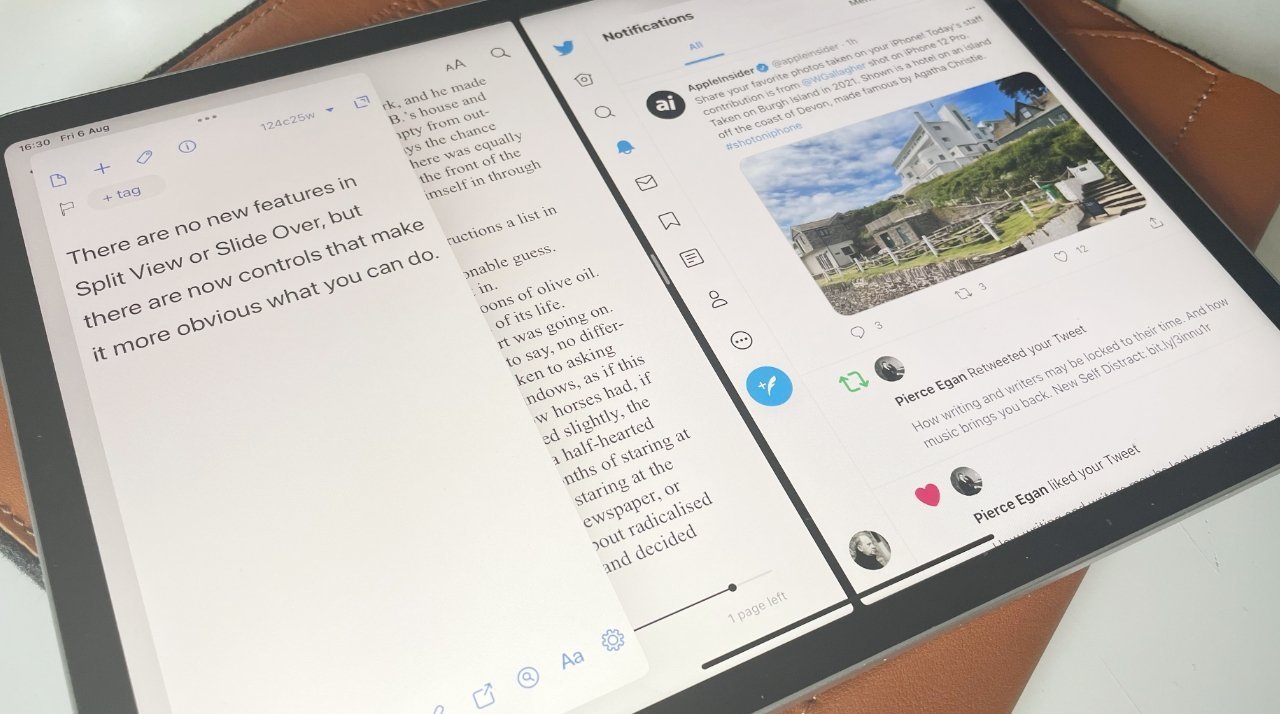

The iPad and iPad Pro have features like Stage Manager, Split View, and Slide Over to allow users to work with two apps at the same time. Given the processing performance and the display size, surely some form of split-screen mode could be brought over to iOS.

You can technically work with two apps at the same time, with picture-in-picture letting you watch a video on top of another app. That’s fine, but it would be nice to be able to fully use two apps on the screen at once.

It’s not hard to imagine an app taking up the top or bottom half of the iPhone’s display and still being usable alongside another. It probably isn’t hard to make it a reality, either.

Clipboard manager

Apple’s ecosystem is fantastic, especially when it comes to getting data from one device to another. It’s trivial to use Universal Clipboard to copy text or an image on a Mac into a message you’re writing on an iPhone.

This is great for the most part, until you need to handle multiple bits of data at once. You can only copy and paste one thing at a time on Apple’s hardware, be it Mac, iPad, or iPhone.

To be fair, this is something that isn’t really an issue for iPhone users. Unlike the iPad or Mac, you’re not necessarily going to be doing as much productive work on the smaller screen, so there’s no real need to juggle multiple clipboard items.

Even so, it would be quite useful to have some form of clipboard manager tool to allow for multiple items to be held in said clipboard. Sure, it would be more useful for the bigger display hardware than the iPhone, but if you’re doing it there, you could do it in iOS too for feature parity.

You can get clipboard managers on macOS, though on iOS, you have relatively few available, with Paste being one of the main options out there for iPhone users.

It’s a feature that you would’ve expected Apple to implement in some way in its ecosystem by now.

App Library folders on the Home Screen

Apple has, in successive iOS refreshes, increased the amount of personalization a user can do to their iPhone’s Home Screen. Nowadays, users can include widgets of various sizes, introduce gaps and place icons wherever they want, and even customize the color scheme of the icons themselves.

This is all well and good, but Apple could go one step further.

If you swipe to the far right of the Home Screen, you’re greeted with the App Library. This section categorizes apps, and does so in a fairly neat way.

Each category square is effectively a folder, with three or four commonly-used apps from that category tappable, or three tappable apps and a quadrant with tiny app icons representing others in the folder. Tap this, and you see the full list within the category.

This folder view would be very useful on the main Home Screen pages, as an alternative to the existing folder setup that requires you to enter the folder to select an app.

It’s a little redundant, as you can consider each Home Screen page as a folder in its own right, but it would give users more ways to organize their icons.

For those of us on the AppleInsider editorial team who have hundreds of apps installed, we need all the help we can get.

Food Logging

Apple has a deep-seated interest in the health of its users. With the Apple Watch and the Health app keeping tabs on the user’s fitness and vitals, it’s already doing a lot to promote a healthier lifestyle.

Indeed, there have been rumors of changes arriving in the Health app in the form of an AI agent. One that could provide feedback on ways for users to improve their health, like a coach.

However, one area that Apple could muscle in on is food tracking. It’s one thing to have tools available for monitoring how much a person works out, but it would be beneficial to track the nutrition side of things, too.

Indeed, the Health app has an entire section for nutrition built into it. Except it doesn’t offer a way to track food, as it relies on third-party services like MyFitnessPal.

A food tracking app would help fill out this category, as well as giving any potential AI coach more data to work with.

Automatic Memoji creation

The push for Apple Intelligence has, so far, included a few image generation elements. Give some prompts, and you can get custom emoji or even entire images.

Image Playground, one of the most well-known elements of Apple Intelligence so far, also uses images supplied by the user as the basis for its creations.

If Image Playground can recreate a person’s face from a photograph, maybe this could be expanded to another area.

Memoji is the expansion of Animoji, in that users can create 3D avatar faces. This process uses a menu system where you select each element of the face from a selection of components, resulting in anything from a broadly similar caricature to something wildly different depending on your choices.

With that in mind, it seems plausible that Apple’s system for recognizing and copying a face in Image Playground could be leveraged for Memoji. One where the user offers an image of a person to recreate, and Memoji automatically selects the most appropriate facial features.

Even better, imagine updating a Memoji of yourself by telling iOS to refresh the model it has for you, and it does so by scanning your face.

Sure, there’s a lot of fun to be had in selecting components yourself, but not everyone wants to fuss around when they just want a quick computer-generated version of their own face.