Generative AI has come a long way over the past few months, and everyone wants a piece of the pie. Google had a rough start in the generative AI scene, but its newly announced Gemini 2.0 family of models is changing that.

Google calls Gemini 2.0 its most advanced AI model yet, though for now it is rolling out in stages. Building on Gemini 1.0 and 1.5, the new model adds enhanced multimodal capabilities, including native image and audio output. Google says this makes it possible to build more sophisticated AI agents that can understand and interact with the world in a more human-like way.

Gemini 2.0 Flash, the first model in the family, is available to developers and some Gemini Advanced users, with a broader rollout planned for the near future. According to Google, it outperforms Gemini 1.5 Pro on key benchmarks while running at twice the speed. If you have Gemini Advanced, you might already have access to it. Once you fire it up, though, you’ll see a prominent warning that the model is “experimental” and might not work as expected.

It does fumble some things at the moment. I tried it out for a while, and it tends to give overcomplicated answers to otherwise simple queries, but beyond that it’s fine and runs at okay speeds. Still, I wouldn’t trust it with anything important yet. It’s experimental, after all, which means it needs more training and real-world use before it’s ready for most people.

We don’t know when Gemini 2.0 Flash will leave the “experimental” stage and supersede the Gemini 1.5 family of models. A bigger Gemini 2.0 Pro model should also arrive down the road, and like 2.0 Flash, it will probably launch as an experimental model first.

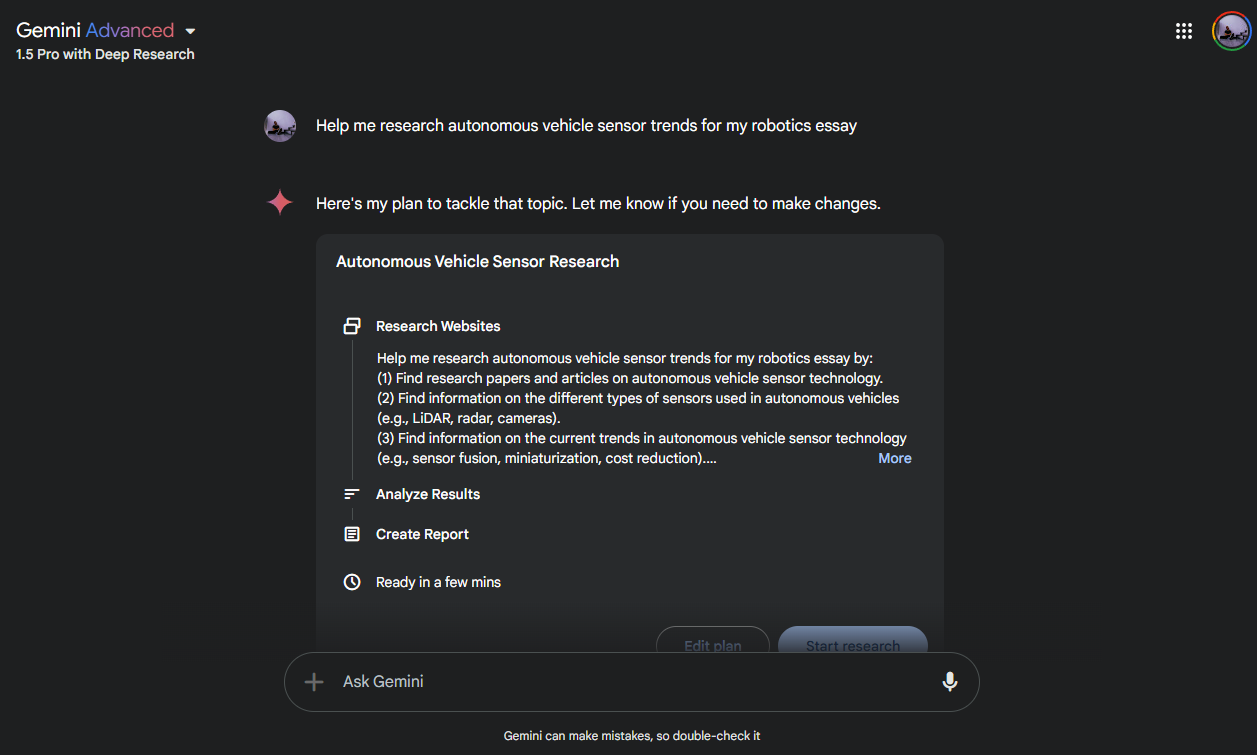

One improvement you can use right now, however, is a new “Deep Research” version of Gemini 1.5 Pro. It’s similar to the new “thinking” behavior in ChatGPT, where the model checks its answers repeatedly for accuracy instead of returning the first thing it generates. Deep Research goes one step further, though: before it starts, it drafts a step-by-step plan describing what it will do and how it will help you research.

It can search the web, analyze what it finds, make calculations, and check all of it for accuracy, culminating in a long-form report that draws on several sources (citing each one) to answer your query. You can even revise the research plan before Gemini spends several minutes researching and writing.

Deep Research is pretty cool, but because it prioritizes thorough, accurate research over a fast answer, it can take a long time. Each of the three prompts I tried took at least 5 minutes to return a proper answer, which you can read in Gemini directly or export to Google Docs (handy if you plan to use it for something like an essay). It can still make mistakes even while checking its answers a thousand times over, so we can’t stress this enough: if you use it, double-check what it gives you, and don’t take the output at face value.

Both the experimental version of Gemini 2.0 Flash and the Deep Research version of Gemini 1.5 Pro are now available for Gemini Advanced users.