It hasn’t been too long since Google released its Gemini 2.0 family of models, but the company is already moving ahead with what’s next. Google has just announced the Gemini 2.5 family, starting with Gemini 2.5 Pro. It seems rushed, but we’ll allow it.

Gemini 2.5 is Google’s newest generation of artificial intelligence models. The initial rollout features an experimental version of Gemini 2.5 Pro, which the company positions as a significant advance in AI reasoning and coding capabilities over Gemini 2.0 and even over competing models.

The big thing to note here is that Gemini 2.5 is Google’s first full “chain-of-thought” model, meaning it reasons through multiple steps and checks its responses for accuracy before outputting them. Gemini 2.0 already offered this through the 2.0 Flash Thinking model (which is also experimental), but Gemini 2.5 has no non-chain-of-thought version at all. It will sometimes take longer to respond to queries, but responses should be more accurate and, hopefully, contain fewer hallucinations. As it turns out, hallucination is still a huge problem with AI, even with how advanced large language models have gotten.

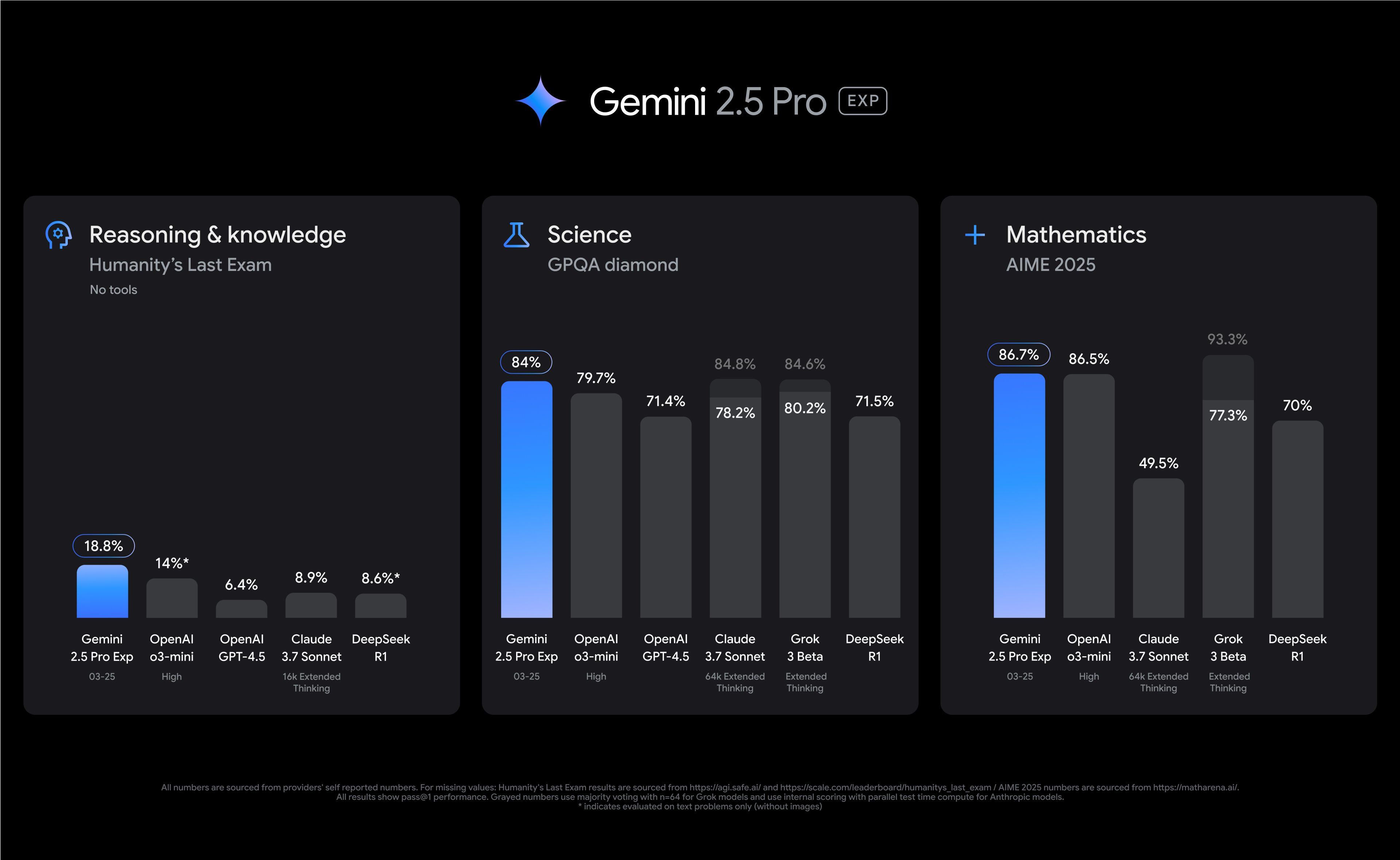

The generational gains Google is claiming here look pretty good. In areas requiring advanced reasoning, the company says Gemini 2.5 Pro performs strongly on benchmarks such as GPQA (Graduate-Level Google-Proof Q&A) and AIME 2025 (American Invitational Mathematics Examination problems). It also reportedly scored 18.8% on Humanity’s Last Exam, a challenging dataset designed by subject matter experts, when tested without external tool use. On top of that, the model debuted in first place on the LMArena leaderboard, a platform that ranks AI models based on human preference evaluations, ahead of recently released models like OpenAI’s GPT-4.5 and xAI’s Grok 3.

Google claims that Gemini 2.5 Pro excels at generating web applications, agentic code (code designed to perform tasks autonomously), code transformation, and code editing. On the SWE-Bench Verified benchmark, which evaluates agentic coding skills, Gemini 2.5 Pro achieved a score of 63.8% using a custom agent setup. To further flaunt its capabilities, the company said the model can generate executable code for a video game from a single-line prompt. I tried exactly that last week when the new Canvas feature launched and it kind of sucked, so I’ll need to try it again with the new model to see whether the claim holds up.

Gemini 2.0 was first released publicly in late January, so it hasn’t even been two full months since that model family arrived. As a fun note, Google has also completely scrubbed the experimental version of Gemini 2.0 Pro and replaced it with Gemini 2.5, so unless a stable version of that model is coming soon, we could technically say the short-lived Gemini 2.0 family never had a stable “advanced” model at all. Yes, things moved that quickly. With everyone wanting to claim the AI throne and competition ramping up, rapid-fire model releases will likely become an increasingly common sight.

The model is currently available in an experimental stage for Gemini Advanced users, so if you have a subscription, you can try it out now. If you don’t see it yet, it might take a few more days to show up. We’re not sure when it will become stable, or when we might see a smaller Gemini 2.5 Flash model for free users.

Source: Google