It won’t be winning Olympic gold medals any time soon, but a team of researchers from Google’s DeepMind has developed an AI-driven robotic system that matches the capabilities of an amateur-level human table tennis player and can handily outperform beginner-level players.

“Achieving human-level speed and performance on real world tasks is a north star for the robotics research community,” the team wrote in a study titled “Achieving Human Level Competitive Robot Table Tennis,” published Thursday. “This work takes a step towards that goal.” Their “learned robotic agent” is designed to achieve human-level performance in accuracy, speed, and adaptability to its opponent. It combines a library of low-level skills, each pertaining to a specific move or shot in table tennis (such as a forehand topspin, backhand targeting, or forehand serve), with a high-level controller that understands the strengths, weaknesses, and limitations of each skill and selects the optimal shot for the given situation.

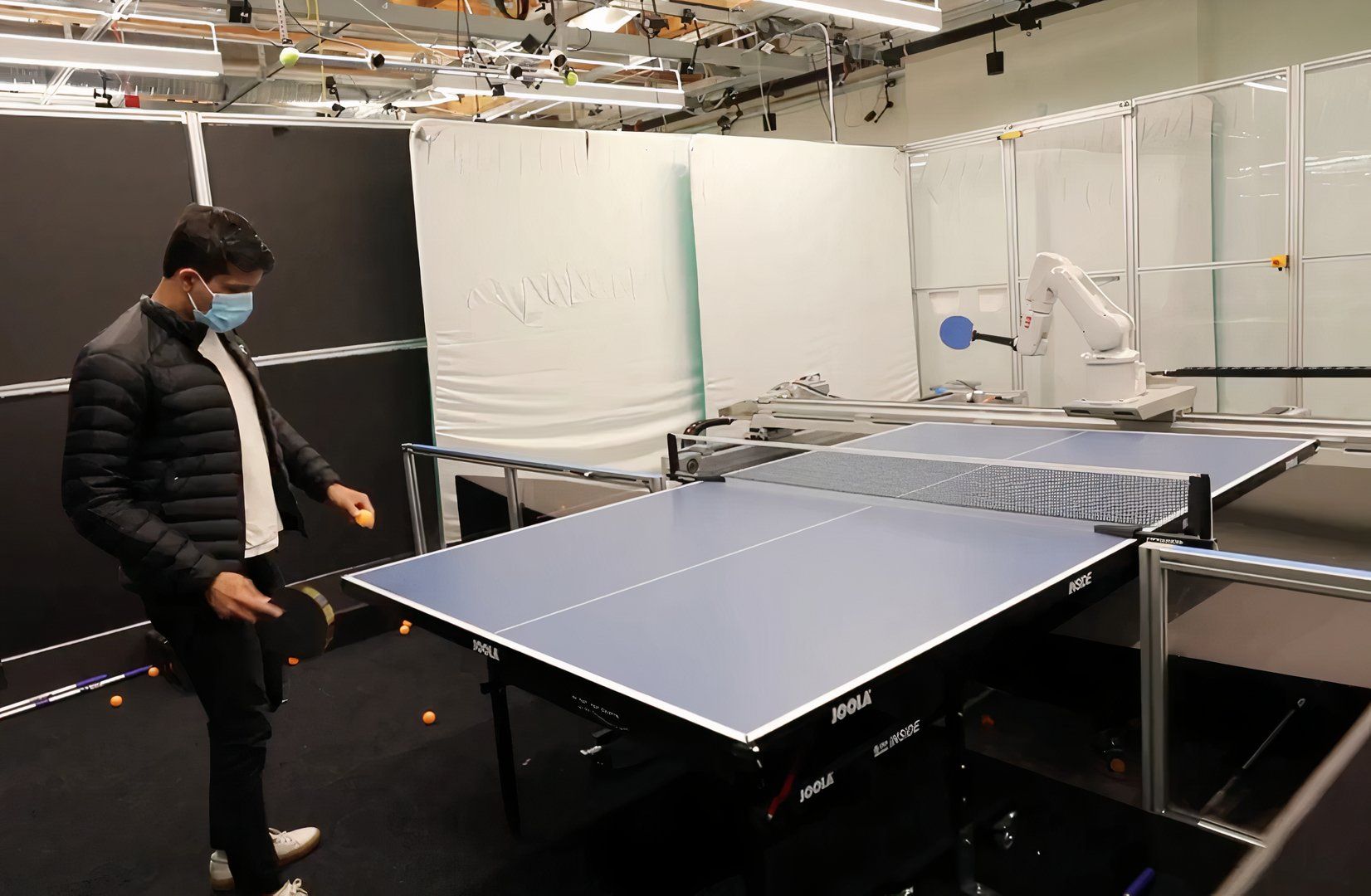

The team tested the robot’s table tennis prowess by pitting it against 29 players ranging in skill from beginner to advanced+, as determined by a professional table tennis coach the researchers hired. Each player competed in three games against the robot. Because the robot has only one arm, only the humans served.

Despite its lack of extra appendages, the robot went undefeated against the beginner-level players. It lost consistently against both the advanced and advanced+ tiers and won about half of its games against intermediate-level players. Overall, the robot won 46 percent of its games and 45 percent of its matches against the entire field of opponents. What’s more, the study participants actually enjoyed playing with the machine, describing it as “fun” and “engaging.”

“This is the first robot agent capable of playing a sport with humans at human level and represents a milestone in robot learning and control,” the researchers argued. “However, it is also only a small step towards a long-standing goal in robotics of achieving human level performance on many useful real world skills.”

Google has long sought to develop machine learning and embodied AI systems that can match humans on the field of play, with varying levels of success. In 2016, its AlphaGo program famously outperformed human champions at Go, prompting one to retire from the game. Elsewhere, in 2022, the University of Zurich’s Swift AI beat 2019 Drone Racing League champion Alex Vanover, 2019 MultiGP Drone Racing champion Thomas Bitmatta, and three-time Swiss champion Marvin Schaepper at their own game, the first time an AI had outperformed humans in a physical sport. And earlier this year, DeepMind debuted a duo of soccer-playing automatons.

Source: Google DeepMind