Summary

- AI can decode brain activity without invasive surgery, so mind-reading no longer requires a physical implant.

- Semantic decoders can translate brain activity into coherent sentences, pointing toward future thought-to-text interfaces.

- Current mind-reading tech is promising but imperfect: it requires extensive training and sophisticated lab equipment, and it raises privacy concerns.

Think of a sentence and it instantly shows up on your screen. You didn’t type or say a word. That sounds like something out of a Black Mirror episode, right?

Yet AI systems that can do just that are already being developed, and they don’t need Elon Musk’s invasive brain chip.

How AI Mind Reading Works

You might have heard of Neuralink, Elon Musk’s brain-implant company. Its implant is a small chip that picks up neural activity and wirelessly sends it to a computer. The technology is still in its early stages, but it can already do some pretty impressive things.

As seen in recent demos, it lets a paralyzed person control a cursor on a screen with just their mind. The company hopes that this chip will one day allow paralyzed people to walk and even make telepathy possible.

The thing is, Neuralink requires risky brain surgery. That kind of implant makes sense for people with severe disabilities, but it’s probably not worth it for most people.

Your Brain Sends Out a Secret Broadcast

Surprisingly enough, you don’t need to insert a chip into the brain to read its activity. You can pick up brain waves from outside the skull instead.

When a neuron in your brain fires, it produces a tiny electric current. On its own, that current is far too faint to measure from outside your head, but neurons tend to sync up and fire together.

When thousands of them fire together in rhythm, the combined electrical signal is strong enough to be detected on the scalp, much like a stadium full of people clapping in sync creates a pulse you can feel from a distance. As a result, your scalp is buzzing with electrical activity all the time.
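To make the stadium analogy concrete, here is a toy Python sketch. It is not a model of real neurons: each hypothetical “neuron” is assumed to be a unit-amplitude 10 Hz sine wave, and `summed_peak` is an illustrative name, not a library function. When the sources share a phase, their amplitudes add up linearly; with random phases they largely cancel, so the total grows only on the order of the square root of the count.

```python
import math
import random


def summed_peak(n_neurons: int, synchronized: bool, freq_hz: float = 10.0,
                sample_rate: int = 1000, seed: int = 0) -> float:
    """Peak amplitude of the sum of n unit-amplitude sine-wave 'neurons'.

    Toy assumption: every neuron oscillates at the same frequency.
    Synchronized neurons share one phase; unsynchronized ones get random phases.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    phases = [0.0 if synchronized else rng.uniform(0, 2 * math.pi)
              for _ in range(n_neurons)]
    peak = 0.0
    # Sampling one full cycle of the rhythm is enough to find the peak.
    for i in range(int(sample_rate / freq_hz)):
        t = i / sample_rate
        total = sum(math.sin(2 * math.pi * freq_hz * t + p) for p in phases)
        peak = max(peak, abs(total))
    return peak


print(summed_peak(1000, synchronized=True))   # ~1000: amplitudes add up
print(summed_peak(1000, synchronized=False))  # much smaller: phases cancel
```

With 1,000 sources, the synchronized sum peaks at about 1,000, while the unsynchronized sum comes out far smaller (on the order of √1000 ≈ 32), mirroring why synchronized activity is the part that reaches the scalp.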

That’s what brain waves are: rhythmic patterns that reflect what your brain is thinking and doing in real time. Some patterns signal rest and relaxation, others focus or problem-solving, and some reflect inner monologue. The trick is telling them apart and separating meaningful patterns from noise.
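To illustrate what “telling them apart” can mean, here is a toy Python sketch of one classic technique: measuring how much power a signal carries in different frequency bands. Everything here is an assumption for illustration, including the 256 Hz sampling rate, the noise level, and the hypothetical helper names. The 8–12 Hz alpha band (often linked to relaxed wakefulness) and the 13–30 Hz beta band (often linked to active focus) are conventional EEG ranges. Real decoders combine many channels with trained machine-learning models rather than a single hand-written rule.

```python
import cmath
import math
import random

SAMPLE_RATE = 256  # samples per second (assumed)
DURATION_S = 4     # length of the simulated recording in seconds (assumed)

# Conventional EEG bands in Hz; the labels are illustrative associations only.
BANDS = {
    "alpha": (8, 12),   # often linked to relaxed wakefulness
    "beta": (13, 30),   # often linked to active focus
}


def simulate_eeg(rhythm_hz: float, noise_sd: float, seed: int = 1) -> list[float]:
    """Toy 'scalp recording': one unit-amplitude rhythm buried in Gaussian noise."""
    rng = random.Random(seed)
    n = SAMPLE_RATE * DURATION_S
    return [
        math.sin(2 * math.pi * rhythm_hz * i / SAMPLE_RATE) + rng.gauss(0, noise_sd)
        for i in range(n)
    ]


def power_at(signal: list[float], freq_hz: float) -> float:
    """Power at one frequency, via a single-bin discrete Fourier transform."""
    coeff = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * i / SAMPLE_RATE)
        for i, x in enumerate(signal)
    )
    return abs(coeff) ** 2 / len(signal)


def band_power(signal: list[float], low_hz: int, high_hz: int) -> float:
    """Mean power over the whole-Hz frequencies from low_hz to high_hz inclusive."""
    freqs = range(low_hz, high_hz + 1)
    return sum(power_at(signal, f) for f in freqs) / len(freqs)


def dominant_band(signal: list[float]) -> str:
    """Name of the band with the highest mean power."""
    return max(BANDS, key=lambda name: band_power(signal, *BANDS[name]))
```

Sample by sample, the 10 Hz rhythm is buried in noise with twice its amplitude, yet in the frequency domain it stands out clearly, so `dominant_band(simulate_eeg(10, 2.0))` should report `"alpha"`.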

So that’s the core idea: train AI to interpret those waves and see what it pulls out of the noise. Shockingly, it works.

Lie Still Please, The Machine Is Scanning Your Mind Right Now

In a lab test, a person was read the sentence:

“I don’t have my driver’s license yet.”

They didn’t say or type a word, just listened while a machine scanned their brain (non-invasively).

An AI decoded that scan in real time and produced this output:

“She has not even started to learn to drive yet.”

Note that the output doesn’t repeat the input word for word. Instead, the AI pulls the meaning out of the listener’s thoughts. It’s mind-reading in the most literal sense.

The system is called a “semantic decoder,” and it relies on scans from fMRI machines, which track changes in blood flow linked to neural activity. Participants either listen to stories or are asked to think about stories, and that’s enough for the AI to translate their thoughts.

One participant listened to the line:

“That night, I went upstairs to what had been our bedroom and, not knowing what else to do, I turned out the lights and lay down on the floor.”

The AI looked at their brain activity and translated it as:

“We got back to my dorm room. I had no idea where my bed was. I just assumed I would sleep on it, but instead I lay down on the floor.”

The system goes beyond speech. When participants watch silent videos, the AI can describe some of what they’re seeing, decoding their thoughts from visual input alone.

See Through Someone Else’s Eyes

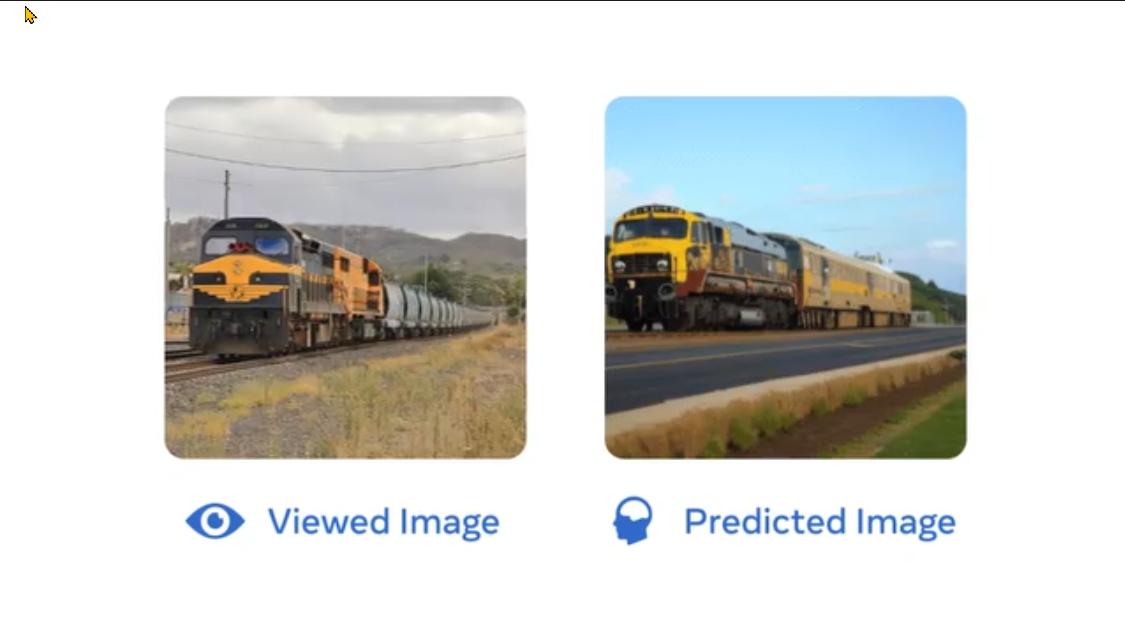

Take a look at this picture.

A volunteer looked at the picture on the left for one second while lying in an fMRI machine. An AI read their brain activity and reconstructed the volunteer’s visual experience in the image on the right.

It’s not always a perfect copy, but it’s incredibly close.

Researchers at Meta took this decoding even further. Using magnetoencephalography (MEG), which measures the faint magnetic fields produced by neural activity, their AI can decode visual input in real time, or close to it. It doesn’t generate crisp, near-perfect results, but it does reconstruct a person’s visual stream: a constantly morphing feed of what the brain is seeing moment by moment.

In another project, Meta has used the same devices to reconstruct words and coherent sentences entirely from brain signals.

Talk To Alexa With Your Mind

The tech we’ve talked about so far requires bulky, sophisticated lab equipment. However, there are more practical, wearable systems in development too.

AlterEgo is a wearable device that lets you talk to computers and AI chatbots without speech or gestures. When you intend to speak, your jaw and face produce imperceptible muscle activity before you articulate a single word. The wearable picks up those muscle signals and translates them into computer-readable input. The computer then responds with audio delivered through bone conduction.

So you could silently say, “Alexa, play music” and hear “playing music” through bone conduction, without making a sound or wearing earbuds.

It’s not mind reading in the literal sense, because the device isn’t reading your feelings or interpreting your thoughts. It’s just a way to interface with computers using your internal monologue.

What Could Mind-Reading AIs Really Do?

AIs like these are still confined to the lab, but they are slowly making their way into the real world. When they get there, they’ll open an entirely new dimension in how we interact with our computers and what those computers can do.

Give Disabled People Their Voice Back

The most urgent use case is restoring communication for people with disabilities. People with total paralysis have to rely on slow, clunky technology like eye tracking to compose messages. AI systems like semantic decoders or AlterEgo could output their internal thoughts almost instantly, giving them their voice back.

New kinds of accessibility tools and features could be built off these systems. They would remove the need for typing, talking, or moving, offering people who can’t speak a clear way to communicate. Instead of painstakingly choosing letters with blinks, they could type or control wheelchairs just with their thoughts.

Input At the Speed Of Thought

Instead of keyboards, mice, touch, or speech, these AIs could help us build interfaces that run on thoughts. Once these technologies mature, you could control your phone or laptop just by thinking about scrolling or searching. AlterEgo already understands subvocalized commands like “turn off the lights” and accepts them as input.

Interfaces built on decoded thoughts would be near-instant: not effortful like typing, nor clunky like speech recognition.

Brain-to-Screen Interfaces

Eventually, you might not even have to verbalize internally. That would remove the need for typing altogether: your ideas would go straight from brain to screen. It wouldn’t just change how we write; it would change what writing means.

You could take notes, skip songs, or write code at the speed of thought. Meta’s brain-to-text tech is still in its early stages, but it consistently reaches almost 60 words per minute, twice the average typing speed.

Since real-time vision decoders like Meta’s MEG-based system can read visual content inside the brain, that content itself could become input for the computer. Instead of trying to think in words, you could imagine a picture, and it (or something close to it) would show up on the screen.

Right now, researchers use it to decode internal mental representations, but one day this tech could let you send texts quietly during a meeting.

Why It’s Not Ready For the Market (Yet)

The tech itself is sound, and it does work. The reason semantic decoders aren’t out in the wild is that they don’t generalize across people, at least not yet.

Excessive Training Requirements

For a semantic decoder to work for someone, the AI has to be trained on that specific person for hours. That means the participant spends hours in an fMRI machine listening to stories so the AI can learn the patterns in their individual neural activity.

The AI’s output also breaks down if participants stop cooperating. The decoder can’t “steal” thoughts, because it only interprets them as long as the person is willing. If they resist, for example by thinking about something else, the system just returns noise.

It Requires Heavy Lab Equipment

Brain-to-screen and thought decoders need incredibly sophisticated lab equipment. Meta’s MEG device, which powers the real-time visual feed and thought typing, only works in a magnetically shielded room. Subjects also have to stay perfectly still; otherwise, the output gets blurred.

Even AlterEgo, which sidesteps finicky brain-wave detection by relying on faint muscle signals, is limited to short, pretrained phrases. It doesn’t understand long or complex sentences.

It’s Not Always Accurate

There’s one more catch: accuracy still falls short. The semantic decoder, which reports the meaning behind thoughts, captures the right meaning only about 50% of the time, while Meta’s brain-decoding AI is 80% accurate.

Will This Tech Be the End of Privacy?

As it stands right now, we are in completely uncharted territory. The second a thought can be digitized and recorded, self-censorship begins. It’s not about secrets locked away in your mind; it’s more about the freedom to think without being observed. If anyone can observe our thoughts, record them, and play them back, we’ll start editing our thoughts internally.

If that data can live outside our brains, like a browser history of our thoughts, who has the right to access it, and who owns it?

Could a court subpoena your mind when no other evidence or witnesses are available? Therapists might not even need to ask you questions anymore; they could just look at your thoughts.

Corporations are already strip-mining our digital lives for every bit of data they can dig up. Once thoughts go digital, they could become an incredibly invasive tool for tuning algorithms and targeting ads. Amazon might know what you want to buy, not just guess, and throw suggestions at you before you even realize it.

Mind-reading tech is already here. What remains to be seen is how the world will change when it hits the shelves.