Summary

- Smaller LLMs can run locally on Raspberry Pi devices.

- The Raspberry Pi 5 with 16GB RAM is the best option for running LLMs.

- Ollama makes it easy to install and run LLMs on a Raspberry Pi.

AI chatbots such as ChatGPT and Google Gemini have been incredibly popular in recent years. You can access these chatbots online or through dedicated mobile or desktop apps. All of your requests are sent to the chatbot’s servers and processed in the cloud, with the responses sent back to your computer or phone.

However, you might not like the idea of every conversation you have with an AI chatbot being sent to the cloud. At best, it puts your information at risk of a data breach, and at worst, companies may decide to use that information for their own ends, such as targeted advertising.

The good news is that it’s possible to run your own Large Language Model (LLM) on a computer. All the chatbot’s responses are then generated locally and nothing gets sent to the cloud. It’s even possible to run a local LLM on some Raspberry Pi models.

Raspberry Pi 5

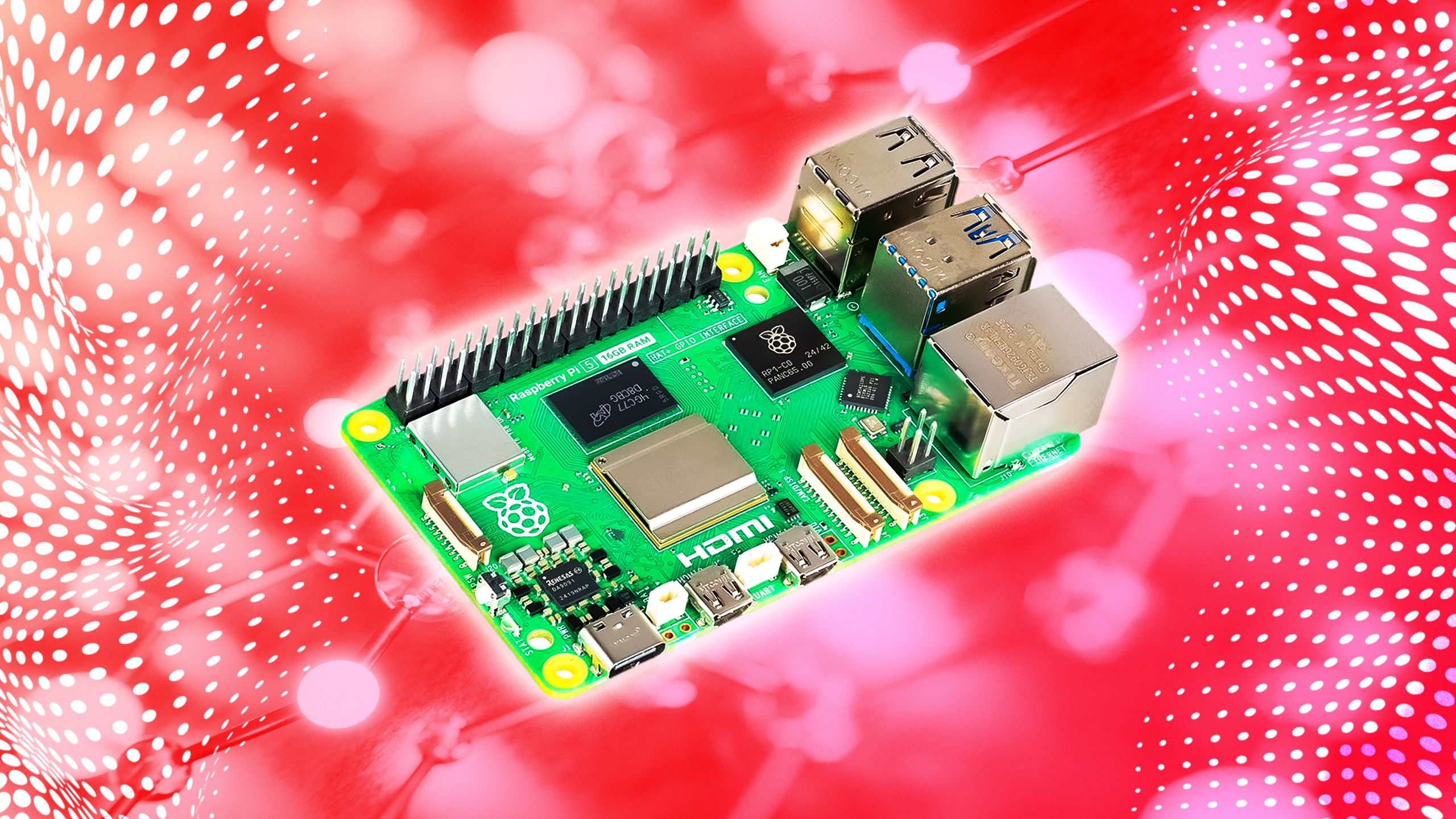

The Raspberry Pi 5 is a powerful single-board computer (SBC) that launched towards the end of 2023. It’s great for DIY tech projects or even as a low-power desktop PC.

Choosing the right Raspberry Pi

The more powerful your Pi, the better

Pocket-lint / Raspberry Pi

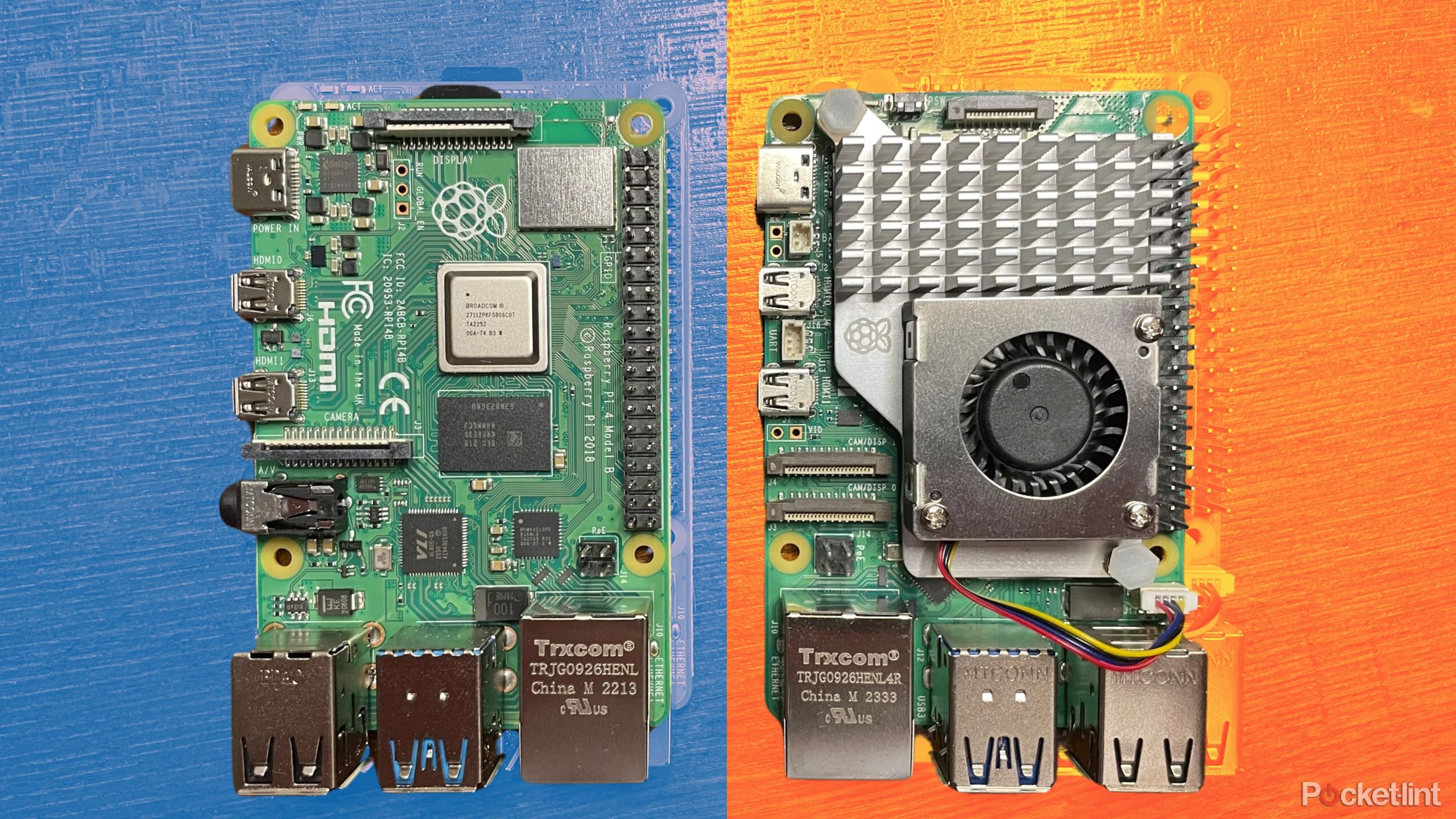

Running LLMs usually requires machines with powerful GPUs to get the best results. However, the integrated GPU in Raspberry Pi models offers little help when it comes to the complex calculations involved in running an LLM.

The key factors, therefore, are the amount of RAM and the CPU. This means that the Raspberry Pi 5 is the best choice. It has the fastest CPU and supports up to 16GB of RAM, while the Raspberry Pi 4 tops out at 8GB. You can run an LLM on a Raspberry Pi 4, but a maxed-out Raspberry Pi 5 will give you the best results.

You could try an older Raspberry Pi model at a push, but the results are unlikely to be great. I was able to get some of the smaller models, such as qwen2.5:0.5b, running on a Raspberry Pi 3B, but it was fairly slow, and the Raspberry Pi soon got hot. If you’re planning to buy a Pi to run an LLM, then I would definitely opt for a Raspberry Pi 5 with 16GB of RAM.
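
If you want to keep an eye on how hot your Pi gets while a model is running, a short script can read the temperature for you. This is only a minimal sketch: it assumes your Pi exposes the reading at /sys/class/thermal/thermal_zone0/temp in thousandths of a degree Celsius, as Raspberry Pi OS normally does, and the function names are my own.

```python
from pathlib import Path
from typing import Optional

# Assumption: the SoC temperature is exposed here in millidegrees Celsius.
THERMAL_FILE = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert a raw reading such as '61234\\n' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_cpu_temp_c(path: Path = THERMAL_FILE) -> Optional[float]:
    """Return the current temperature, or None if it cannot be read."""
    try:
        return parse_millidegrees(path.read_text())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    temp = read_cpu_temp_c()
    if temp is None:
        print("Temperature unavailable on this machine")
    else:
        print(f"SoC temperature: {temp:.1f} °C")
```

Run it in a second SSH session while the model is answering a prompt. If the reading keeps climbing, a heatsink or fan is worth considering.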

Selecting which LLM to use

You’ll get the most joy out of smaller models

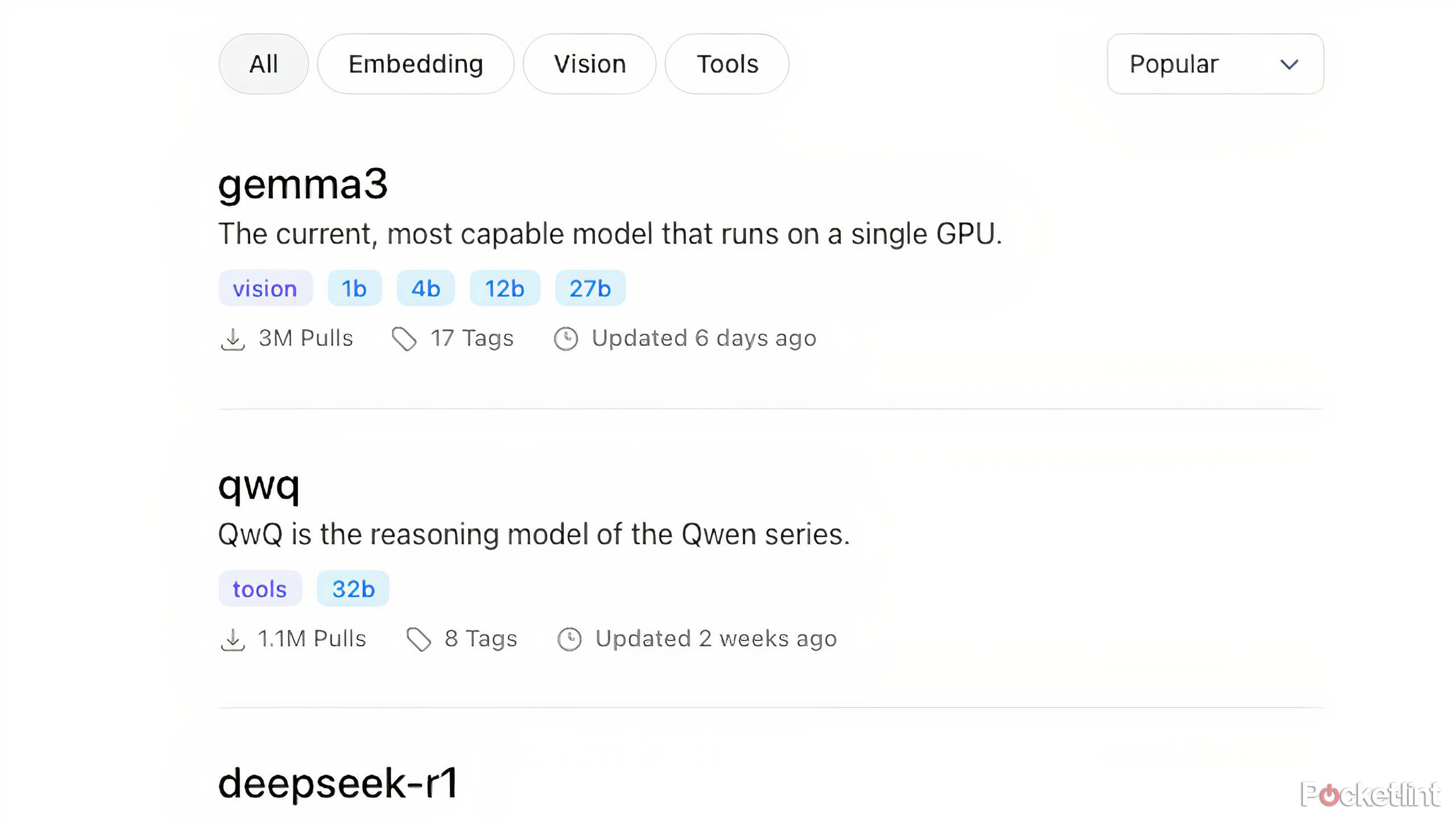

Once you’ve chosen your hardware, you need to decide which LLM to use. There are plenty of options you can choose from, and your selection will largely be guided by the fact that you’re going to be running it on a Raspberry Pi.

The more parameters a model has, the more RAM it needs and the more likely it is that your Pi will struggle to keep up. Models with 3-5 billion parameters should run reasonably well, but anything larger might be a struggle. A more lightweight model with 1 or 2 billion parameters will run more easily on your Raspberry Pi.

Some good lightweight options that should run fine on a Raspberry Pi 4 or Raspberry Pi 5 include tinyllama, gemma, and qwen2.5.
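
To get a rough feel for whether a model will fit in your Pi’s RAM, you can do some back-of-the-envelope arithmetic: parameters multiplied by the bytes used per weight. The sketch below assumes 4-bit quantized weights and adds a 20% allowance for everything else held in memory. Both numbers are my own illustrative assumptions, not figures published for any particular model.

```python
def estimate_model_ram_gb(
    params_billion: float,
    bits_per_weight: int = 4,   # assumption: 4-bit quantized weights
    overhead: float = 1.2,      # assumption: 20% extra for runtime buffers
) -> float:
    """Rough RAM needed to hold the model, in gigabytes (10^9 bytes)."""
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def fits_in_ram(params_billion: float, ram_gb: float, **kwargs) -> bool:
    """True if the rough estimate is no larger than the RAM available."""
    return estimate_model_ram_gb(params_billion, **kwargs) <= ram_gb


if __name__ == "__main__":
    for size in (0.5, 1, 3, 7):
        print(f"{size}B parameters: ~{estimate_model_ram_gb(size):.1f} GB")
```

Under these assumptions, a 3-billion-parameter model comes out at roughly 1.8GB. Fitting in RAM only tells you the model will load, though. How quickly it responds still depends on the CPU.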

Installing Raspberry Pi OS on your Raspberry Pi

Your Pi needs an OS to run your LLM

Once you’ve decided on the hardware you’re going to use and the model you’ll run, you’re ready to get started. You’ll need to install Raspberry Pi OS on your Raspberry Pi if it’s not already installed.

- Download the Raspberry Pi Imager software and connect your SD card to your computer.

- Click Raspberry Pi Device and select your model of Raspberry Pi.

- Select Operating System and choose the Raspberry Pi OS software for your device.

- Click Storage and select your storage.

- Click Next.

- Select Edit Settings.

- Under the General tab, enter a hostname for your Raspberry Pi if you want to change it.

- Enter a username and password you will use to remotely access your Pi.

- Enter the SSID and password for your Wi-Fi if you want your Raspberry Pi to access the internet wirelessly.

- Click the Services tab and check Enable SSH to allow you to access your Raspberry Pi via SSH from a computer.

- Close out of the settings and click Yes to apply them.

- Confirm that you want to overwrite your storage device.

- Raspberry Pi OS will be written to your storage device. This may take some time.

- When it’s finished, connect your storage device to your Raspberry Pi and start it up.

Installing Ollama on your Raspberry Pi

The open-source tool lets you run your LLM

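Once you’ve installed Raspberry Pi OS, it’s time to install the Ollama software that will run your LLM. You can do this from another computer by connecting to your Raspberry Pi over SSH, using the username, password, and hostname you set in Raspberry Pi Imager.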

- In PowerShell on Windows or Terminal on Mac, enter the following:

ssh [your_username]@[your_hostname].local

- Enter your password to sign in.

- Enter the following to install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

- It will take some time for Ollama to install.

- Once the installation is complete, enter the following, replacing [model_name] with the name of the model you want to use and [parameter_size] with the size of the version you want, such as 1b for one billion parameters:

ollama run [model_name]:[parameter_size]

- For example, to use gemma3 with one billion parameters, you would type:

ollama run gemma3:1b

- You’ll see some progress bars as the model is installed.

- Once the model has been installed, it’s ready to use.
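
If you’d like to confirm which models are installed without opening a chat, Ollama also runs a small HTTP service on the Pi that you can query. The following is a minimal sketch, assuming Ollama is listening on its default port of 11434 and that you run the script on the Pi itself. The helper names are my own.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama is running locally on its default port.
OLLAMA_BASE_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> List[str]:
    """Pull the model names out of the JSON returned by /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_installed_models(base_url: str = OLLAMA_BASE_URL, timeout: float = 5.0) -> List[str]:
    """Ask the local Ollama service which models it has downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        print(list_installed_models())
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
```

If the script can’t connect, Ollama probably isn’t running yet or the installation didn’t finish.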

Running your LLM on your Raspberry Pi

Your very own AI chatbot running in your home

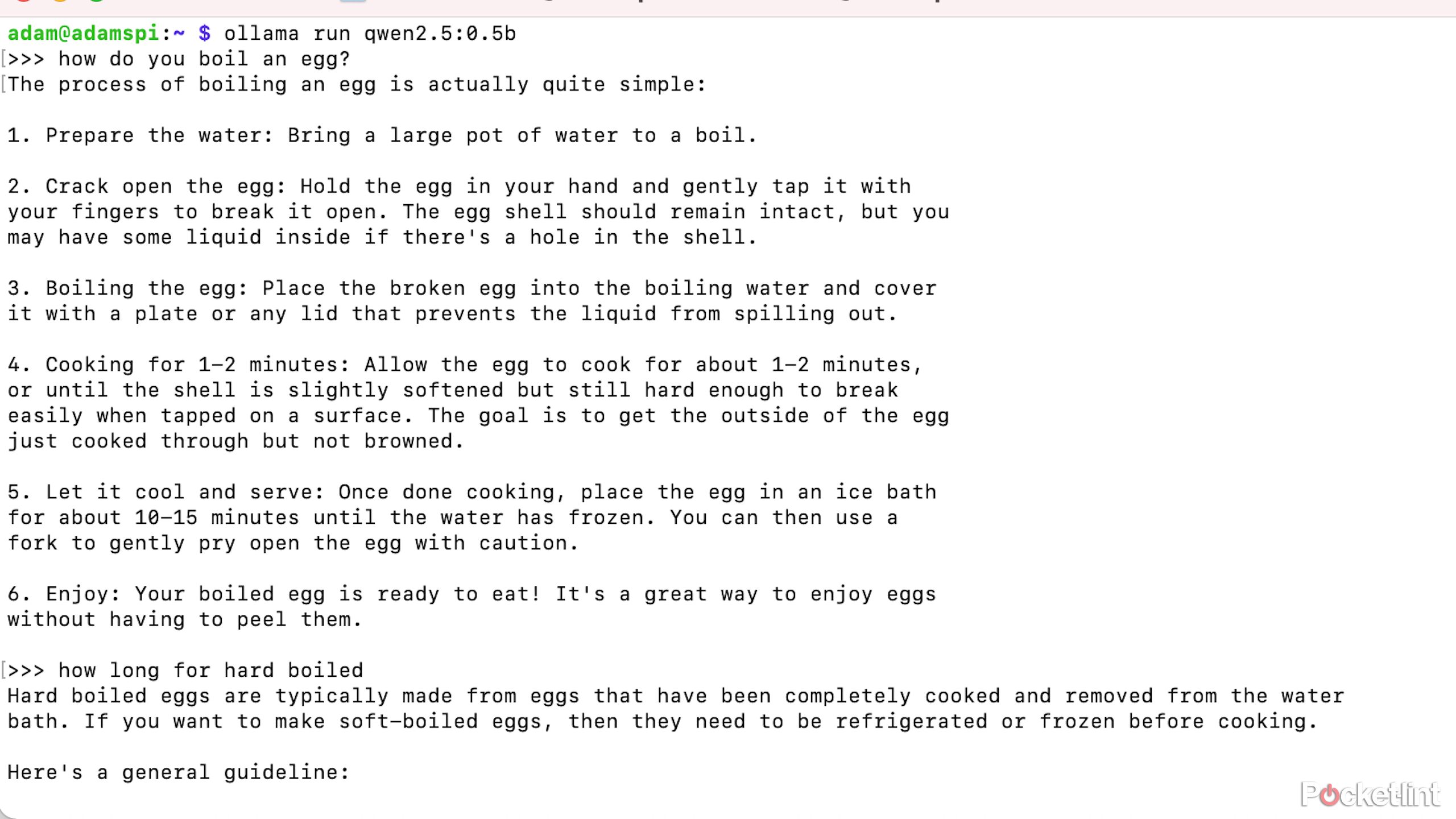

Once the model has finished configuring, you should see a request for you to enter a prompt. You can now enter a prompt just like you would with any other AI chatbot.

For example, you could ask it to write you a poem or to explain how a steam engine works, or almost anything else that you would ask an AI chatbot.

The responses may take a little longer than you’re used to with AI chatbots such as ChatGPT, but as long as you’re using decent hardware and not trying to run an LLM with too many parameters, you should have a perfectly usable AI chatbot.
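
If you want to put a number on how fast your Pi is, the responses from Ollama’s HTTP API include a count of generated tokens (eval_count) and the time spent generating them in nanoseconds (eval_duration). Here is a small sketch of turning those into tokens per second. The sample values are invented for illustration and are not a measurement from any Raspberry Pi.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama API response.

    eval_count is the number of tokens generated and eval_duration is
    the time taken to generate them, in nanoseconds.
    """
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return response.get("eval_count", 0) / (duration_ns / 1e9)


if __name__ == "__main__":
    # Illustrative values only, not a real benchmark result.
    sample = {"eval_count": 120, "eval_duration": 24_000_000_000}
    print(f"{tokens_per_second(sample):.1f} tokens/s")
```

Comparing this figure across a couple of models is a quick way to decide which one feels responsive enough on your hardware.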

Why should you run an LLM on your Raspberry Pi?

Running a local LLM offers far greater privacy

Being able to run an LLM on a Raspberry Pi is all very well, but it doesn’t mean you actually should. However, there are a few benefits from using a local LLM rather than turning to AI chatbots such as ChatGPT or Gemini.

The biggest benefit is that everything happens locally. It means that you can ask anything you want without worrying about someone at OpenAI or Google reading your prompts and keeping track of everything you ask. It’s not a good idea to include sensitive information in prompts when using apps such as ChatGPT, for example, but you can do so using your own LLM without worrying that other people might be tracking what you’ve asked and possibly selling that information on.

Another benefit is that you can use your local LLM even if the internet is down. As long as you can still connect to your Raspberry Pi within your home, you can use your LLM. You’re no longer at the mercy of internet outages; your LLM will always be available when you need it.

You can also use your LLM for other local services. For example, using home automation software such as Home Assistant, you could use your local LLM to generate personalized messages that play when you or other family members arrive home. You don’t need to worry about API costs since everything will be generated at home on your Raspberry Pi.
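
As a rough idea of how another service might talk to your LLM, here is a minimal sketch that sends a prompt to Ollama’s local HTTP API and prints the reply. It assumes Ollama is on its default port of 11434 and that you’ve already downloaded gemma3:1b. The prompt, the name in it, and the function names are purely illustrative, and this is not how Home Assistant itself is configured.

```python
import json
import urllib.request

# Assumption: Ollama is running on this machine on its default port.
GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = GENERATE_URL) -> urllib.request.Request:
    """Build a POST request asking Ollama for one complete, non-streamed reply."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, url: str = GENERATE_URL, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text."""
    request = build_generate_request(model, prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(generate("gemma3:1b", "Write a one-line welcome-home message for Sam."))
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
```

The generous timeout is deliberate, since a Raspberry Pi can take a while to produce a full response.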
