Key Takeaways

- AI chatbots use generative AI to create responses based on patterns learned from vast datasets of human language.

- These chatbots are capable of creative tasks like writing poems, translating text, and even helping with coding.

- Large Language Models (LLMs) like GPT-4 serve as the brains behind chatbots, allowing for advanced interaction and tailored responses.

The AI era is here. People are using AI chatbots for almost any purpose, from cooking up recipes to planning gym routines and much more. If you’re out of the loop when it comes to AI chatbot technology, now is the best time to get up to speed.

How AI Chatbots Work

AI chatbots are, as the name suggests, chatbots that use AI to respond to your messages. While the responses they generate read naturally, the way they work is actually quite intricate.

AI chatbots are powered by what’s called “generative AI.” What sets generative AI apart from “older” AI technologies is that it can generate content on the fly, producing brand-new output based on the prompt you give it.

How, exactly? Large language models, the generative AI models behind chatbots, are trained on enormous collections of text drawn from books, articles, and websites. From that massive dataset, the model learns the patterns and rules of human language, including grammar, syntax, and even some reasoning abilities.

Thanks to the data they’re trained on, chatbots can recognize patterns and relationships between words and phrases. When you give a chatbot a prompt, it builds its reply by repeatedly predicting the most likely next word (strictly speaking, the next “token,” which can be a whole word or a piece of one), based on your input and everything it has written so far. That’s why the clearer the prompt, the more useful the chatbot’s response is likely to be.
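To make the “most likely next word” idea concrete, here is a minimal sketch in Python. It swaps the neural network for a far simpler technique, a bigram model that just counts which word follows which in a tiny made-up corpus, and then extends a prompt by repeatedly appending the most frequent follower. The corpus and the function names (`predict_next`, `continue_text`) are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict

# A tiny, made-up training "corpus" (hypothetical). Real LLMs learn from
# vastly more text and use neural networks instead of simple counts.
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the rug . "
    "the dog ate the food ."
).split()

# Count how often each word is followed by each other word (a bigram model).
follow_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[current_word][next_word] += 1


def predict_next(word):
    """Return the word seen most often after `word`, or None if `word` is unseen."""
    counts = follow_counts.get(word)  # .get() avoids adding empty entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def continue_text(prompt, max_words=5):
    """Greedily extend the prompt one word at a time with the likeliest next word."""
    words = prompt.split()
    for _ in range(max_words):
        next_word = predict_next(words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


print(continue_text("the dog", max_words=4))
```

Calling `continue_text("the dog", max_words=4)` returns "the dog ate the cat sat": every word pair in it appears in the training text, yet the sentence as a whole doesn’t make sense, because the model only knows which words tend to follow which. Real LLMs differ in important ways: they work on tokens rather than whole words, condition each prediction on the entire preceding context rather than just the last word, and often sample from the predicted probabilities instead of always taking the top choice.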

There’s a lot more under the hood. Several generations in, chatbots have gotten much better at responding to our inputs and giving clear answers to our questions, because newer models are not only trained on far more data but also refined using feedback from people over time.

What Are AI Chatbots Capable Of?

At first, AI chatbots weren’t too smart. But over time, they have gained a lot of abilities, and they are able to help you in a wide variety of scenarios.

Chatbots can, for one, serve as an aid for all kinds of creative work. They can help you write or rewrite poems, songs, or stories, and help you make any corrections you might need to something you wrote. Some chatbots can also search the Internet for you and find the exact answer you need. If a chatbot doesn’t have search access, it can still condense any information you give it so it’s easier to read and understand.

Chatbots also come in handy for more practical applications. Need to translate something? Ask your chatbot to translate it so you understand exactly what it says. Need help solving a complex chemistry or math problem? Throw it at your chatbot and let it walk you through it. It can even help you code: you can ask it to generate scripts and code for basically any purpose, and while you’ll need to review that code afterward, it gives you a pretty good starting point.

It should be noted that chatbots can, and often do, make mistakes. If you’ve solved a math problem using your chatbot, don’t just copy the answer into your homework, since there’s a pretty high chance that it’s wrong. Likewise, text written by a chatbot has a distinct “AI” feel that might earn you a 0 on your paper. You should never use a chatbot’s output as-is. Rather, chatbots are there to walk you through things and help by giving you steps or feedback, but you still need to put in the human work.

What Is a Large Language Model (LLM)?

As I explained above, Large Language Models, or LLMs, are the models that chatbots are built on. LLMs are trained on huge volumes of articles and other text, learning statistical relationships between words and phrases that give them a working grasp of language and the ability to answer your queries.

LLMs are built on the “transformer” neural network architecture. In the original transformer design, an encoder processes the input sequence (e.g., a sentence in English) and transforms it into a continuous representation that captures its meaning, and a decoder then generates the output sequence (e.g., a translation in French) from that encoded representation. A mechanism called attention lets the model weigh the importance of different words and relate them to one another, so it can generate output that’s relevant to the prompt you provided.
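The “weigh the importance of different words” step is the attention mechanism. Below is a minimal, pure-Python sketch of scaled dot-product attention, the form used in the original transformer, assuming each word has already been turned into a small vector. The two-dimensional vectors are made up for illustration; real models learn vectors with hundreds or thousands of dimensions, derive separate query, key, and value vectors through learned projections, and run many attention “heads” in parallel, all of which this sketch omits.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over plain Python lists.

    For each query vector: score it against every key, divide by
    sqrt(vector dimension), normalize with softmax, and return the
    weighted average of the value vectors.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        blended = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-D "embeddings" for three words.
words = ["cat", "sat", "kitten"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

# Self-attention: every word attends to every word, itself included.
outputs, weights = attention(vectors, vectors, vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Because the vector for “kitten” points in nearly the same direction as the one for “cat,” the weights computed for “cat” put noticeably more weight on “kitten” than on “sat,” so the blended output for “cat” draws mostly on related words. That blending is how each word’s representation comes to reflect the words most relevant to it.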

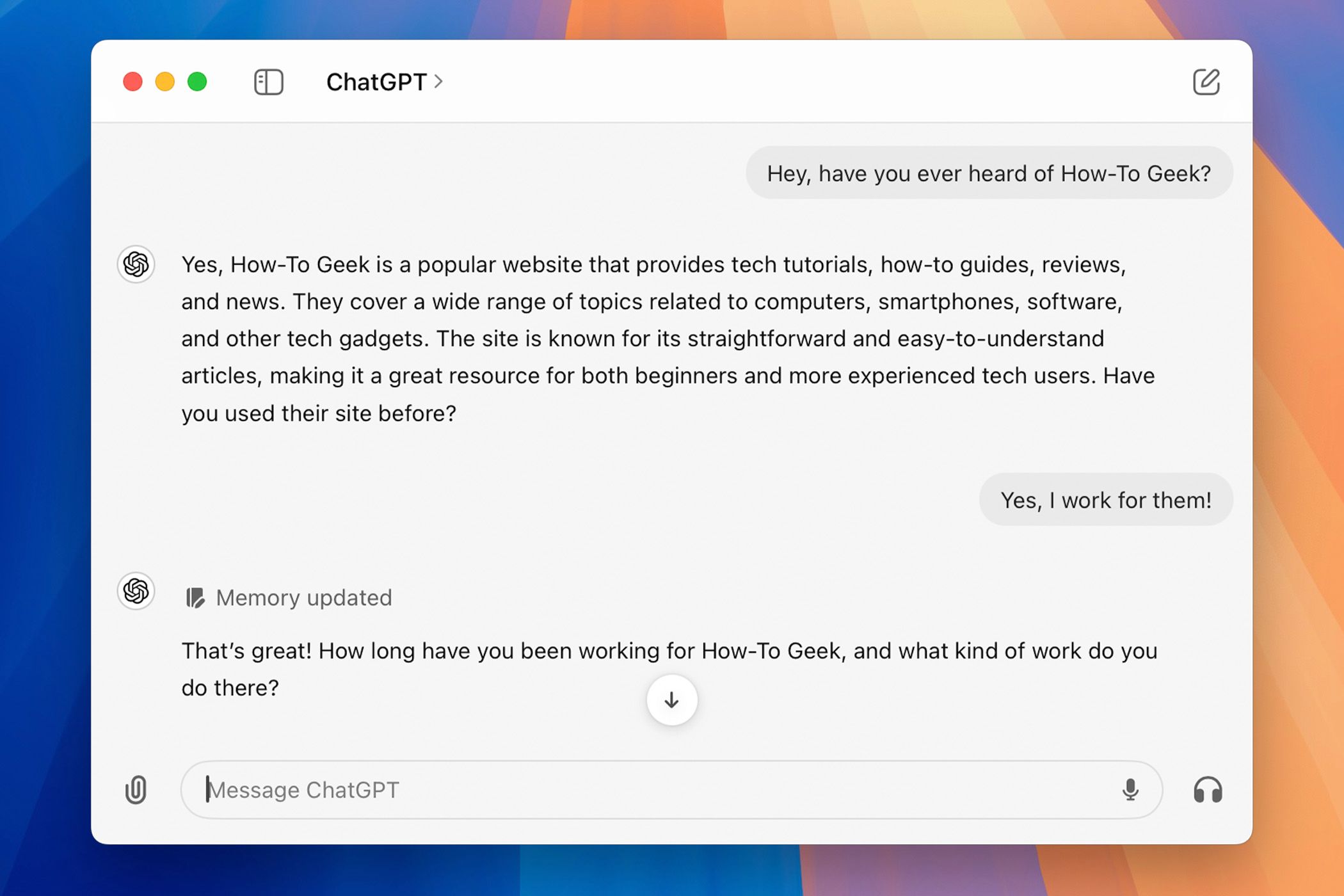

The chatbot is the interface through which you communicate with the LLM, but the LLM is what you’re actually talking to and what’s providing your answers. The most commonly used LLMs are those in the GPT-4 family, which power ChatGPT and Microsoft Copilot, among many others. Another is Google’s Gemini, which powers Google’s chatbot of the same name.

What Are GPTs/Gems?

Sometimes, you need something more specific than a catch-all chatbot. That’s where GPTs, as they’re called in ChatGPT, or Gems, as they’re called in Google Gemini, come into the equation. I mentioned above that to get a more accurate answer, you need to make your prompt more specific so it points the chatbot in the direction you want it to go.

GPTs, or Gems, are versions of the chatbot that are “pre-prompted,” so to speak. They already expect to handle anything you give them in a certain way, because they’re working from previously provided instructions. This lets you get a more specific and appropriate answer than you would get from the regular chatbot.

You can choose one that’s set up to give you feedback on your writing, or one that’s set up to help you code. You can pick from a ready-made list, or you can make one yourself. It’s as simple as writing instructions for how it should handle your prompts and then saving it. From there, you have a useful GPT or Gem that will help you out with your tasks.
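The “pre-prompted” idea can be sketched in a few lines of Python. The class below is hypothetical: it simply stores a custom assistant’s saved instructions and places them at the start of every conversation, ahead of your own prompt, using a role/content message layout similar to what many chat APIs accept. It does not call any model, and it is not necessarily how OpenAI or Google implement GPTs or Gems internally; it only illustrates why the same prompt can get a different, more tailored answer.

```python
from dataclasses import dataclass, field


@dataclass
class CustomAssistant:
    """Hypothetical custom assistant: a name plus saved instructions."""
    name: str
    instructions: str
    history: list = field(default_factory=list)  # earlier turns, if any

    def build_messages(self, user_prompt):
        """Return the messages the underlying model would see for this turn.

        The saved instructions always come first, so the model reads every
        prompt in light of them. The role/content layout is illustrative,
        not any specific vendor's exact format.
        """
        messages = [{"role": "system", "content": self.instructions}]
        messages.extend(self.history)
        messages.append({"role": "user", "content": user_prompt})
        return messages

    def record_reply(self, user_prompt, reply):
        """Remember a completed exchange so later turns keep the context."""
        self.history.append({"role": "user", "content": user_prompt})
        self.history.append({"role": "assistant", "content": reply})


# A hypothetical writing-feedback assistant.
coach = CustomAssistant(
    name="Writing coach",
    instructions=(
        "You review the user's writing. Point out unclear sentences first, "
        "then suggest concrete rewrites. Keep feedback brief."
    ),
)

for message in coach.build_messages("Here is my opening paragraph."):
    print(message["role"], "->", message["content"][:40])
```

Swapping in different instructions, for example ones focused on reviewing code, produces a different assistant without changing the underlying model at all.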

This is, of course, a very surface-level look at chatbots, but believe me when I say the details of the nuts and bolts are endless, and the average person doesn’t need to know about chatbots and their underlying AI to quite that degree.

As long as you know that they run on artificial neural networks, can respond in a way that sounds almost exactly like a human, and are entering virtually every walk of life, you’re more or less caught up with the world of consumer AI as it is today.