Computers store characters by mapping them to different binary values. To display them properly, you need to know how they were encoded. The iconv command converts a file to a new encoding.

It’s Binary All the Way Down

No matter what type of data a computer is working with or storing, it is held as binary information. Images, text, music, video, and everything else are stored as binary data. Whether the data is on a storage device or loaded into the computer’s memory, it’s still represented by binary values.

If the data is text, and we want to display that text on screen, there’s a translation that has to happen to convert the binary values into characters. To perform the translation, we need to know which values were used to represent each character when the data was created. The software can then work backward and map the stored numerical values back to characters.

Because success depends on knowing what type of mapping has been used and rigorously adhering to the rules of the mapping during data creation and data usage, standards have been created that formalize such character mappings. They’re easy to understand if we get the jargon straight.

Characters, Bytes, and Mapping

A character is a letter, a number, or any other displayable symbol, such as punctuation symbols, mathematical signs like equals “=” and plus “+”, and currency symbols. The thing you see on screen that represents that character is called a glyph, and a collection of glyphs makes up a typeface.

A typeface is what a lot of people mistakenly call a font. Strictly speaking, a font is a version of a typeface that has been modified, for example by increasing or decreasing its size, or changing its weight to make the lines of the glyphs thicker or thinner. Regardless of the typeface, the numerical representation of the character remains the same.

All the characters in a single mapping are called the character set. Each character in a set has its own, fixed, unique, numerical value called a code point. If a character or symbol doesn’t appear in the character set – that is, there’s no code point for it – then it cannot be displayed using that character set. An important consideration is the number of bytes used to represent a single character. The more bytes you use per character, the more characters you can include in the set.

The granddaddy of all single-byte character sets is the ASCII standard. It hails from the early 1960s (the first edition was published in 1963), when a 7-bit standard was set down that encoded 128 different code points for use by teleprinters. By contrast, the Unicode standard contains a total of 1,114,112 code points. Such a large code space is required because Unicode tries to provide character mapping support for all human languages.
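Both of those totals fall out of simple arithmetic, which we can check with the shell. This is only a sketch: the variable names are ours, and the second calculation relies on the Unicode code space being laid out as 17 planes of 65,536 code points each, running from U+0000 to U+10FFFF.

```shell
# 7 bits give 2^7 distinct values: the ASCII code points.
ascii_points=$((1 << 7))

# Unicode's code space is 17 planes of 65,536 code points each,
# covering U+0000 through U+10FFFF.
unicode_points=$((17 * 65536))

echo "ASCII:   $ascii_points"
echo "Unicode: $unicode_points"
```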

Using a fixed number of bytes to store code points is wasteful. If a code point only needs one byte to identify it, the other bytes reserved for that code point are redundant. Variable-length Unicode encodings such as UTF-8 use a variable number of bytes per code point, with as many as four bytes required for the code points with the highest values.
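To see the variable length in action, we can count the bytes UTF-8 uses for a few characters. This is a small sketch, and utf8_len is a helper name we made up for it. The characters are passed as octal escapes of their UTF-8 bytes so the result doesn't depend on how the script itself is encoded.

```shell
# Count the bytes in a string. The argument is used as a printf format
# on purpose, so that octal escapes such as \303\240 become raw bytes.
utf8_len() {
    printf "$1" | wc -c | tr -d ' '
}

echo "a  (U+0061):  $(utf8_len 'a') byte"
echo "à  (U+00E0):  $(utf8_len '\303\240') bytes"
echo "€  (U+20AC):  $(utf8_len '\342\202\254') bytes"
echo "😀 (U+1F600): $(utf8_len '\360\237\230\200') bytes"
```

The counts climb from one byte for the plain letter to four bytes for the emoji.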

So, the bytes that store a code point may have to carry two types of data. They have to identify the character they represent, and they must contain metadata about the sequence itself, such as the number of bytes it occupies. Also, some characters need to be combined with other characters to obtain the final glyph, so the encoding has to be able to express that too.
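UTF-8 is a handy example of both ideas. The sketch below dumps the raw bytes of a few characters in hexadecimal, using od. The hex helper is a name we invented for the illustration.

```shell
# Dump the bytes of a string as one run of hex digits.
# The argument is used as a printf format so octal escapes become bytes.
hex() {
    printf "$1" | od -An -tx1 | tr -d ' \n'
}

# € is U+20AC. In UTF-8 its first byte is 0xE2 (binary 1110 0010): the
# three leading 1 bits announce a three-byte sequence. The two bytes that
# follow both start with the bits 10, marking them as continuation bytes.
euro=$(hex '\342\202\254')
echo "euro:        $euro"

# "à" can be stored as one precomposed code point (U+00E0), or as the
# letter "a" followed by the combining grave accent (U+0300).
precomposed=$(hex '\303\240')
combined=$(hex 'a\314\200')
echo "precomposed: $precomposed"
echo "combined:    $combined"
```

Both forms of “à” look the same on screen, but they're different byte sequences on disk.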

The advantage of a variable-length scheme is you only use the bytes you really need. This is efficient, and results in smaller files. The disadvantage is, the data is more complicated to read and parse. And converting from one character set to another can become very difficult, very quickly.

That’s where the iconv command comes in.

How to Use the iconv Command

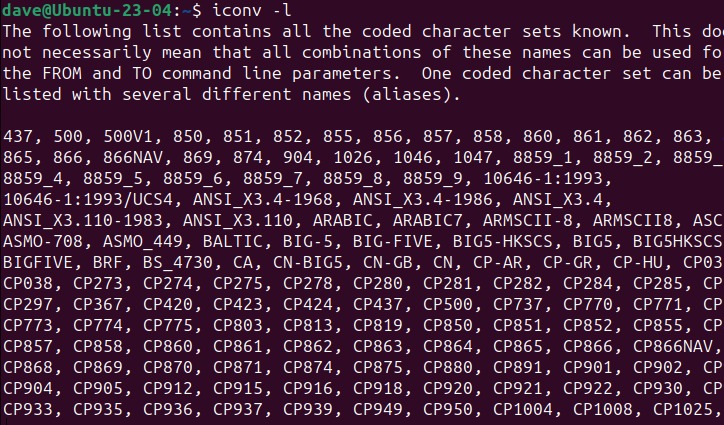

What the iconv command lacks in terms of command line options it more than makes up for in the number of character encodings it supports. It lists over 1100 different encodings, but many are aliases for the same thing. We can list all the supported encodings using the -l (list) option.

iconv -l
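The full list is long, so it's often easier to filter it. Here's a quick sketch using grep. The exact layout of the list differs between iconv implementations, so treat the search patterns as a starting point rather than a guarantee.

```shell
# Show only the encoding names that mention UTF (case-insensitive).
iconv -l | grep -i 'utf'

# Check whether a particular encoding is listed before relying on it.
if iconv -l | grep -qi 'utf-16le'; then
    echo "UTF-16LE is available"
fi
```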

To use iconv you need to specify a source file and an output file, and the encoding you’re converting from and the encoding you’re converting to. If you don’t specify file names, iconv uses STDIN and STDOUT, reading whatever is typed or piped into it and writing its output to the terminal window. You can pipe input to iconv, and you can redirect its output to a file too.
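As a rough sketch of the pipe-and-redirect pattern, this pipes a short line into iconv and redirects the result to a temporary file. The word “café” and the ISO-8859-1 target are just our choices for the illustration.

```shell
# A scratch file for the converted text.
latin1_file=$(mktemp)

# "café" plus a newline, spelled out as UTF-8 bytes (é is \303\251).
# Pipe it into iconv and redirect the converted output to the file.
printf 'caf\303\251\n' | iconv -f UTF-8 -t ISO-8859-1 > "$latin1_file"

# The UTF-8 input was 6 bytes long. ISO-8859-1 stores é in a single
# byte, so the converted file is one byte shorter.
latin1_bytes=$(wc -c < "$latin1_file" | tr -d ' ')
echo "converted size: $latin1_bytes bytes"

rm -f "$latin1_file"
```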

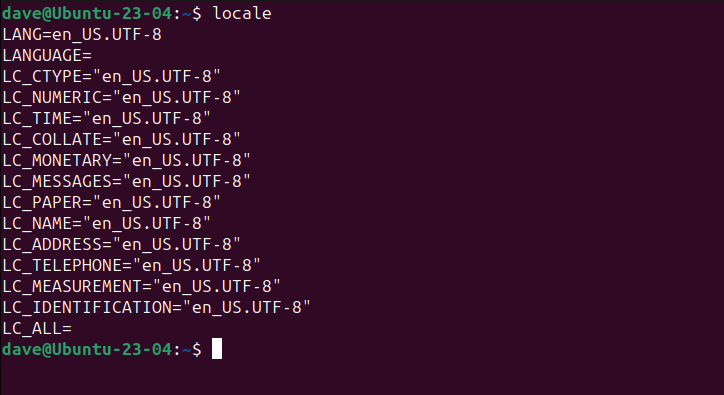

We’ll use iconv with STDIN to illustrate some points. We need to specify the encoding of the input text, so we’ll use the locale command to discover what it is.

locale

The first line says we’re using US English, and Unicode UTF-8 encoding. Our test string has some plain text, an accented word, a non-English character (the German eszett character, ß), and the currency symbol for the Euro.

plain àccented non-English ß Foreign currency €
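If you only want the encoding part of the locale settings, the locale command can print it on its own. The sketch below stores it in a variable (current_encoding is our name) and uses it as the source encoding, instead of hard-coding UTF-8. The name it reports depends on how your system is configured.

```shell
# Print only the character encoding of the current locale.
locale charmap

# Use it as the "from" encoding instead of typing it by hand.
current_encoding=$(locale charmap)
echo "plain text" | iconv -f "$current_encoding" -t UTF-8
```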

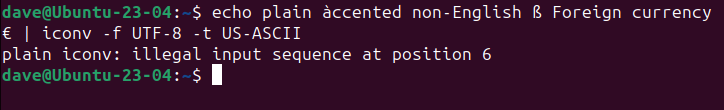

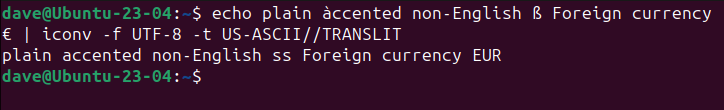

We’re going to convert this into ASCII. We’re using echo to pipe our input text to iconv. We’re using the -f (from) option to specify the input encoding is UTF-8, and the -t (to) option to indicate we want the output in US-ASCII.

echo plain àccented non-English ß Foreign currency € | iconv -f UTF-8 -t US-ASCII

That fails at the first hurdle. There is no equivalent character in US-ASCII for “à”, so the conversion is abandoned. iconv uses zero-offset counting, so we’re told the problem occurred at position six. If we add the -c (continue) option iconv will discard unconvertible characters and continue to process the remainder of the input.

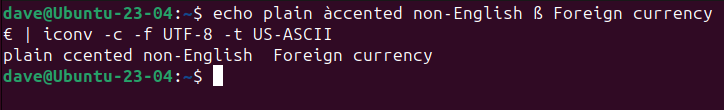

echo plain àccented non-English ß Foreign currency € | iconv -c -f UTF-8 -t US-ASCII

The command runs to completion now, but there are characters missing from the output. We can make iconv provide an approximation of an unconvertible character by substituting a similar character, or some other representation. If it can’t manage that, it inserts a question mark “?” so you can easily see a character didn’t get converted.

This process is called transliteration, and to invoke it you append the string “//TRANSLIT” to the target encoding.

echo plain àccented non-English ß Foreign currency € | iconv -f UTF-8 -t US-ASCII//TRANSLIT

Now we’ve got a complete output text, with “a” instead of the “à” and “ss” instead of “ß”, and “EUR” instead of the “€” currency symbol.
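If you're calling iconv from a script, the three behaviors we've just seen can be compared side by side. This is a sketch rather than a recipe: the variable names are ours, and the transliterated result in particular depends on the iconv implementation and the locale in use.

```shell
# The test string, with the non-ASCII characters written as UTF-8 octal
# escapes so the script does not depend on its own encoding.
text=$(printf 'plain \303\240ccented non-English \303\237 Foreign currency \342\202\254')

# 1. Strict conversion: iconv stops at the first unconvertible character
#    and returns a non-zero exit status.
if printf '%s\n' "$text" | iconv -f UTF-8 -t US-ASCII > /dev/null 2>&1; then
    strict_result="converted"
else
    strict_result="failed"
fi
echo "strict:   $strict_result"

# 2. -c: unconvertible characters are dropped. The exit status can still
#    be non-zero on some implementations, so it is ignored here.
dropped=$(printf '%s\n' "$text" | iconv -c -f UTF-8 -t US-ASCII 2>/dev/null) || true
echo "-c:       $dropped"

# 3. //TRANSLIT: unconvertible characters are approximated. The exact
#    substitutions vary between implementations and locales.
approx=$(printf '%s\n' "$text" | iconv -f UTF-8 -t US-ASCII//TRANSLIT 2>/dev/null) || true
echo "translit: $approx"
```

Checking the exit status of the strict conversion is a simple way for a script to notice that a file contains characters the target encoding can't hold.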

Using iconv With Files

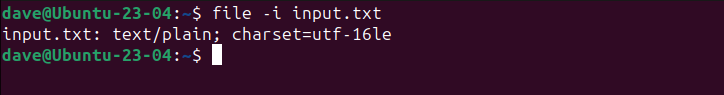

Using iconv with files is very similar to using it on the command line. To find out the encoding type of the source file, we can use the file command.

file -i input.txt

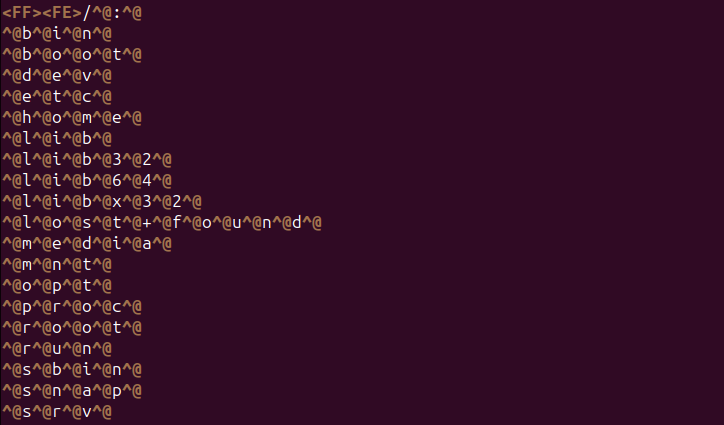

Our input file is in UTF-16LE encoding. That’s a 16-bit little-endian encoding. It looks like this:

less input.txt

If you squint and read the characters in white, you can pick out the actual text strings. A lot of software will incorrectly treat a file like this as a binary file, so we’ll convert it to UTF-8.
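The reason the text looks so odd is easier to see with a tiny example. This sketch encodes the two letters “Hi” as UTF-16 and dumps the bytes in hexadecimal. The string and the variable names are ours.

```shell
# Encode "Hi" as UTF-16LE and dump the bytes as hex digits.
# Each letter becomes two bytes: its ASCII value first, then a zero byte.
utf16le_hex=$(printf 'Hi' | iconv -f UTF-8 -t UTF-16LE | od -An -tx1 | tr -d ' \n')
echo "UTF-16LE: $utf16le_hex"

# The big-endian variant stores the same two bytes in the opposite order.
utf16be_hex=$(printf 'Hi' | iconv -f UTF-8 -t UTF-16BE | od -An -tx1 | tr -d ' \n')
echo "UTF-16BE: $utf16be_hex"
```

Those zero bytes between the letters are what make many tools decide they're looking at binary data.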

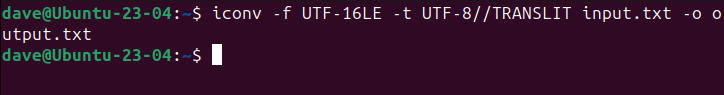

We’re using the -f (from) option to specify the encoding of the input file, and the -t (to) option to tell iconv we want the output in UTF-8. We need to use the -o (output) option to name the output file. We don’t use an option to name the input file—we just tell iconv what it is called.

iconv -f UTF-16LE -t UTF-8 input.txt -o output.txt

Our output file looks like this:

less output.txt
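If you'd like to try a file conversion without touching real data, here's a self-contained sketch. It builds a small UTF-16LE file in a scratch directory, converts it to UTF-8, and compares the sizes. The sample text and the variable names are ours, and the file names simply mirror the ones used above.

```shell
# Work in a scratch directory so nothing real is overwritten.
workdir=$(mktemp -d)

# Build a small UTF-16LE sample file: "café €" plus a newline.
printf 'caf\303\251 \342\202\254\n' | iconv -f UTF-8 -t UTF-16LE > "$workdir/input.txt"

# Convert it to UTF-8, naming the output file with -o.
iconv -f UTF-16LE -t UTF-8 -o "$workdir/output.txt" "$workdir/input.txt"

# Every character in this sample takes 2 bytes in UTF-16LE. In UTF-8 the
# plain letters take 1 byte each, é takes 2, and € takes 3.
in_bytes=$(wc -c < "$workdir/input.txt" | tr -d ' ')
out_bytes=$(wc -c < "$workdir/output.txt" | tr -d ' ')
echo "UTF-16LE: $in_bytes bytes, UTF-8: $out_bytes bytes"

rm -r "$workdir"
```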

Power When You Need It

You might not use iconv frequently, but when you do need it, it can save your bacon.

I get sent a lot of files from people who use Windows or Mac computers, and often from overseas. They arrive in all sorts of encodings. I’ve blessed iconv more than once for easily letting me work with those files on Linux.