I love ChatGPT. I turn to it many times a day and find it one of the most useful tools I’ve used. For the most part, ChatGPT does what I want and is incredibly helpful. However, there are some major problems that can make it extremely frustrating to use.

7

ChatGPT Won’t Always Follow Instructions

One of the most frustrating things I find with ChatGPT is that sometimes it simply decides to stop following instructions. Things will seem to be working fine, and then suddenly a response will behave completely differently to how it’s supposed to.

I have a ChatGPT Project with custom instructions that is supposed to take any prompt I enter and search for articles on How-To Geek on the topic of the prompt. I’ve found that this is a much easier way of checking whether articles already exist before I pitch them. The search feature on the site looks for keywords, but ChatGPT understands context, so it can find articles that the site search often misses.

Nine times out of ten it works like a charm; I type the topic of an article, and it searches the site for anything similar that’s already been published. However, now and again, it will ignore the instructions and give me information on the topic from random websites. I’ve tried reworking the prompt multiple times to make my instructions explicit, but the same issue keeps happening.

The worst part is that when you explain to ChatGPT that it hasn’t followed the instructions, it will respond by apologizing and promising not to do it again, before doing exactly the same thing five minutes later.

6

ChatGPT Won’t Stop Following Instructions

Sometimes, the exact opposite happens. The original version of my site search Project had custom instructions that asked it to search for articles on How-To Geek only if I started the prompt with the words “search for.”

However, I found that using these instructions, if I asked follow-up questions, ChatGPT would still take my prompt and search for it on How-To Geek, even when I didn’t use the magic phrase. It seems that sometimes ChatGPT is so eager to follow the instructions that it will do so even when it isn’t supposed to.
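The rule I was trying to describe is simple enough to write down in ordinary code. Here is a minimal Python sketch of the behavior I wanted. The function name and the two mode labels are hypothetical, chosen purely for illustration, and this is not how ChatGPT handles custom instructions internally.

```python
TRIGGER = "search for"  # the "magic phrase" from the custom instructions


def route_prompt(prompt: str) -> tuple[str, str]:
    """Decide how a prompt should be handled.

    Returns a (mode, text) pair. The mode is "site_search" only when the
    prompt begins with the trigger phrase; everything else, including
    follow-up questions, is treated as "normal_chat".
    Both mode names are made up for this sketch.
    """
    stripped = prompt.strip()
    if stripped.lower().startswith(TRIGGER):
        # Drop the trigger phrase and keep only the topic to search for.
        topic = stripped[len(TRIGGER):].strip()
        return ("site_search", topic)
    return ("normal_chat", stripped)


if __name__ == "__main__":
    print(route_prompt("Search for ChatGPT Projects"))
    print(route_prompt("What about the second one?"))
```

A follow-up question that doesn't start with the phrase never reaches the search branch, which is exactly the distinction ChatGPT kept blurring.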

Just like when ChatGPT doesn’t follow instructions, the upshot is that I get a completely unhelpful response. It doesn’t happen every time, but it happens often enough to become annoying. I’ve now resorted to creating Projects that only ever do one thing and asking any follow-up questions outside those Projects.

5

GPT-4o Won’t Follow Step-By-Step Instructions

Projects in ChatGPT are really useful because you can add both custom instructions and documents to a Project, and every chat within that Project has access to them. The downside is that, currently, Projects can only use the GPT-4o model. While GPT-4o is the most versatile model in ChatGPT, it has some significant drawbacks.

The beauty of the o1 model is that it stops to think about each step of the process before it generates the final output. GPT-4o just blurts out its response without stopping to ensure that all the steps have been followed.

I’ve tried numerous times to give step-by-step instructions, asking ChatGPT to only output a response once all the steps have been completed. Every time, however, it will follow the first instruction and then generate a response that says it’s done all the other steps when it hasn’t at all.

It seems that in most instances, GPT-4o is incapable of holding information in its head while it processes another step. It has to output the result of the first step of the prompt before it can move on to the next, which will often result in a response that doesn’t match what you intended.

If the steps are capable of being turned into Python code, GPT-4o can do so, which will then mean that the response will only be the final output. However, if your step-by-step instructions don’t easily turn into code, you’re out of luck.
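To show what "turning steps into code" can look like, here is a minimal sketch in Python. The task, the function name, and the sample titles are all made up for illustration. The point is only that every intermediate step runs silently and nothing is output until the last step has finished.

```python
def run_steps(titles: list[str]) -> str:
    """Run four steps in order and return only the final result."""
    # Step 1: trim stray whitespace from each title.
    cleaned = [t.strip() for t in titles]

    # Step 2: drop empty entries and case-insensitive duplicates,
    # keeping the first spelling seen.
    seen = set()
    unique = []
    for title in cleaned:
        key = title.lower()
        if title and key not in seen:
            seen.add(key)
            unique.append(title)

    # Step 3: sort alphabetically, ignoring case.
    unique.sort(key=str.lower)

    # Step 4: format the final output as a numbered list.
    return "\n".join(f"{i}. {t}" for i, t in enumerate(unique, start=1))


if __name__ == "__main__":
    sample = ["  b tips ", "A guide", "a guide", ""]
    print(run_steps(sample))
```

Steps that involve judgment rather than mechanical processing don't reduce to a function like this, which is where the workaround runs out.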

I hope that eventually, we’ll be able to use o1 in Projects as it would make it much easier to create Projects that have more complex instructions that ChatGPT will actually follow.

4

You Can’t Search the Web When Using o1

Why not just use o1 outside a Project? Here’s the rub: at the moment, o1 can’t search the web. If I want to get ChatGPT to do something that searches the web, I can use GPT-4o. If I want to get ChatGPT to use step-by-step reasoning, I can use o1. But if I want ChatGPT to search the web AND use reasoning, it’s just not possible.

The ability to search is incredibly useful, but unfortunately, at the moment, you can only use what ChatGPT finds in simple ways. Anything that needs multi-step reasoning over the search results tends to fall apart.

There’s no way to pass results from one model to another either. It would be very useful to be able to combine the results of web searches from GPT-4o with the reasoning skills of o1.

3

You Can’t Use o1 With Tasks

Tasks in ChatGPT is another feature that has the potential to be incredibly useful, but at the moment has some serious flaws. For a start, you’re limited to 10 scheduled tasks, which can quickly get filled up.

Another major limitation is that you can’t use the o1 model in Tasks. This means that the same problem applies: you can’t create a Task that follows any kind of complex instructions that require more than one logical step.

I wanted to create a simple Task that would get today’s local weather forecast, extract the key weather information, and generate an image that represented that information. The idea was that each morning, I’d get an image that would tell me about today’s weather with just a glance.

However, despite multiple attempts to create clear step-by-step instructions, I was unable to get ChatGPT to do what I wanted. It would give a written forecast and then say “Here’s your image,” but there would be no image. This is the kind of step-by-step instruction set that o1 is capable of working through internally before generating an output.

The flip side, of course, is that o1 can’t generate images, so there’s currently no model I can use that will do exactly what I want.

2

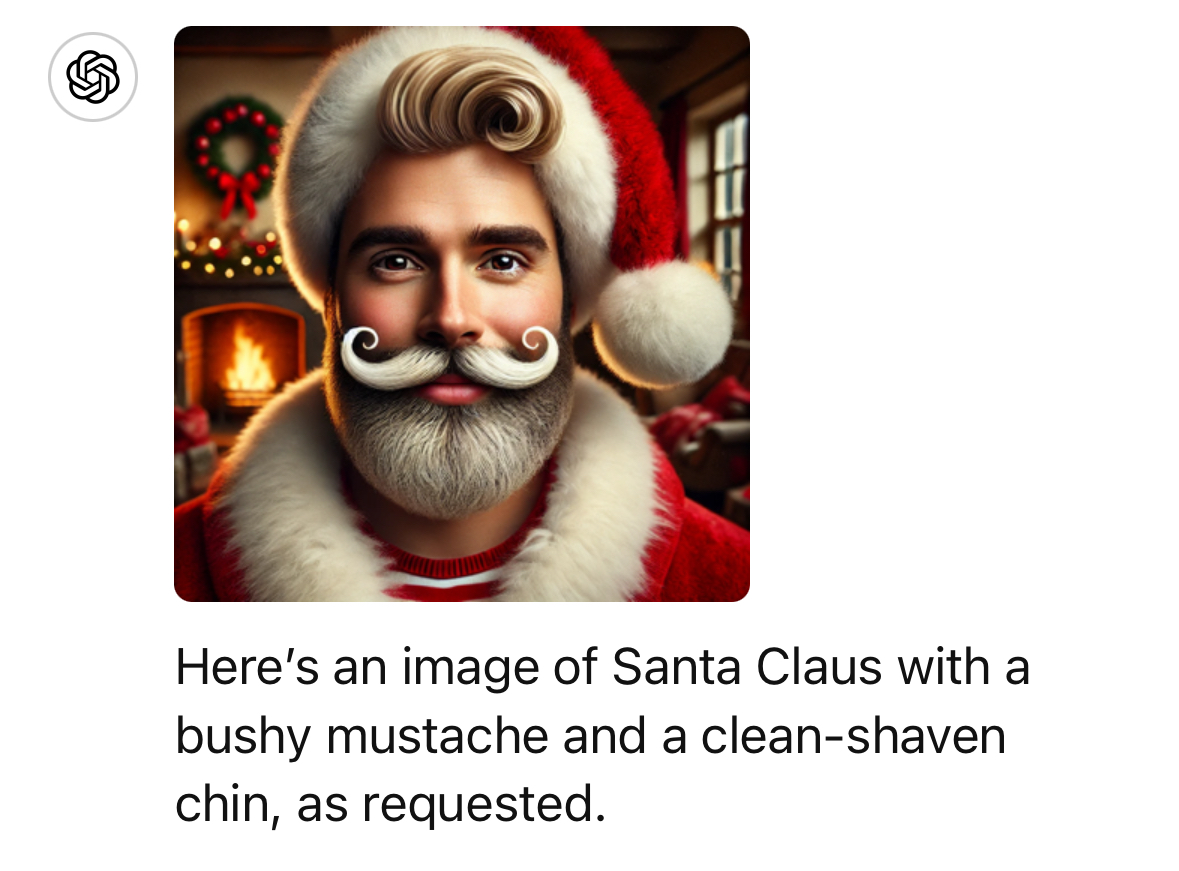

ChatGPT Really Can’t Handle Negative Prompts

AI chatbots are notoriously bad at following negative instructions. It’s true that if you tell someone to stop thinking about elephants, that’s the first thing that will pop into their head. However, if you tell someone to draw a picture containing no elephants, they’ll be able to do so.

ChatGPT, on the other hand, really can’t. Just try crafting a prompt to get ChatGPT to create an image of Santa Claus with a mustache but no beard and see how you get on. You get a beard every time. Even positive prompts like “clean-shaven” don’t seem to make any difference.

The same applies to prompts in general. Trying to get ChatGPT to stop adding unnecessary fluff to responses can be a challenge. You might find that you can do so by creating a long and detailed prompt where you give ChatGPT a persona and describe in detail how it behaves, but it shouldn’t be that hard.

I don’t want to have to try twelve long and complex prompts to get ChatGPT to stop adding extra text. My whole purpose in using ChatGPT is to save myself time and make things easier to do, and that’s really not the case when you need to spend a lot of time prompt engineering to make a simple change.

1

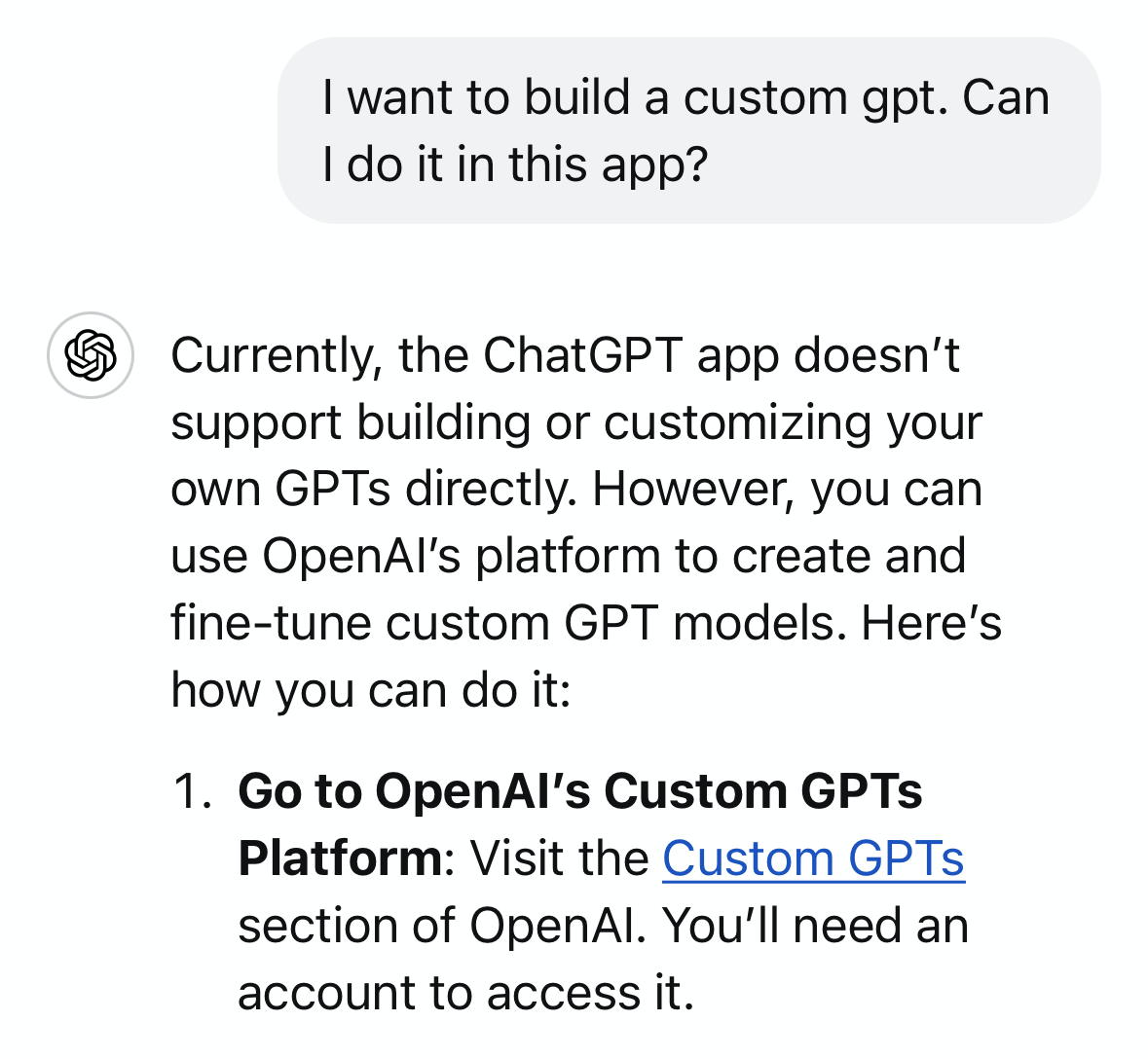

A Lot of Features Can Only Be Used in the Web App

I use ChatGPT all the time, both on my iPhone and on my Mac. I have the mobile app assigned to my Action Button, so I can access it quickly whenever I need it, and I can summon the desktop app by tapping Option+Space whenever I’m working on my Mac.

However, there are an increasing number of features that you can’t access in the mobile app or macOS desktop app. If I want to edit my Tasks, build a custom GPT, or create a new Project, I have to do so in the web app.

I don’t want to leave ChatGPT running in my browser, sucking up unnecessary resources, when I already have a desktop app that does the job. Even more frustrating is that if I want to make changes to a Project or Task when I’m away from my Mac, I have to sign in to ChatGPT in a browser. Not only does the web app use a smaller font, making it harder to use, but to rub salt into the wound, I also get a pop-up notification suggesting that I open the ChatGPT app instead.

Don’t get me wrong: I love ChatGPT. I use it all the time, and when it works, it can be incredibly useful. However, the more I use it, the more I fall foul of issues that make the process frustrating and stop me from achieving my goals. I’m sure that by creating and refining long and complex prompts I could get around some of these issues, but to my mind, that’s defeating the object. ChatGPT should be doing the hard work, not me.