Problem #1: Small LLMs are stupid

Newer open LLMs often brag about big benchmark improvements, and that was certainly the case with DeepSeek-R1, which came close to OpenAI’s o1 in some benchmarks.

But the model you run on your Windows laptop isn’t the same one that’s scoring high marks. It’s a much smaller, more condensed model—and smaller versions of large language models aren’t very smart.

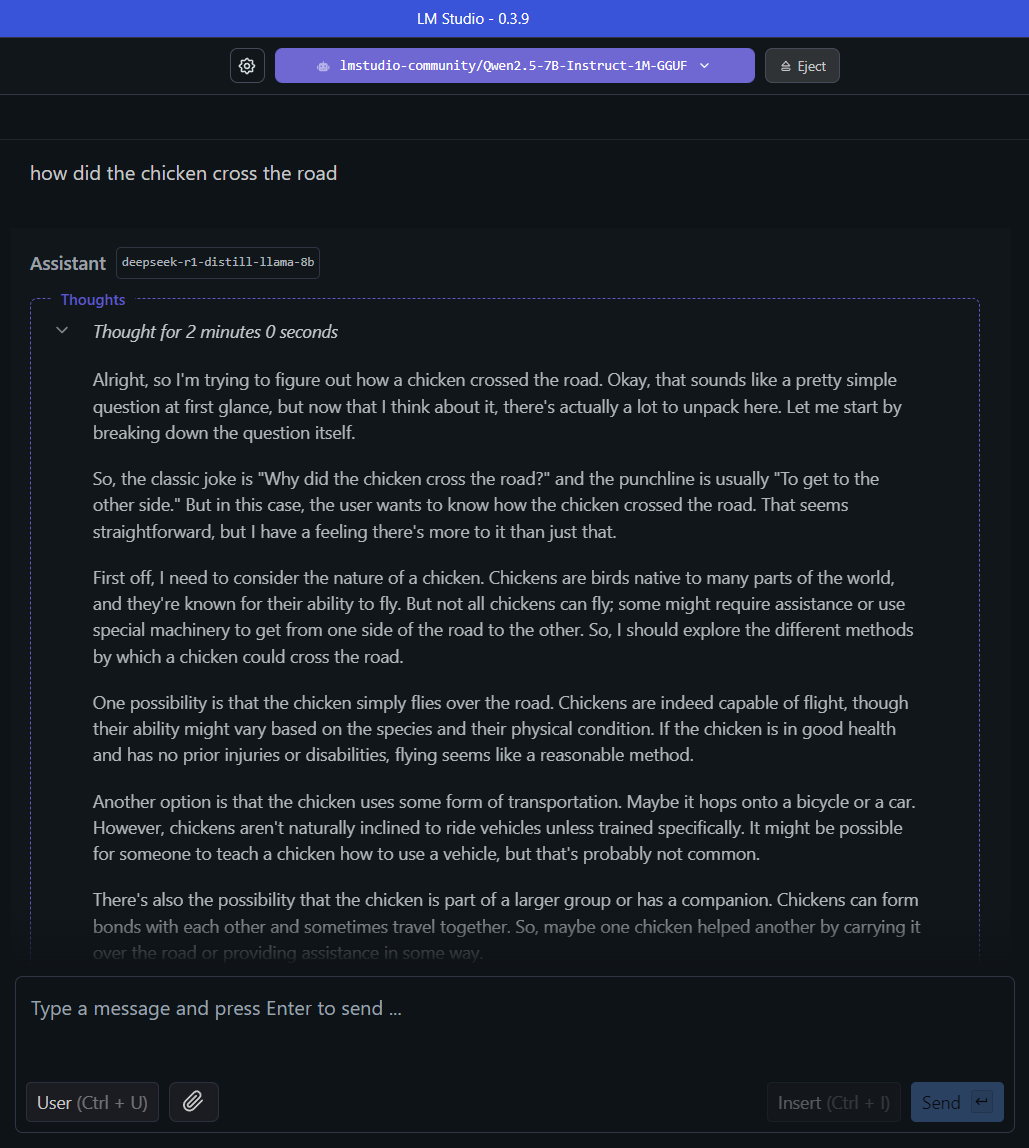

Just look at what happened when I asked DeepSeek-R1-Llama-8B how the chicken crossed the road:

Matt Smith / Foundry

This simple question—and the LLM’s rambling answer—shows how smaller models can easily go off the rails. They frequently fail to notice context or pick up on nuances that should seem obvious.

In fact, recent research suggests that less intelligent large language models with reasoning capabilities are prone to such faults. I recently wrote about the issue of overthinking in AI reasoning models and how it leads to increased computational costs.

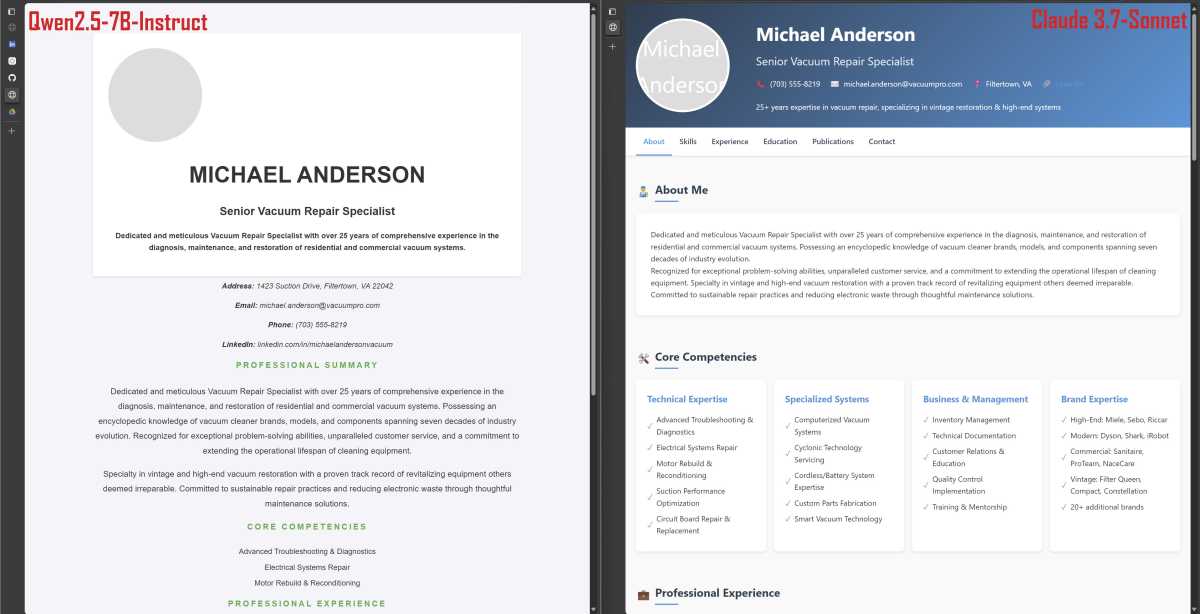

I’ll admit that the chicken example is a silly one. How about we try a more practical task? Like coding a simple website in HTML. I created a fictional resume using Anthropic’s Claude 3.7 Sonnet, then asked Qwen2.5-7B-Instruct to create a HTML website based on the resume.

The results were far from great:

Matt Smith / Foundry

To be fair, it’s better than what I could create if you sat me down at a computer without an internet connection and asked me to code a similar website. Still, I don’t think most people would want to use this resume to represent themselves online.

A larger and smarter model, like Anthropic’s Claude 3.7 Sonnet, can generate a higher-quality website. I could still criticize it, but my issues would be more nuanced and have less to do with glaring flaws. Unlike Qwen’s output, I expect a lot of people would be happy using the website Claude created to represent themselves online.

And, for me, that’s not speculation. That’s actually what happened. Several months ago, I ditched WordPress and switched to a simple HTML website that was coded by Claude 3.5 Sonnet.