Key Takeaways

- Apple’s new AI system, Apple Intelligence, will monitor personal data for a wide variety of assistive and generative tasks.

- Siri will leverage real-time personal data as context for unique assistance functions, such as summarizing text alerts.

- Though Apple has proven its reliability in protecting users’ information, the depth of data access required gives me pause, particularly in a world where many AI systems have suffered attacks and data leaks.

Apple’s impressive array of AI-enhanced features and functions is coming to iOS 18, but the tech depends on unhindered access to a lot of personal data. In a world of security breaches and rampant data collection, that has me worried.

The Promise of Apple Intelligence

“Apple Intelligence will transform so much of what you do with your iPhone,” Craig Federighi, Apple’s Senior Vice President of Software Engineering, said during Apple’s Glowtime event in September 2024. The system is designed to serve as a “personal intelligence system” for iPhone, iPad, and Mac, built on a proprietary large language model that draws on my personal data to operate.

“You’ll be able to rewrite hastily written notes into a polished dinner party invite, adjust the tone of that Slack message to your boss to sound a little more professional, or proofread your latest review on Goodreads before posting it to your fellow bookworms,” Federighi said.

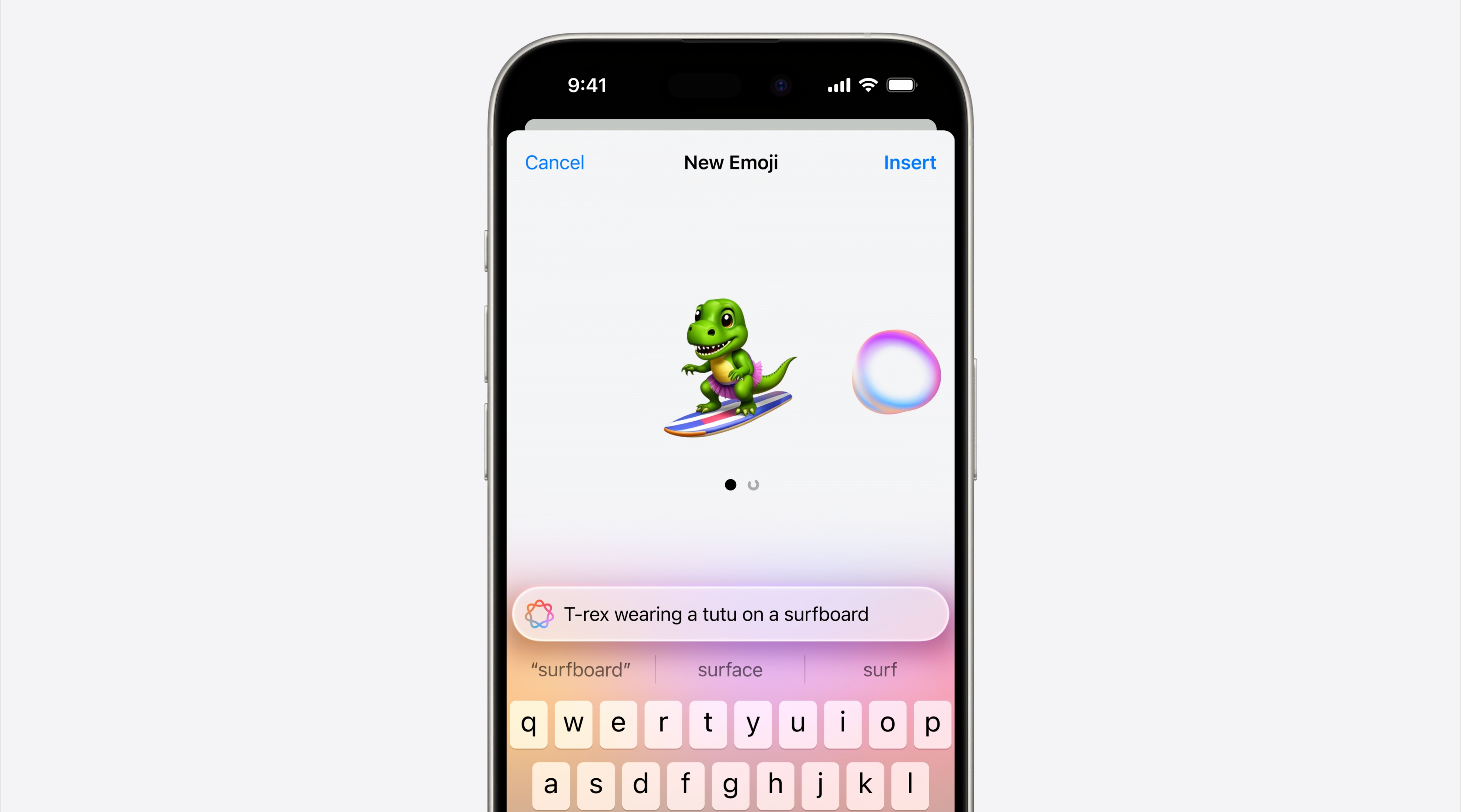

The AI agent will be able to interact with my data across both Apple’s own apps, such as Mail, Notes, Safari, and Pages, and third-party apps, as well as generate images from natural language text prompts. Apple Intelligence is “grounded in your personal information and context, with the ability to retrieve and analyze the most relevant data from across your apps, as well as to reference the content on your screen,” Federighi said during WWDC in June.

It can generate images and custom emoji, find pictures and files using natural language prompts, summarize notifications and emails, and handle a whole slew of other tasks that Apple insists will streamline my day.

I mean, yeah, this stuff is cool and probably the future, but I’m not confident it has proven itself reliable enough yet to warrant giving Apple this degree of access to my data. Where Apple used to simply hold my data on my devices and in iCloud, now it wants to actually do things with it, and that makes me nervous.

Apple Intelligence Wants to Know Everything About Me

Apple is billing Apple Intelligence as an overarching agent for iOS 18, capable of working across apps to streamline my digital life. Thanks to Apple Intelligence, Siri is being reimagined as a more capable assistant. “Siri will be able to tap into your personal context to help you in ways that are unique to you, like pulling up the recommendation for the TV show that your brother sent you last month,” Federighi said.

I’m all for automations that save me time and effort, but I’m not particularly comfortable with hallucination-prone tools that not only monitor my data in real time but also act on it independently.

I’m not concerned that Apple will awaken Skynet or give birth to HAL 9000, mind you; we’re nowhere close enough to AGI to worry about things of that nature. I am wary, however, about the breadth and depth of personal data required to enable these features, as well as how that data is transmitted and handled.

The generative AI industry does not have what you’d call a sterling reputation when it comes to securing user data. OpenAI, which is partnering with Apple to incorporate ChatGPT into Siri’s functionality, has been hacked multiple times since its chatbot’s launch in November 2022, resulting in data leaks.

So too has NVIDIA, which makes the AI industry’s most sought-after GPUs. What’s more, a recent study by HiddenLayer found that 77 percent of business respondents reported breaches to their AI systems within the last year.

My Social Security number is already flapping in the breeze, and I’m in no hurry to have the rest of my personal data join it.

How Apple Protects User Data in the Cloud

To its credit, Apple’s reputation for data security is well-earned. Data breaches are exceedingly rare, and when they have occurred, as with the 2014 iCloud hack, Apple has been quick to shore up its vulnerabilities. For Apple Intelligence, queries are primarily handled on-device, which drastically limits the ways my personal data can leak.

If it’s not being transmitted anywhere, there are very few ways unauthorized parties can access it—outside of physically rooting through my phone, installing malware, or performing side-channel attacks.

The company has also built a massive custom data infrastructure, dubbed Private Cloud Compute (PCC), to handle and protect uploaded user data for queries that can’t be handled on-device. An Apple blog post from June explains that Apple Intelligence assesses whether a given user query can be handled on-device. If it can’t, the AI “can draw on Private Cloud Compute, which will send only the data that is relevant to the task to be processed on Apple Silicon servers.” The data sent to PCC is not stored and cannot be accessed by Apple or, by extension, by law enforcement, hackers, or other malicious actors.

“This data must never be available to anyone other than the user, not even to Apple staff, not even during active processing,” Apple researchers wrote in a study published in June. The data cannot be retained after the query is completed. “In other words, we want a strong form of stateless data processing where personal data leaves no trace in the PCC system.”

The researchers note that, while apps like iMessage can offer end-to-end encryption, PCC cannot, “since Private Cloud Compute needs to be able to access the data in the user’s request to allow a large foundation model to fulfill it.” Instead, they designed the system to be incapable of retaining user data after the query is completed, using “an enforceable guarantee that the data volume is cryptographically erased every time the PCC node’s Secure Enclave Processor reboots.”

“We set out from the beginning with a goal of how can we extend the kinds of privacy guarantees that we’ve established with processing on-device with iPhone to the cloud—that was the mission statement,” Federighi told Wired. “It took breakthroughs on every level to pull this together, but what we’ve done is achieve our goal. I think this sets a new standard for processing in the cloud in the industry.”

No System Is Perfect

That level of confidence is hard to ignore, especially when the system’s privacy guarantees are enforced technically rather than through easily circumvented policy. However, no company is infallible, and certainly no AI model built to date is either.

Even with Apple’s data privacy assurances, I remain skeptical that generating emoji and being reminded of acquaintances’ names (à la Bella Ramsey) provide enough value to warrant my upgrading to the new AI-enabled OS or even buying a new iPhone, but that’s just me.

Ultimately, the decision of whether to embrace Apple Intelligence will depend on your own individual comfort levels with data privacy and the amount of trust you place in Apple’s commitment to protecting that data.