There was never any doubt that public access to sophisticated AI chatbots would have profound effects on society, both positive and negative. It didn’t take long for people to start depending on AI bots for things that perhaps they shouldn’t, and now I’m seeing it spill out on social media.

Whether on Facebook, Instagram, X, or the comment section of online articles, you’ll see people post comments written by AI, or use information provided by AI, and this is something I’m deeply concerned about.

Folks Are Using AI to Win Arguments Online

Whenever I doomscroll X these days, I inevitably see people get into arguments with each other. So far, so normal for the internet. What isn’t so normal is the little electronic prayer for wisdom that now goes out seemingly at least every three or four replies—@grok.

Tagging X’s AI chatbot acts like a prompt and is a quick and easy way to invoke the bot to answer your question. So you might ask whether the above post is true, or whether someone is correct. Alternatively, you’ll see screenshots of chats with bots like ChatGPT and Claude, posted as evidence in support of the points someone is trying to make, or to disprove the points of their interlocutor.

This Means They Don’t Know How Unreliable AI Bots Are

As someone who uses AI chatbots daily, and keeps a close eye on their development and capabilities, I find this appalling. I would never, ever use the information provided by one of these chatbots verbatim as a source for anything. To summarize verified info? Sure. To put together overviews that point me to original sources? Definitely.

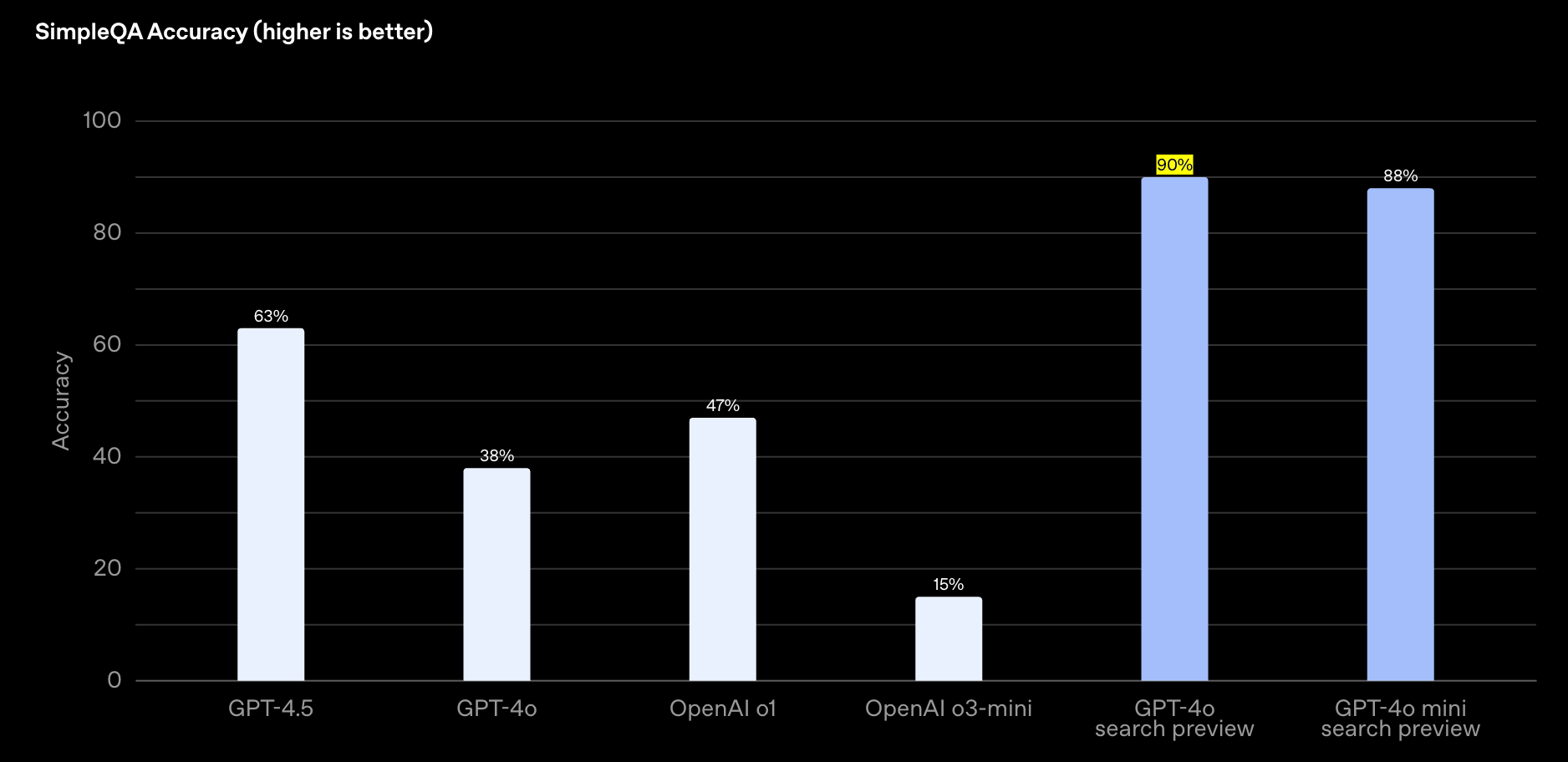

But that’s not what’s happening on social media. I see users on these sites defer to the AI and simply accept whatever it says, even if the information is clearly incorrect on the face of it. Look at this SimpleQA Accuracy benchmark result from OpenAI’s site.

The best result is 90%, for GPT-4o with web access. That sounds good, but I’d certainly check every claim from anything that was wrong 10% of the time! For the models that people are more likely to use, such as GPT-4.5 or 4o, the accuracy scores sit on either side of 50%, at 63% and 38% respectively. Even more relevant, the BBC found that AI chatbots are poor at accurately summarizing and presenting current events. According to the study, 51% of the answers given by the various AI chatbots tested had “significant inaccuracies.”

So the convenience of having the AI quickly reply with information during an online argument is far outweighed by the odds of that information having the accuracy of a coin flip!

Please Don’t Use AI as a Primary Source

Perhaps there will come a day when AI chatbots are as trustworthy as institutions like the Associated Press, but that day is not today, and it’s hard to see it arriving any time soon. Even if these bots were 99% accurate, you’d still have to check any claims they make against a third-party source.
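To put rough numbers on that, here is a minimal sketch of how errors pile up when a single answer contains several factual claims. It rests on two assumptions of mine that the benchmarks don’t promise: that each claim is right or wrong independently of the others, and that a benchmark score can stand in for per-claim accuracy. The function name is hypothetical, and the accuracy figures are the ones quoted above.

```python
def chance_of_at_least_one_error(per_claim_accuracy: float, num_claims: int) -> float:
    """Probability that at least one of num_claims claims is wrong.

    Assumes every claim is independently correct with the same probability,
    which is a simplification rather than a measured property of any chatbot.
    """
    if not 0.0 <= per_claim_accuracy <= 1.0:
        raise ValueError("per_claim_accuracy must be between 0 and 1")
    if num_claims < 0:
        raise ValueError("num_claims must not be negative")
    # All claims are right with probability accuracy ** n; otherwise at least one is wrong.
    return 1.0 - per_claim_accuracy ** num_claims


if __name__ == "__main__":
    # A hypothetical 99% bot plus the accuracy scores quoted earlier in this article.
    for accuracy in (0.99, 0.90, 0.63, 0.38):
        for claims in (1, 5, 20):
            risk = chance_of_at_least_one_error(accuracy, claims)
            print(f"accuracy {accuracy:.0%}, {claims:>2} claims: "
                  f"{risk:.1%} chance of at least one error")
```

Under those assumptions, a 99% accurate bot that makes 20 claims in one answer still has roughly an 18% chance of getting at least one of them wrong, which is why the checking never goes away.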

So I’m practically pleading with you not to use the direct output of a chatbot as ammunition for any sort of online discussion. At best, you’ll only make yourself look silly; at worst, you could spread dangerous misinformation. This was a big enough problem before AI bots became commonplace, so you can imagine how dire the situation is now.

If you use the web-enabled versions of these AI bots, then remember to add a request for sources to your prompt. Then check the sources the AI cites before using that information any further. Don’t feel confident just because the AI provides a source, since these bots can hallucinate website links and source names, or misrepresent what a source says.

A lot of what AI bots produce has the veneer of authority and legitimacy, but if you apply any sort of critical thinking to it, it falls apart quickly.

Push Back Against People Using AI as a Source Online

Even if you don’t use AI as a source in discussions, you’re going to encounter plenty of people online who do. In most cases I suspect they’ll have no idea that these bots are unreliable and that their answers need careful verification. So if anyone tries to counter you with the old “@grok” or with screenshots from ChatGPT, you should push back against it.

Make it clear that AI chatbots aren’t a legitimate source of information and are instead a significant source of misinformation. If people can challenge Wikipedia as a source (which has a transparent editorial talk page anyone can read), then they should absolutely challenge the use of AI in any online discussion.

I understand that it can feel good to tag in an AI teammate to help bolster your points, but in this matchup some of the participants are likely cheating, and you don’t want to be caught up in that.