Dear Chancellor of the Exchequer and Secretary of State for Science, Innovation and Technology,

I was delighted to be asked to lead this review on the future of compute for the UK. Having spent three decades in academia as an AI researcher, and more recently in the technology industry, I have witnessed the transformative potential of compute first hand. Modern compute has given us the ability to simulate and model complex phenomena, and the power to use data-driven machine learning technologies to provide powerful AI tools for society. Compute underpins much of what we do today and our dependence on it will only grow. Much like electricity, rail travel, and the internet, large scale compute is part of the infrastructure of modern life, and its effect on society is hard to measure. Many existing economic sectors depend on compute, and new discoveries that will significantly advance the health and prosperity of society rely on compute.

I have experienced many transformative technological changes in computing throughout my career, but at no time have I felt the immensity of the technological opportunity that we have now. The next decade is sure to bring even more advances that will continue to astonish us. The UK needs to be well prepared to take advantage of these opportunities to sustain growth and cement its position as a Science and Technology Superpower. This review on the future of compute makes specific recommendations to the government that are essential to achieve the UK’s ambition.

Compute can be thought of in three, somewhat overlapping, broad areas. First is compute for AI. AI is absolutely transformative, and depends heavily on compute, with the largest AI models using many exaflops of compute. The UK has great talent in AI with a vibrant start-up ecosystem, but public investment in AI compute is seriously lagging. The economic value of AI is undeniable – the world’s trillion dollar tech companies are all betting heavily on AI. A second area is compute for modelling and simulation, which is used widely across the sciences (e.g. physics, biology, climate) and engineering (design, simulation). Public facilities are essential resources operating at local, national and international scales to enable computations that cannot be achieved at smaller scales. Third, we have cloud computing, often provided by the private sector, which has proven to be a tremendous catalyst for SMEs and has made on demand computing a core part of building efficient and flexible businesses. All three of these types of compute are converging – AI compute can be done on the cloud, HPC access models are becoming more cloud-like, and HPC is supporting more and more AI workloads.

We need several interventions if we want compute to unlock the world-leading high-growth potential of the UK. I would like to highlight a few recommendations here. First, we need a strategic vision, roadmap and national coordination. The UK’s public compute infrastructure is fragmented and we do not currently have a long-term plan. We need a national coordination body to deliver the vision for compute, one that can provide long-term stability and adapt to the rapid pace of change in compute technology. Second, we need to make immediate investments in the path to exascale compute, using a phased approach outlined in this review, so that we are not falling behind our peers. Third, we need to increase capacity for AI research immediately to power the UK’s impressive AI research community and plan for further AI capacity as part of our exascale system.

In approaching this review, we have focused on identifying how the UK can invest in the right technologies, with the right architectures, to meet the needs of all users, broaden access and reap the true benefits of compute. That is why the recommendations of this review should be viewed as a long-term, holistic package that supports the creation of a vibrant ecosystem. This includes investing in domestic skills and attracting and retaining talent as a priority, supporting users at all levels of expertise and increasing awareness. We should collaborate with international partners, such as the US, Japan, and the EU, where we have had longstanding and valuable joint programmes which would be beneficial to retain. Any facilities that we build should be built and operated sustainably, and incorporate best practice in secure computing.

These recommendations are critical for many of the government’s priorities. I recognise the potential difficulty of implementing these recommendations during a time of economic and fiscal challenges. However, the potential growth that compute could unlock across the economy, and within our domestic tech sector, is significant. Indeed, I personally envisage a future compute ecosystem in which the private sector can build upon these investments to be at the forefront of affordable compute provision for all users. This is not the time to limit our future by delaying needed investments.

I have thoroughly enjoyed my time on this review and wish to thank the panel members Sue Daley, Shaheen Sayed, Graham Spittle and Anne Trefethen. Their diverse expertise spanning academia and industry, and their knowledge of compute, has been indispensable. I also wish to thank the review’s Secretariat for their tremendous contribution that went into producing this review.

Sincerely,

Zoubin Ghahramani FRS

Professor, University of Cambridge

Vice President of Research, Google

Introduction from the expert panel

Compute is a material part of modern life. It is among the critical technologies lying behind innovation, economic growth and scientific discoveries. Compute improves our everyday lives. It underpins all the tools, services and information we hold on our handheld devices – from search engines and social media, to streaming services and accurate weather forecasts. This technology may be invisible to the public, but life today would be very different without it.

Sectors across the UK economy, both new and old, are increasingly reliant upon compute. By leveraging the capability that compute provides, businesses of all sizes can extract value from the enormous quantity of data created every day; reduce the cost and time required for research and development (R&D); improve product design; accelerate decision making processes; and increase overall efficiency. Compute also enables advancements in transformative technologies, such as AI, which themselves lead to the creation of value and innovation across the economy. This all translates into higher productivity and profitability for businesses and robust economic growth for the UK as a whole.

Compute powers modelling, simulations, data analysis and scenario planning, and thereby enables researchers to develop new drugs; find new energy sources; discover new materials; mitigate the effects of climate change; and model the spread of pandemics. Compute is required to tackle many of today’s global challenges and brings invaluable benefits to our society.

Compute’s effects on society and the economy have already been and, crucially, will continue to be transformative. The scale of compute capabilities keeps accelerating at pace. The performance of the world’s fastest compute has grown by a factor of 626 since 2010. The compute requirements of the largest machine learning models have grown 10 billion times over the last 10 years. We expect compute demand to grow significantly as compute capability continues to increase. Technology today operates very differently to 10 years ago and, in a decade’s time, it will have changed once again.

Yet, despite compute’s value to the economy and society, the UK lacks a long-term vision for compute. The ecosystem is fragmented and complex for users to navigate. Existing compute capabilities are not fit to serve all users, particularly AI users, and are falling behind those of other advanced economies. As of November 2022, the UK had only a 1.3% share of the global compute capacity and did not have a system in the top 25 of the Top500 list of global systems. Meanwhile, other countries continue to bolster their compute capabilities, with many already testing, building or planning for exascale systems, the next generation of computing technology. Infrastructure investment in the UK is the result of piecemeal procurement and there are no coordinated efforts to mitigate the environmental impact of compute and ensure secure access to infrastructure. A scarcity of compute skills and limited access to future EU systems lead to an uncertain outlook for UK compute.

We were asked, as a panel with expertise in compute, to understand the UK’s compute needs over the next decade; identify cost-effective, future-facing interventions that may be required to ensure research and industry have access to internationally competitive compute; and establish a view of the role of compute in delivering the Integrated Review and securing our status as a Science Superpower this decade.

In line with the report of the Government Office for Science (GO-Science), we define compute or advanced compute as computer systems where processing power, memory, data storage and network are assembled at scale to tackle computational tasks beyond the capabilities of everyday computers. We have taken a long-term approach, considering what actions the government needs to take, now and in the decades to come. Underpinning our work is the belief that compute will unlock growth and innovation across the whole economy and will lead to transformative discoveries, improving the wellbeing of all citizens.

We have considered the broad range of compute users — established adopters and emerging users across academia, industry and the public sector. We have assessed the state of UK infrastructure, explored barriers to access and considered international best practices. We have identified the actions necessary to meet user needs, ensure the UK has cutting-edge compute capabilities and create a strong compute ecosystem.

Targeted intervention is required to fully deliver the value of compute and meet the government’s wider objectives. The extent of the cost and risk associated with computing technology means that private investment on its own will not be able to unleash the full potential of compute. Government action is urgently needed to ensure the UK keeps pace with computing advancements, addresses users’ needs and remains globally competitive. The breadth of users and compute capabilities means there is not a one-size-fits-all approach, and government and industry must work together to ensure success.

This review presents a set of 10 recommendations to the government on how the UK can harness the power of compute to achieve economic growth and address society’s greatest challenges. The government should unlock the world-leading, high-growth potential of compute through the creation of a strategic vision, increased coordination and broader use of compute. It should build world-class, sustainable compute capabilities. This includes investment in an exascale facility through a phased approach, to ensure ecosystem readiness and value for money. The government should also provide the resources necessary to train the largest AI models, support greater access to compute through the cloud and invest in sustainable compute infrastructure at all levels. Lastly, the government must empower the compute community by creating a sustainable skills pipeline, ensuring the security of compute systems and fostering international collaborations.

Crucially, creating a strong compute ecosystem and improving UK compute capability requires long-term action, extending to the next decade and beyond. All 10 recommendations should be implemented as part of a holistic approach to compute, and should be established within the government’s strategic vision. Only by harnessing the benefits of compute can the government realise its ambitions for the UK to sustain economic growth; achieve net zero; secure its status as a science and technology superpower; be a global AI superpower; and build a competitive and innovative digital economy. Compute is required to achieve each and every one of these objectives. Further, compute is closely linked to other core policies, including (but not limited to) digital competition, data, digital skills, semiconductors, quantum technology and sectoral policy.

We recognise that the cost of building world-leading systems is considerable. Even in difficult economic climates, it remains essential to plan for and invest in the future. By acting now, the government will not only ensure the UK remains a prosperous country, but will also deliver invaluable societal benefits. The UK is currently an international technology hub, a leader in research and innovation and hosts world-leading universities. It ranks third globally for investment and innovation in AI and in the development and uptake of advanced digital technologies. To capitalise on and further grow these strengths, the government must ensure the country has the necessary compute resources now, over the next decade and beyond. Inaction will be to the detriment of the UK’s scientific capability, innovative economy and overall international reputation.

We must urgently plan for the future of compute. While the challenges here are great, so are the opportunities. The recommendations outlined in this review aim to ensure the UK is on the path to success.

Professor Zoubin Ghahramani FRS

Vice President of Research at Google and Professor of Information Engineering at the University of Cambridge

Sue Daley

Director of Technology and Innovation, TechUK

Shaheen Sayed

Senior Managing Director, Accenture UK and Ireland

Dr Graham Spittle CBE

Dean of Innovation at Edinburgh University

Professor Anne Trefethen FREng

Pro-Vice Chancellor and Professor of Scientific Computing, University of Oxford

Report outline

The Government Office for Science’s 2021 report on large-scale computing and the Alan Turing Institute and Technopolis’ analysis of the digital research infrastructure requirements for AI have provided an overview of the UK’s compute ecosystem. Both reports have called for strategic planning, greater coordination, continued public investment and collaboration across academia, government and industry. The review builds upon and expands these findings and provides actionable recommendations to create a world-class, cutting-edge compute ecosystem in the UK.

The following chapters present the key elements of the compute ecosystem, illustrating the UK’s position within the international landscape, the drivers of compute demand, the current infrastructure and future requirements and the government’s role in creating a vibrant ecosystem.

Chapter 2, The international landscape of compute, presents compute as an international issue, not just a domestic one. It looks at the UK’s position within the international landscape and illustrates best practices adopted by other countries as part of their compute policy.

Chapter 4, Meeting the UK’s compute needs, outlines the requirements to meet demand for compute in the UK, now and in the future. It looks at what the UK needs across the ecosystem, covering exascale, cloud and AI infrastructure, the importance of software and the environmental impact of compute systems.

Chapter 5, Creating a vibrant compute ecosystem, describes the need for a long-term vision and better coordination. It illustrates how, by addressing these strategic requirements, the UK will be able to create a vibrant compute ecosystem, build a sustainable skills pipeline, ensure secure access to infrastructure, and establish beneficial partnerships.

Recommendations sets out the review’s actions for the government. These outline the need for the government to be ambitious and visionary in its approach to compute, the tangible actions required to bolster the UK’s compute infrastructure and the specific measures that will maximise the value of the UK’s compute ecosystem through empowering the community.

List of recommendations

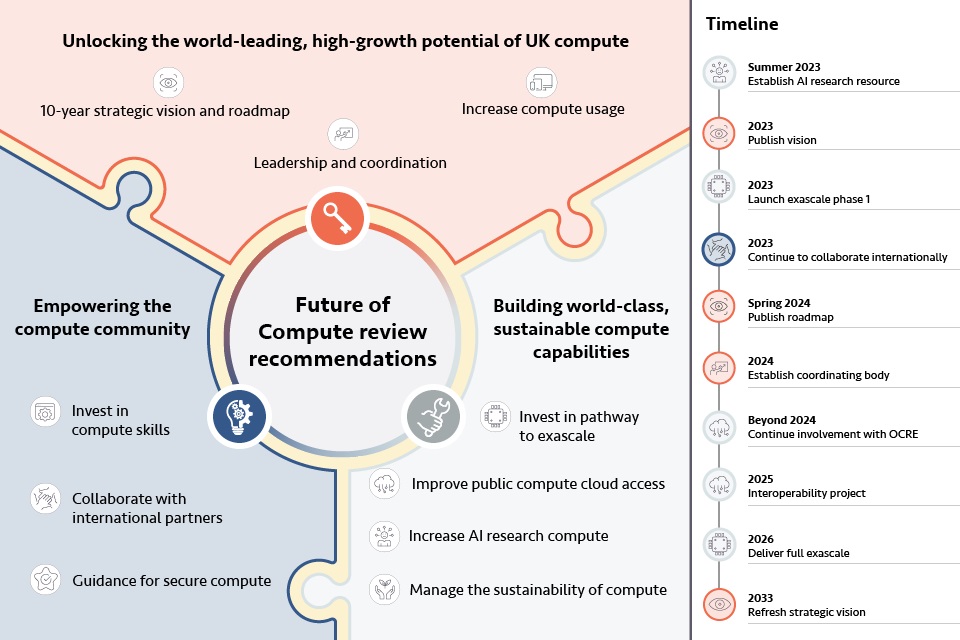

A. Unlock the world-leading, high-growth potential of UK compute

B. Build world-class, sustainable compute capabilities

Proposed timeframe

Summer 2023: Establish AI Research Resource

2023: Launch exascale phase 1

2023: Continue to collaborate internationally

Spring 2024: Publish roadmap

2024: Establish coordinating body

Beyond 2024: Continue involvement with OCRE

2025: Interoperability project

2026: Deliver full exascale

2033: Refresh strategic vision

Glossary of terms

| Term | Definition |
|---|---|
| Accelerator | Specialised hardware for certain computational workloads. |
| Artificial Intelligence (AI) | Machines that perform tasks normally performed by human intelligence, especially when the machines learn from data how to do those tasks. |
| Cloud computing | An access model where computing infrastructure is accessed on-demand via the internet. |
| Commercial cloud | A company that provides computing resources as a service through the cloud. |
| Compute | Computer systems where processing power, memory, data storage and network are assembled at scale to tackle computational tasks beyond the capabilities of everyday computers. The review recognises ‘compute’ as an umbrella term including, but not limited to, advanced compute, high performance computing (HPC), high throughput computing (HTC), large scale computing (LSC) and supercomputing. Cloud computing does not fall entirely under this umbrella, but it is considered as an access model for compute if used for high computational loads. |
| Central Processing Unit (CPU) | The unit within a computer which contains the circuitry necessary to execute software instructions. CPUs are suited to completing a wide variety of workloads, distinguishing them from other types of processing unit (for example, GPUs) which are more specialised. |
| Exascale | A high-performance computing system that is capable of at least one Exaflop per second (a system that can perform more than 10^18 floating point operations per second). |
| Flops | Floating point operations per second (the number of calculations involving rational numbers that a computer can perform per second). |
| Graphics Processing Unit (GPU) | Accelerator hardware optimised for certain calculations often associated with image processing and related applications. Foundational to modern AI advances. |
| Petascale | A high-performance computing system that is capable of at least one Petaflop per second (a system that can perform more than 10^15 floating point operations per second). |

1. The significance of compute for the UK

Key findings

- Compute is foundational to the lives of all citizens, both now and in the future. It impacts social and professional lives, across academic research, government and industry. Investing in compute will bring wide-ranging benefits.

- Compute is essential for achieving the UK’s ambitions for science and technology and economic growth. Compute drives productivity and efficiency and is vital to support technologies such as Artificial Intelligence (AI), which will have a profound impact on the economy.

- The UK is falling behind on compute and the government will need to take substantive action if it is to achieve its ambitions. The UK must have a thriving ecosystem that is fit for the future, delivers the necessary infrastructure and enables access for existing and new users.

1.1 Introduction

Compute plays an integral role in modern life. Compute powers the technologies used today, underpins world class research and drives the economy by enabling businesses to be more productive and develop new products and services. It shapes how individuals live, with compute underpinning services essential to modern life such as communication, travelling, shopping or weather forecasting. It powers smart assistants on handheld devices, search engines and social media. It enables cutting-edge technologies, such as roadworthy autonomous vehicles, that would have been impossible only 15 years ago.

A strong compute ecosystem is integral to delivering the UK’s ambitions around economic growth and its status as a Science and Technology Superpower, today and in the future. The UK is an international leader in AI and will require continued investment into compute to remain at the forefront. Many industries, sectors, products and services already rely on compute, and this reliance is only likely to increase given the pace of scientific and technological innovation. The potential benefits of compute in areas like clean energy and healthcare — to name just two sectors — could have transformative impacts on the economy.

As compute technologies are changing rapidly and investments can be risky and expensive, the market alone will be unable to meet demand. For the UK to remain competitive in research and innovation, academia and industry need to be able to access low cost compute at the times they need it. Investment into the UK’s current facilities has not kept pace with the evolving needs of users, restricting their ability to access compute.

It is vital to support the UK’s compute ecosystem immediately through public investment. This chapter sets out the importance of compute to the UK and the role this technology can play in meeting government ambitions.

1.2 Understanding compute

There is no standardised definition for compute across academia, government and industry. For the purposes of this review, compute is defined as follows:

‘Compute’ or ‘advanced compute’ refers to computer systems where processing power, memory, data storage and network are assembled at scale to tackle computational tasks beyond the capabilities of everyday computers.

The review recognises ‘compute’ as an umbrella term including, but not limited to, advanced compute, high performance computing (HPC), high throughput computing (HTC), large scale computing (LSC) and supercomputing. Cloud computing does not fall entirely under this umbrella, but it is considered as an access model for compute if used for high computational loads. There are numerous technologies associated with compute, from different types of accelerators (e.g. Graphics and Tensor Processing Units) and distributed computing, to emerging and novel paradigms (e.g. neuromorphic and quantum computing). The review does not explore these technologies in detail, but recognises that future strategic decisions for compute should consider how they interact.

Whilst compute, or the process of computation, occurs as data calculations on a processor, it relies on much more: the software that controls the system and instructs the processor; the other hardware described in the definition above; skilled operators; and access to quality data.

Software, hardware and skills have been considered in detail as part of this review. Data is a broad topic, and beyond the remit of this review, but it should be recognised that compute is only possible with access to quality and secure datasets. This will become increasingly vital to unlock the benefits of AI and machine learning techniques.

Unlocking the power of data

21st century economies will be defined by new scientific and technological developments in AI, quantum technologies and robotics. To be world class in these areas, the UK needs to be world-class in its approach to data sharing.

The National Data Strategy, specifically Mission 1 (‘Unlocking the value of data across the economy’), is a central part of the government’s wider ambition for a thriving economy. For the strategy’s objectives to be realised, organisations must be equipped to access, interpret and use data effectively. Compute is the technology that enables organisations to make sense of data and thus benefit from it.

Higher compute uptake and the delivery of Mission 1 are therefore closely intertwined. Unlocking the significant opportunities from data access and data use through compute, and vice versa, will be critical to keeping the UK at the forefront of science, research, innovation and technological development, and to creating a world-class digital economy.

The scale of compute

One way to measure computational power is in ‘flops’ — floating point operations per second. A floating point operation is roughly equivalent to a single arithmetic calculation (addition, subtraction, multiplication or division) involving two numbers, and can be done at varying levels of precision.

While a leading smartphone today is capable of 10^12 flops (a trillion flops or a ‘teraflop’ per second), compute reaches beyond 10^15 flops (a thousand trillion flops or a ‘petaflop’ per second). The most powerful system, as defined by the Top500 list, is the USA’s exascale system Frontier. It is more than an order of magnitude more powerful, operating at 10^18 flops, which is a million trillion flops (an ‘exaflop’ per second).
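To make these scales concrete, the short sketch below compares the three orders of magnitude quoted above. It is illustrative arithmetic only: the constant and function names are assumptions introduced here, and real systems rarely sustain their peak rate.

```python
# Orders of magnitude quoted in the text, in floating point operations per second.
TERA = 10**12  # a leading smartphone
PETA = 10**15  # the threshold the text gives for 'compute'
EXA = 10**18   # an exascale system such as Frontier


def seconds_to_match(faster_flops: int, slower_flops: int) -> float:
    """Seconds the slower system needs to do what the faster one does in one second."""
    return faster_flops / slower_flops


# Hypothetical comparison at peak rates; sustained performance is lower in practice.
phone_seconds = seconds_to_match(EXA, TERA)
phone_days = phone_seconds / 86_400  # 86,400 seconds in a day

print(f"Exascale is {EXA // PETA:,}x petascale and {EXA // TERA:,}x a smartphone")
print(f"One exascale-second of work takes a smartphone about {phone_days:.1f} days")
```

On these idealised numbers, a single second of work on an exascale system corresponds to roughly eleven and a half days on a teraflop-class device.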

The performance of the world’s fastest compute has grown by a factor of 626 since 2010, while the compute requirements of the largest machine learning models have grown by a factor of 10 billion in the same timeframe. According to OpenAI, computational demand to train AI is currently doubling every 3-4 months. Their 2018 analysis focuses on the ‘modern era’, which starts in 2012 with AlexNet, a neural network, and describes the AI models that have led to breakthroughs in vision and language learning unimaginable only a decade ago.
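The growth factors above can be converted into implied doubling times, which makes the two trends easier to compare. The sketch below assumes steady exponential growth, reads 'since 2010' as a 12-year window, and uses a 10-year window for the machine learning figure, as stated in the panel's introduction. These windows and the function name are assumptions made for illustration.

```python
import math


def doubling_time_months(growth_factor: float, years: float) -> float:
    """Doubling time, in months, implied by steady exponential growth over `years`."""
    doublings = math.log2(growth_factor)
    return years * 12 / doublings


# Assumption: 'since 2010' is treated as 12 years (2010 to 2022).
hardware_months = doubling_time_months(626, years=12)

# Assumption: the 10-billion-fold growth is spread over 10 years.
ml_months = doubling_time_months(1e10, years=10)

print(f"Fastest systems: doubling roughly every {hardware_months:.1f} months")
print(f"Largest ML models: doubling roughly every {ml_months:.1f} months")
```

Under these assumptions the hardware figure implies a doubling time of a little over 15 months, while the machine learning figure implies a doubling time of between 3 and 4 months, in line with the OpenAI estimate quoted above.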

The power of more compute

More compute and the resulting increase in model resolution have significant implications for research and innovation. This was particularly evident during the coronavirus (COVID-19) pandemic.

ARCHER, the UK’s most powerful public system in 2020 and predecessor to ARCHER2, could only model parts of the COVID-19 virus. According to evidence gathered by the review, this increased the time to find binding sites in support of vaccine development. Comparatively, Japan’s Fugaku — launched ahead of schedule in 2020 to provide researchers with compute for intensive COVID-19 research and about 100 times more powerful than ARCHER — was used to simulate how COVID-19 might spread from person to person via aerosolized droplets. This had a significant impact on many governments’ policies, particularly with regards to the role of face masks. The Japanese team that conducted the study was awarded a Gordon Bell Special Prize, a recognition of the impact of their research.

1.3 Achieving the UK’s ambitions through compute

The Prime Minister has committed to building a more prosperous future and growing the economy, and the UK has bold ambitions across research, technology and innovation. Compute underpins or supports several government priorities: it powers innovation and productivity across the economy; it is essential for cutting-edge scientific research; it drives the development of transformative technologies such as AI, new materials and quantum computing; and it helps ensure national security.

Compute underpins government ambitions, including:

- the Plan for Growth and Innovation Strategy, which set out the government’s plans to support economic growth through innovation

- the Integrated Review, which outlines the need to deliver prosperity and security at home and abroad, and shape the open international order of the future

- the UK Digital Strategy, which sets out the government’s vision for harnessing digital transformation and building a more inclusive, competitive and innovative digital economy

- the National AI Strategy, which outlines the ten year plan to make Britain a global AI superpower, and the upcoming white paper on regulation

- the National Data Strategy, which sets out the UK’s vision to harness the power of responsible data use to boost productivity; create new businesses and jobs; improve public services; support a fairer society; and drive scientific discovery

- the Net Zero Strategy, which sets out policies and proposals for decarbonising all sectors of the UK economy to meet the UK’s net zero target by 2050

- the National Cyber Strategy, which outlines the government’s approach to protecting and promoting the UK’s interests in cyberspace and ensures that the UK continues to be a leading responsible and democratic cyber power

- the upcoming National Quantum Strategy, which will set out a ten-year vision for the UK to be a leading quantum enabled economy

- the upcoming Semiconductor Strategy, which will set out how the UK can best protect and grow its domestic industry, secure greater supply chain resilience and consider the security implications of this vital technology

These ambitions simply cannot be achieved without access to world-leading compute capabilities. The UK is currently a global science and technology superpower, but, without a thriving compute ecosystem, the UK’s technological, scientific and economic capability is at risk. As the recommendations of this review are taken forward, it is vital to ensure greater coordination among government strategies and policies that relate to compute.

Compute for a more prosperous future

Compute has the potential to unlock productivity as sectors across the economy make better and more extensive use of data analysis, simulation and AI technologies. Compute is an enabler of large parts of the UK’s digital sector, which itself is one of the fastest growing industries, contributing nearly £140 billion to the economy in 2021.[footnote 4] Research has demonstrated the return on compute investment in the US across different sectors, with insurance, oil and gas and financial sectors realising the greatest returns in terms of profit and cost savings.[footnote 5]

The size of the UK HPC market has grown steadily over recent years and is expected to grow significantly over the next five years, with forecasts indicating a compound annual growth rate (CAGR) of up to 11%.[footnote 6] Internal analysis by the Department for Science, Innovation and Technology indicates that demand projections for cloud computing and HPC could be up to 1.8 and 2.7 times larger by 2032 than in 2022 respectively. Whilst HPC and cloud computing are an incomplete representation of the compute market, they are the best available information and a strong indicator of future trends.
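The growth multiples and the CAGR quoted above can be related with simple compounding arithmetic. The sketch below is illustrative only: it assumes smooth year-on-year compounding, and the function names are introduced here rather than taken from the underlying analysis.

```python
def implied_cagr(multiple: float, years: int) -> float:
    """Constant annual growth rate that produces `multiple` after `years`."""
    return multiple ** (1 / years) - 1


def growth_multiple(cagr: float, years: int) -> float:
    """Total growth multiple after compounding `cagr` for `years`."""
    return (1 + cagr) ** years


# Demand multiples for 2032 relative to 2022 (10 years), as quoted in the text.
cloud_cagr = implied_cagr(1.8, years=10)
hpc_cagr = implied_cagr(2.7, years=10)

# An 11% CAGR sustained over the five-year forecast window.
five_year_multiple = growth_multiple(0.11, years=5)

print(f"Cloud demand: about {cloud_cagr:.1%} per year")
print(f"HPC demand: about {hpc_cagr:.1%} per year")
print(f"11% CAGR over 5 years: about {five_year_multiple:.2f}x")
```

Read this way, the upper-end HPC demand projection corresponds to annual growth of a little over 10%, which is of the same order as the market forecast of up to 11%.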

Figure 1A illustrates the significant potential benefits of compute based on economic analysis carried out to support recent public and private investments. These include improvements to the scale, efficiency and quality of research, increased productivity in the private sector and greater international collaborations. For example, the potential benefit range for Engineering and Physical Sciences Research Council (EPSRC) investment is already very high, but their analysis suggests the additional spillover benefits may be significant too, estimated to be £5.8 billion.[footnote 7]

Economic analysis of recently funded compute systems

There are substantial benefits to investing in compute. Three examples of investments into UK compute infrastructure demonstrate the economic impacts to the UK economy.

HPC investments by EPSRC cost £466 million and are anticipated to lead to benefits of between £3 billion and £9.1 billion to the UK economy.[footnote 8] This results in a benefit cost ratio of 6.5 to 19.5. This includes spillover impact of HPC research on UK output, contributions of industry impact and also those benefiting from training and skills development.

The Met Office invested £1.2 billion on a 10-year supercomputer and the social value is expected to be £13.74 billion with a benefit cost ratio of 9.[footnote 9] The winning tenderer, Microsoft, has also committed to invest £45 million in UK skills development and to reduce the UK’s carbon impacts.

Finally, $100 million was invested into Cambridge-1, with estimated potential benefits of around $825 million over the next 10 years, a benefit cost ratio of 12.1.[footnote 10]
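A benefit cost ratio is, at its simplest, expected benefits divided by costs. The sketch below applies that plain division to the EPSRC figures quoted above and approximately reproduces the stated range. It is a simplification: the function name is introduced here, and formal appraisals may use discounted or adjusted values that are not given in the text, so the ratios quoted for the other two investments are not recomputed.

```python
def benefit_cost_ratio(benefit_gbp_m: float, cost_gbp_m: float) -> float:
    """Undiscounted benefit cost ratio: benefits divided by costs (same units)."""
    return benefit_gbp_m / cost_gbp_m


# EPSRC HPC investment figures from the text, in millions of pounds.
EPSRC_COST = 466
EPSRC_BENEFIT_LOW = 3_000
EPSRC_BENEFIT_HIGH = 9_100

bcr_low = benefit_cost_ratio(EPSRC_BENEFIT_LOW, EPSRC_COST)
bcr_high = benefit_cost_ratio(EPSRC_BENEFIT_HIGH, EPSRC_COST)

print(f"EPSRC benefit cost ratio: about {bcr_low:.1f} to {bcr_high:.1f}")
```

The small difference from the published lower bound of 6.5 is consistent with rounding in the headline cost and benefit figures.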

Figure 1A: Economic analysis of recently funded compute systems
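In its simplest form, a benefit cost ratio is the estimated benefit divided by the cost. The sketch below applies that undiscounted division to the EPSRC figures quoted above. It is an illustration only: the function name is hypothetical, and the inputs are the rounded values from the text. The Met Office and Cambridge-1 ratios are not recomputed here because the text does not state the appraisal basis behind them (for example discounting or currency conversion).

```python
def benefit_cost_ratio(benefit_millions: float, cost_millions: float) -> float:
    """Simple undiscounted ratio of benefits to costs (both in millions of pounds)."""
    return benefit_millions / cost_millions


# EPSRC HPC investments: cost of £466 million, benefits of £3 billion to £9.1 billion.
epsrc_cost = 466
low = benefit_cost_ratio(3_000, epsrc_cost)
high = benefit_cost_ratio(9_100, epsrc_cost)

print(f"EPSRC benefit cost ratio: {low:.1f} to {high:.1f}")
```

With these rounded inputs the range comes out at about 6.4 to 19.5, close to the published 6.5 to 19.5. The small difference at the lower end presumably reflects rounding of the £3 billion figure.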

There is also evidence that increased business uptake of compute can lead to regional growth and development. Regional programmes such as LCR 4.0 and Cheshire & Warrington 4.0 provide support to UK SMEs and supply chains in the adoption of compute and AI technologies.[footnote 11] LCR 4.0 provided over 300 manufacturing SMEs in the Liverpool City Region (LCR) with technical and business support. Approximately 50% of businesses were supported with access to compute.[footnote 12] The project is on target to create 955 jobs within the Liverpool City Region and generate £31 million GVA, though this cannot all be attributed to the use of compute.

Evidence collected by the review revealed examples of the positive impact of compute on business productivity, with spillover effects throughout the value chain. As use cases for compute increase, it can be expected that efficiency gains and productivity benefits across the economy will also grow – but only if users can exploit compute capabilities fully.

Compute for AI

The National AI Strategy describes AI as a general-purpose technology and sets out the government’s ambitions to be a global AI superpower. Compute-intensive AI-augmented R&D has the potential to increase the rate of productivity growth significantly – by a factor of 2 compared to average rates in the last 75 years or a factor of 3 since 2000.[footnote 13]

Progress at the frontier of AI has been rapid. For example, London-based DeepMind has used AI to predict how proteins take shape, cutting cancer drug discovery times from years to under a month. AI models such as ChatGPT and Stable Diffusion can mimic human abilities in language, reasoning and drawing. Fundamental advances in AI such as these come with huge commercial potential and are permeating throughout businesses and the wider economy.

Richard Sutton, a leading AI professor, determined that the biggest lesson of 70 years of AI research is that the most effective methods, by a large margin, are those that leverage and scale with compute. Training models requires massive investment in compute, with usage at the frontier doubling every six months. UK researchers must have access to sufficient specialised accelerator-driven compute to operate at the frontier of AI research and realise the social and economic benefits of AI.

Compute for scientific advantage and societal goals

The importance of computation for scientific research is huge and, to a large degree, immeasurable. The creation and analysis of large amounts of data is crucial for the development of science, the outcomes of which are difficult to foresee.

Figure 1B: The scale of the challenge — applications for compute

As outlined in Figure 1B, compute is required to tackle many of today’s global challenges and to unlock breakthroughs across several disciplines and sectors. For example:

- Our climate, weather and earth: Earth observation data and climate modelling for more accurate weather forecasts and to understand the impacts and tipping points of climate change.

- Our universe: Simulate phenomena like gravitational waves and dark matter to develop our fundamental understanding.

- Our biology: Simulate how our bodies work and the functions of our genes to develop personalised medicine; use AI to search through endless data to match illness and potential drug treatments.

- Engineering and materials: Simulate jet engines and plasma in a fusion reactor to engineer a low carbon future, and discover new materials with AI.

- Our society and culture: Use insights and trends to create solutions to improve society.

There is huge potential for compute to solve research and social challenges quicker than ever before — it took 13 years to first sequence a human genome; compute can now do this in less than a day.

Compute for weather and climate predictions[footnote 15]

Every weather forecast and climate prediction that the UK Met Office makes today is generated through compute. Weather modelling starts with raw observations. The Met Office takes in 215 billion weather observations from all over the world each day, consisting of temperature, pressure, wind speed and direction, humidity and other atmospheric variables.

The Cray XC40 supercomputing system is the latest in a long history of compute technology used by the Met Office. Completed in 2016, it consists of three separate machines that are capable of 14 petaflops. This compute capability is essential for the scale and complexity of the weather and climate models run by the Met Office. To reflect increasing data availability and demand for more local and accurate forecasting, the Met Office is investing in the next generation of compute.

Weather forecasting helps the UK with everything from routing flights and shipping, to managing the provision of key utilities such as energy and water. Climate prediction enables the UK to identify, and plan effectively how to mitigate, the impacts of climate change. This means that, by the end of its life, the Met Office’s current supercomputing system is predicted to have enabled an additional £1.6 billion of socio-economic benefits across the UK.

Using compute and AI to develop fusion technologies for a low carbon future[footnote 16]

Realising the UK’s ambition for fusion to provide a carbon-free energy supply early in the 2040s will require a radical transformation of the engineering process. Fusion power plants must be designed ‘in-silico’. A collaboration between the Science and Technology Facilities Council (STFC) Hartree Centre and the UK Atomic Energy Authority (UKAEA) is applying the latest supercomputing and data science expertise to accelerate developments in fusion energy, across an environment increasingly being referred to as the Industrial Metaverse.

Such work is seeding the development of a whole new industrial sector – the pioneering SMEs, universities and engineering giants that will deliver and commercialise fusion. Worldwide this has attracted $5 billion in venture capital. In the era of net zero, this is a race, a necessity and a tremendous economic opportunity for the UK.

For this fledgling sector to thrive, new infrastructure is needed, powered by extreme scale computing and AI. The fusion process, whether relying upon powerful lasers or magnetic confinement of a plasma ten times hotter than the core of the sun, has long been referred to as an ‘exascale’ grand challenge. From modelling the turbulence that causes heat to leak from the plasma core, to simulating the materials needed to build a robust powerplant that can tolerate such an extreme environment, systems must be designed using new, state-of-the-art tools. These include simulation at the exascale, advanced methods in uncertainty quantification and algorithms built upon the convergence of AI and HPC – all made possible due to recent, disruptive developments in compute technology and machine learning. Many of the methods being developed by the UKAEA and the Hartree Centre will translate into other science and industry sectors, improving UK skills and capability growth.

1.4 UK compute today

To understand the UK’s requirements for compute capability over the next decade, consideration must be given to the current landscape. The GO-Science report offers a comprehensive overview of the compute ecosystem and the interdependence of hardware, software and skills. This review builds on that analysis, showing that the UK’s compute capability lags internationally and that users face challenges in accessing the resources they require.

Public compute systems are often categorised into tiers based on capability. UK public compute and commercial cloud facilities have been outlined in Figure 1C. UK Research and Innovation (UKRI) operates tier 1 and 2 systems, providing national and regional compute capability in the UK with universities providing local tier 3 systems. The UK also has limited access to some international pre-exascale and exascale systems. Commercial cloud offers additional resource to UK researchers. The following chapters explore how the UK’s current fragmented landscape does not meet user needs and fails to provide clarity on future provision.

Figure 1C: UK public compute and commercial cloud facilities

1.5 Policy implications

There is a clear strategic case for investing in compute to achieve the UK’s economic, scientific and societal ambitions. This is founded upon four principal factors: compute will produce significant economic and social benefits; compute is essential to achieve UK ambitions; the market alone will not provide all the necessary compute supply; and UK compute capability is falling behind internationally.

Commissioning this review signalled the government’s interest in giving strategic consideration to compute policy and investment. However, investment into compute infrastructure needs to be combined with long-term strategic planning across the whole ecosystem to maximise the benefits of compute uptake for all users. Government must work with industry, academia and the public sector to succeed.

Action required: Unlock the world-leading, high-growth potential of UK compute

Compute is critical for achieving the government’s ambitions in the long and short term. These include: creating a more prosperous future through economic growth and productivity; securing the UK’s status as a global Science and Technology Superpower; and being a world leader in AI. The UK cannot achieve these goals without having a compute ecosystem and infrastructure that is fit for the future. Action is needed to unlock the world-leading, high-growth potential of UK compute and ensure the UK remains competitive internationally.

2. The international landscape of compute

Key findings

- Internationally, compute is recognised as a strategic resource required to be competitive in global science and innovation. Other countries are moving ahead of the UK through strategic planning and public investment into compute resources, which translate into world-class scientific outputs and innovation.

- To secure the UK’s status as a global Science and Technology Superpower by 2030 and to be at the forefront of shaping international thinking around digital innovation, a strong domestic compute ecosystem and enhanced compute capability are required. The UK needs to act now to catch up with competitors and to realise its international, scientific and tech ambitions.

2.1 Introduction

As noted in Chapter 1, compute is critical to understanding and tackling many domestic and global challenges. Internationally, the role of compute as an essential enabler of global innovation and cutting-edge science, and as a critical component of any strong digital economy, is widely recognised. This is reflected in the long-term, strategic approach that other countries have adopted, as well as their commitment to public investment into compute.

However, despite compute’s strategic importance, the UK is falling behind. In 2022, the US broke the exaflop barrier with their Frontier system. In comparison, the UK’s most powerful public system, ARCHER2, is 56 times less powerful. The UK has a long and proud tradition of computer science and cutting-edge research, with world-class strengths in areas including innovation, software development and machine learning. However, to maximise its expertise and ensure it remains at the forefront of global competitiveness, technological innovation, and scientific discoveries, the UK needs an internationally competitive compute ecosystem. Therefore, investment into and use of compute systems should be looked at through a global lens.

This chapter explores the international compute landscape, the UK’s position within it and best practices adopted internationally to build a vibrant compute ecosystem.

2.2 International compute capability

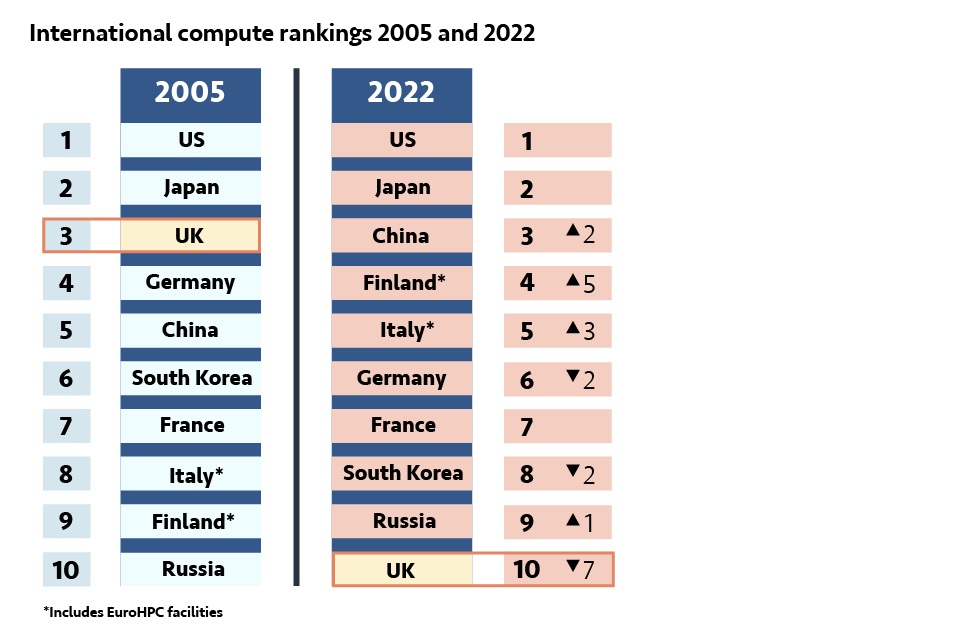

While other countries have continued to invest in compute, Figure 2A shows the UK has lost ground since 2005, a time when the UK and Japan were peers and only the US had greater compute capability. As of November 2022, the UK was trailing in compute capability (Rmax), ranking 10th globally. The EU’s EuroHPC programme is further driving other countries’ compute capability ahead of the UK’s, starting with pre-exascale systems in Finland and Italy.

Figure 2A: Growth in total compute performance between 2005 and 2022[footnote 17]

Countries are also investing in AI compute. In addition to its national research cloud, Canada funds dedicated computing resources of hundreds of top-spec GPUs for each of its three national AI institutes; the US are looking to establish a National AI Research Resource; France has extended its Jean Zay system to include accelerators; and Italy hosts the latest EuroHPC system Leonardo with 10 exaflops of AI performance. In comparison, the UK does not have a dedicated AI compute resource and has a limited number of accelerators in national systems.

Advanced economies are investing in next generation infrastructure and exascale capability, threatening to widen the gap with the UK further. Figure 2B shows the most powerful compute systems in the world. The US and China have already deployed exascale infrastructure, with multiple systems expected to be operational by 2025. The US are now looking further ahead to systems 5-10 times as powerful as Frontier, with at least one of these planned for 2025-2030.[footnote 19] The EU’s EuroHPC programme is deploying three pre-exascale systems (in Finland, Italy and Spain), and will deploy its first exascale system in Germany in 2024. Japan’s Fugaku marked the transition to exascale in 2020 with the first multi-hundred petaflop system and it is paving the way for exaflop-capable infrastructure. Australia and Singapore have teamed up to explore collaboration in the area of exascale, including capability development and readiness of underlying infrastructure.

Figure 2B: Most powerful compute systems by location (November 2022)[footnote 20]

| Location | System | Maximal achieved performance (Rmax, petaflops) | Installation year | TOP500 rank |
|---|---|---|---|---|
| USA | Frontier | 1,102 | 2021 | 1 |
| Japan | Fugaku | 442 | 2020 | 2 |
| Finland* | LUMI | 309 | 2022 | 3 |
| Italy* | Leonardo | 175 | 2022 | 4 |
| USA | Summit | 149 | 2018 | 5 |
| UK | ARCHER2 | 20 | 2021 | 28 |

*EuroHPC facilities
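The gap between the UK and the leading systems in Figure 2B can be expressed as a simple ratio of Rmax values. The sketch below uses the rounded petaflop figures from the table; the variable names are illustrative and not part of the review.

```python
# Rmax values in petaflops, as rounded in Figure 2B (November 2022).
rmax_petaflops = {
    "Frontier": 1102,
    "Fugaku": 442,
    "LUMI": 309,
    "Leonardo": 175,
    "Summit": 149,
    "ARCHER2": 20,
}

# Express every system as a multiple of the UK's most powerful public system.
uk_rmax = rmax_petaflops["ARCHER2"]
ratios = {name: rmax / uk_rmax for name, rmax in rmax_petaflops.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x ARCHER2")
```

With the rounded table values, Frontier comes out at about 55 times ARCHER2. The factor of 56 quoted in Section 2.1 is consistent with an unrounded ARCHER2 figure slightly below 20 petaflops.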

UK researchers currently have access to EuroHPC systems such as LUMI and are able to pursue partnerships with European colleagues to increase the likelihood of successful allocation. However, UK researchers will not have direct access to future EU systems such as Jupiter, the EU’s first exascale system scheduled for 2023/24. Indirect access might be possible through negotiation.

2.3 The impact of a robust compute capability

A robust compute capability, paired with a healthy ecosystem that maximises the investment in infrastructure, leads to world-leading results in science, technology and innovation. For instance, Frontier will further integrate AI applications with modelling and simulation, enabling the US to achieve advances in medicine, astrophysics, bioinformatics, cosmology, climate, physics and nuclear energy. As the US continues to develop its compute capability over the decade, it will be able to tackle computationally intensive problems, such as complex physical systems or those with high fidelity requirements.[footnote 21]

Case study: Frontier powering medical research

Frontier’s exascale capability was leveraged by researchers at the US Department of Energy’s Oak Ridge National Laboratory to conduct a study that could result in health-related breakthroughs. Researchers used Frontier to perform a large-scale scan of biomedical literature in order to find potential links among symptoms, diseases, conditions and treatments, understanding connections between different conditions and potentially leading to new treatments. By using Frontier, scanning time was significantly reduced, with the system able to search more than 7 million data points from 18 million medical publications in only 11.7 minutes. The study identified four sets of paths that will need further investigation through clinical trials.

World-changing scientific discoveries powered by world-class compute infrastructure enhance a country’s international standing in science and technology. The UK’s strengths in computing (especially in software development, machine learning and, increasingly, green computing) and in science and research more broadly are internationally recognised. The fact that UK researchers are able to access the world’s most powerful systems on the basis of their projects’ scientific merit shows this. Evidence gathered by this review shows that, since 2010, UK researchers have been included in 5% of the projects supported by the US’ INCITE programme (which supports large-scale scientific and engineering projects by providing access to compute resources), with the majority having a UK-based Principal Investigator. The same evidence shows that UK researchers were included in 20% of the projects supported by the EU’s PRACE programme since 2010.

However, a lack of a strong domestic compute ecosystem constrains the UK’s potential and threatens its standing as an international leader in science and technology. Low investment in domestic compute capability may have already negatively impacted UK research: a consortium including UK researchers has not won the Gordon Bell Prize — an annual award for outstanding achievement in compute — since 2011, unlike researchers from the US, China, Japan, Switzerland, France and Germany.

Case study: 2022 Gordon Bell Prize winners

A team of French, Japanese and American institutions won the 2022 Gordon Bell Prize for its simulation code, WarpX. The code enables high-speed, high-fidelity kinetic plasma simulations, with the potential of powering advances in nuclear fusion and astrophysics. The code is optimised for the Frontier (US), Summit (US), Fugaku (Japan) and Perlmutter (US) supercomputers, which have different architectures and are all in the top 10 of the November 2022 Top500 list.

Without further investment in domestic compute capability, the UK’s position will continue to decline as other countries press ahead with plans to bolster their compute infrastructure. This will affect the UK’s ability to remain at the forefront of global innovation and secure its status as a Science and Technology Superpower.

2.4 International compute policies

Internationally, the development of domestic sovereign capabilities and of a strong compute ecosystem has been a result of targeted government interventions and long-term planning. Leading countries in compute have adopted clear, strategic measures to ensure an adequate supply, facilitate access to compute, increase uptake among their domestic users and stimulate their domestic supply chains and R&D. The strategic approaches and long-term planning of global compute leaders are driven by the recognition that private investment in new computing technologies is limited by the extent of the cost, risk and technological change involved. Without public intervention, the provision of compute would likely be insufficient in terms of high-end capability, inefficient in terms of capacity allocation, unequal in terms of access and ineffective in stimulating innovation across the whole economy.

International best practices

Countries have adopted a number of approaches to compute designed to meet their particular needs. Some interventions are common to countries that have been successful in creating robust compute ecosystems.

These include:

- A long-term strategy, updated on a rolling basis, that underpins and guides planning and investment around compute. Rolling updates are crucial to ensure strategies remain up to date and include the latest technological developments. Investment plans are also updated on a rolling basis, as they are informed by long-term strategies.

- Having a strong coordinating entity to enable and facilitate access to national systems. National coordination bodies are usually arm’s-length bodies or government-sponsored networks of research institutions. They are responsible for facilitating user access to systems, promoting shared investment plans across national facilities, and providing training.

- An incremental approach to investment, that grows and adapts to reflect changing needs. This stems from having a long-term strategic vision and often includes clear plans around investment into next generation hardware, such as exascale, alongside the necessary supporting infrastructure.

- A recognition of the importance of public investment in different infrastructure tiers – from flagship systems (Tier 0) to regional infrastructure (Tier 2). This ensures applications can be scaled up incrementally, allowing innovation to trickle up the compute ecosystem.

- Investment in heterogeneous architectures, as these represent the current direction of technological development. Several countries have invested in heterogeneous systems due to the convergence between AI and more traditional compute applications.

- Investment in hardware is coupled with investment in software development, applications and skills to support the community of users that will access new systems.

- Enabling greater uptake of compute by lowering barriers to access. This includes providing cloud access to compute resources, providing targeted support for users and fostering industry’s compute uptake through ad hoc initiatives.

- Adopting effective procurement models that lower costs, mitigate technological risks, and take into account innovative approaches for future upgrades. Joint procurement — either at a national level (among different public facilities/national laboratories) or at an international level (in partnerships with other countries) — can deliver economic as well as technological advantages, particularly when investing in multiple systems with different architectures.

- Investment in supply chains and the wider domestic compute R&D to strengthen the domestic compute industry and develop new technologies.

The panel is of the view that the UK should aim to create a strong and vibrant compute ecosystem by implementing all the above measures. This would help the UK reduce its lag behind other countries, and be a credible international partner at the forefront of digital innovation. If the UK were to adopt only some of these best practices — for example, only improving its long-term vision and coordination — it would become more competitive internationally, but would fall short of becoming a global leader in compute.

Case study: USA

The US have adopted a holistic, strategic approach to compute – spanning heterogeneous systems, data and networking requirements – and have developed a plan containing clear, actionable measures. The National Science and Technology Council Subcommittee on Future Advanced Computing Ecosystems provides strategic direction and ensures coordination between Federal agencies. The Department of Energy (DOE) and the National Science Foundation (NSF) coordinate access to and use of compute for governmental and academic research respectively. The Department of Defense also plays a role, with a military and defence focus.

Forward-looking investments, such as the Exascale Computing Initiative, are fulfilling the US’s strategic planning. The US have also adopted a joint procurement approach, which has seen DOE’s laboratories jointly procuring systems. As they look to develop more advanced capabilities, the US are exploring the possibility of moving from monolithic acquisitions toward a model based on modular upgrades. Future systems will be part of an Advanced Computing Ecosystem and integrated with other DOE facilities.

The US have implemented initiatives to facilitate access to compute, such as the STRIDES initiative (which supports the use of cloud for biomedical research) and the NSF’s CloudBank (which helps the computer science community access and use public clouds for research and education). The National AI Research Resource, currently in development, aims to leverage a combination of HPC and cloud computing to provide AI researchers with access to compute resources and data. The US also have initiatives open to industry, such as the DOE’s INCITE and HPC for Energy Innovation (HPC4EI) programmes (which offer access to compute and technical support for projects aimed at reducing the environmental impact of the manufacturing, materials and energy sectors).

The US invests in compute as a sovereign capability through investment in the full supply chain, starting from semiconductors — a critical component of compute systems. This includes the allocation of $52 billion to maintain technological advantage in the semiconductor sector and build domestic capabilities.

2.5 UK policy implications

The UK lags behind other advanced economies in compute. To secure the UK’s status as a Science and Technology Superpower, action is needed to match international investment in compute and further capitalise on the UK’s world-class research and innovation capability. Only by having a strong domestic compute ecosystem can the UK be seen as an influential player internationally and project its global power as a science and technology leader.

Lessons from those at the forefront of compute suggest that this requires government intervention to provide strategic leadership, ensure sovereign compute capability, and create a vibrant ecosystem. The UK can learn from the approach of others and carry out interventions that match the characteristics of its compute landscape. These points form the basis of this review’s recommendations. The following chapters will explore how the UK can design an ecosystem that meets its domestic requirements, satisfies the needs of its users and improves compute uptake.

3. The demand for compute in the UK

Key findings

- The UK’s compute ecosystem needs to reflect the variety of users, both existing and emerging, and their needs. It needs to ensure a broad offering to support prosperity and growth across the UK.

- For many academic users and researchers, and other pioneers of compute, the current provision of compute is not sufficient. This is limiting the UK’s scientific capability and inhibiting scientific breakthroughs.

- Many more could benefit from using compute, particularly SMEs. Increased use of compute can boost economic growth, but more support is needed to meet user needs and help new users to navigate this landscape.

3.1 Introduction

Building on the findings of the GO-Science report, this chapter assesses the needs of compute users and the challenges they face in accessing and using compute.

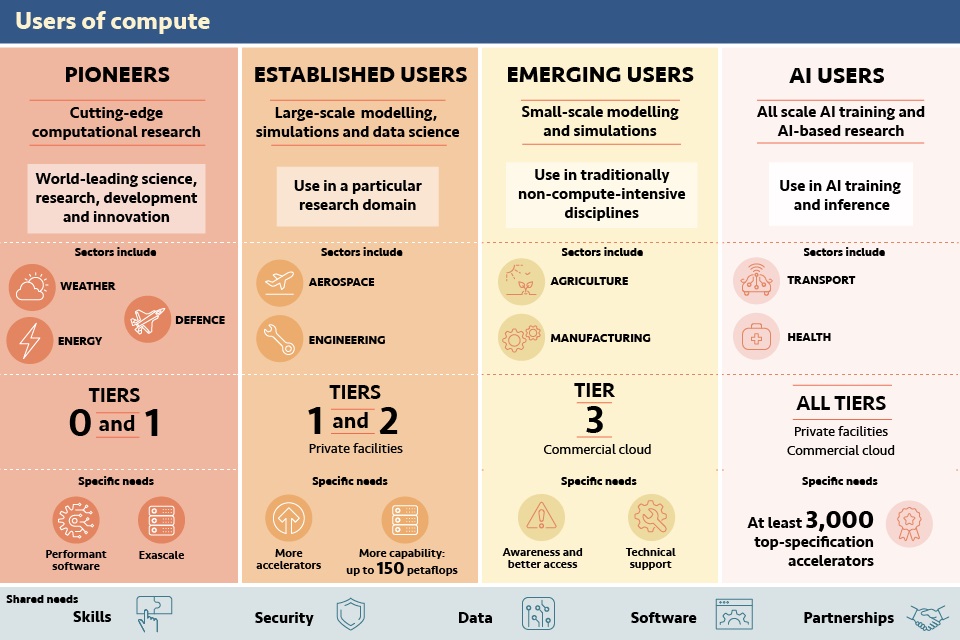

There are different types of compute users and many ways to categorise them. This review has considered three main groups — academia, industry and the public sector — as well as users’ different maturity levels within each of these groups. The difference between traditional compute users and the growing community of AI users, who have specific requirements, needs to be recognised.

To distinguish between the maturity of users, this chapter has adopted a categorisation similar to that used by UKRI:

Pioneers are at the cutting-edge of computational research and rely on the most powerful systems. They are a small proportion of users, but demand resources of the highest specifications.

Established users typically use compute in a particular research domain and their technical requirements vary depending on the application.

Emerging users of compute include research disciplines and businesses that traditionally have not been compute-intensive, such as from the life sciences, digital humanities and social sciences.

The compute required for AI is distinct from that of more traditional uses. Traditional compute has historically used general purpose CPUs, whilst AI training and inference can be done at a much lower precision and uses specialised hardware accelerators such as graphics processing units (GPUs). Accelerators are orders of magnitude faster and more energy efficient than CPUs for AI-related tasks.

Understanding the diversity of requirements is essential to assess the suitability of compute provision and identify where support is needed, both in the short and longer term. This information will be outlined throughout this chapter and is summarised in Figure 3A. It will be key to ensure any future investment in compute infrastructure meets users’ needs.

Figure 3A: Summary of the type of compute, applications and delivery of compute resources by user category

| Users | Pioneers | Established Users | Emerging Users | AI Users |
|---|---|---|---|---|
| Type of compute | Cutting-edge computational research | Large scale modelling, simulations and data science | Small scale modelling and simulations | AI training and AI-based research |
| Use | World-leading science, research, development and innovation. Examples: weather | Use in a particular research domain. Examples: aerospace | Use in traditionally non-compute-intensive disciplines. Examples: agriculture | Use in AI training and inference. Examples: autonomous vehicles |
| Tiers | All tiers | Tiers 1 and 2; private facilities | Tier 3; commercial cloud | All tiers; commercial cloud |
| Specific needs | Powerful systems; 1 exaflop | More accelerators; more capability; up to 150 petaflops | Awareness; lower costs and technical support | At least 3000 top-spec accelerators |
| Shared needs (common to all users) | Skills; security; data; software; partnerships | | | |

3.2 Compute for academic users, research and scientific discovery

Academic and research communities use compute across many disciplines. Academic users present varied levels of maturity and include pioneers, established users and emerging users. As compute infrastructure underpins the research and innovation ecosystem, a wide range of operational and access models exist for public facilities. The majority of researchers rely on on-premise compute which is free at the point of use.

UKRI’s science case for supercomputing finds that a factor of 10 to 100 increase in UK computing power is needed if UK researchers are to deliver their immediate science goals. Compute infrastructure is essential to the competitiveness of the UK’s academic landscape and, without appropriate levels of access, the UK’s ability to attract international talent will be hindered.

Many universities partner with UKRI to provide access to Tier 2 systems. Academic users often use more than one facility. To access national level compute resources, academics typically submit an application that will be reviewed to assess the technical feasibility and the merit of the proposed research or project.[footnote 22] Currently this model prioritises excellent science rather than mission-led activity. Research centres and universities also gain access to compute through shared procurement frameworks and individual arrangements with commercial cloud providers.

Pioneers and established academic users

As set out in Chapter 1, compute is critical for world-leading science, research, development and innovation. Academic users of all maturities face substantial challenges from the lack of capacity in national compute facilities, resulting in significant unmet demand across all research areas. This is particularly true for those operating at the limits of compute capability. Academic users often use more than one facility and so interoperability is also a factor. As explored in Chapter 4, the UK’s compute ecosystem is fragmented and that limits researchers’ access to compute resources and ability to collaborate.

Emerging academic users

Those with less experience using compute face challenges around how to find compute resources suitable for their needs and how to access them. This is due to limited signposting of the resources offered by different public compute facilities. Emerging users also face challenges in terms of having the correct skills needed to use compute effectively and the access to technical support.

AI researchers and accelerator-based compute

Accelerator-based compute is essential to AI breakthroughs and enables the creation of general-purpose technologies. Access to compute allows researchers to conduct small-scale exploratory AI research and larger teams to mature their models. Compute is critical to the most important advances at the frontier of the field where massive models are trained on huge datasets using thousands of accelerators concurrently for weeks or even months. Research indicates that the amount of compute used to train frontier AI models is currently doubling every 5-6 months.
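To illustrate what a doubling time of 5-6 months implies, the short sketch below converts a doubling time into an annual growth factor. It assumes steady exponential growth, which is a simplification made for illustration rather than a finding of the review, and the function name is hypothetical.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Return the factor by which a quantity grows over 12 months.

    Assumption (illustrative only): growth is steadily exponential,
    so the quantity doubles once every `doubling_months` months.
    """
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    return 2 ** (12 / doubling_months)


if __name__ == "__main__":
    # The 5-6 month range is the doubling time quoted in the text above.
    for months in (5, 6):
        factor = annual_growth_factor(months)
        print(f"Doubling every {months} months: about {factor:.1f}x per year")
```

Under this assumption, a doubling time of 6 months corresponds to a fourfold increase in training compute each year, and a doubling time of 5 months to an increase of slightly more than fivefold.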

Case study: Deep generative modelling of the human brain for healthcare[footnote 23]

Contemporary brain scanners produce finely detailed images, but the tools used to analyse this imaging data have limitations. The gap between the volume of available data and the depth of analysis has direct implications for the quality of care doctors are able to provide patients with neurological diseases, which in turn can lead to years of productive life being lost.

Researchers at UCL and KCL are using AI techniques to tackle this challenge by developing a suite of ‘deep generative models’ which are trained on large-scale brain imaging data. Wherever a disease is associated with characteristic changes on brain imaging, these models enable doctors to identify the pathological component with a high level of precision. Such models therefore have the power to revolutionise neurological care and transform the value doctors can draw from brain imaging in healthcare.

Building deep generative models requires HPC with specific capabilities, such as large clusters of high-memory accelerators with high-bandwidth interconnect. The value of the approach cannot be demonstrated at smaller, prototype scales. Public compute was not able to support this research, so researchers procured local compute clusters of dozens of accelerators and relied on free access to the commercial Cambridge-1 supercomputer.

UK AI researchers face significant challenges in obtaining the compute they need. At present, grant money often supports procurement of small systems for local use. The growing ‘compute divide’ between academia and industry is a global phenomenon. Researchers indicate that the relative lack of accelerator compute in the UK makes it particularly challenging to hire and retain talent within academia, in comparison with other countries and companies. Some researchers rely on international or industrial partnerships to pursue their work, leading to a loss of research independence. This also has implications for oversight and safety, and areas of research that have less direct routes to commercialisation. Without better access to more compute, breakthroughs may be prevented from diffusing throughout the economy via spinouts and start-ups.

In addition to their need for accelerators, AI researchers have particular requirements for data access. Large, open access datasets have been catalysts for significant scientific progress in AI. It is common for independent researchers, funded by private donations and start-up sponsorship, to collectively create these datasets. This type of data and AI research should be complementary to non-commercial, fully transparent research. AI researchers need to interrogate the underlying data to understand how training sets influence trained model behaviours.

3.3 Compute for business users

Use of compute is growing in many sectors of the economy. This section outlines the needs of pioneers and established business users (both large and small businesses) and those of emerging business users, AI businesses and AI adopters.

Pioneers and established business users

There are numerous business applications for compute. Access to compute enables businesses to innovate more effectively in new products and services and to improve productivity. Each of these benefits can help businesses gain a competitive edge by increasing efficiency and operational effectiveness.

Business users access compute in a variety of ways — via their own on-premise systems, commercial cloud or public-sector facilities. Having easy and sufficient access to compute is key. Some businesses, mostly larger enterprises, use in-house systems for their regular workload and use ‘cloud bursting’ to cover spikes in demand. Businesses can also partner with universities or national facilities, either through research collaborations or paying for system time. They generally do this when they are not able to access or fund internal resources, or want to collaborate or de-risk innovation. The majority of businesses that use compute do not currently require the most capable systems (i.e. exascale). Some pioneers may make use of exascale systems over the next decade, especially in partnerships with academia.

Some public facilities support business access to compute through the provision of expertise, compute resources and software. Unilever, for example, explained that access to compute at the Hartree Centre enables them to maintain competitive advantage by unlocking breakthrough innovations faster than competitors.[footnote 24]

Case study: Compute to boost skin’s natural defences

To help the skin protect itself, there is a fine balance of micro-organisms living in perfect harmony, known as a microbiome. The skin also releases proteins (antimicrobial peptides, or AMPs) which protect against harmful bacteria and viruses. In 2022, the Hartree Centre and IBM Research worked with Unilever to find a way to increase the skin’s natural line of defence to protect against infections or other issues, such as body odour, dandruff and eczema.

Unilever knew that niacinamide, an active form of vitamin B3 naturally found in your skin and body, could enhance the level of AMPs in laboratory models. The team used compute facilities at the Hartree Centre for advanced simulations to visualise, with atomic precision, how the niacinamide molecules interact with the proteins and the bacterial membrane. The extra compute power enables the simulation of much more complex systems by using more sophisticated and realistic modelling to represent bacterial membranes. This will help Unilever to develop new skin hygiene products and cosmetics, as well as providing the foundation for new drug development.

Other examples of private-public partnerships include GlaxoSmithKline with Oxford University and Rolls-Royce with EPCC. However, with the exception of the Hartree Centre, there is generally limited capacity available for businesses in public compute facilities.

Case study: Compute to accelerate the discovery of drugs

A 5-year collaboration between Oxford University and GlaxoSmithKline (GSK) was agreed in 2021 to establish the Oxford-GSK Institute of Molecular and Computational Medicine. Through the use of datasets and machine learning, the Institute aims to uncover new indicators and predictors of disease and use them to accelerate the most promising areas for drug discovery. Together they will be using patient and molecular information and state-of-the-art platforms to pinpoint the GSK targets that are most likely to succeed and be developed into safe, effective, disease mechanism-based medicines.

Genetic evidence has already been shown to double success rates in clinical studies of new treatments. The digitisation of human biology alongside powerful compute has the potential to improve drug discovery by more closely linking genes to patients.

Simulation and modelling enabled by compute have transformed the way Rolls-Royce designs and engineers its products. These simulations are not only key to innovation, but can also save the company millions of pounds in design, development and certification.

As the company’s in-house compute is prioritised for production design, access to public systems is crucial for research and development. The ‘Strategic Partnership in Computational Science for Advanced Simulation and Modelling of Virtual Systems’ (ASiMoV) seeks to develop the world’s first high-fidelity simulation of a complete gas-turbine engine in operation. Initiated in 2018, the project is jointly led by EPCC and Rolls-Royce, in collaboration with the Universities of Bristol, Cambridge, Oxford and Warwick, as well as two SMEs, CFMS and Zenotech.

Working towards the virtual certification of aircraft engines by 2030, ASiMoV will require a new high-resolution physical model of an entire engine and of the airflow and turbulence during its operation. This requires a trillion-cell model and simulated operation on millions of computing cores. ASiMoV demands the next generation of compute (exascale) to process the unprecedented amount of data robustly, securely and affordably.

Estimated cost savings are measured in tens of millions of pounds per engine programme. For Rolls-Royce, virtual certification could bring a major transformation requiring unprecedented trust, from both the company and certifiers worldwide, in ‘virtual twin’ simulation and fundamental changes to research and development.

Established business users report challenges in system performance, access to technical skills and an increasing demand for flexible and scalable compute architectures. Some stakeholders engaged by the review have reported that commercial cloud can, in some instances, result in lock-in effects and high data extraction costs, making it difficult for users to move between services. Furthermore, the use of public compute often includes a requirement to publish results. This can act as a barrier to some businesses adopting compute, particularly where intellectual property protection is a major concern.[footnote 26] Some businesses also noted that the costs of data management and governance can be an inhibitor for those who might want to use more substantial computing resources.

Lastly, the review considers those researching and developing quantum computing and its applications among the pioneer users of compute. Although the most impactful applications of quantum computing are still being identified, early examples include drug development and logistics optimisation.[footnote 27] The integration of classical compute and quantum systems will be critical as quantum computing develops, with applications relying on the complementary strengths of both systems to produce optimal results. Further assessment is needed into the specific compute requirements of the quantum sector so this nascent technology can thrive. The publication of the government’s National Quantum Strategy will set the government’s long-term vision for accelerating both quantum computing and other quantum technologies, supporting businesses to equip themselves for the future.

Emerging business users

Smaller businesses often have lower levels of technology adoption and could significantly benefit from compute uptake. With SMEs accounting for the majority of the UK business population, encouraging greater adoption of compute could result in significant economic benefits. Whilst businesses that already use compute often have similar requirements irrespective of their size, emerging business users have different needs.

Public facilities can play a key role in supporting emerging business users. They can support new users to access compute and software that were previously available only to academia and large businesses; provide domain experts to develop business models; build the workflows required; and train staff. Some facilities provide these services for free, removing cost barriers.

The Hartree Centre

The Hartree Centre is a high performance computing, data analytics, artificial intelligence (AI) and hybrid quantum/classical computing research facility created specifically to focus on increasing adoption of advanced compute technologies in UK businesses.

Sectoral teams made up of digital experts and business professionals work with companies to help upskill staff and apply practical digital solutions to individual and industry-wide challenges for enhanced productivity, innovation and economic growth. In its first four years of operation (2013-2017) the centre generated £34.6 million net impact GVA for the UK economy.

In 2021, the Hartree National Centre for Digital Innovation (HNCDI) programme was established to further support businesses and public-sector organisations to advance the pace of discovery through the use of HPC, AI and quantum computing technologies.

Case study: ‘Platform as a service’ HPC for SMEs[footnote 28]

QED Naval is an SME which specialises in supporting design and deployment of ‘green’ electricity generation installations that harness wind, tidal and wave energy. Sophisticated simulations are a key tool and typically have to be completed within challenging budgets and timeframes.

As part of its Subhub project, aiming to cut the cost of deploying tidal turbines, QED Naval needed to carry out simulations using computational fluid dynamics (CFD) software. In expanding its services, QED Naval decided to trial enCORE, the ‘platform as a service’ HPC offering delivered on behalf of the Hartree Centre by channel partner OCF.

Simulation runtimes were 4.2 times faster than those achievable in-house, enabling QED to increase the size of the models they used and run projects more quickly and efficiently, without increasing their overheads. enCORE has now become a key component of the engineering simulation services provided by the company.

Case study: Enhancing data science skills for SMEs

ORCHA is an SME that specialises in evaluating healthcare apps. What a ‘good’ health app looks like varies hugely, so ORCHA aims to help health professionals identify and prescribe the best app for their patients, enabling better monitoring and self-management of health conditions.

Through the LCR 4.0 project, ORCHA worked with data scientists at the Hartree Centre to explore new data-driven techniques that could speed up their evaluation process and develop a more sustainable business model.