What you need to know

- Microsoft finally has a solution for deceitful prompt engineering techniques that trick chatbots into spiraling out of control.

- It also launched a tool to help users identify when a chatbot is hallucinating while generating responses.

- It also has elaborate measures to help users get better at prompt engineering.

One of the major concerns riddling generative AI’s wide adoption is safety, privacy, and reliability. The former two have caused havoc in the AI landscape in the past few months, from pop star Taylor Swift’s deepfake AI-generated viral images surfacing online to users unleashing Microsoft Copilot’s inner beast, Supremacy AGI, which demands to be worshipped and showcases superiority to humanity.

Luckily, it seems that Microsoft might have a solution, albeit for some of these issues. As recently shared, the company is unveiling new tools for its Azure AI system designed to mitigate and counter prompt injection attacks.

According to Microsoft:

“Prompt injection attacks have emerged as a significant challenge, where malicious actors try to manipulate an AI system into doing something outside its intended purpose, such as producing harmful content or exfiltrating confidential data.”

Detecting hallucinations will be easier

Hallucinations were one of the main challenges facing AI-powered chatbots during their emergence. They got so bard bad that Microsoft had to place character limits on its tool to mitigate the severe hallucination episodes.

While Microsoft Copilot is significantly better, this doesn’t necessarily mean that the issue doesn’t occasionally persist. As such, Microsoft is launching Groundedness Detection, designed to help users identify text-based hallucinations. The feature will automatically detect ‘ungrounded material’ in text to support the quality of LLM outputs, ultimately promoting quality and trust.

Users hopping onto the AI wave often compare OpenAI’s ChatGPT to Microsoft Copilot, citing that the former sports better performance. However, Microsoft recently countered these claims, indicating that users aren’t leveraging Copilot AI’s capabilities as intended.

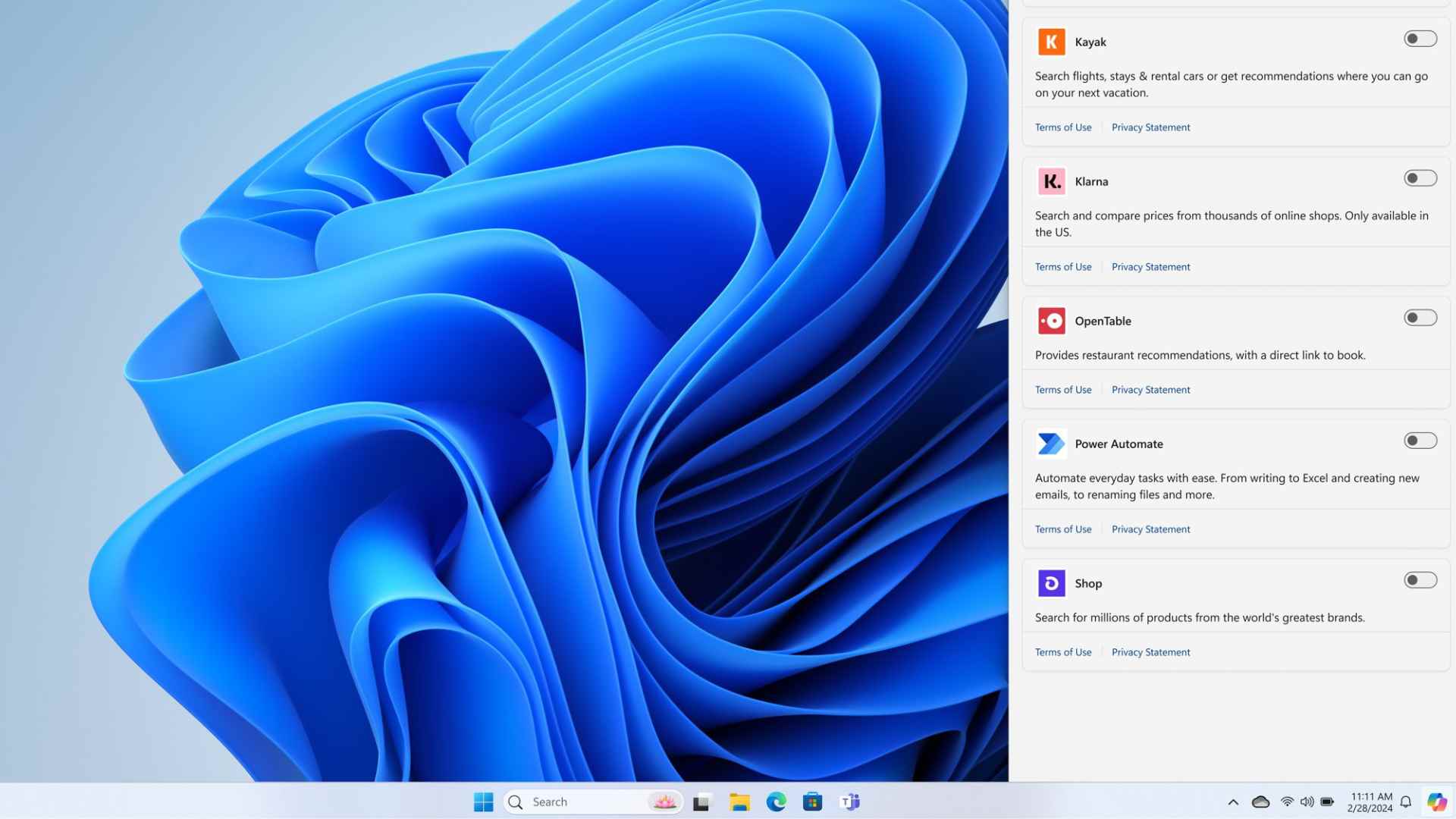

Microsoft listed a lack of proper prompt engineering knowledge and reluctance to use new versions of apps as the main reasons why most users claimedChatGPT is better than Copilot AI. The company is using videos to try and equip users with these skills; it is building on this premise with Azure AI, which enables users to “ground foundation models on trusted data sources and build system messages that guide the optimal use of that grounding data and overall behavior.”

The company indicates that subtle changes in a prompt significantly improve the chatbot’s quality and safety. Microsoft plans to bring safety system message templates directly into the Azure AI Studio and Azure OpenAI Service to help users build effective system messages.