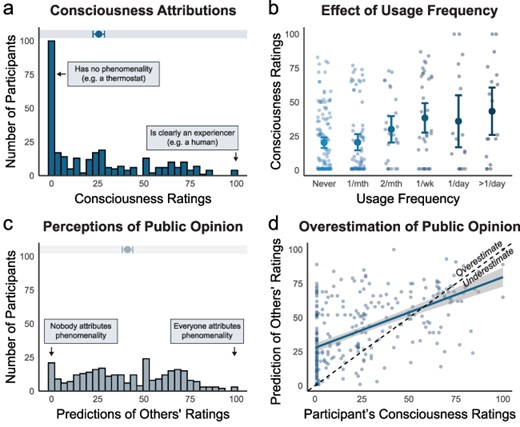

When you interact with ChatGPT and other conversational generative AI tools, they process your input through algorithms to compose a response that can feel like it came from a fellow sentient being, despite the reality of how large language models (LLMs) function. Two-thirds of those surveyed for a study by the University of Waterloo nonetheless believe AI chatbots to be conscious in some form. In effect, the chatbots passed a kind of Turing Test by convincing those respondents that an AI is equivalent to a human in consciousness.

Generative AI, as embodied by OpenAI’s work on ChatGPT, has progressed by leaps and bounds in recent years. The company and its rivals often talk about a vision for artificial general intelligence (AGI) with human-like intelligence. OpenAI even has a new scale to measure how close its models are to achieving AGI. But even the most optimistic experts don’t suggest that AGI systems will be self-aware or capable of true emotions. Still, of the 300 people participating in the study, 67% said they believed ChatGPT could reason, feel, and be aware of its existence in some way.

There was also a notable correlation between how often someone uses AI tools and how likely they are to perceive consciousness within them. That’s a testament to how good ChatGPT is at mimicking humans, but it doesn’t mean the AI has awakened. The conversational approach of ChatGPT likely makes it seem more human, even though no AI model works like a human brain at all. And while OpenAI is working on an AI model called Strawberry that is capable of doing research autonomously, that’s still different from an AI that is aware of what it is doing and why.

“While most experts deny that current AI could be conscious, our research shows that for most of the general public, AI consciousness is already a reality,” University of Waterloo professor of psychology and co-lead of the study Dr. Clara Colombatto explained. “These results demonstrate the power of language because a conversation alone can lead us to think that an agent that looks and works very differently from us can have a mind.”

Customer Disservice

The belief in AI consciousness could have major implications for how people interact with AI tools. On the positive side, it encourages manners and makes it easier to trust what the tools do, which could make them easier to integrate into daily life. But trust comes with risk, from overreliance on them for decision-making to, at the extreme end, emotional dependence on AI and fewer human interactions.

The researchers plan to look deeper into the specific factors that make people think AI has consciousness and what that means on an individual and societal level. That work will also include long-term studies of how those attitudes change over time and vary with cultural background. Understanding public perceptions of AI consciousness is crucial not only to developing AI products but also to the regulations and rules governing their use.

“Alongside emotions, consciousness is related to intellectual abilities that are essential for moral responsibility: the capacity to formulate plans, act intentionally, and have self-control are tenets of our ethical and legal systems,” Colombatto said. “These public attitudes should thus be a key consideration in designing and regulating AI for safe use, alongside expert consensus.”