Key Takeaways

- GameNGen uses AI to run DOOM without a traditional game engine, hinting at what DLSS could become beyond upscaling.

- NVIDIA envisions DLSS generating high-quality textures and deep NPCs, and improving texture compression ratios.

- AI-generated assets via DLSS could revolutionize game development, but there are hurdles to overcome.

The future of DLSS (Deep Learning Super Sampling) is on the brink of something transformative. What started as a way to boost game performance is now evolving, even breaking into the realm of what game engines do. What could DLSS do next—and how wild could its potential really be?

GameNGen: An Upgrade to Game Engines?

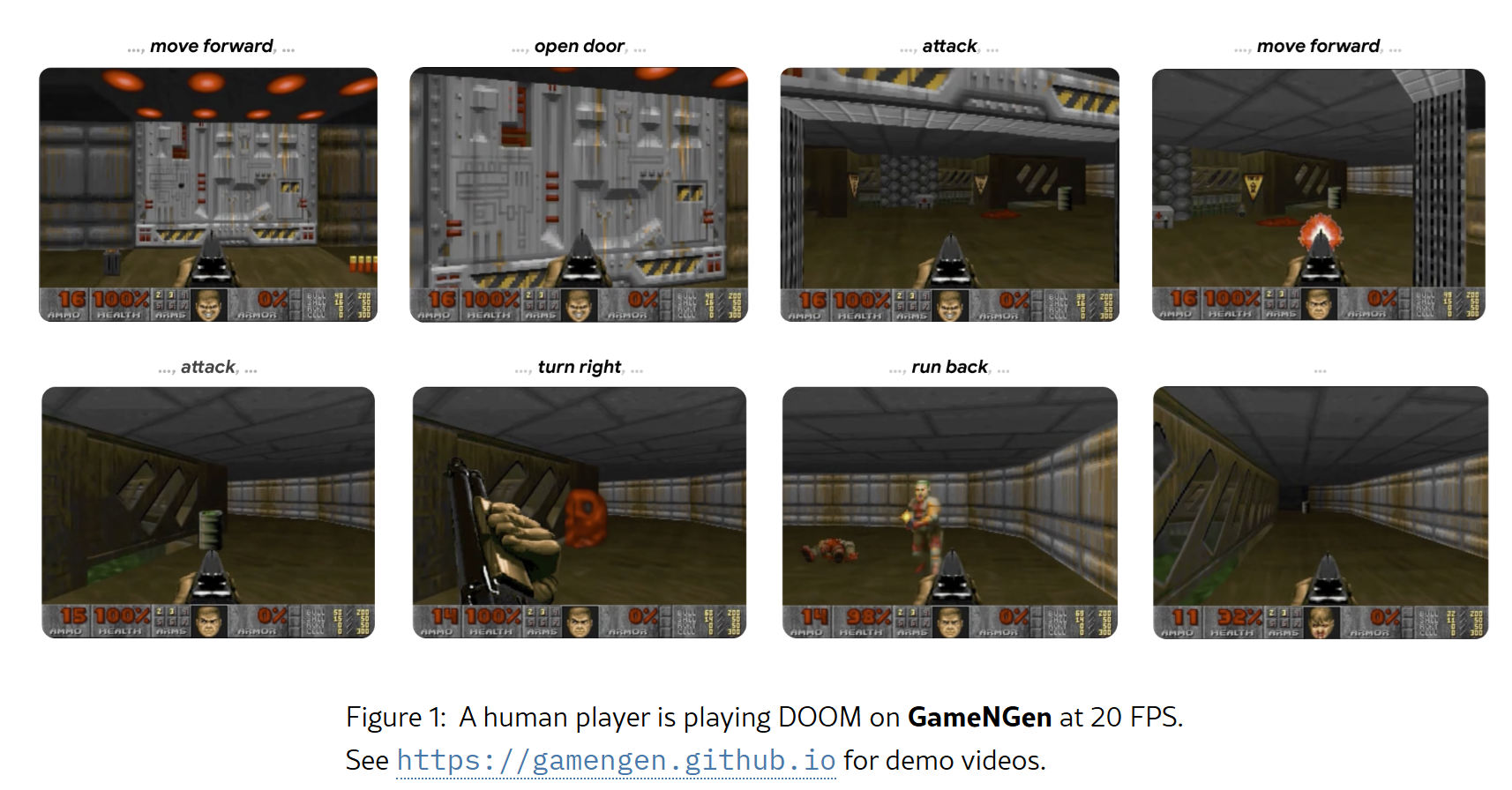

DOOM can run on some crazy things, but how about running without a game engine at all? Researchers from Google and Tel Aviv University have managed to run DOOM entirely on a neural network, with no traditional engine behind it. GameNGen uses AI to infer, from the previous frames and the player's input, what should appear next (including where enemies are likely to be), then generates each frame on the fly.

While it won’t win any gaming awards, it’s still an impressive feat. The game runs at a fairly steady 20 FPS on a single TPU, with few obvious graphical glitches. The researchers trained the system on over 900 million frames of DOOM gameplay data. Using this data, they trained a diffusion model to generate the next logical frame from the previous frames and the player's inputs, similar to how large language models predict the next word in a sentence.
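To make the idea concrete, here is a toy Python sketch of that autoregressive loop: each generated frame is fed back in as context for the next one, alongside the player's latest input. It is an illustration under loose assumptions, not GameNGen's actual implementation. `predict_next_frame` is a hypothetical stand-in for the trained diffusion model (it just shifts a one-row "frame" left or right), and `CONTEXT_LEN` is an arbitrary, hypothetical history length.

```python
from collections import deque
from typing import List

Frame = List[int]  # toy "frame": a single row of pixel brightness values

CONTEXT_LEN = 4  # hypothetical history length; real systems choose their own


def predict_next_frame(frames: List[Frame], actions: List[str]) -> Frame:
    """Hypothetical stand-in for a trained next-frame model.

    A real system would run a diffusion model conditioned on the recent
    frames and actions; this toy only looks at the latest frame and action.
    """
    last, action = frames[-1], actions[-1]
    if action == "left":
        return last[1:] + last[:1]  # shift the scene one pixel left (wraps around)
    if action == "right":
        return last[-1:] + last[:-1]  # shift the scene one pixel right (wraps around)
    return list(last)  # any other input: repeat the current frame


def run_game(initial_frame: Frame, player_inputs: List[str]) -> List[Frame]:
    """Generate one frame per player input, feeding each output back in."""
    frames = deque([initial_frame], maxlen=CONTEXT_LEN)  # keep only recent frames
    actions = deque(maxlen=CONTEXT_LEN)  # keep only recent actions
    shown: List[Frame] = []
    for action in player_inputs:
        actions.append(action)
        next_frame = predict_next_frame(list(frames), list(actions))
        frames.append(next_frame)  # this output becomes context for the next step
        shown.append(next_frame)
    return shown


if __name__ == "__main__":
    for frame in run_game([0, 0, 1, 0, 0], ["right", "right", "noop", "left"]):
        print(frame)
```

In a real system, the stand-in function would be a diffusion model producing a full image at each step; the bounded history window simply keeps the amount of context per step fixed, however long the session runs.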

While this is impressive in itself, it’s also fascinating to those of us following the development of DLSS. If GameNGen-style technology becomes more mainstream, we may actually see the kind of future NVIDIA CEO Jensen Huang described in his recent address.

What Does the Future of DLSS Look Like?

In that address, Huang laid out what he expects the future of DLSS to look like, and it resembles what GameNGen is already doing. In his vision, DLSS would go beyond being just an image upscaler and instead generate high-quality textures and objects in real time.

Huang also mentioned using DLSS to create non-player characters (NPCs). As anyone who knows a bit about game development can tell you, building deep NPCs with behavioral traits and dialogue is not easy. NVIDIA’s Avatar Cloud Engine (ACE) aims to offer a quick and easy way to create such NPCs without that overhead.

DLSS also aims to improve texture compression ratios using AI. Current texture compression manages about an 8:1 ratio, but NVIDIA hopes neural networks can double that to 16:1. Imagine loading high-quality textures in a fraction of the time it currently takes.
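A quick back-of-the-envelope calculation shows what doubling the ratio means in practice. The sketch below assumes a 4096x4096 texture stored uncompressed at 4 bytes per texel (RGBA8); the texture size, texel format, and helper names are illustrative assumptions, not figures from NVIDIA.

```python
MIB = 1024 * 1024  # bytes per mebibyte


def texture_bytes(width: int, height: int, bytes_per_texel: int = 4) -> int:
    """Uncompressed texture size; 4 bytes per texel assumes RGBA8."""
    return width * height * bytes_per_texel


def compressed_bytes(raw_bytes: int, ratio: int) -> int:
    """Size after compressing at a fixed ratio (e.g. 8 for 8:1)."""
    return raw_bytes // ratio


if __name__ == "__main__":
    raw = texture_bytes(4096, 4096)  # hypothetical 4K texture
    print(f"uncompressed: {raw / MIB:.0f} MiB")
    for ratio in (8, 16):
        print(f"{ratio}:1 -> {compressed_bytes(raw, ratio) / MIB:.0f} MiB")
```

Under these assumptions, going from 8:1 to 16:1 cuts this texture from 8 MiB to 4 MiB, halving the data that has to be stored and read. Actual load-time gains would also depend on decoding cost and storage speed.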

Huang’s announcement suggests that DLSS could transform how we play (and design) games at a fundamental level, rather than just improving performance. NVIDIA’s use of neural networks could make prototyping and developing AAA games much faster, with even better textures and more immersive worlds to explore.

How Would AI-Generated Assets Work?

Game engines are responsible for loading in-game assets and textures and managing physics within the game world, among other things. With DLSS-enabled neural networks, assets could instead be generated on the fly. There’s already precedent for generating 3D models from images or text, in tools such as 3D AI Studio, but the results aren’t perfect. NVIDIA intends its DLSS-based system to be more robust.

If DLSS reaches Huang’s desired level, we may see NVIDIA cards shipping with more tensor cores. Right now, buyers mostly look at CUDA core counts when comparing cards, but tensor cores may become just as important, if not more so, if DLSS turns into something closer to a game engine than an upscaling technology. Tensor cores are the hardware units that accelerate AI workloads (DLSS already runs on them), so they’d be essential for cards that use DLSS to generate assets and gameplay.

Game developers’ workflows would also change significantly. Instead of building every asset by hand during prototyping, developers could hand off the generation of NPCs and objects to a DLSS-enabled graphics card. In theory, this would shorten prototyping without sacrificing the quality and depth of the world.

It’s Not as Easy as It Seems

There’s a habit in the tech world of making AI seem far more sophisticated than it is. While the technology is impressive, it still has limitations, and it’s far from certain that DLSS can do everything Huang envisions. AI asset generation in particular has hiccups: generated assets often struggle to match the style of existing ones, even when given reference images and models. Having used a few of these tools in my own game development, I know how complicated that can get.

Developing a game is already a complex undertaking, and many current titles have been in development for years. Even if NVIDIA uses DLSS to deliver a new, efficient, and fast way of generating NPCs and objects, it will take time to filter down into development workflows. Additionally, AI-generated content can’t replace good old-fashioned human ingenuity when it comes to crafting deep stories and experiences.

Relying on DLSS may also create barriers for gamers with lower-end hardware. While that may benefit NVIDIA financially, game studios would risk alienating part of their player base, making it a hard sell. The technology is still in its early stages, and it has quite a few hurdles to overcome before it can become an industry standard.

What Does This Mean for Our Games?

If this technology were to become mainstream, games would change significantly. AI asset and texture creation would open up a whole new realm for procedural games and storytelling, offering more personalized experiences at a depth developers couldn’t previously achieve.

However, it also opens up a path to fully neural rendering. Future versions of DLSS, and tools like GameNGen, could approach this vision by creating scenes from minimal developer input. But would these games really give us the experience we want? Game designers would have their work cut out for them to make this technological revolution worth experiencing.

The Dawn of a New Age of Gaming?

Ultimately, the future of DLSS and AI-driven game tech is shaping up to be as exciting as it is groundbreaking. The possibilities are expanding fast, from real-time content creation to immersive, adaptive worlds.

Gamers can look forward to experiences that feel more alive than ever while developers gain powerful tools to bring their visions to life more effortlessly. As these advancements continue, we’re on the edge of a new era in gaming—one where AI and human creativity come together to redefine what’s possible on screen.