Key Takeaways

- Linux provides tools to manage large text files and volatile data streams.

- Use ‘less’ to scroll, search, and manage file content, or pipe output into it directly.

- Split large files using ‘split’, manage chunks efficiently, and use ‘head’ and ‘tail’ for selective viewing.

If you need to find information inside very large text files, Linux provides all the tools you need, straight out of the box. You can also use them on live text streams.

Information Overload

Most of the time, Linux is very close-lipped. You have to work on the assumption that no news is good news. If your last command didn’t raise an error message, you can assume everything went well.

At other times, Linux can swamp you with information. Before the introduction of systemd and journalctl, the tools and techniques we’re going to cover here would have been used to tame sprawling log files, but they can be applied to any file.

They can also be applied to streams of data and to the output of commands.

Using less

The less command lets you scroll forward and backward through a file a line at a time with Up Arrow and Down Arrow, or a screen at a time with Page Up and Page Down, or jump to the start or end of the file with Home and End.

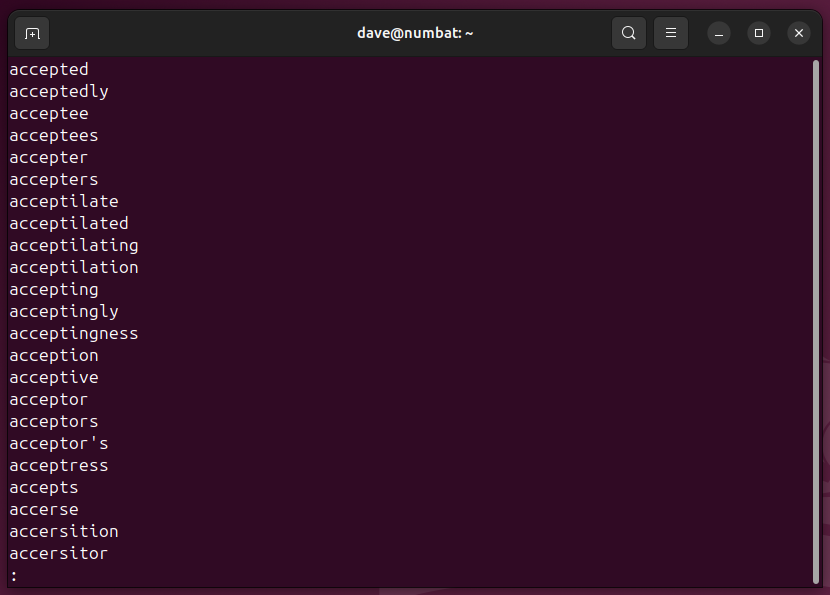

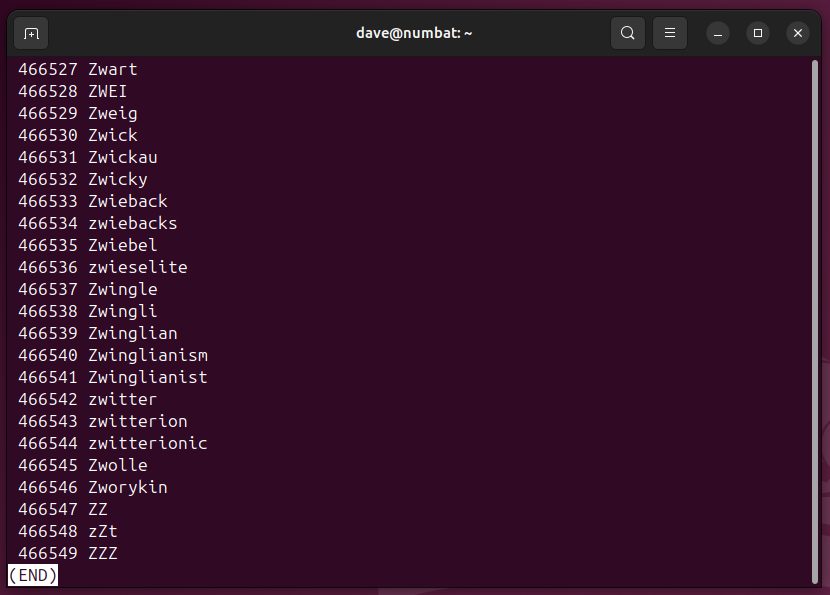

less words.txt

You can add line numbers with the -N (line numbers) command line option.

less -N words.txt

As you can see, there are hundreds of thousands of lines in this file, but less is still pretty nippy, even on this modestly-equipped virtual machine.

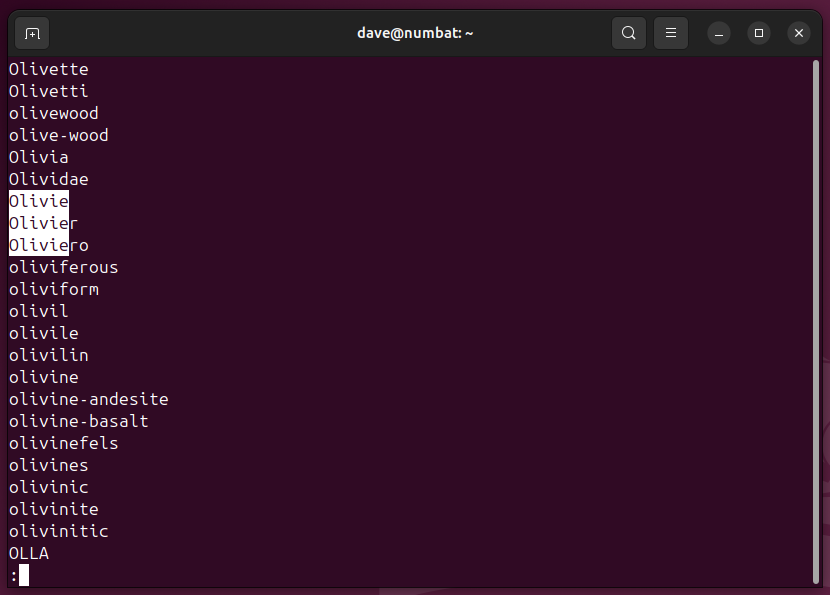

Usefully, less has a search function. Press the forward slash /, type your search clue, and press Enter. If there’s a match, less displays that section of the file, with the match highlighted.

You can jump forward from match to match by pressing n. To do the same in reverse, press N.

By using pipes, you can send the output from a command straight into less.

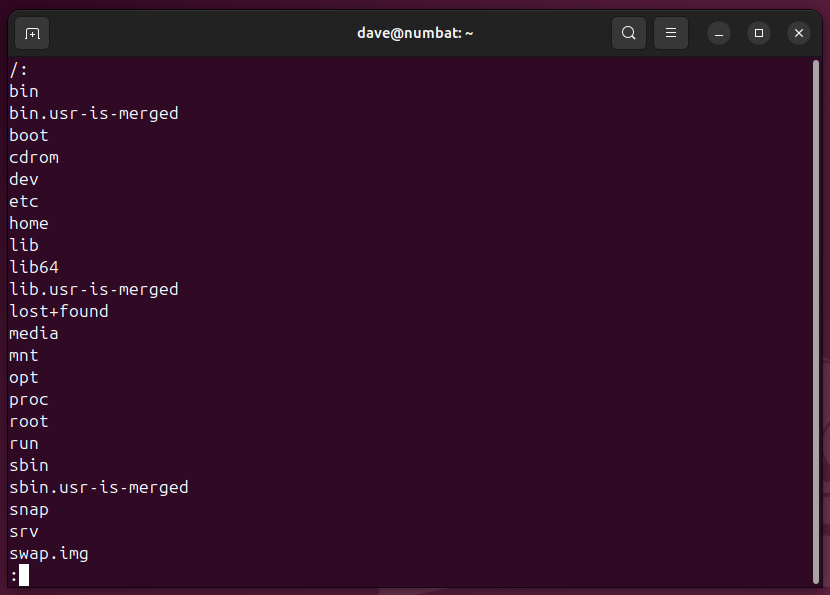

sudo ls -R / | less

Just as you can with a file, you can scroll forward and backward, and search for strings.

Redirecting Streams into Files

Piping the output of a command into less is great for one-off needs, but once you close down less, the output vanishes. If you’ll need to work with that output in the future, you should make a permanent copy of it and open the copy in less.

This is easily done by redirecting the output of the command into a file, and opening the file with less.

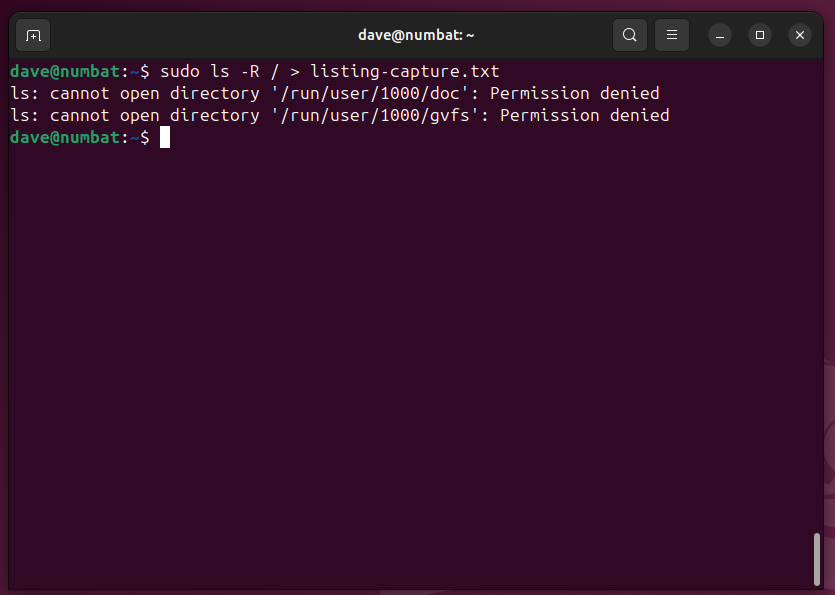

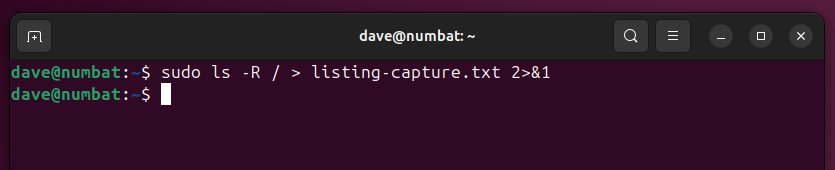

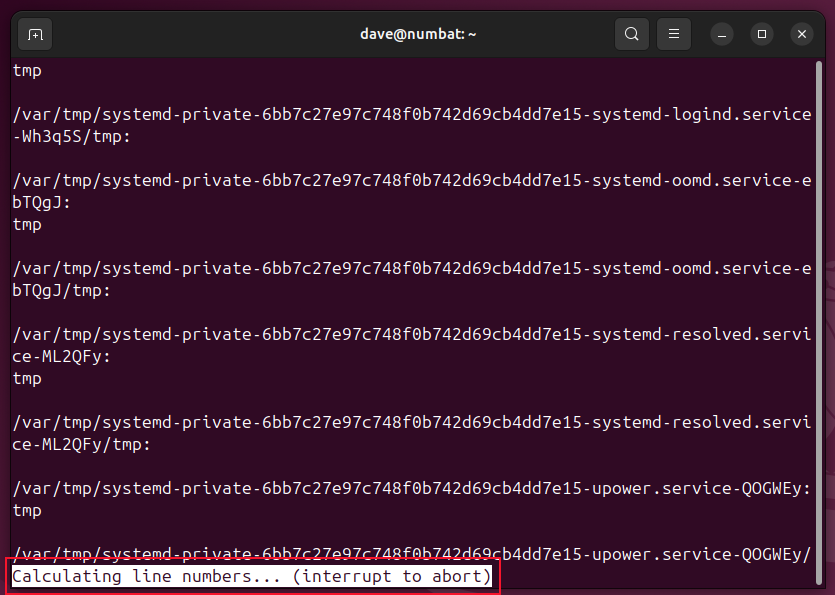

sudo ls -R / > listing-capture.txt

Note that warnings or errors are sent to the terminal window so that you can see them. If they were sent to the file, you might miss them. To read the file, we open it with less in the usual way.

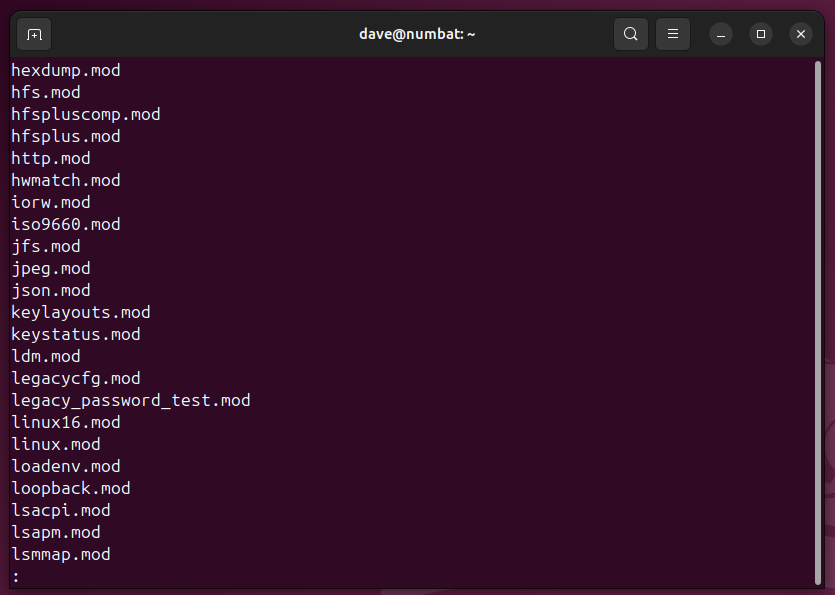

less listing-capture.txt

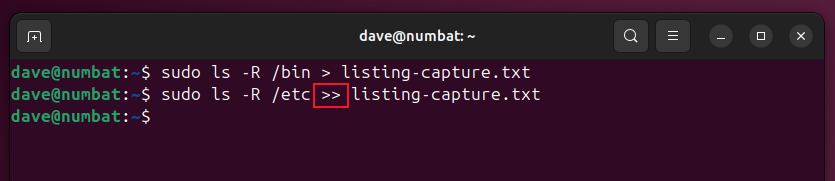

The > operator redirects the output to the named file, and creates the file afresh each time. If you want to capture two (or more) different sets of information in the same file, you need to use the >> operator to append the data to the existing file.
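The difference between the two operators is easy to see on a small scale. This is a minimal sketch that assumes a throwaway directory from mktemp; the file name capture-demo.txt is made up for illustration.

```shell
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
capture="$workdir/capture-demo.txt"   # hypothetical file name

echo "first run"  > "$capture"    # > creates the file
echo "second run" > "$capture"    # > again: the file is recreated, "first run" is gone
echo "third run" >> "$capture"    # >> appends to what is already there

cat "$capture"                    # shows "second run" then "third run"
line_count=$(wc -l < "$capture")
echo "lines in file: $line_count"

rm -r "$workdir"
```

Only two lines survive, because the second > replaced the file's contents before >> added to it.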

sudo ls -R /bin > listing-capture.txt

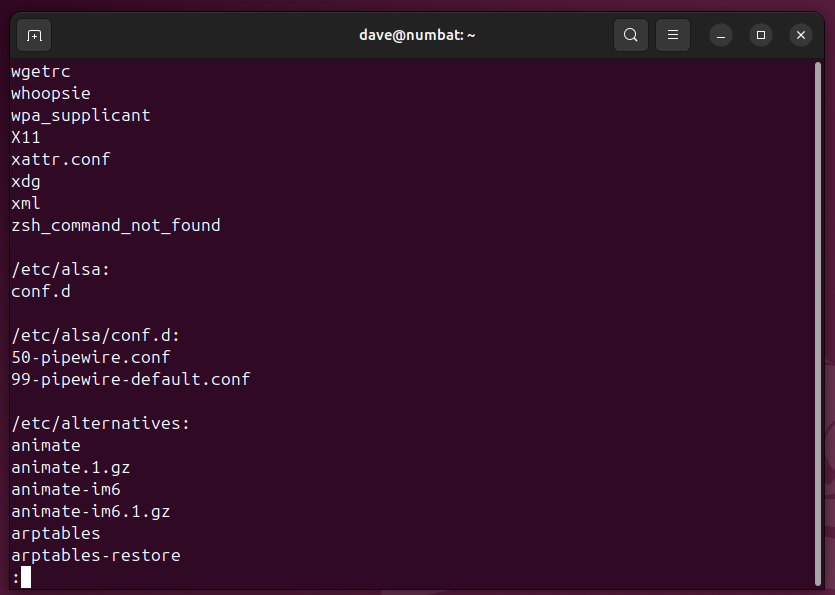

sudo ls -R /etc >> listing-capture.txt

Note the use of >> in the second command.

less listing-capture.txt

By capturing the output from a command, we’re actually capturing one of the three Linux streams. A command can accept input (stream 0, STDIN), generate output (stream 1, STDOUT), and can raise error messages (stream 2, STDERR).

We’ve been capturing stream 1, the standard output stream. If you want to capture error messages too, you’ll need to capture stream 2, the standard error stream, at the same time. We need to use this odd-looking construct 2>&1, which redirects stream 2 (STDERR) into stream 1 (STDOUT).

sudo ls -R / > listing-capture.txt 2>&1

You can search the file for strings such as warning, error, or other phrases you know appear in the error messages.

less listing-capture.txt
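To see what 2>&1 changes, it can help to provoke an error on purpose and count what lands where. The sketch below is an illustration only: it assumes a temporary directory from mktemp, and the path no-such-dir is deliberately nonexistent so that ls writes one message to STDERR.

```shell
workdir=$(mktemp -d)
touch "$workdir/real-file.txt"
missing="$workdir/no-such-dir"        # deliberately does not exist

# Stream 1 and stream 2 sent to separate files.
# ls exits non-zero because of the missing path; || true keeps the demo going.
ls "$workdir/real-file.txt" "$missing" > "$workdir/out.txt" 2> "$workdir/err.txt" || true

# Both streams in one file. Order matters: redirect stream 1 first, then 2>&1.
ls "$workdir/real-file.txt" "$missing" > "$workdir/both.txt" 2>&1 || true

out_lines=$(wc -l < "$workdir/out.txt")
err_lines=$(wc -l < "$workdir/err.txt")
both_lines=$(wc -l < "$workdir/both.txt")
echo "stdout only: $out_lines, stderr only: $err_lines, combined: $both_lines"

rm -r "$workdir"
```

If 2>&1 were written before the > redirection, stream 2 would be pointed at the terminal rather than the file, so the error message would not be captured.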

Splitting Files into Smaller Chunks

If your files are so large that less slows down and becomes laggy, you can split the original file into more manageable chunks.

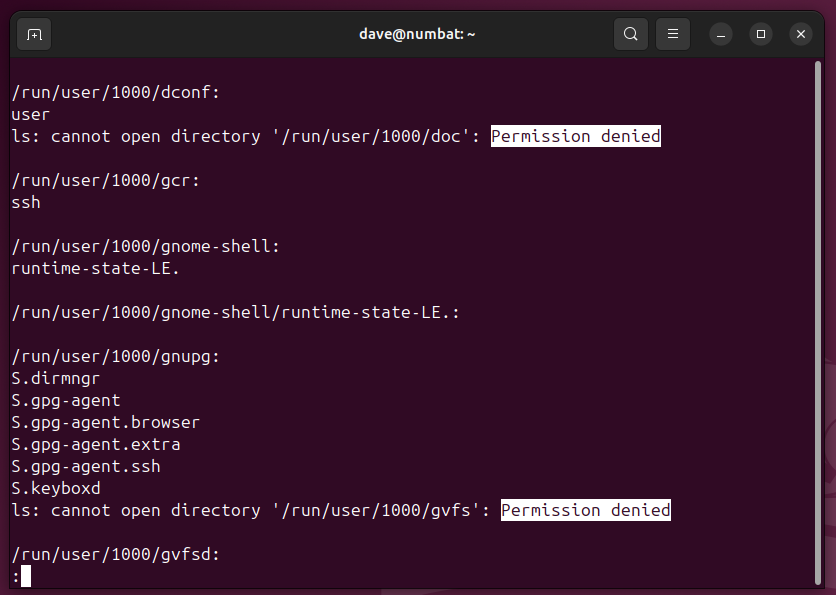

I’ve got a file called big-file.txt. It has 132.8 million lines of text in it, and it’s over 2GB in size.

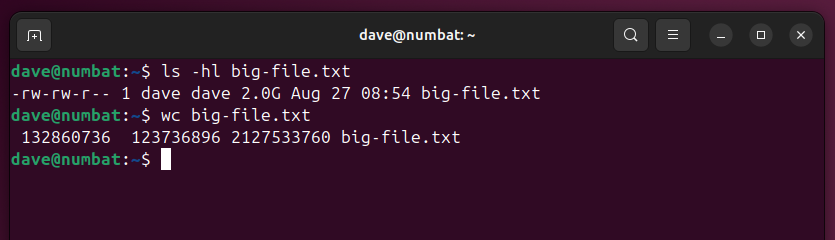

Opening it in less works well enough, unless you try to jump straight to the start or end of the file, or try to do reverse searches from the end of the file.

It works, but slowly.

That’s why we have the split command. As its name suggests, it splits files into smaller files, preserving the original file.

You can split a file into a specified number of smaller files, or you can specify the size you want the split files to be, and split works out how many files to make. But these strategies will leave you with lines and even words split across two files.

Because we’re working with text, and split lines and chopped words will be problematic, it makes sense to specify the split by number of lines. We used the wc command earlier, so we know how many lines we have.

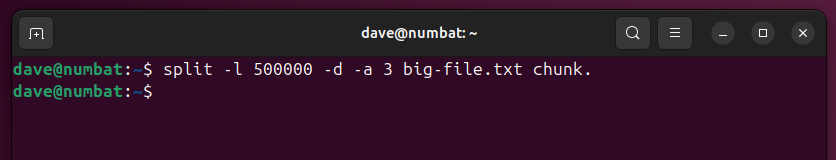

I used the -l (lines) option and specified 500,000 lines. I also used the -d (digits) option to have sequentially numbered files, and the -a (suffix length) option to have the numbers padded with zeroes to 3 digits. The word ‘chunk.’ is the prefix for the split file names.

split -l 500000 -d -a 3 big-file.txt chunk.

In our example, this creates 266 files (numbered 000 to 265) that are more manageable on modestly powered computers.

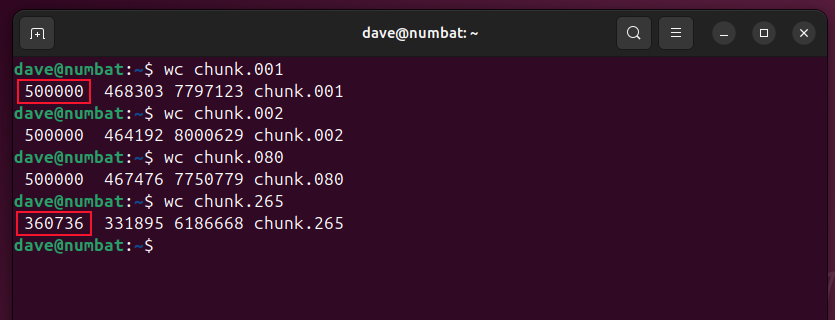

wc chunk.001

wc chunk.002

wc chunk.080

wc chunk.265

Each file contains 500,000 complete lines, apart from the last file. That file holds whatever lines were left over.
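The same split can be rehearsed on a small file before committing to a multi-gigabyte one. This sketch assumes GNU coreutils (the -d option is a GNU extension) and uses a generated 1,200-line sample in a temporary directory as a stand-in for the real file; the number of chunks to expect is the line count divided by the chunk size, rounded up.

```shell
workdir=$(mktemp -d)
seq 1 1200 > "$workdir/sample.txt"   # 1,200 numbered lines stand in for big-file.txt

lines_per_chunk=500
total_lines=$(wc -l < "$workdir/sample.txt")
# Ceiling division: how many chunks to expect.
expected=$(( (total_lines + lines_per_chunk - 1) / lines_per_chunk ))

# Same options as in the article, scaled down.
split -l "$lines_per_chunk" -d -a 3 "$workdir/sample.txt" "$workdir/chunk."

actual=$(ls "$workdir"/chunk.* | wc -l)
last_chunk_lines=$(wc -l < "$workdir/chunk.002")
echo "expected $expected chunks, found $actual"
echo "last chunk has $last_chunk_lines lines"

rm -r "$workdir"
```

The first two chunks hold 500 lines each and the last one holds the remaining 200.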

Using head and tail

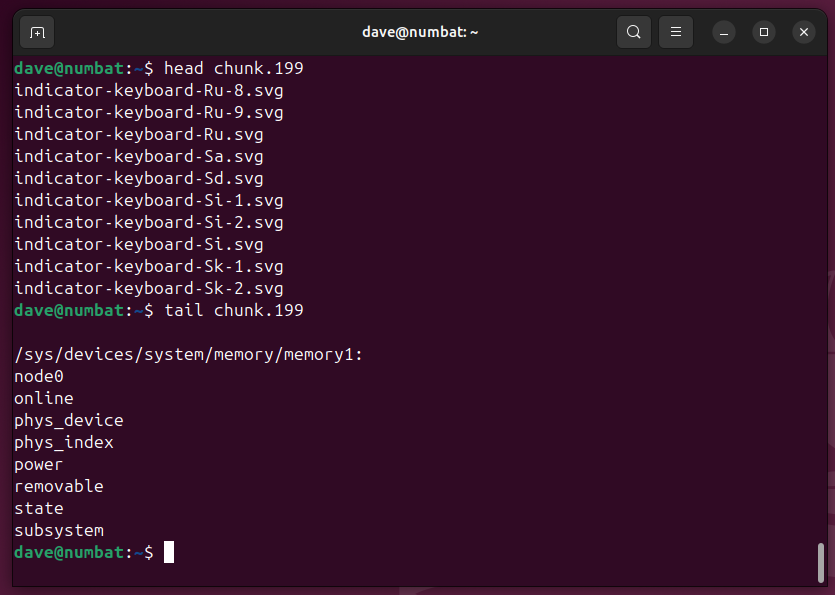

The head and tail commands let you take a look at a selection of lines from the top or end of a file.

head chunk.199

tail chunk.199

By default, you’re shown 10 lines. You can use the -n (lines) option to ask for more or fewer lines.

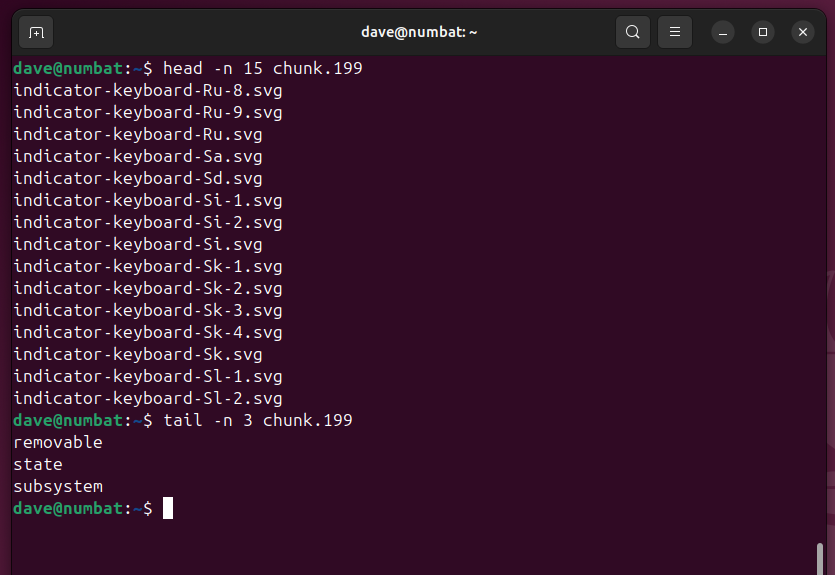

head -n 15 chunk.199

tail -n 3 chunk.199

If you know the region within a file that interests you, you can select that region by piping the output from tail through head. The only quirk of this method is that you specify the first line of the region counted backward from the end of the file, not forward from the start of the file.

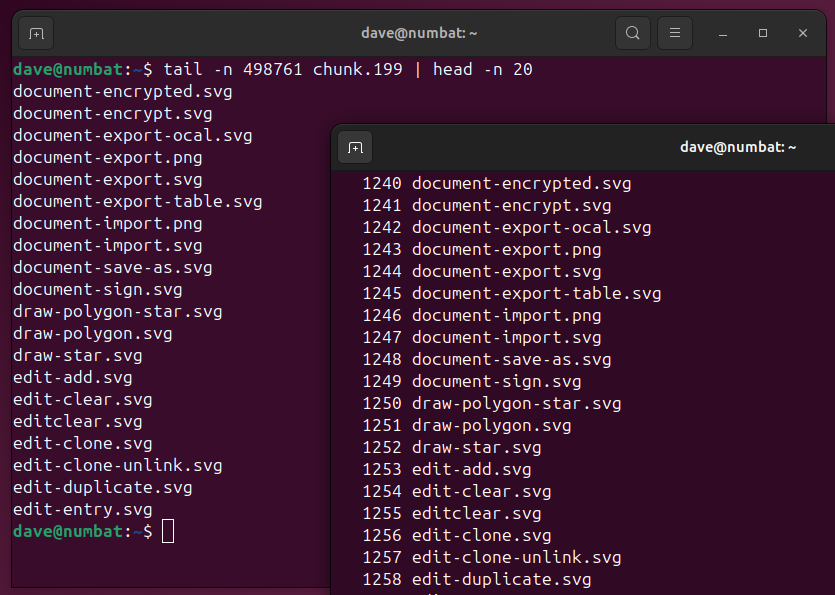

The file has 500,000 lines in it. If we want to see 20 lines starting at line 1240, we need to tell tail to start 500,000 - 1,239 = 498,761 lines from the end. Note that we subtract 1,239, one less than the starting line number, so that line 1240 itself is included.

The output is piped into head which displays the top 20 lines.

tail -n 498761 chunk.199 | head -n 20

Comparing the output to the same file in less, displayed from line 1240 onward, we can see that they match.
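The subtraction is easy to get wrong by one, so it may be worth checking on a file where every line contains its own line number. This is a sketch under that assumption, using a generated 5,000-line sample; the variable names are illustrative.

```shell
workdir=$(mktemp -d)
seq 1 5000 > "$workdir/sample.txt"    # line N contains the number N

total=$(wc -l < "$workdir/sample.txt")
start=1240      # first line of the region
count=20        # number of lines wanted

# Lines from "start" to the end of the file = total - (start - 1).
from_end=$(( total - (start - 1) ))

region=$(tail -n "$from_end" "$workdir/sample.txt" | head -n "$count")
first_line=$(printf '%s\n' "$region" | head -n 1)
last_line=$(printf '%s\n' "$region" | tail -n 1)
echo "region runs from line $first_line to line $last_line"

# tail also accepts a leading plus sign to count from the start of the file.
same_region=$(tail -n +"$start" "$workdir/sample.txt" | head -n "$count")

rm -r "$workdir"
```

The plus-sign form (tail -n +1240) selects the same lines without needing to know the file's length.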

Alternatively, you could split the file at the first line of interest, then use head to view the top of the new file.

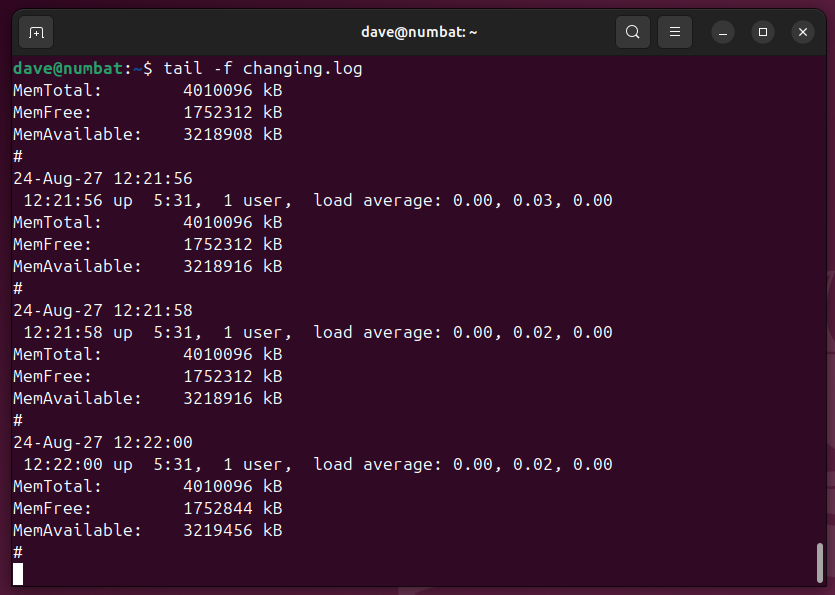

Another trick you can do with tail is monitor changing data. If you have a file that is being updated like a log file, the -f (follow changes) option tells tail to display the bottom of the file whenever it changes.
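Following a file normally runs until you press Ctrl+C, which makes it awkward to try in a script. The sketch below is one way to watch the behavior in a self-terminating form. It assumes GNU coreutils for the timeout command, and the log file and its entries are invented for the demonstration.

```shell
workdir=$(mktemp -d)
log="$workdir/changing.log"
echo "existing entry" > "$log"

# Follow the file in the background; timeout stops tail after 3 seconds
# so the sketch ends on its own (interactively, you would press Ctrl+C).
timeout 3 tail -f "$log" > "$workdir/seen.txt" &
follower=$!

sleep 1
echo "new entry" >> "$log"    # appended while tail is watching

# timeout exits non-zero when it has to stop tail; that is expected here.
wait "$follower" || true

cat "$workdir/seen.txt"
seen_new=$(grep -c "new entry" "$workdir/seen.txt")

rm -r "$workdir"
```

The captured output contains the line that was appended after tail started, as well as the line that was already in the file.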

tail -f changing.log

Filtering Lines With grep

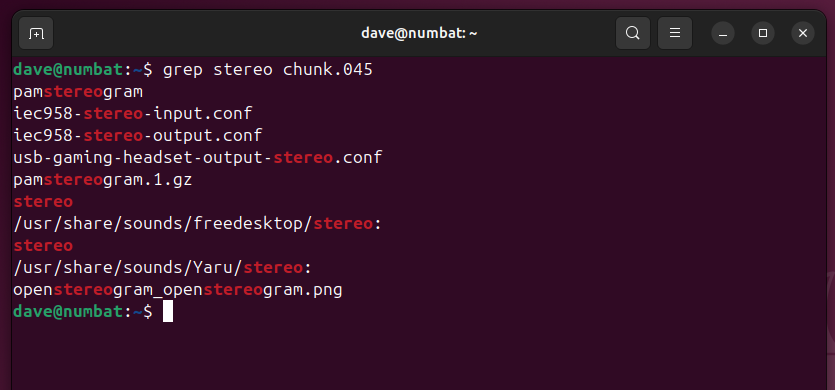

The grep command is very powerful. The simplest example uses grep to find lines in a file that contain the search string.

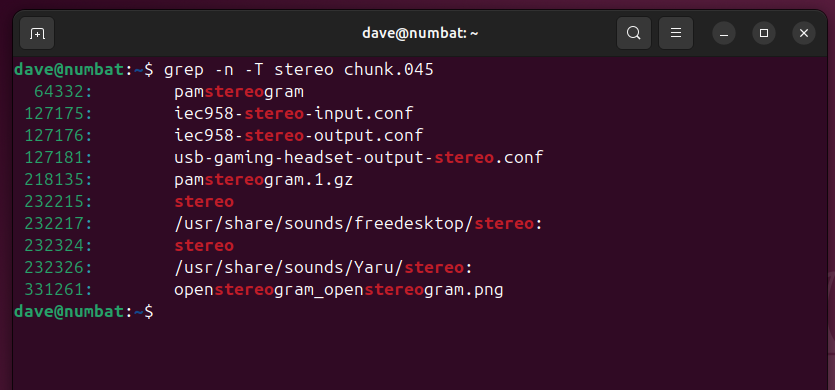

grep stereo chunk.045

We can add line numbers with the -n (line numbers) option so that we can locate those lines in the file, if we want to. The -T (tab) option tabulates the output.

grep -n -T stereo chunk.045

Searching through multiple files is just as easy.

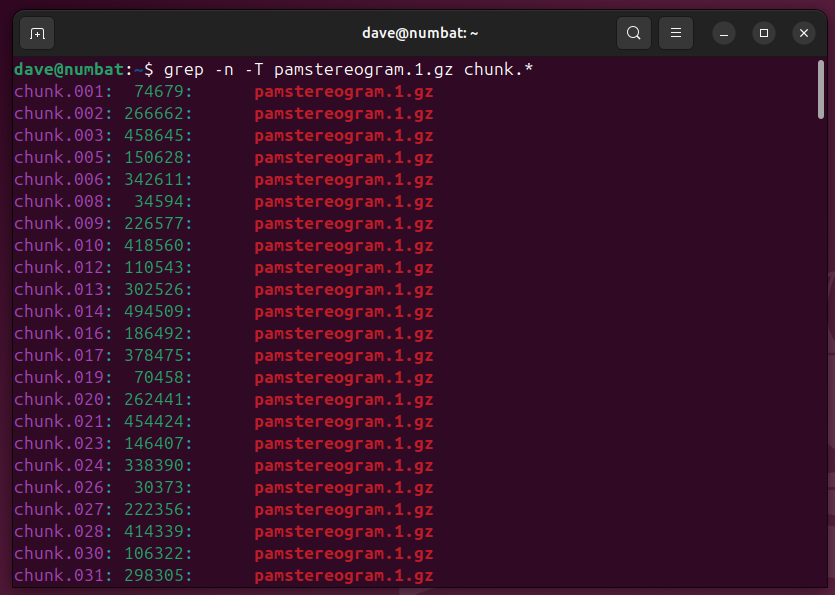

grep -n -T pamstereogram.1.gz chunk.*

The filename and the line number are given for each match.
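The output format for a multi-file search can be previewed with a few tiny files. In this sketch the file contents are made up, and the -T option is left out so that the plain file:line:text layout is visible.

```shell
workdir=$(mktemp -d)
printf 'mono\nstereo mix\nsurround\n'  > "$workdir/chunk.000"
printf 'stereo pair\nquad\n'           > "$workdir/chunk.001"
printf 'nothing relevant here\n'       > "$workdir/chunk.002"

# -n adds line numbers; with several files grep also prefixes the file name.
# The cd happens inside the command substitution, so the current directory
# of the calling shell is unchanged.
matches=$(cd "$workdir" && grep -n stereo chunk.*)
printf '%s\n' "$matches"
# chunk.000:2:stereo mix
# chunk.001:1:stereo pair

rm -r "$workdir"
```

Files without a match, such as chunk.002 here, simply do not appear in the output.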

You can also use grep to search through live streams of information.
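Any command that keeps printing lines can be filtered this way. As a rough illustration, the sketch below uses a hypothetical emit_stats function as the data source; it prints a few /proc/meminfo-style lines with made-up values and then stops, so the pipeline ends on its own.

```shell
# Hypothetical stand-in for a monitoring script: prints three rounds of
# meminfo-style lines with invented values, then finishes.
emit_stats() {
  for i in 1 2 3; do
    printf 'MemTotal: 4000000 kB\n'
    printf 'MemAvailable: %d kB\n' $(( 2000000 + i ))
    printf 'SwapFree: 1000000 kB\n'
  done
}

# grep passes through only the matching lines from the stream.
filtered=$(emit_stats | grep MemAvailable)
printf '%s\n' "$filtered"
filtered_count=$(printf '%s\n' "$filtered" | wc -l)
first_match=$(printf '%s\n' "$filtered" | head -n 1)
```

When grep's own output is piped into another command, GNU grep's --line-buffered option makes it pass each matching line along as soon as it is found, rather than waiting for a buffer to fill.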

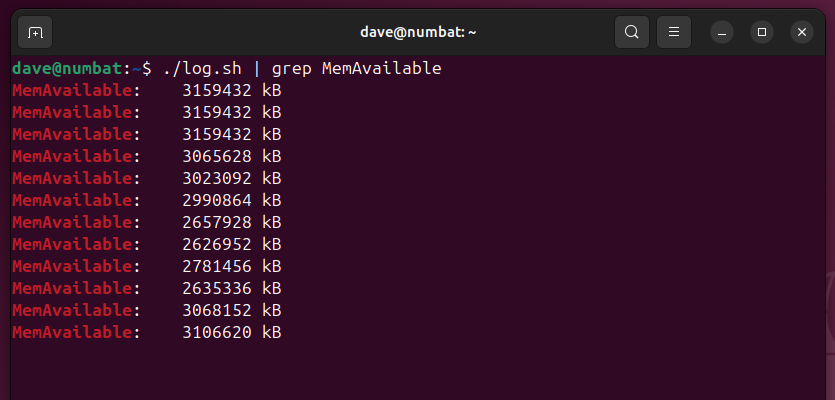

./log.sh | grep MemAvailable

The Bigger They Come…

You can get as fancy as you like with grep, and use regular expressions in the search string. For all the commands shown here, I recommend checking out their man pages. They do much more than I’ve been able to cover here, and some of their other options may be valuable to your particular use case.