In an interesting development for the GPU industry, PCIe-attached memory could change how we think about GPU memory capacity and performance. Panmnesia, a company backed by South Korea’s KAIST research institute, is working on technology built on Compute Express Link (CXL), an open interconnect standard that runs over PCIe, to let GPUs use external memory resources via the PCIe interface.

Traditionally, GPUs like the RTX 4060 are limited by their onboard VRAM, which can bottleneck performance in memory-intensive tasks such as AI training, data analytics, and high-resolution gaming. CXL leverages the high-speed PCIe connection to attach external memory modules directly to the GPU.

This method provides a low-latency memory expansion option. According to reports, the technology achieves double-digit nanosecond latency, a substantial reduction compared to standard SSD-based solutions.

Moreover, this technology isn’t limited to just traditional RAM. SSDs can also be used to expand GPU memory, offering a versatile and scalable solution. This capability allows for the creation of hybrid memory systems that combine the speed of RAM with the capacity of SSDs, further enhancing performance and efficiency.

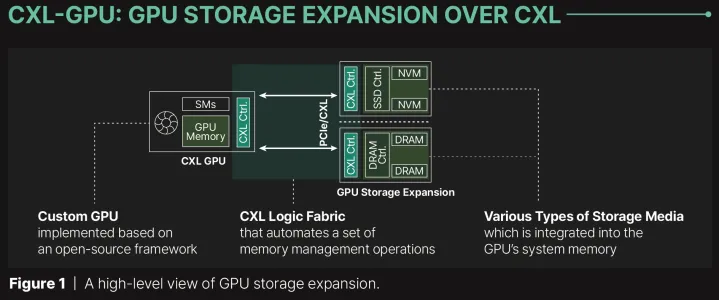

While CXL operates on a PCIe link, integrating this technology with GPUs isn’t straightforward. GPUs lack the necessary CXL logic fabric and subsystems to support DRAM or SSD endpoints. Therefore, simply adding a CXL controller is not feasible.

GPU cache and memory systems only recognize expansions through Unified Virtual Memory (UVM). However, Panmnesia’s tests showed that UVM delivered the poorest performance across the GPU kernels tested, due to overhead from host runtime intervention during page faults and inefficient data transfers at the page level.
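To see why page-level transfers can be wasteful, here is a rough back-of-the-envelope sketch in Python. It is not Panmnesia's benchmark: the 4 KiB migration granule, the 64-byte cache line, and the sparse access pattern are all assumptions chosen for illustration, and the model ignores fault-handling latency entirely.

```python
# Toy model: bytes moved for sparse reads under page-granular migration
# versus cache-line-granular load/store. All sizes are assumptions.
PAGE_BYTES = 4096   # assumed migration granularity (real UVM sizes vary)
LINE_BYTES = 64     # assumed cache-line size for load/store access


def bytes_moved(addresses, granule):
    """Total bytes transferred if each touched granule is fetched once."""
    touched = {addr // granule for addr in addresses}
    return len(touched) * granule


# 1,000 reads, each landing in a different 4 KiB page (a sparse pattern).
sparse = [i * PAGE_BYTES for i in range(1000)]

page_traffic = bytes_moved(sparse, PAGE_BYTES)   # whole pages migrated
line_traffic = bytes_moved(sparse, LINE_BYTES)   # only the lines touched
amplification = page_traffic // line_traffic

print(page_traffic, line_traffic, amplification)  # 4096000 64000 64
```

Under these assumptions, the page-granular path moves 64 times more data than the kernel actually reads. Dense, sequential access would narrow that gap considerably, so the figure is a worst-case illustration rather than a measurement.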

To address the issue, Panmnesia developed a series of hardware layers that support all key CXL protocols, consolidated into a unified controller. This CXL 3.1-compliant root complex includes multiple root ports for external memory over PCIe and a host bridge with a host-managed device memory decoder. This decoder connects to the GPU’s system bus and manages the system memory, providing direct access to expanded storage via load/store instructions, effectively eliminating UVM’s issues.
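The decoder's role can be pictured as a simple address-range lookup. The Python sketch below is a toy model only: the class names, the 8 GiB of local memory, and the two 64 GiB windows are hypothetical, and real CXL decoders also handle details such as interleaving, which this sketch omits.

```python
from dataclasses import dataclass

GIB = 1 << 30


@dataclass(frozen=True)
class HdmRange:
    """One hypothetical decoder entry: an address window and its root port."""
    base: int
    size: int
    root_port: int

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


class ToyHdmDecoder:
    """Routes a physical address to local memory or a CXL root port."""

    def __init__(self, local_bytes, ranges):
        self.local_bytes = local_bytes
        self.ranges = list(ranges)

    def route(self, addr):
        # Addresses below the local limit stay in on-board memory.
        if 0 <= addr < self.local_bytes:
            return ("local", addr)
        # Otherwise find the window that claims the address and
        # return the target port plus the offset inside that window.
        for r in self.ranges:
            if r.contains(addr):
                return (f"root_port_{r.root_port}", addr - r.base)
        raise ValueError(f"address {addr:#x} is not mapped")


# Assumed layout: 8 GiB local, then two 64 GiB expanders.
decoder = ToyHdmDecoder(
    local_bytes=8 * GIB,
    ranges=[
        HdmRange(base=8 * GIB, size=64 * GIB, root_port=0),
        HdmRange(base=72 * GIB, size=64 * GIB, root_port=1),
    ],
)

print(decoder.route(1 * GIB))         # ('local', 1073741824)
print(decoder.route(8 * GIB + 4096))  # ('root_port_0', 4096)
print(decoder.route(72 * GIB))        # ('root_port_1', 0)
```

The point of the sketch is that an ordinary load or store carries only an address. The routing decision happens in hardware on every access, with no page fault and no host runtime involved.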

The implications of this technology are far-reaching. For AI and machine learning, the ability to add more memory means handling larger datasets more efficiently, accelerating training times, and improving model accuracy. In gaming, developers can push the boundaries of graphical fidelity and complexity without being constrained by VRAM limitations.

For data centers and cloud computing environments, Panmnesia’s CXL technology provides a cost-effective way to upgrade existing infrastructure. By attaching additional memory through PCIe, data centers can enhance their computational power without requiring extensive hardware overhauls.

Despite its potential, Panmnesia faces a big challenge in gaining industry-wide adoption. The best graphics cards from AMD and Nvidia don’t support CXL, and they may never support it. There’s also a high possibility that industry players might develop their own PCIe-attached memory technologies for GPUs. Nonetheless, Panmnesia’s innovation represents a step forward in addressing GPU memory bottlenecks, with the potential to impact high-performance computing and gaming significantly.