Summary

- Space telescopes capture invisible light, which is converted into 1s and 0s for processing on Earth.

- Specialists meticulously refine the raw data, packaged as FITS files, into the space photos we see.

- Translating raw data into visuals isn’t editing; it’s necessary to make the invisible aspects of space visible.

We’ve all seen those stunning space photos in their vibrant glory, but did you know that’s not how they look straight out of the telescope? What telescopes see is a lot of invisible light and weird cosmic noise that has to be processed and translated into those iconic images we all recognize.

How Cameras Turn Light Into Images

When we take a picture with a phone or a digital camera, it just pops out instantly. Light goes in on one end, and a colored picture comes out on the screen as soon as you tap the shutter button.

It may feel instant, but that picture started out as a long list of 1s and 0s. When you press the shutter button, your phone converts an exposure of light falling onto its camera sensor into binary (1s and 0s) and then the phone’s processor builds an entire image out of those 1s and 0s.

It first takes that long string of 1s and 0s and builds what’s called a “grayscale brightness map.” This creates distinctions between light and dark, or the contrast in the image.
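To make that step concrete, here is a toy Python sketch of turning a string of 1s and 0s into a grid of brightness values. It assumes 8 bits per pixel and a made-up 2x2 exposure; a real phone pipeline is far more involved, and the function name is just for illustration.

```python
def bits_to_brightness_map(bits, width, bits_per_pixel=8):
    """Split a string of 1s and 0s into pixel values and arrange them in rows."""
    if len(bits) % bits_per_pixel != 0:
        raise ValueError("bit string does not divide evenly into pixels")
    pixels = [
        int(bits[i:i + bits_per_pixel], 2)  # read each chunk as a binary number
        for i in range(0, len(bits), bits_per_pixel)
    ]
    if len(pixels) % width != 0:
        raise ValueError("pixel count does not divide evenly into rows")
    return [pixels[r:r + width] for r in range(0, len(pixels), width)]

# A made-up 2x2 exposure: black, dark gray, light gray, white.
raw = "00000000" "01000000" "11000000" "11111111"
brightness_map = bits_to_brightness_map(raw, width=2)
print(brightness_map)  # [[0, 64], [192, 255]]
```

Each number is one pixel: 0 is black, 255 is white, and everything in between is a shade of gray.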

A few cleanups, filters, and enhancements later, it spits out the final image.

It takes a fraction of a second for the phone to do this math magic, so we never see any of it happening.

Now imagine there was so much binary data that it couldn’t be processed in an instant by a single computer processor. Instead, a team of specialists and scientists had to step in and carefully handle the processing. That’s basically how these space telescopes deliver their output.

The Space Telescope Delivery System

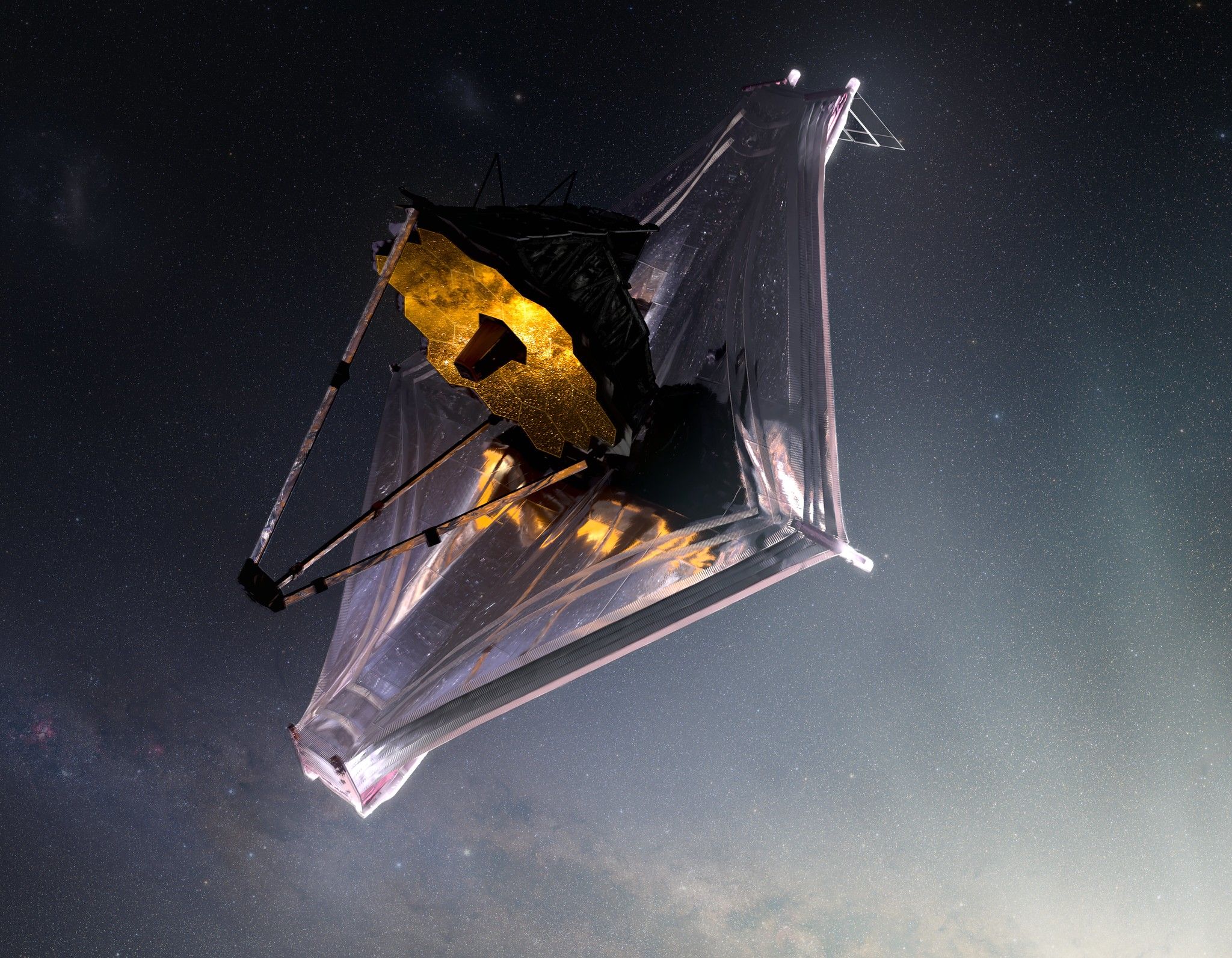

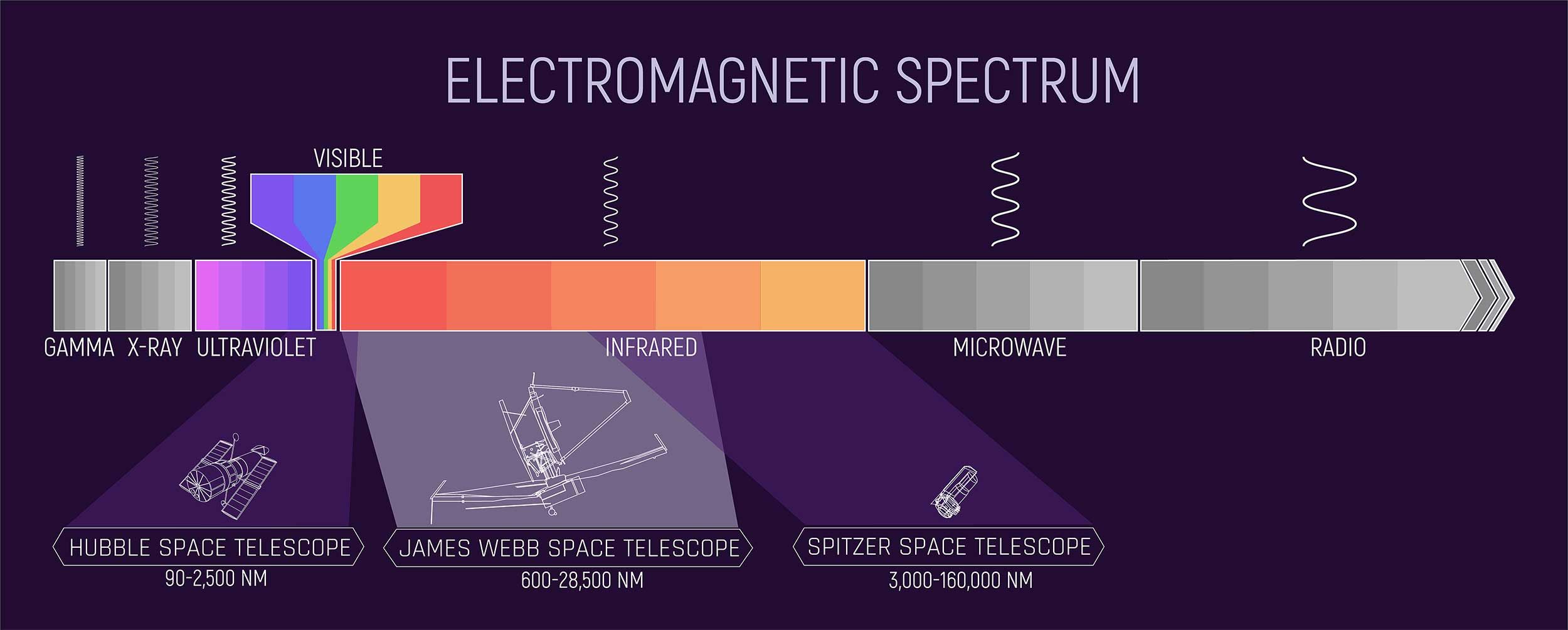

Unlike phone cameras or digicams, space telescopes aren’t limited to the light we all see. They’re capturing the world we can’t see with our eyes. Telescopes like the James Webb Space Telescope and Hubble can “see” invisible light, heat, radiation, and faint traces of far-off particles of ancient dust and gas.

The James Webb Space Telescope captures that invisible light, but it doesn’t turn any of it into pictures onboard.

The telescope simply turns the light exposures into packets of raw binary data (1s and 0s) and beams them back to Earth. These packets don’t contain any images, only digitized values of dark and light, plus metadata about the object coordinates and the health of the instruments onboard.

Back on Earth, the Space Telescope Science Institute (STScI) receives these raw binary packets and refines them into a computer-readable format called FITS. FITS, or Flexible Image Transport System, is a standard for packaging and organizing astronomical data like this.

These FITS files aren’t actual images either; they’re just raw pixel data arranged in order. To pull visual information out of them, you need software tools like the FITS Liberator.
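To show what “raw pixel data arranged in order” means, here is a rough Python sketch that builds and reads back a tiny FITS-style file by hand. It relies on the basic layout from the FITS standard (80-character header records, 2880-byte blocks, big-endian pixel values) and handles only one small 16-bit image. The helper names are made up for illustration, and real archive files carry many more keywords than this.

```python
import struct

BLOCK = 2880  # FITS headers and data are padded to 2880-byte blocks
CARD = 80     # each header record ("card") is 80 ASCII characters

def make_card(keyword, value=""):
    """Format one fixed-width header card, e.g. 'NAXIS   =                    2'."""
    text = f"{keyword:<8}= {value:>20}" if value else keyword
    return text.ljust(CARD).encode("ascii")

def pad(data, fill):
    """Pad bytes out to a whole number of blocks."""
    return data + fill * (-len(data) % BLOCK)

def build_tiny_fits(rows):
    """Pack a small grid of integers into a minimal single-image FITS file."""
    height, width = len(rows), len(rows[0])
    header = b"".join([
        make_card("SIMPLE", "T"),          # marks a standard FITS file
        make_card("BITPIX", "16"),         # 16-bit signed integer pixels
        make_card("NAXIS", "2"),           # two axes: a flat image
        make_card("NAXIS1", str(width)),   # columns
        make_card("NAXIS2", str(height)),  # rows
        make_card("END"),
    ])
    flat = [value for row in rows for value in row]
    data = struct.pack(f">{len(flat)}h", *flat)  # pixel values are big-endian
    return pad(header, b" ") + pad(data, b"\0")

def read_tiny_fits(blob):
    """Parse the header cards, then unpack the pixel grid that follows."""
    header, offset = {}, 0
    while offset < len(blob):
        card = blob[offset:offset + CARD].decode("ascii")
        offset += CARD
        keyword = card[:8].strip()
        if keyword == "END":
            break
        if card[8:10] == "= ":
            header[keyword] = card[10:].strip()
    data_start = -(-offset // BLOCK) * BLOCK  # data begins on the next block boundary
    width, height = int(header["NAXIS1"]), int(header["NAXIS2"])
    flat = struct.unpack_from(f">{width * height}h", blob, data_start)
    return header, [list(flat[r * width:(r + 1) * width]) for r in range(height)]

blob = build_tiny_fits([[1, 2, 3], [4, 5, 6]])
header, pixels = read_tiny_fits(blob)
print(len(blob), header["BITPIX"], pixels)
```

Notice there is no color or contrast information anywhere in the file, only a text header describing the grid followed by the numbers themselves.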

The FITS Liberator maps those unprocessed pixel values onto brightness levels you can actually see. Technically, it’s called “stretching” the FITS file.
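Here is a small Python sketch of what a stretch does to the numbers. It uses an asinh curve, which is one common choice for astronomical data. The `softening` parameter and the sample values are assumptions for illustration, not settings taken from any particular tool.

```python
import math

def asinh_stretch(values, softening=0.05):
    """Map raw pixel values to 0-255, lifting faint pixels and compressing bright ones."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1                 # avoid dividing by zero on a flat image
    norm = math.asinh(1 / softening)      # makes the brightest pixel land on 255
    stretched = []
    for value in values:
        x = (value - lo) / span           # scale to the 0..1 range
        y = math.asinh(x / softening) / norm
        stretched.append(round(y * 255))
    return stretched

# Made-up raw values: mostly faint sky plus one very bright star.
raw = [12, 15, 20, 40, 90, 5000]
linear = [round((v - min(raw)) / (max(raw) - min(raw)) * 255) for v in raw]
stretched = asinh_stretch(raw)
print("linear:   ", linear)
print("stretched:", stretched)
```

With plain linear scaling, the single bright star squashes everything else to near black. The stretch spreads the faint values over more gray levels, which is where the nebula detail lives.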

The camera onboard the James Webb Space Telescope has a bunch of physical filters tuned to very narrow bands of the invisible light we’ve been talking about. Some filters are good for picking up specific gases, others are for detecting dust. The output of each filter is packaged into its own FITS file. So one FITS file corresponds to one filter exposure.

Here’s the final piece of the puzzle: each FITS file isolated by a filter can be “stretched” into a grayscale (monochrome) snapshot. With as few as three snapshots on hand, you can composite a full-color image together!

Let’s Build A Space Photo

The FITS files I’ve been talking about are actually publicly available online for anyone to download. The tools needed for “stretching” FITS files and creating composites are free too. So I tried compositing an image out of that space data myself.

Step #1: Downloading the Raw Data

The Mikulski Archive for Space Telescopes (or MAST) is an archive where you can search by sky coordinates or object name for data captured by Hubble, Webb, TESS, and a few other missions.

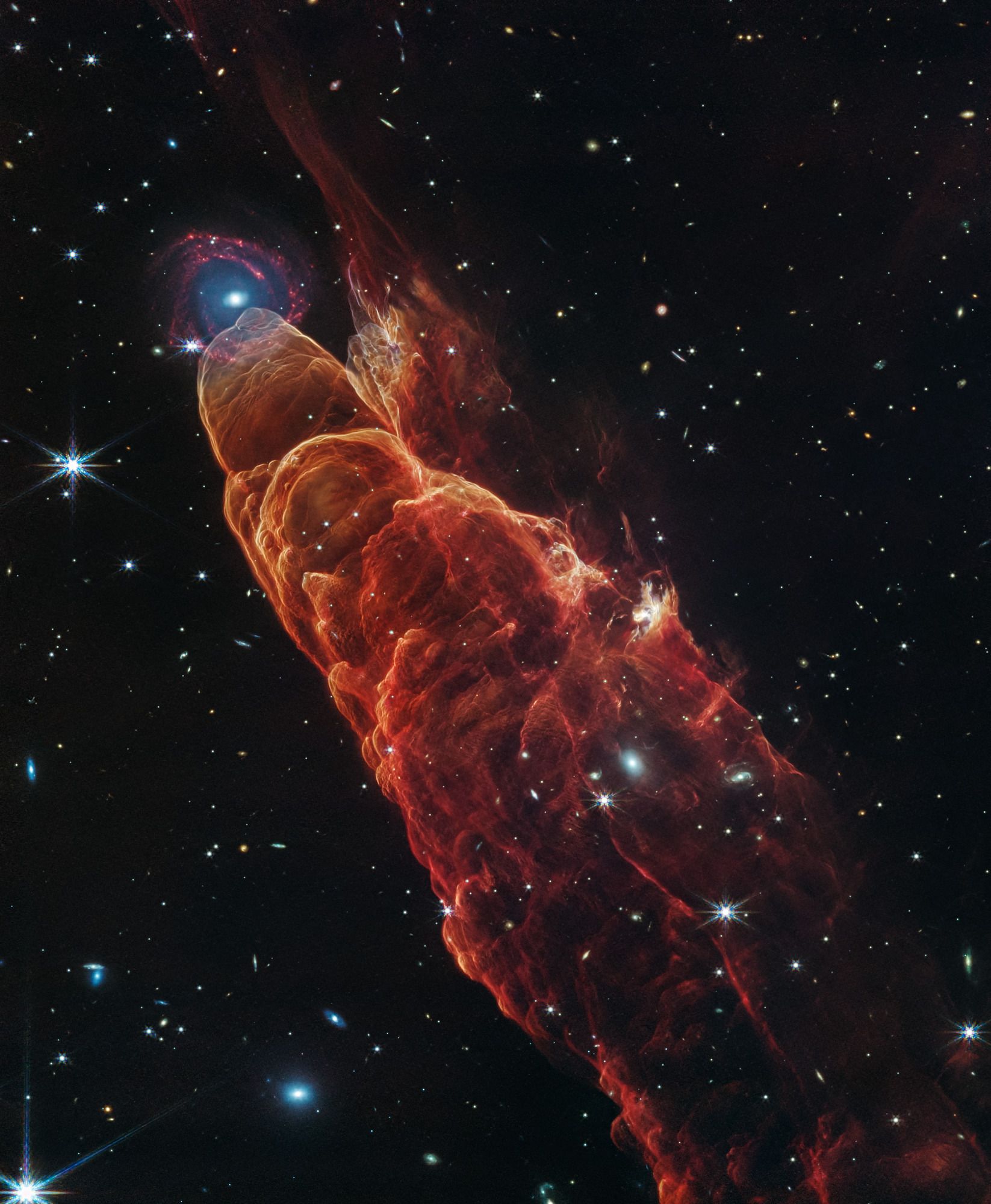

I wanted to construct a Webb image and chose the Pillars of Creation as my subject.

The search returned more than 100 FITS files. These raw datasets are massive. We’re talking 2–5 terabytes of data, perhaps more. I didn’t feel like frying my PC that day, so I decided to only work with three files, which weighed around 3 GB total.
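The sizes add up quickly because every pixel is stored as a full number. As a back-of-the-envelope Python sketch, the data portion of a 2-D FITS image takes width × height × bytes per pixel, rounded up to whole 2880-byte blocks. The mosaic dimensions below are made up for illustration.

```python
def fits_image_bytes(width, height, bitpix):
    """Data size of one 2-D FITS image, padded to whole 2880-byte blocks."""
    raw = width * height * abs(bitpix) // 8  # BITPIX is bits per pixel (negative = float)
    blocks = -(-raw // 2880)                 # ceiling division
    return blocks * 2880

# Hypothetical mosaic: 10,000 x 10,000 pixels stored as 32-bit floats (BITPIX = -32).
size = fits_image_bytes(10_000, 10_000, -32)
print(f"{size / 1e9:.2f} GB")  # 0.40 GB
```

That is one image layer from one filter. Multiply by extra data layers and dozens of exposures, and the totals climb fast.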

The beauty of this system is you don’t need every single FITS file from every single filter to make a usable composite. Each FITS file is labeled by the filter it came from, so you can grab one short-, one mid-, and one long-wavelength filter, stretch them, and stack them. That’s enough for a barebones composite.
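If you’d rather not pick by hand, here is a rough Python sketch of choosing three filters from a list of file names. It leans on my understanding of Webb’s filter naming, where the digits give the wavelength in hundredths of a micron (F090W is about 0.90 microns, F444W about 4.44). The file names are hypothetical, and real archive names are longer.

```python
import re

# Assumed pattern: "f" + digits + bandwidth code, e.g. f090w, f335m, f470n.
FILTER_RE = re.compile(r"f(\d{3,4})(w2|w|m|n)")

def filter_wavelength_um(filename):
    """Return the filter wavelength in microns parsed from a file name, or None."""
    match = FILTER_RE.search(filename.lower())
    return int(match.group(1)) / 100 if match else None

def pick_short_mid_long(filenames):
    """Sort files by filter wavelength and return the shortest, middle, and longest."""
    tagged = []
    for name in filenames:
        wavelength = filter_wavelength_um(name)
        if wavelength is not None:
            tagged.append((wavelength, name))
    if len(tagged) < 3:
        raise ValueError("need at least three files with a recognizable filter name")
    tagged.sort()
    return tagged[0][1], tagged[len(tagged) // 2][1], tagged[-1][1]

# Hypothetical file names for illustration only.
files = ["demo_f444w.fits", "demo_f090w.fits", "demo_f200w.fits",
         "demo_f335m.fits", "demo_f187n.fits"]
chosen = pick_short_mid_long(files)
print(chosen)  # ('demo_f090w.fits', 'demo_f200w.fits', 'demo_f444w.fits')
```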

Step #2: Stretching the FITS

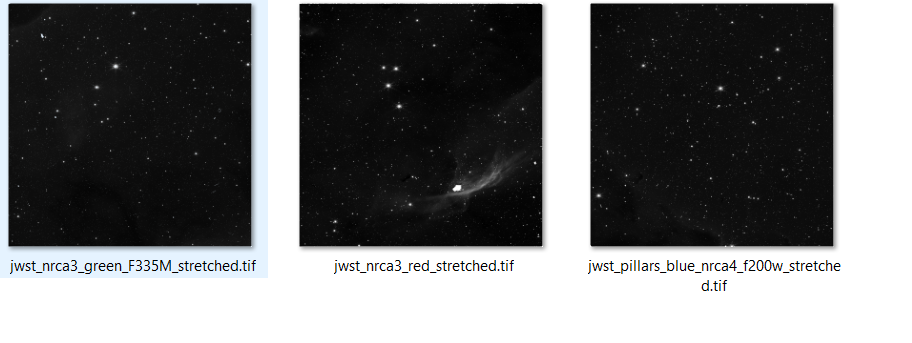

The next step is “stretching” the FITS files. I installed FITS Liberator to do that. It creates grayscale images, which I’ll stack and colorize later.

I adjusted the brightness and darkness levels of the FITS array until it looked right to me. Then I exported it as a black and white image. I repeated the same steps for the other two FITS files. I’m sure there’s a scientific way to do this, but I just eyeballed it.
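Eyeballing the levels amounts to choosing a black point and a white point. A less subjective starting point is to take them from percentiles of the data, as in this Python sketch. The 10th and 90th percentiles and the sample values are arbitrary assumptions, and this is not necessarily how any given tool does it.

```python
def percentile(values, pct):
    """Nearest-rank style percentile of a list of numbers."""
    ordered = sorted(values)
    index = round((len(ordered) - 1) * pct / 100)
    return ordered[index]

def apply_levels(values, black, white):
    """Clip values to [black, white] and rescale them to 0-255."""
    span = white - black
    if span <= 0:
        raise ValueError("white point must be above black point")
    leveled = []
    for value in values:
        x = (value - black) / span
        x = min(1.0, max(0.0, x))  # anything outside the range is clipped
        leveled.append(round(x * 255))
    return leveled

# Made-up pixel values: one dead pixel (3) and one saturated star (900).
raw = [3, 10, 11, 12, 13, 14, 15, 16, 17, 900]
black = percentile(raw, 10)
white = percentile(raw, 90)
leveled = apply_levels(raw, black, white)
print(black, white, leveled)
```

The outliers get clipped to pure black and pure white, and the values in between use the full gray range.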

Step #3: Compositing

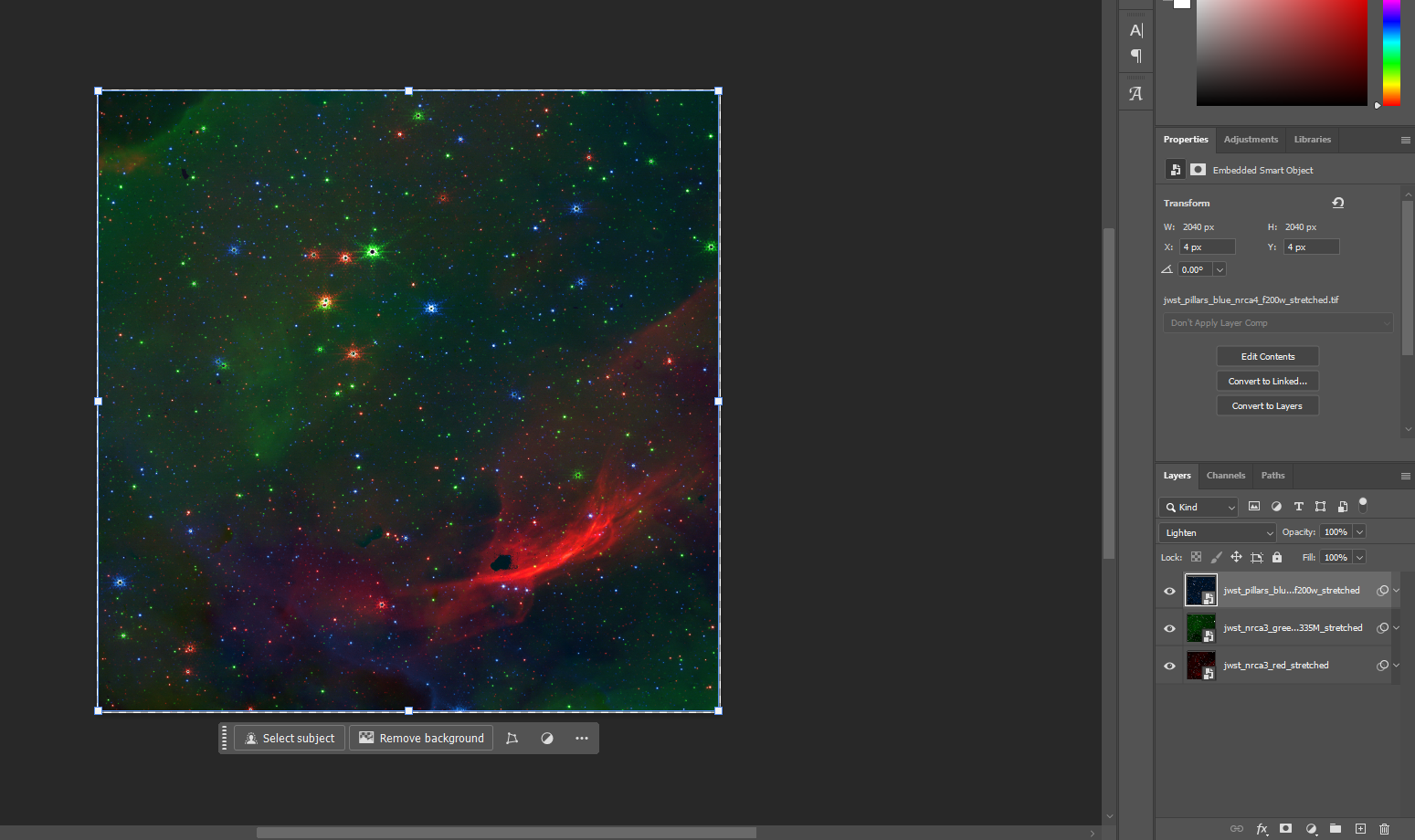

With the three freshly liberated grayscale images of the Pillars of Creation, I was ready to stitch them together.

What you’re looking at in these black and white pictures is an infrared (invisible light) snapshot of this nebula. Since it cannot be seen with the naked eye, we have to “map” or approximate color. Kind of like how thermal vision goggles show heat as red and cold as blue to make that information visible.

Filters onboard the James Webb Space Telescope that pass the shortest infrared wavelengths (the near-infrared end) are assigned a violet or blue hue. The filters on the far end, with the longest wavelengths, get red or orange. The midrange filters become greenish.

Once we’ve simulated these colors for each image file that originated from a particular filter, we’ll have three channels: red, green, and blue. Stacking them together will give us an RGB image.
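In code, stacking is just pairing up the three gray values at each pixel position. Here is a minimal Python sketch, assuming three equally sized grayscale grids with values from 0 to 255. It also packs the result as a binary PPM file, a very simple image format many viewers can open. The sample grids are made up.

```python
def stack_rgb(red_layer, green_layer, blue_layer):
    """Combine three equally sized grayscale grids into one grid of (R, G, B) tuples."""
    if not (len(red_layer) == len(green_layer) == len(blue_layer)):
        raise ValueError("layers must have the same number of rows")
    return [
        list(zip(row_r, row_g, row_b))
        for row_r, row_g, row_b in zip(red_layer, green_layer, blue_layer)
    ]

def to_ppm(pixels):
    """Encode a grid of (R, G, B) tuples as a binary PPM (P6) image."""
    height, width = len(pixels), len(pixels[0])
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    body = bytes(channel for row in pixels for pixel in row for channel in pixel)
    return header + body

# Made-up 1x2 layers, one per filter, in whatever color order you chose.
red_layer = [[200, 10]]
green_layer = [[120, 10]]
blue_layer = [[30, 240]]

rgb = stack_rgb(red_layer, green_layer, blue_layer)
ppm = to_ppm(rgb)
print(rgb)  # [[(200, 120, 30), (10, 10, 240)]]
```

Writing `ppm` to a file opened in binary mode would give you a viewable image.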

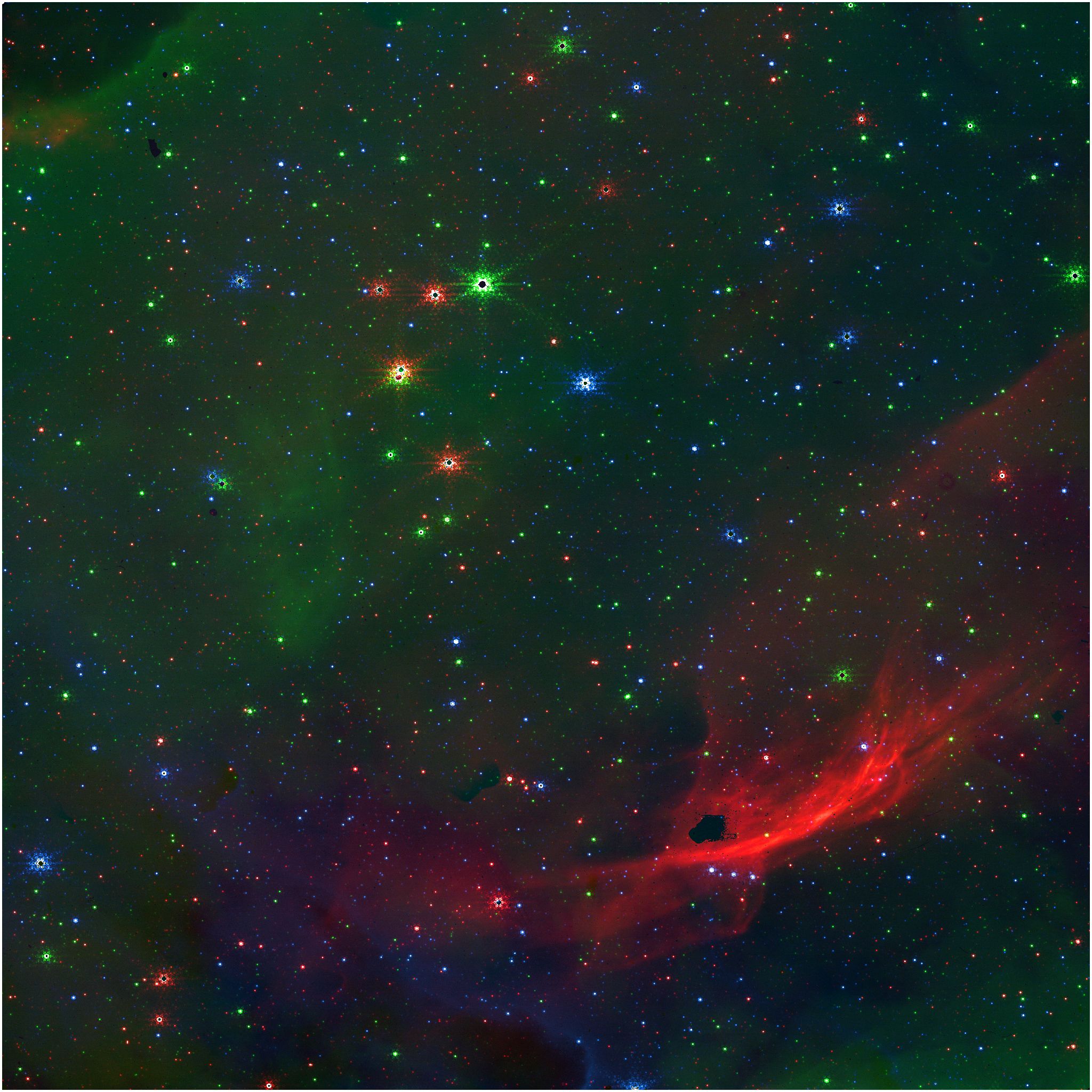

I brought the three monochrome images into Photoshop, colorized them, and blended them together. This was the result.
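For the curious, here is roughly what colorizing and blending could look like per pixel, as a Python sketch. It assumes each gray layer is tinted by multiplying it with a chosen hue and that the layers are combined with a “screen” blend. The blend mode actually used above isn’t stated, so treat that part as a guess, and the hues are arbitrary.

```python
def tint(gray, color):
    """Scale an (R, G, B) color by a grayscale brightness value from 0 to 255."""
    return tuple(round(gray * channel / 255) for channel in color)

def screen(a, b):
    """Screen blend: brightens like projecting two slides onto the same wall."""
    return tuple(255 - round((255 - x) * (255 - y) / 255) for x, y in zip(a, b))

def composite_pixel(grays, colors):
    """Tint each layer's gray value with its hue, then screen the layers together."""
    result = (0, 0, 0)
    for gray, color in zip(grays, colors):
        result = screen(result, tint(gray, color))
    return result

# Arbitrary hues for three layers.
hues = [(255, 128, 0), (0, 255, 0), (0, 0, 255)]  # orange, green, blue

only_first = composite_pixel([255, 0, 0], hues)
all_dark = composite_pixel([0, 0, 0], hues)
print(only_first, all_dark)  # (255, 128, 0) (0, 0, 0)
```

A pixel that is bright in only one layer takes on that layer’s hue, while pixels bright in several layers blend toward white.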

If our eyes could see in the infrared, the Pillars of Creation might look a little something like this. Note the red cloud. It’s a small part of the Pillars. The star cluster in the middle is NGC 6611.

Why Processing Is Necessary (and Awesome)

But where, you might ask, are the rest of the Pillars in my composition? The MAST zip archive I downloaded had 30 FITS files in it. I only processed 3 of those. The other 27 files held the rest of the details.

It’s an incredibly meticulous and painstaking process because each filter has to be processed one at a time by hand. The stunning composites we’re used to seeing can take several weeks to render, and involve teams of specialists and scientists working in tandem with sophisticated tools to composite and refine these masterpieces. The raw files can contain terabytes of data.

Now that you can appreciate the scale of genius and dedication behind NASA images, it’s also easy to see why these photos are so heavily processed.

What these researchers are doing isn’t editing in the usual sense; it’s more like translation. The telescope beams down 1s and 0s, just raw data, nothing else, and it’s their job to “translate” those numbers into visuals.

The reason we need that translation in the first place is that this stuff is literally invisible to us. To make the invisible visible, we have to “stretch” what the telescope captured into our visual range.

So even when we’re introducing color to these images, we aren’t inventing details. It’s just a way of creating visual distinction and interest.

Plus, it has scientific value. The telescope filters are tuned to capture specific materials and elements. Just by looking at colored composites, astronomers can read actual chemical signatures.

That’s what makes these masterpieces analytical tools. They can answer questions like which gas is which, how hot or cold it is, or how old stars are.

Can You See the Raw Versions?

Yes, you can. They’re publicly available online in NASA’s archives. There are two kinds of raw files you can access. The first kind is FITS files (the kind I used to build my composite), and the second is lightly processed monochrome raw images.

Unlike FITS files, which require specialized tools to open, these raw versions are basic JPEGs that have been “stretched” but not processed beyond that. Each space mission typically beams back hundreds of thousands of images over its lifetime, which are promptly uploaded to NASA’s archives. The final processed images take weeks or even months to show up, but these “raw” versions are made available to the public within hours.

Anyone with a decent internet connection and digital storage can access and play with these raw files.

Why Some Space Photos “Look Fake”

You might have heard someone say that NASA photos don’t feel “real.” Or you might have caught yourself in that uncanny valley where the colors feel too vivid, the details too sharp, and the shapes too unrecognizable.

If you’ve been paying attention, you already know the answer to this one.

These pictures aren’t faked; they’re translated. The reason they feel alien to us is that they are. The content itself is invisible, but it has been converted into a human-readable format.

It’s not guesswork either. Colors are mapped onto a space telescope’s physical filters based on the frequencies of light they let in. The color is not decoration; it’s annotation. Think of it like highlighting a piece of text with color. The neon yellow just makes it easier to spot.

So what do unedited space photos look like? The answer is either that they’re just 1s and 0s, or that they’re lightly processed, “stretched” grayscale maps.