Summary

- Vibe coding lets anyone create software without understanding code, dramatically lowering the barrier to entry.

- Vibe-coded apps carry risks, because they can contain quality and cybersecurity problems that the AI misses and the user can't spot.

- Large Language Models require human supervision due to potential inaccuracies in code generation.

Anyone can learn to code, but coding is hard. Thanks to the power of AI, you can just get a chatbot to write the code for you, but is that a good idea?

Welcome to the world of “vibe coding,” where anyone can make software, and it doesn’t matter if you don’t actually understand the code itself. Is that awesome, or is it actually a huge problem?

What Exactly Is “Vibe Coding”?

The term “vibe coding” is essentially slang for creating software by simply telling an AI what you want the code to do and letting it spit the code out for you. Then you compile and run the app, and if everything looks good, you call it a day and share your software.

It’s not about exact technical knowledge, but about the “vibe” you’re going for, I guess. In other words, people who do vibe coding are effectively still in the position of a client explaining to a software developer what they want. It’s just that they’ve replaced the human software developer with an AI.

More People Are Creating Software With AI. That’s Good, Right?

The “learn to code” message has been loudly promoted for years now, but the truth is that many people who would like to create software have neither the time to learn nor the resources to hire someone who can code. That leaves a sizable number of people with ideas for apps or other software and no way to make them a reality.

So from one perspective, vibe coding is pretty awesome. Just like AI image generation, it drops the barrier to entry through the floor for something that would usually take years of practice and study. You could argue that this is a democratization of software creation.

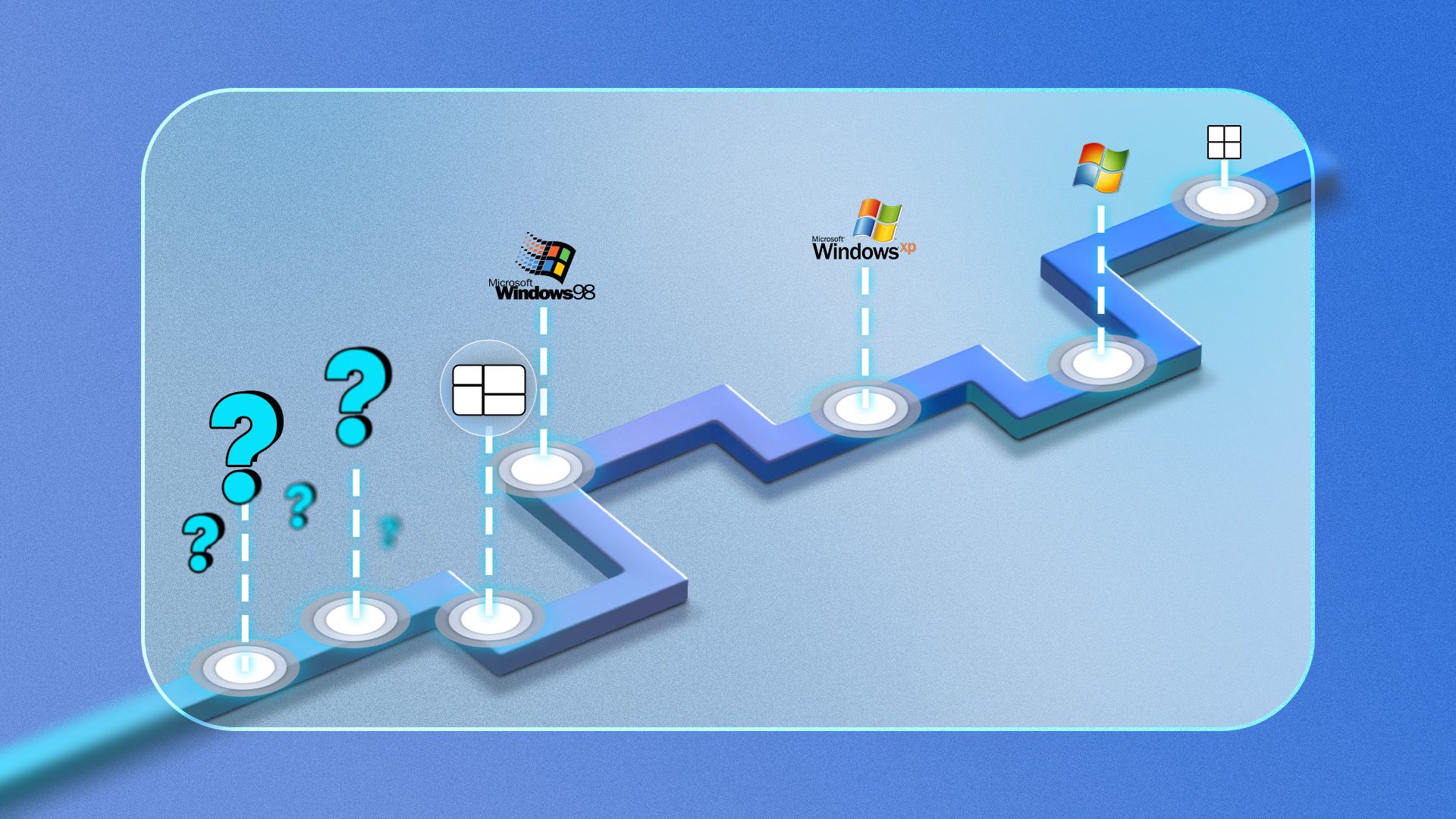

It also falls roughly in line with how programming has developed over the decades. In the beginning, programmers had to work in raw machine code, and then in assembly language, which is easier for humans to understand but still very close to machine code in function.

Later, high-level coding languages struck a middle ground between something that could be translated easily to machine code and a human language like English. Languages like C or Python might seem cryptic at first glance, but they have syntax much closer to the human side of the spectrum and represent a huge leap in how easy it is to make software.

So, you could see vibe coding as the natural next step in translating from human to machine language. That's a lovely idea in theory, but it presents some problems in practice.

Vibe-Coded Apps Can Be Dangerous

Here’s the thing: if you have no way of evaluating the quality of your code, it could have all sorts of problems that you’re simply oblivious to. Even if you test the code extensively and use AI to iteratively fix the bugs and issues you find, you still have no idea whether the code is actually good. Does it follow good cybersecurity practices? Does it have some obvious flaw that a human coder would spot in an instant? Perhaps more importantly, is there a not-so-obvious issue that only an experienced human coder would clock?
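As a hypothetical illustration (not taken from any real vibe-coded app), here is the kind of not-so-obvious flaw that sails through casual testing. It uses Python's standard-library sqlite3 module; the table, columns, and function names are made up for this example. Both lookup functions return the same answer for a normal name, but the first one builds its SQL by pasting user input straight into the query string, which leaves it open to SQL injection.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Flawed: user input is pasted into the SQL text, so input containing
    # quotes can change the meaning of the query (SQL injection).
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Fixed: a parameterized query; sqlite3 binds `name` as data, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    # Ordinary input: both versions look identical in testing.
    print(find_user_unsafe(conn, "alice"))
    print(find_user_safe(conn, "alice"))
    # Malicious input: the unsafe version leaks every row in the table.
    payload = "' OR '1'='1"
    print(find_user_unsafe(conn, payload))
    print(find_user_safe(conn, payload))
```

Someone who can't read the code would only ever see the first two lines of output match, which is exactly why this class of bug goes unnoticed. Parameterized queries are the standard fix.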

It’s one thing to vibe code a fun little game for your kids or for your personal DIY projects, but if you’re trying to make software that you want to publish, or even sell, the pitfalls are many.

LLMs Are Always Risky Unless You’re a Subject Expert

Because of the way Large Language Models work, there’s always a chance that an LLM will make a mistake or even make things up. When it comes to computer code, even an LLM that’s 99% reliable will still create significant problems once you have hundreds or thousands of lines of code, because a small error rate spread across that many lines adds up. Even worse, if you ask an LLM to evaluate that code, it will sometimes get the evaluation wrong for the same reasons.
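To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that every line has the same independent 99% chance of being correct. Real errors are neither independent nor evenly spread, so treat this as a toy model rather than a measurement of any actual LLM.

```python
def prob_all_correct(per_line_accuracy: float, num_lines: int) -> float:
    """Chance that every line is correct, assuming independent per-line errors."""
    return per_line_accuracy ** num_lines


def expected_errors(per_line_accuracy: float, num_lines: int) -> float:
    """Average number of wrong lines expected under the same assumption."""
    return (1 - per_line_accuracy) * num_lines


if __name__ == "__main__":
    accuracy = 0.99  # the "99% reliable" figure, applied per line (an assumption)
    for lines in (100, 1_000, 10_000):
        print(
            f"{lines:>6} lines: "
            f"P(no errors) = {prob_all_correct(accuracy, lines):.6f}, "
            f"expected errors = {expected_errors(accuracy, lines):.1f}"
        )
```

Under these assumptions, even a 100-line script has only about a 37% chance of being entirely error-free, and a 1,000-line app should be expected to contain around ten bad lines.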

This is a symptom of a broader problem with LLMs as a whole. If you are a subject expert, an LLM can be a powerful, profound productivity booster. An ace coder can now spend their time debugging the 1% of the code that’s wrong instead of writing the 99% that’s mostly boilerplate busywork. To someone like this, an LLM coding assistant is the best thing that’s ever happened to them.

For someone who has no idea how to debug code, or for anyone using an LLM in a subject area they know nothing about, it’s a deadly trap: you don’t know what you don’t know.

There Should Always Be a Human Coder in the Loop

I think this is generally true of any work done by an LLM, whether that’s coding or writing a research paper: you always need a human expert in the loop to check the LLM’s work. LLMs will never be 100% trustworthy or reliable, and anything below 100% means you need a human checking that output.

This doesn’t mean LLMs are useless or that they won’t revolutionize what we can do or how fast we can do it; it just means we will always have to supervise them competently. So, unfortunately, you’ll still have to learn to code. There’s no way around it.