HDR, or High Dynamic Range, is quite possibly the biggest display upgrade since HD TVs first arrived. Yes, I’d include 4K in that assessment, since HDR has a much bigger visual impact than simply adding more pixels to the mix.

These days, good HDR support and reproduction are key features to look for in a television, but what about computer monitors? It’s a feature you have to pay for, after all, so is it worth seeking out a (good) HDR monitor?

HDR for Monitors Is a Mess Right Now

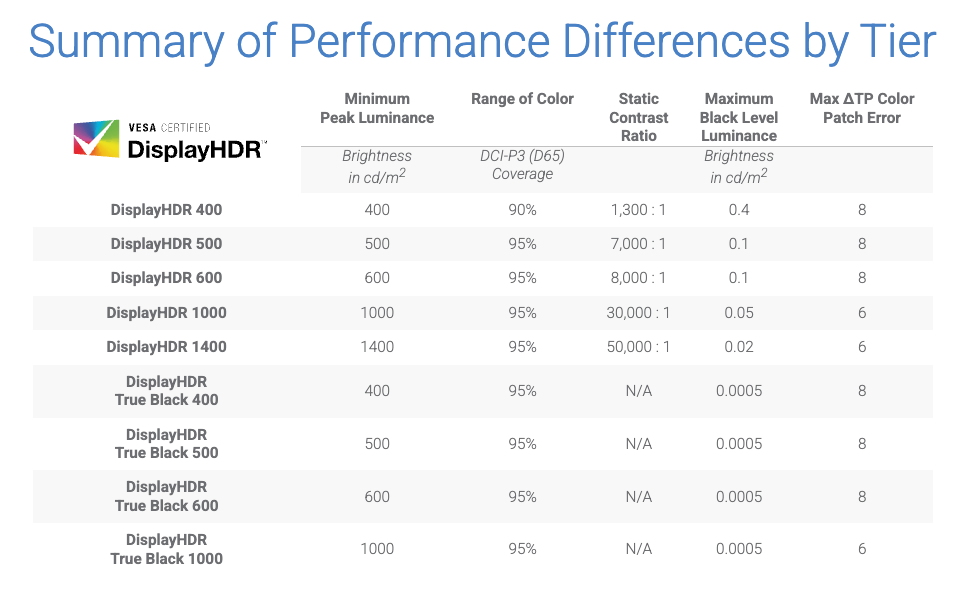

Just because a monitor says “HDR” somewhere on the box doesn’t mean it’s really HDR. The rules about who gets to put an “HDR” logo on their monitor packaging are a little loosey-goosey. If a monitor says “HDR Ready” or “DisplayHDR 400,” you’ll want to avoid it for HDR content specifically. In the former case, “HDR Ready” means the monitor can accept and display an HDR signal, but its reproduction doesn’t necessarily measure up to any particular standard or certification.

DisplayHDR 400 is the lowest level of VESA’s DisplayHDR certification, and the requirements are so laughably low that it’s hard to call the resulting image HDR: the screen only needs to reach 400 nits of peak brightness. In my opinion, you’ll want nothing less than DisplayHDR 600 certification, and preferably DisplayHDR 1000 or better.

However, it’s important to understand that poor HDR specs don’t make a monitor bad. Plenty of monitors are brilliant in SDR, with great contrast and excellent color accuracy. It’s just that it all falls apart as soon as you switch HDR on.

Desktop OS HDR Support Is a Bigger Mess

Since most people use Windows, I’ll start by saying that HDR on Windows was, and still is, an absolute disaster. To be fair, Microsoft has improved things, and we now even have Auto HDR on Windows, though in my experience it works only when it feels like it. There’s also a handy keyboard shortcut (Win+Alt+B) that toggles HDR, making it easy to switch on just before you start a movie or game.

You’ll want to use it, too, because the standard Windows desktop is a searing eyesore in HDR mode. That leaves you toggling HDR manually, or hoping the app or game you want to use switches it on automatically.

On macOS, things aren’t much better. If you’re using one of Apple’s expensive external displays, desktop HDR looks fine (or so I’m told) and works well with apps like Final Cut Pro, but HDR support in third-party apps is hit-and-miss.

I’ve never tried HDR on a Linux system, but before writing this I spent some time on the forums to see how people in Penguin Land were getting on with it, and it works! Well, unless it crashes your NVIDIA system, you don’t like editing config files, or your preferred desktop environment doesn’t support HDR.

So there’s no need to pick on any one OS here: HDR is no fun to manage on any of them.

OLED and MiniLED Screens Are the Only HDR-Worthy Models

Good HDR depends heavily on the difference between the darkest and brightest pixels that can be displayed at the same time within the same frame, so LCD panels without any local dimming zones are at a distinct disadvantage.

If the entire backlight is one big zone, your pixels can never achieve true black, and even if you crank the brightness to widen the gap, you’re raising the brightness across the entire screen, dark areas included. This is one of the reasons DisplayHDR 400 isn’t really HDR: it allows “screen-level” dimming, where the whole backlight acts as a single zone.

The more zones a panel has, the better its HDR picture can be, though screens with only a small number of zones still struggle. MiniLED backlights, however, have hundreds or thousands of zones, and that’s where HDR really comes to life. Higher-end miniLED panels are almost (but not quite) as good as OLED panels, which achieve perfect black levels because each pixel produces its own light and can be dimmed or switched off individually.
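To make the zone argument more concrete, here’s a deliberately simplified Python sketch of local dimming. It models a single row of 100 pixels showing one 1,000-nit highlight against pure black. It assumes, purely for illustration and not as measured specs, an LCD layer that can block all but 1/1000th of its backlight, a backlight in each zone driven to match the brightest pixel in that zone, and no light spilling between zones. The function names are hypothetical. Setting the zone count equal to the pixel count stands in for per-pixel control, as with OLED.

```python
from typing import List

# Assumed native LCD contrast of 1000:1 (illustrative only): the liquid-crystal
# layer can dim a pixel down to 1/1000th of its zone's backlight, but no further.
NATIVE_CONTRAST = 1000.0


def displayed_luminance(target: List[float], zones: int,
                        native_contrast: float = NATIVE_CONTRAST) -> List[float]:
    """Simulate a row of pixels behind `zones` equally sized backlight zones.

    Idealized model: each zone's backlight is set to the brightest target pixel
    in that zone, no light leaks between zones, and there's no minimum backlight.
    """
    n = len(target)
    if zones <= 0 or n % zones != 0:
        raise ValueError("pixel count must divide evenly into a positive zone count")
    size = n // zones
    shown: List[float] = []
    for z in range(zones):
        chunk = target[z * size:(z + 1) * size]
        backlight = max(chunk)
        # Light the LCD can't block: the darkest level any pixel in this zone can reach.
        floor = backlight / native_contrast
        shown.extend(max(t, floor) for t in chunk)
    return shown


def blooming_pixels(target: List[float], shown: List[float]) -> int:
    """Count pixels that should be pure black but are displayed as lit."""
    return sum(1 for t, s in zip(target, shown) if t == 0.0 and s > 0.0)


# One 1,000-nit highlight (think a star or streetlight) in an otherwise black row.
scene = [0.0] * 100
scene[50] = 1000.0

for zones in (1, 10, 100):
    shown = displayed_luminance(scene, zones)
    print(f"{zones:>3} zone(s): {blooming_pixels(scene, shown)} grey 'black' pixels, "
          f"pixel beside highlight = {shown[51]:.2f} nits")
```

In this toy model, a single zone leaves all 99 “black” pixels glowing at 1 nit. With 10 zones, only the 9 black pixels sharing the highlight’s zone do, which is the halo or “blooming” you can see around bright objects on dimming-zone screens. With per-pixel control, none do. Real panels are messier (light bleeds between zones, and backlights have minimum levels), but the model captures the basic trade-off.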

The bottom line is that unless a monitor uses miniLED or OLED technology, you should manage your expectations when it comes to HDR image quality. The obvious downside is that both technologies are still quite expensive, though prices have been steadily coming down.

Do You Actually Use HDR Content on Your Desktop?

I guess it should be obvious, but if you aren’t actually viewing any HDR content on your desktop monitor, then its HDR capabilities are a moot point. There’s no reason to pay for good HDR capabilities if you’ll never use them.

Even if you play games, HDR support is hit-or-miss, especially on PC. While many AAA titles offer impressive HDR, others don’t support it properly or rely on poor implementations that make things look worse.

HDR Monitors Matter for Content Creators

When all the stars align, HDR on the desktop can be amazing, and if you value that, you should spend the extra money on a good HDR monitor. If you’re a content creator, though, you will almost certainly need a good HDR monitor at some point.

Regardless of which visual medium you work in, the expanded range of HDR will be useful to you, and if you want to make HDR content well, you need to see what the final product will look like. Strictly speaking, what you need is a reference-grade HDR monitor, but for small-time creators on a budget, a good consumer-grade monitor can get close enough.

You can’t cut corners on quality here, though. If you’re doing paid work, you need a monitor commensurate with the value of that work, and you need to put in the time and effort to calibrate it properly.

Most People Should Skip HDR Monitors for Now

From my point of view, most people in the market for a new monitor shouldn’t worry too much about HDR as a feature. Focus instead on the core specs that matter for an SDR monitor: accurate colors, solid brightness, and good contrast still make for a better experience than a cheap HDR monitor with a flashy logo and underwhelming performance.

Unless you specifically need it, whether for content creation or for AAA gaming with HDR assets on a high-end display, you’ll get more bang for your buck elsewhere.