Key Takeaways

- iPhone 15 Pro users can’t access Visual Intelligence but can recreate it with Shortcuts and Google Lens.

- Recreate the Visual Intelligence feature by creating a shortcut in the Shortcuts app and assigning it to the Action Button.

- If you don’t have an Action Button, use the back tap gesture to run the same shortcut.

If you have an iPhone 15 Pro or iPhone 15 Pro Max, you may feel a little cheated. Your iPhone can run Apple Intelligence but doesn’t get the Visual Intelligence feature available on iPhone 16 models. Thankfully, you can recreate most of what this feature does by using the Shortcuts app and Google Lens.

What Is Visual Intelligence on iPhone 16?

Visual Intelligence is part of Apple’s suite of AI-powered Apple Intelligence tools. It uses your iPhone’s camera to capture an image of whatever you’re looking at. It will then bring up information about the image that you capture.

By pointing your iPhone at an object and long pressing the Camera Control button on iPhone 16 models, you can translate text, get information about businesses, or get details about the product or object you’re looking at.

Visual Intelligence is very similar to Google Lens, a Google feature that returns information about images. The Google app for iPhone includes Google Lens, which can use your iPhone’s camera to search for information on the things around you. The big difference with Visual Intelligence is that you can launch it with a long press of the Camera Control button, without opening an app first.

The iPhone 15 Pro and iPhone 15 Pro Max have enough RAM to run Apple Intelligence features. However, because they don’t have Camera Control buttons, there’s no way to access Visual Intelligence.

The good news is that it’s possible to recreate something similar to the Visual Intelligence feature and assign it to the Action Button on an iPhone 15 Pro or iPhone 15 Pro Max. If your iPhone doesn’t have an Action Button, it’s not all bad news, either. You can use the back tap gesture to trigger the same Visual Intelligence substitute, even on much older iPhones.

Create a Shortcut to Take and Pass a Photo to Google Lens

To recreate the Visual Intelligence feature on an iPhone 15 Pro or iPhone 15 Pro Max, you can create a custom shortcut in the Shortcuts app and assign it to the Action Button. When you open the camera and point it at an object, pressing the Action Button will run the shortcut. This will take a photo and pass that photo to Google Lens in the Google app, which offers similar visual search features to Apple’s Visual Intelligence.

If you don’t already have it on your iPhone, download and install the Google app. Open the Shortcuts app and tap the “+” (plus) icon in the top right of the screen to create a new shortcut. Tap “Search Actions,” type “Take Photo” and select the “Take Photo” action.

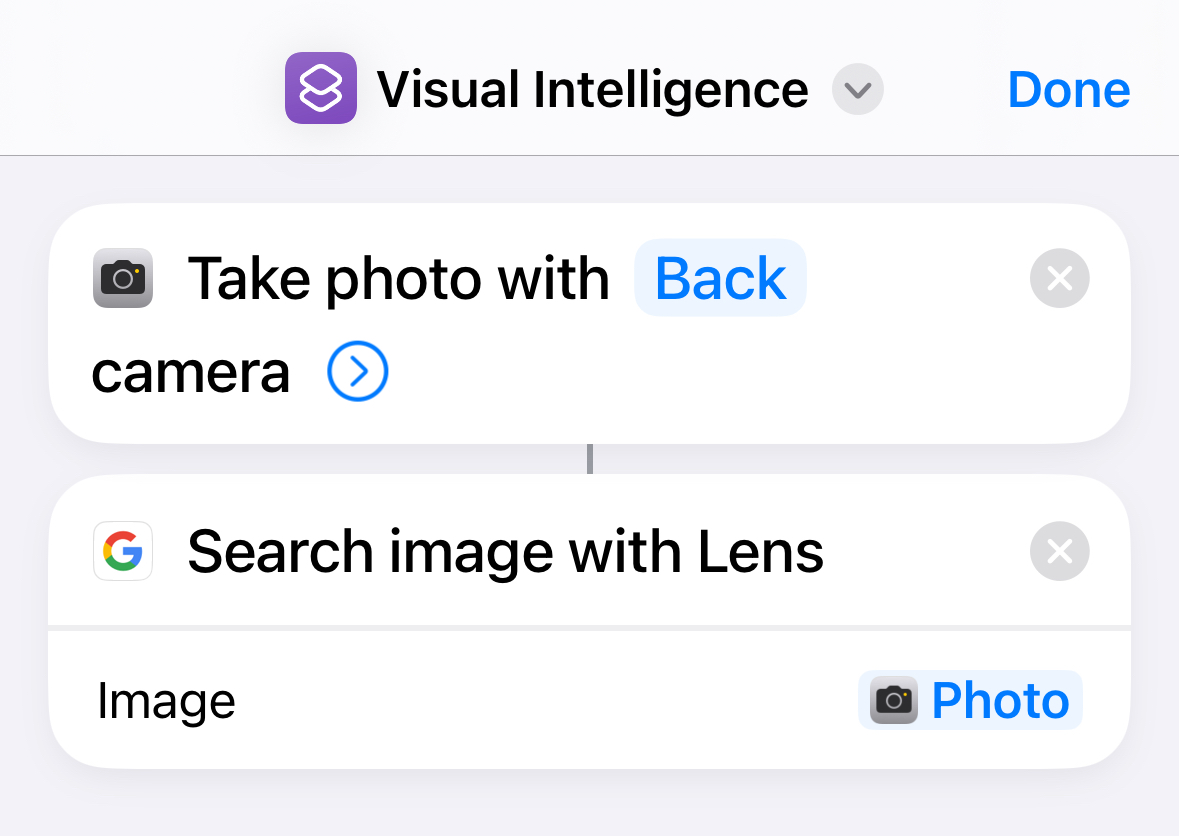

Tap the arrow icon and toggle “Show Camera Preview” off. This makes the shortcut take the photo automatically, without waiting for you to press a shutter button. Tap “Search Actions” again, type “Search Image” and select “Search Image With Google Lens.” This action takes the photo that was just captured and uses it to run a Google Lens search in the Google app.

Tap the name of your shortcut and select “Rename.” Give it a memorable name, tap “Done” at the top right of the screen, and your shortcut will be saved.

Your completed shortcut should contain two actions: “Take Photo,” followed by “Search Image With Google Lens.”

Assign Your Google Lens Shortcut to the Action Button

Since the Camera app will be open when you’re using this shortcut, you won’t be able to launch the shortcut from an app icon, widget, or control. You’ll need to assign it to the Action Button, which will run the shortcut any time you press it, even when the Camera app is open.

Go to Settings > Action Button. Swipe left through the options until you reach the Shortcut option. Tap the dropdown and select your newly created shortcut. Your shortcut will now run whenever the Action Button is pressed.

Use the Google Lens Shortcut on iPhones Without an Action Button

The Action Button is only found on the iPhone 16 models and on the iPhone 15 Pro and iPhone 15 Pro Max. If you have a different model, however, you can still use this shortcut. There is an accessibility feature that lets you perform an action when you tap the back of your iPhone three times. One of the options is to run a shortcut, meaning you can assign your Google Lens shortcut to the back tap gesture.

Go to Settings > Accessibility > Touch. Scroll to the very bottom of the screen and tap “Back Tap.” Select “Triple Tap,” scroll down, and select your newly created shortcut.

Using Your Google Lens Shortcut

Now that you’ve created your shortcut and assigned it to your Action Button or the back tap gesture, you can use it in place of Visual Intelligence.

Open your Camera app and point it at the object that you want to search for. Press the Action Button or triple-tap the back of your iPhone. The shortcut will run, take a photo, and pass it to Google Lens, which will open automatically.

In Google Lens, you should see the photo at the top of the screen, with information beneath. You can use the drag handles to select the part of the image that you want information on. The information you see will depend on the object you have captured.

If your image contains a product such as a piece of furniture or an item of clothing, you’ll find shopping results for similar products in the results. If your image contains a plant or animal, you’ll find information about the species of plant or animal recognized in the image.

When your image contains text in a foreign language, tapping “Translate” will superimpose the translation over the image. If the image includes a problem from a subject such as math, history, or science, tapping “Homework” will bring up information about how to solve the problem.

There’s one quirk I discovered when using this shortcut. When you’ve finished using Google Lens, you should tap the back arrow at the top left of the screen to close out of Google Lens. If you don’t, the next time you use your shortcut, the image shown in Google Lens will be the same as the last time you used it.

For iPhone 15 Pro and iPhone 15 Pro Max users, the lack of Visual Intelligence is disappointing, to say the least. Thankfully, it’s fairly easy to recreate the feature using Shortcuts and the Google app.